Database migration is a complex process that usually involves capacity planning, data migration and cutover strategies, hardware and software procurement, and a lengthy debugging and testing schedule. The common challenge in migrating from a relational database to a non-relational database is selecting the appropriate modeling technique and migration technologies.

This is the second part of a two-part series. In the first post, we discussed data modelling for an internet-scale online transactional system using Amazon DynamoDB, a fully managed, serverless, key-value NoSQL database.

In this post, we discuss and build a methodology to migrate an online application from a relational database management system (RDBMS) to DynamoDB using AWS Database Migration Service (AWS DMS).

Solution overview

In the first post, we used the IMDb dataset normalized across multiple tables in a MySQL database as the source data. IMDb is well-known for its online database of movies, films, TV shows, and other media. In this post, we show you how to move a subset of the IMDb dataset from MySQL to a DynamoDB table using a single table design approach to meet the required access patterns. The solution includes the following steps:

Prepare the IMDb source application for migration.

Create an AWS Identity and Access Management (IAM) role for the AWS DMS jobs to assume.

Create an AWS DMS replication instance.

Create the source and target endpoints.

Configure and run AWS DMS replication tasks.

Verify the access patterns using PartiQL.

Prerequisites

A basic understanding of DynamoDB is required because this post introduces data modelling concepts and a migration strategy for highly transactional, internet-scale applications built on a relational database modeling system (RDBMS). A basic understanding of AWS CloudFormation and DMS is recommended as this post includes a CloudFormation template to build the target environment. An AWS account with the necessary IAM privileges is also required.

Before starting this post, complete the steps in Data modeling for an internet-scale online transactional system using Amazon DynamoDB. If you use your AWS account, you’ll incur a cost of approximately $30 per day for the resources provisioned by the CloudFormation template. To avoid future charges, make sure you delete the CloudFormation template when you’re done.

Prepare the IMDb source application for migration

In the first post, we discussed sample access patterns for an IMDb application. We used those access patterns to define collections of records using composite sort keys and built a single table design for the IMDb dataset.

Now, you’ll prepare the source dataset for migration. This is a heterogeneous migration, as the source application is running on a MySQL database on Amazon Elastic Compute Cloud (Amazon EC2), and the target environment is a key-value table on DynamoDB. You create a denormalized view of the information stored across the IMDb tables title_basics and title_principals. The information stored in the title_akas and title_ratings tables will be migrated one-to-one to the target DynamoDB table. The details of the design strategy are discussed in the first post.

To prepare the IMDb source application

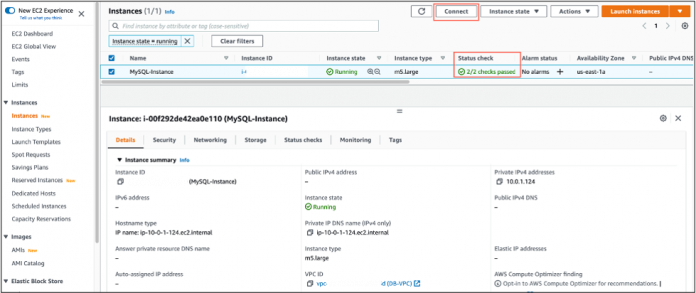

Go to the AWS Management Console for EC2 and choose Instances from the navigation pane. Select the instance named MySQL-Instance and choose Connect.

Note: The MySQL-Instance EC2 instance was created by the CloudFormation template in the first post.

Figure 1: Connect to the source EC2 MySQL instance

Select the Session Manager tab and choose Connect.

Figure 2: Connect to the source EC2 MySQL instance using Session Manager

Log in to MySQL using the username and password entered when creating dynamodb-designlab stack. mysql -u DbMasterUsername -pDbMasterPassword

Figure 3: Log in to MySQL

Run the following code to create a denormalized view of IMDb movies. CREATE VIEW imdbdata.movies AS

SELECT tp.tconst,

tp.ordering,

tp.nconst,

tp.category,

tp.job,

tp.characters,

tb.titleType,

tb.primaryTitle,

tb.originalTitle,

tb.isAdult,

tb.startYear,

tb.endYear,

tb.runtimeMinutes,

tb.genres,

nm.primaryName,

nm.birthYear,

nm.deathYear,

nm.primaryProfession,

tc.directors,

tc.writers

FROM imdbdata.title_principals tp

LEFT JOIN imdbdata.title_basics tb ON tp.tconst = tb.tconst

LEFT JOIN imdbdata.name_basics nm ON tp.nconst = nm.nconst

LEFT JOIN imdbdata.title_crew tc ON tc.tconst = tp.tconst;

To review the count of records for movies, run the following command: select count(*) from imdbdata.movies;

The denormalized view should contain around 866,763 records. Your source database is now ready to be migrated to DynamoDB.

Create an IAM role

The first step is to create an IAM role for the AWS DMS jobs to assume.

To create the IAM role

On the IAM console, choose Roles in the navigation pane.

Choose Create role.

Under AWS Services, choose DMS.

Choose Next: Permissions.

Attach the policy AmazonDMSVPCManagementRole.

Choose Next: Tags.

Choose Next: Review.

For Role name, enter dms-vpc-role.

Choose Create role.

Create an AWS DMS replication instance

Create an AWS DMS replication instance using a CloudFormation template to migrate data from MySQL to DynamoDB.

To create an AWS DMS replication instance

Launch the CloudFormation template.

Choose Next.

Figure 4: Use the CloudFormation stack to create a DMS replication instance

For Stack name, enter dynamodb-migrationlab.

Under Parameters:

Keep the default values of DMSSubnet1CIDR and DMSSubnet2CIDR .

Enter the imdbdata database password under DBMasterPassword.

Copy the DBServerIP and NATGateway ID from the dynamodb-designlab stack’s Outputs tab.

Select DB-VPC from the VpcID dropdown.

Choose Next.

Figure 5: Update the CloudFormation stack parameters

Select I acknowledge that AWS CloudFormation might create IAM resources with custom names to create the IAM resources.

Choose Create stack.

You can monitor the stack progress on the CloudFormation console. The stack takes 10–15 minutes to complete. When the stack shows the status CREATE_COMPLETE, you can confirm that the replication instance is listed on the AWS DMS console. You should see a replication instance with instance class dms.c5.2xlarge and the status Available.

Figure 6: Review the DMS replication instance status

Create the source and target endpoints

After you create the replication instance, you need to create two endpoints: one for the source MySQL environment and one for the target DynamoDB environment.

To create the source endpoint

On the AWS DMS console, choose Endpoints in the navigation pane.

Choose Create endpoint.

Figure 7: Create DMS endpoints

For Endpoint type, select Source endpoint.

For Endpoint identifier, enter mysql-endpoint.

For Source engine, select MySQL.

For Access to endpoint database, select AWS Secrets Manager.

Copy the DBSecret and DMSReplicationRole values from the dynamodb-migrationlab stack’s Outputs tab and enter them in the Secret ID and IAM role boxes.

For SSL mode, select none.

For User name, enter dbuser.

For Password, enter the secret value of BlogDatabasePassword from AWS Secrets Manager.

Figure 8: Provide MySQL endpoint details

In the Test endpoint connection section, for VPC, select the VPC ending with DB-VPC.

Choose Run test to confirm your endpoint configuration is correct.

When the test is complete, choose Create endpoint.

To create the target endpoint

On the AWS DMS console, choose Endpoints in the navigation pane.

Choose Create endpoint.

On the Endpoints page, choose Create endpoint.

For Endpoint type, select Target endpoint.

For Endpoint identifier, enter dynamodb-endpoint.

For Target engine, select Amazon DynamoDB.

For Service access role ARN, enter the role ARN of the dynamodb-access role. This role is created by the CloudFormation template at the beginning of this lab. You can find the ARN in the IAM dashboard.

In the Test endpoint connection section, for VPC, select the VPC ending with DB-VPC.

Choose Run test to confirm your endpoint configuration is correct.

When the test is complete, choose Create endpoint.

Configure and run AWS DMS replication tasks

Create three replication tasks to migrate the denormalized view (movies), ratings table (title_ratings), and regions and languages table (title_akas).

To configure and run replication tasks

On the AWS DMS console, select Database migration tasks in the navigation pane.

Choose Create task.

For Task identifier, enter historical-migration01.

For Replication instance, enter mysqltodynamodb-instance-*.

For Source database endpoint, enter mysql-endpoint.

For Target database endpoint, enter dynamodb-endpoint.

For Migration type, choose Migrate existing data.

Figure 9: Create the database migration task

For Editing mode under Task settings, select Wizard.

For Target table preparation mode, select Do nothing.

Note: If you don’t select this option, the data will be truncated with each subsequent job.

Select Turn on CloudWatch logs to log the AWS DMS tasks in Amazon CloudWatch.

For Editing mode under Table mappings, choose the JSON editor tab.

Copy and paste the following JSON code and then choose Create task. {

“rules”: [

{

“rule-type”: “selection”,

“rule-id”: “1”,

“rule-name”: “1”,

“object-locator”: {

“schema-name”: “imdbdata”,

“table-name”: “movies”,

“table-type”: “view”

},

“rule-action”: “include”,

“filters”: [

{

“filter-type”: “source”,

“column-name”: “tconst”,

“filter-conditions”: [

{

“filter-operator”: “eq”,

“value”: “tt0309377”

},

{

“filter-operator”: “eq”,

“value”: “tt12260846”

},

{

“filter-operator”: “eq”,

“value”: “tt1212419”

},

{

“filter-operator”: “eq”,

“value”: “tt1205489”

},

{

“filter-operator”: “eq”,

“value”: “tt1057500”

},

{

“filter-operator”: “eq”,

“value”: “tt0949815”

},

{

“filter-operator”: “eq”,

“value”: “tt0824747”

},

{

“filter-operator”: “eq”,

“value”: “tt0772168”

},

{

“filter-operator”: “eq”,

“value”: “tt0498380”

},

{

“filter-operator”: “eq”,

“value”: “tt0418689”

},

{

“filter-operator”: “eq”,

“value”: “tt0405159”

},

{

“filter-operator”: “eq”,

“value”: “tt0327056”

},

{

“filter-operator”: “eq”,

“value”: “tt2310814”

},

{

“filter-operator”: “eq”,

“value”: “tt2179136”

},

{

“filter-operator”: “eq”,

“value”: “tt2083383”

},

{

“filter-operator”: “eq”,

“value”: “tt1924245”

},

{

“filter-operator”: “eq”,

“value”: “tt1912421”

},

{

“filter-operator”: “eq”,

“value”: “tt1742044”

},

{

“filter-operator”: “eq”,

“value”: “tt1616195”

},

{

“filter-operator”: “eq”,

“value”: “tt6997426”

},

{

“filter-operator”: “eq”,

“value”: “tt6802308”

},

{

“filter-operator”: “eq”,

“value”: “tt3513548”

},

{

“filter-operator”: “eq”,

“value”: “tt3263904”

},

{

“filter-operator”: “eq”,

“value”: “tt3031654”

},

{

“filter-operator”: “eq”,

“value”: “tt8884452”

}

]

}

]

},

{

“rule-type”: “object-mapping”,

“rule-id”: “2”,

“rule-name”: “2”,

“rule-action”: “map-record-to-record”,

“object-locator”: {

“schema-name”: “imdbdata”,

“table-name”: “movies”,

“table-type”: “view”

},

“target-table-name”: “movies”,

“mapping-parameters”: {

“partition-key-name”: “mpkey”,

“sort-key-name”: “mskey”,

“exclude-columns”: [],

“attribute-mappings”: [

{

“target-attribute-name”: “mpkey”,

“attribute-type”: “scalar”,

“attribute-sub-type”: “string”,

“value”: “${tconst}”

},

{

“target-attribute-name”: “mskey”,

“attribute-type”: “scalar”,

“attribute-sub-type”: “string”,

“value”: “DETL|${category}|${ordering}”

}

]

}

}

]

}

This code includes source-to-target mapping, including any transformation of the records that is performed during migration. The task automatically starts loading the selected movies from the source to the target DynamoDB table.

Note: To reduce the load time, we limited the migration list. The preceding JSON code uses a list of 28 movies. The remaining steps focus on just those movies. However, feel free to load the remaining data if you want to explore the full dataset. To load the full dataset, remove the filter-condition key-values from the following JSON code. We provide more information about the full dataset at the end of this section.

To create the second task

Repeat the previous procedure to create the second task. For this task, enter historical-migration02 as the Task identifier.

Use the following JSON code to migrate the title_akas table from the MySQL IMDb database:

{

“rules”: [

{

“rule-type”: “selection”,

“rule-id”: “1”,

“rule-name”: “1”,

“object-locator”: {

“schema-name”: “imdbdata”,

“table-name”: “title_akas”,

“table-type”: “table”

},

“rule-action”: “include”,

“filters”: [

{

“filter-type”: “source”,

“column-name”: “titleId”,

“filter-conditions”: [

{

“filter-operator”: “eq”,

“value”: “tt0309377”

},

{

“filter-operator”: “eq”,

“value”: “tt12260846”

},

{

“filter-operator”: “eq”,

“value”: “tt1212419”

},

{

“filter-operator”: “eq”,

“value”: “tt1205489”

},

{

“filter-operator”: “eq”,

“value”: “tt1057500”

},

{

“filter-operator”: “eq”,

“value”: “tt0949815”

},

{

“filter-operator”: “eq”,

“value”: “tt0824747”

},

{

“filter-operator”: “eq”,

“value”: “tt0772168”

},

{

“filter-operator”: “eq”,

“value”: “tt0498380”

},

{

“filter-operator”: “eq”,

“value”: “tt0418689”

},

{

“filter-operator”: “eq”,

“value”: “tt0405159”

},

{

“filter-operator”: “eq”,

“value”: “tt0327056”

},

{

“filter-operator”: “eq”,

“value”: “tt2310814”

},

{

“filter-operator”: “eq”,

“value”: “tt2179136”

},

{

“filter-operator”: “eq”,

“value”: “tt2083383”

},

{

“filter-operator”: “eq”,

“value”: “tt1924245”

},

{

“filter-operator”: “eq”,

“value”: “tt1912421”

},

{

“filter-operator”: “eq”,

“value”: “tt1742044”

},

{

“filter-operator”: “eq”,

“value”: “tt1616195”

},

{

“filter-operator”: “eq”,

“value”: “tt6997426”

},

{

“filter-operator”: “eq”,

“value”: “tt6802308”

},

{

“filter-operator”: “eq”,

“value”: “tt3513548”

},

{

“filter-operator”: “eq”,

“value”: “tt3263904”

},

{

“filter-operator”: “eq”,

“value”: “tt3031654”

},

{

“filter-operator”: “eq”,

“value”: “tt8884452”

}

]

}

]

},

{

“rule-type”: “object-mapping”,

“rule-id”: “2”,

“rule-name”: “2”,

“rule-action”: “map-record-to-record”,

“object-locator”: {

“schema-name”: “imdbdata”,

“table-name”: “title_akas”,

“table-type”: “table”

},

“target-table-name”: “movies”,

“mapping-parameters”: {

“partition-key-name”: “mpkey”,

“sort-key-name”: “mskey”,

“exclude-columns”: [],

“attribute-mappings”: [

{

“target-attribute-name”: “mpkey”,

“attribute-type”: “scalar”,

“attribute-sub-type”: “string”,

“value”: “${titleId}”

},

{

“target-attribute-name”: “mskey”,

“attribute-type”: “scalar”,

“attribute-sub-type”: “string”,

“value”: “REGN|${region}”

}

]

}

}

]

}

To create the third task

Repeat the previous procedure to create the third task. For this task, enter historical-migration03 as the Task identifier.

Use the following JSON code to migrate the title_ratings table from the MySQL IMDb database: {

“rules”: [

{

“rule-type”: “selection”,

“rule-id”: “1”,

“rule-name”: “1”,

“object-locator”: {

“schema-name”: “imdbdata”,

“table-name”: “title_ratings”,

“table-type”: “table”

},

“rule-action”: “include”,

“filters”: [

{

“filter-type”: “source”,

“column-name”: “tconst”,

“filter-conditions”: [

{

“filter-operator”: “eq”,

“value”: “tt0309377”

},

{

“filter-operator”: “eq”,

“value”: “tt12260846”

},

{

“filter-operator”: “eq”,

“value”: “tt1212419”

},

{

“filter-operator”: “eq”,

“value”: “tt1205489”

},

{

“filter-operator”: “eq”,

“value”: “tt1057500”

},

{

“filter-operator”: “eq”,

“value”: “tt0949815”

},

{

“filter-operator”: “eq”,

“value”: “tt0824747”

},

{

“filter-operator”: “eq”,

“value”: “tt0772168”

},

{

“filter-operator”: “eq”,

“value”: “tt0498380”

},

{

“filter-operator”: “eq”,

“value”: “tt0418689”

},

{

“filter-operator”: “eq”,

“value”: “tt0405159”

},

{

“filter-operator”: “eq”,

“value”: “tt0327056”

},

{

“filter-operator”: “eq”,

“value”: “tt2310814”

},

{

“filter-operator”: “eq”,

“value”: “tt2179136”

},

{

“filter-operator”: “eq”,

“value”: “tt2083383”

},

{

“filter-operator”: “eq”,

“value”: “tt1924245”

},

{

“filter-operator”: “eq”,

“value”: “tt1912421”

},

{

“filter-operator”: “eq”,

“value”: “tt1742044”

},

{

“filter-operator”: “eq”,

“value”: “tt1616195”

},

{

“filter-operator”: “eq”,

“value”: “tt6997426”

},

{

“filter-operator”: “eq”,

“value”: “tt6802308”

},

{

“filter-operator”: “eq”,

“value”: “tt3513548”

},

{

“filter-operator”: “eq”,

“value”: “tt3263904”

},

{

“filter-operator”: “eq”,

“value”: “tt3031654”

},

{

“filter-operator”: “eq”,

“value”: “tt8884452”

}

]

}

]

},

{

“rule-type”: “object-mapping”,

“rule-id”: “2”,

“rule-name”: “2”,

“rule-action”: “map-record-to-record”,

“object-locator”: {

“schema-name”: “imdbdata”,

“table-name”: “title_ratings”,

“table-type”: “table”

},

“target-table-name”: “movies”,

“mapping-parameters”: {

“partition-key-name”: “mpkey”,

“sort-key-name”: “mskey”,

“exclude-columns”: [],

“attribute-mappings”: [

{

“target-attribute-name”: “mpkey”,

“attribute-type”: “scalar”,

“attribute-sub-type”: “string”,

“value”: “${tconst}”

},

{

“target-attribute-name”: “mskey”,

“attribute-type”: “scalar”,

“attribute-sub-type”: “string”,

“value”: “RTNG”

}

]

}

}

]

}

The replication job for historical migration moves data from the MySQL imdbdata.movies view, title_akas table, and title_ratings table. If you’re loading records based on the preceding code, it will take 5–10 minutes to complete all three jobs. Loading the full dataset will take longer:

The historical-migration01 job migrates over 800,000 records and normally takes 2–3 hours

The historical-migration02 job migrates over 747,000 records and normally takes 2–3 hours

The historical-migration03 job migrates over 79,000 records and normally takes 10–15 minutes

Figure 10: Review three database migration tasks

You can track the status of the data load on the Table statistics tab of the migration task. When the status of all three tasks is Load complete, you can move to the next step.

Verify the access patterns using PartiQL

DynamoDB supports PartiQL—a SQL-compatible query language—to select, insert, update, and delete data in DynamoDB. With PartiQL, you can use the DynamoDB console to interact with DynamoDB tables and run queries. In this exercise, you explore a few access patterns using PartiQL statements.

You use PartiQL scripts to demonstrate the access patterns discussed in the first post. You get the details for each movie using its unique tconst. The denormalized table is created with each row representing a unique combination of movie and crew (tconst and nconst). Because tconst is part of the partition key for the base table, you can use WHERE conditions to select the details.

To demonstrate the access patterns

On the DynamoDB console, choose PartiQL editor in the navigation pane.

Select the movies table that the AWS DMS job created.

Choose the options menu (three dots) and select Scan table.

Figure 11: Explore the movies table using PartiQL editor

Use the following commands in the PartiQL query editor to verify the access patterns:

Find all cast and crew of a movie: SELECT * FROM “movies”

WHERE “mpkey” = ‘tt0309377′ and begins_with(“mskey”,’DETL|’)

Output: Items returned (10)

Find all actors of a movie: SELECT * FROM “movies”

WHERE “mpkey” = ‘tt0309377′ and begins_with(“mskey”,’DETL|actor’)

Output: Items returned (2)

Find details of a movie (such as title, publication year, runtime, and other information): SELECT * FROM “movies”

WHERE “mpkey” = ‘tt0309377′ and begins_with(“mskey”,’DETL|’) and “ordering” = ‘1’

Output: Items returned (1)

Find all published regions, languages, and titles of a movie: SELECT * FROM “movies”

WHERE “mpkey” = ‘tt0309377′ and begins_with(“mskey”,’REGN|’)

Output: Items returned (36)

Find a movie title for a specific region of the movie: SELECT * FROM “movies”

WHERE “mpkey” = ‘tt0309377′ and begins_with(“mskey”,’REGN|NZ’)

Output: Items returned (1)

Find the original title of a movie published across multiple languages and regions: SELECT * FROM “movies”

WHERE “mpkey” = ‘tt0309377′ and begins_with(“mskey”,’REGN|’) and “types” = ‘original’

Output: Items returned (1)

To access data at the crew member level

To access information at the crew member level, we need to create an additional global secondary index (GSI) with a new partition key nconst (unique for crew member). This allows you to query on the new partition key for the GSI instead of scanning the base table.

In the DynamoDB console, choose Tables in the navigation pane.

Select the movies table and then select Create index from the Actions menu.

Figure 12: Create a global secondary index (GSI)

For Partition key, enter nconst.

For Data type, select String.

For Sort key, enter startYear.

For Data type, select String.

For Index name, enter nconst-startYear-index.

Figure 13: Configure a GSI

Choose Create index. Index creation can take 5–10 minutes depending on the number of records in the base table.

When the GSI status column changes from Pending to Available, go back to the PartiQL editor to run the following queries on the GSI:

Find all movies by a specific crew member: SELECT * FROM “movies”.”nconst-startYear-index”

WHERE “nconst” = ‘nm0000142’

Output: Items returned (25)

Find all the movies by a specific actor since the year 2002: SELECT * FROM “movies”.”nconst-startYear-index”

WHERE “nconst” = ‘nm0000142’ and “startYear” >= ‘2002’

ORDER BY “startYear”

Output: Items returned (25)

Congratulations! You have completed and verified the RDBMS migration exercise.

Clean up

When you’ve completed your work, remove any resources you created to avoid incurring additional charges:

IAM role: dms-vpc-role.

Endpoints: dynamodb-endpoint and mysql-endpoint.

AWS DMS tasks: historical-migration-01, historical migration-02 and historical-migration-03.

CloudWatch log groups created by the AWS DMS tasks.

Delete CloudFormation stacks: dynamodb-migrationlab and dynamodb-designlab.

Conclusion

In this post, we showed you how to migrate an application from a self-managed MySQL database on Amazon EC2 to DynamoDB by using AWS DMS to transfer the data into a single-table design schema. We demonstrated using PartiQL queries to access specific patterns from the base table and secondary index. Additionally, we discussed DynamoDB modeling based on access patterns.

We welcome your feedback and suggestions. Let us know what you’re planning to build in the comments section, and how this post has helped you in your AWS journey.

About the Authors

Abhishek Srivastav is a Senior Solutions Architect at AWS. He is passionate about enabling customers to accelerate their cloud adoption. He is an IoT enthusiast and holds deep expertise in NoSQL databases, analytics, and AI/ML technologies. He is passionate about finding answers to complex problems by drawing on his in-depth understanding of these technologies. He has held lead positions for NoSQL Center of Excellence roles at various enterprise customers prior to joining AWS.

Chad Tindel is a DynamoDB Specialist Solutions Architect based out of New York City. He works with large enterprises to evaluate, design, and deploy DynamoDB-based solutions. Prior to joining Amazon, he held similar roles at Red Hat, Cloudera, MongoDB, and Elastic.

Read MoreAWS Database Blog