Amazon DocumentDB (with MongoDB compatibility) is a highly efficient, scalable, and fully managed enterprise document database service designed to handle native JSON workloads. Amazon DocumentDB simplifies storing, querying, and indexing JSON data as a document database.

The Amazon DocumentDB profiler feature is a valuable tool for monitoring the slowest operations on your cluster to help you improve individual query performance and overall cluster performance. When enabled, operations are logged to Amazon CloudWatch Logs, and you can use CloudWatch Logs Insights to analyze, monitor, and archive your Amazon DocumentDB profiling data.

When handling databases with personally identifiable information (PII) like Social Security numbers (SSN), driver’s license information, financial records, or medical data, there’s a possibility of such information being present in the slow query profiler logs. To uphold data security, it is strongly recommended to mask sensitive information in the logs, so it remains inaccessible to unauthorized individuals.

In this post, we discuss how you can mask the PII data using the CloudWatch Logs data protection feature.

Solution overview

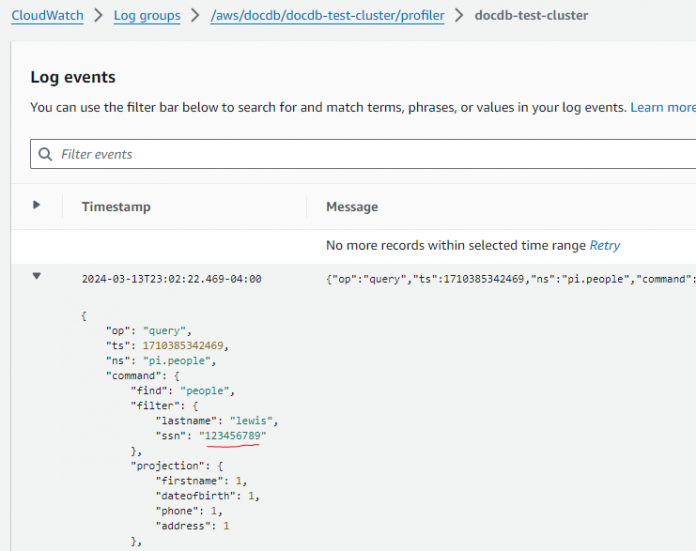

The following screenshot illustrates an operation that includes an SSN, representing a substantial risk because individuals with CloudWatch Logs access could potentially access this sensitive data.

You can detect and protect sensitive data in CloudWatch Logs by implementing log group data protection policies. With these policies, you can effectively audit and mask any sensitive data that appears in log events being ingested by the log groups in your account. By default, when a data protection policy is created, sensitive data that matches the selected data identifiers is automatically masked at all egress points, such as CloudWatch Logs Insights, metric filters, and subscription filters. The ability to view unmasked data is restricted to users with the logs:Unmask AWS Identity and Access Management (IAM) permission.

The following diagram illustrates the flow of the Amazon DocumentDB profiler logs using CloudWatch Logs data protection policies for masking sensitive data.

The solution described in this post includes the following tasks:

Identify the sensitive information within your Amazon DocumentDB cluster.

Create a data protection policy for the Amazon DocumentDB profiler log group.

Validate the sensitive data stored in CloudWatch Logs.

Prerequisites

To follow along with the examples, you can use an existing Amazon DocumentDB cluster or create a new one. Additionally, you should enable profiling on the Amazon DocumentDB cluster to log slow operations.

Identify sensitive information

Collaborate with your organization’s data security team to determine the classification of sensitive information in accordance with your organization’s policies. Typically, sensitive information refers to credentials, financial information, personal health information (PHI), and PII.

The next step is to map the identified sensitive information to the data identifiers that CloudWatch Logs supports.

CloudWatch Logs offers more than 100 managed data identifiers in addition to the option of defining your own custom data identifiers using custom regular expressions, which can then be used in your data protection policy.

In this post, we formulate a data protection policy that incorporates two data identifiers:

Ssn-US – This is a managed data identifier designed for masking SSN information related to the United States within the log group

Ud{6} – You can use this regular expression as a custom data identifier for masking sensitive unique IDs specific to your business

Create a data protection policy for the log group

When creating a data protection policy in CloudWatch Logs you can create it at the account level or for a specific log group. An account-level data protection policy is applied to all existing and future log groups within an account, whereas a log group-level data protection policy applies to a specific log group. Account-level and log group-level log data protection policies work in combination to support data identifiers for specific use cases. For example, you can create an account-level policy to detect and protect log data containing names, credit cards, and addresses. If you have a specific Amazon DocumentDB cluster that handles specific forms of sensitive data you can create a data protection policy specifically for that cluster’s log group.

In the following example, we walk you through creating a log group-level data protection policy. If an account-level data protection is applicable to your use case, refer to Create an account-wide data protection policy.

To create a data protection policy using the AWS Management Console, complete the following steps:

On the CloudWatch console, in the navigation pane, choose Logs, Log groups.

Select the name of your Amazon DocumentDB cluster log group.

On the Actions menu, choose Create data protection policy.

For Managed data identifiers, choose the types of data that you want to audit and mask in this log group (for this post, we use Ssn-US).

Under Custom data identifier configuration, Choose Add custom data identifier.

Enter values for Name (UniqueId) and Regex (Ud{6}).

Select Amazon CloudWatch Logs as the audit destination, and choose an existing log group. Alternatively, you can create a new log group and choose different destination options.

Choose Activate data protection.

In this post, we created a data protection policy targeting a single log group.

Validate the sensitive data

Upon the enforcement of data protection policies, the sensitive data in the CloudWatch logs will be masked, as shown in the following screenshot.

All log entries ingested since enabling data protection will have PII masked in them.

Additionally, you have the option to set up a CloudWatch alarm on the vended CloudWatch metric LogEventsWithFindings to receive proactive notifications whenever PII is detected in your logs. As shown in the following screenshot, the metric is in the AWS/Logs name space and is emitted per log group that has data protection enabled.

While creating the data protection policy, we chose CloudWatch Logs as the audit destination. This audit log provides details on the sensitive data’s position within a log event, the managed data identifier or custom data identifier that was invoked, and the data protection policy that activated the finding. Data protection audit logs can be delivered to CloudWatch Logs, Amazon Simple Storage Service (Amazon S3), or Amazon Data Firehose. Refer to Audit finding reports for more information.

The following screenshot shows the audit report with two findings (Ssn-US and UniqueId) and the exact data positions within a log event.

Clean up

To avoid ongoing costs, clean up the resources you no longer need that you created as part of this solution. You can use the Amazon DocumentDB console or the AWS Command Line Interface (AWS CLI) to delete the Amazon DocumentDB cluster (use delete-db-cluster with the AWS CLI) and CloudWatch log group that you created.

Conclusion

Profiler logs are critical when it comes to resolving Amazon DocumentDB performance issues. However, it’s imperative to mask sensitive data in CloudWatch Logs. Consider incorporating data protection policies into CloudWatch Logs in order to secure your sensitive information.

If you have any inquiries or suggestions, share them in the comments section.

About the Authors

Karimulla Shaik is a Sr. DB Specialty Architect with the Professional Services team at Amazon Web Services. He helps customers migrate traditional on-premises databases to the AWS Cloud. He specializes in database design, architecture, and performance tuning.

David Myers is a Sr. Product Manager – Technical with Amazon CloudWatch. With over 20 years of technical experience observability has been part of his career from the start. David loves improving customers observability experiences at Amazon Web Services.

Read MoreAWS Database Blog