When sensitive personally identifiable information (PII) is involved, large businesses frequently encounter difficulties when transferring production data to non-production or lateral environments for testing or other purposes. Compliance regulations require most firms to mask production PII data when it’s copied for testing and development.

Currently, data that has been copied or migrated to a lower or lateral environment needs to be manually masked before it can be released to end-users. This typically requires a lot of time, effort, and additional manual labor. Additionally, because database subject matter experts must review the documentation to locate PII data and the processes that protect it, masking can be extremely difficult for firms that don’t currently have those experts or that documentation available.

In this post, we present a solution to identify PII data using Amazon Macie, mask it using AWS Database Migration Service (AWS DMS), and migrate it from an Amazon Relational Database Service (Amazon RDS) for Oracle production source database to an RDS for Oracle development target database, before releasing the environment to users. This way, you can save time and make sure that sensitive data is protected.

Solution overview

This post shows how we can identify PII data within a source RDS for Oracle database using Macie managed and custom identifiers, and mask that PII data while it’s being migrated to the target database using AWS DMS.

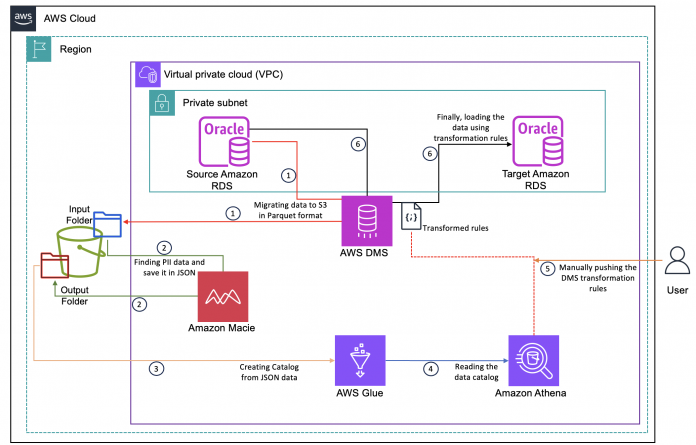

The following diagram presents an overview of the solution architecture.

The process includes the following steps:

Use AWS DMS to extract data from the source RDS for Oracle DB instance and save it in Parquet format in a folder in an Amazon Simple Storage Service (Amazon S3) bucket.

Enable Macie to scan the Parquet files to discover PII data and store the findings in another folder in the S3 bucket.

Use AWS Glue to create a Data Catalog by crawling the JSONL files created in the previous step, which contain metadata describing where PII is stored in the copy of the RDS for Oracle data.

Use Amazon Athena to query the Data Catalog and extract the PII metadata: the schema, table, and column names where PII data is stored in the copy of the RDS for Oracle data. This information is needed to create transformation rules in the next step.

Create transformation rules in AWS DMS for those tables and columns identified in the previous step.

Use AWS DMS to migrate data to the target RDS for Oracle database with PII data masked as per the transformation rules.

Implementing the solution consists of the following steps:

Populate the source RDS for Oracle database with example PII data.

Configure AWS DMS and Amazon S3 components.

Migrate the PII data from the source RDS for Oracle database to Amazon S3 using AWS DMS.

Configure a Macie job and AWS Glue crawler.

Configure Athena to extract PII metadata.

Run an AWS DMS replication task with transformation rules to migrate the source data with PII data masked to the target RDS for Oracle database.

Prerequisites

Before you get started, you must have the following prerequisites:

Familiarity with the following AWS services to follow along with this post:

Amazon Athena

AWS Database Migration Service (AWS DMS)

AWS Glue

AWS Identity and Access Management (IAM)

Amazon Macie

Amazon RDS for Oracle

Amazon Simple Storage Service (Amazon S3)

A source RDS for Oracle DB instance

A target RDS for Oracle DB instance

Permissions to create IAM roles and policies

Populate the source RDS for Oracle database with example PII data

Complete the following steps to populate the source database:

Connect to the source RDS for Oracle database using your preferred SQL client.

Create a database user named AWS_TEST_USER:

Grant necessary privileges to the user AWS_TEST_USER:

Create three tables and insert records:
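To illustrate the kind of data these steps load, the following Python sketch generates synthetic values in the NNN-NN-NNNN SSN format and in the passport format matched by the custom identifier described later, and renders them as Oracle INSERT statements. The CUSTOMER and EMPLOYEE column layouts are hypothetical assumptions, not the tables used for this post; create matching tables as AWS_TEST_USER before running the generated statements.

```python
import random
import re
import string

# Hypothetical table layouts for illustration only. PASSPORT is the third
# EMPLOYEE column, matching the column position discussed in the post-migration steps.
PASSPORT_RE = re.compile(r"C\d{2}[A-Z]{2}\d{4}")
SSN_RE = re.compile(r"\d{3}-\d{2}-\d{4}")


def fake_passport(rng: random.Random) -> str:
    """Synthetic passport number shaped like C53HL5134 (not a real document)."""
    return ("C"
            + "".join(rng.choices(string.digits, k=2))
            + "".join(rng.choices(string.ascii_uppercase, k=2))
            + "".join(rng.choices(string.digits, k=4)))


def fake_ssn(rng: random.Random) -> str:
    """Synthetic value in the NNN-NN-NNNN format (not a real SSN)."""
    return f"{rng.randint(100, 899):03d}-{rng.randint(10, 99):02d}-{rng.randint(1000, 9999):04d}"


def sample_inserts(n: int, seed: int = 0) -> list[str]:
    """Build n INSERTs per table; a fixed seed keeps the output reproducible."""
    rng = random.Random(seed)
    stmts = []
    for i in range(1, n + 1):
        stmts.append("INSERT INTO AWS_TEST_USER.CUSTOMER (CUST_ID, NAME, SSN) "
                     f"VALUES ({i}, 'Customer {i}', '{fake_ssn(rng)}');")
        stmts.append("INSERT INTO AWS_TEST_USER.EMPLOYEE (EMP_ID, NAME, PASSPORT, DEPT_ID) "
                     f"VALUES ({i}, 'Employee {i}', '{fake_passport(rng)}', {10 * i});")
    return stmts


if __name__ == "__main__":
    for stmt in sample_inserts(3):
        print(stmt)
```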

Configure AWS DMS and Amazon S3 components

In this section, we detail the steps to configure your AWS DMS and Amazon S3 components.

Create a replication instance

To create an AWS DMS replication instance, complete the following steps:

On the AWS DMS console, choose Replication instances in the navigation pane.

Choose Create replication instance.

Follow the instructions in Creating a replication instance to create a new replication instance.

Choose the same VPC, Region, and Availability Zone as the RDS for Oracle instances.

The security groups of the source and target databases must allow inbound traffic from the AWS DMS replication instance on the database port so that the replication instance can connect.

Create the S3 bucket with a customer managed key

In this step, you create your S3 bucket with a customer managed AWS Key Management Service (AWS KMS) key.

On the Amazon S3 console, choose Buckets in the navigation pane.

Choose Create bucket.

Follow the instructions in Create your first S3 bucket to create a new bucket.

In the section Default encryption, select Server-side encryption with AWS Key Management Service keys (SSE-KMS) and create a KMS key for your bucket.

Add an S3 bucket policy

Update the bucket policy to allow Macie to store its findings in the S3 bucket after scanning the RDS for Oracle data stored in Amazon S3.

s3:PutObject is allowed so that Macie can write the JSONL files that contain its findings to the S3 bucket.

s3:GetBucketLocation is allowed so that Macie can determine the bucket’s Region before it writes its discovery results there.

Furthermore, to prevent users from reading the Parquet files that contain PII data, either by downloading them or by querying them with S3 Select, s3:GetObject on those files has to be denied for every principal except Macie, which needs to read them to find PII data.

Complete the following steps:

Navigate to your bucket on the Amazon S3 console.

Choose the Permissions tab and edit the bucket policy.

Add the following to your bucket policy:
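The following Python sketch builds a bucket policy with the three statements described above, so you can inspect the structure before pasting it into the console. The bucket name, account ID, and orcldata/ prefix are placeholders, and exempting Macie through its service-linked role ARN (AWSServiceRoleForAmazonMacie) with an aws:PrincipalArn condition is one way to express the exception, not necessarily the exact policy used for this post. Because the Deny applies to every other principal, anything else that must read objects under that prefix is also blocked, so scope the prefix carefully.

```python
import json

# Placeholders: substitute your own values.
BUCKET = "your-pii-bucket"
ACCOUNT_ID = "111122223333"
DATA_PREFIX = "orcldata"  # folder where AWS DMS writes the Parquet files

# Macie service-linked role: the only principal exempted from the GetObject deny.
MACIE_SLR_ARN = (f"arn:aws:iam::{ACCOUNT_ID}:role/aws-service-role/"
                 "macie.amazonaws.com/AWSServiceRoleForAmazonMacie")


def bucket_policy(bucket: str, account_id: str, data_prefix: str, macie_slr_arn: str) -> dict:
    bucket_arn = f"arn:aws:s3:::{bucket}"
    same_account = {"StringEquals": {"aws:SourceAccount": account_id}}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Lets Macie write its discovery results (jsonl.gz files).
                "Sid": "AllowMaciePutObject",
                "Effect": "Allow",
                "Principal": {"Service": "macie.amazonaws.com"},
                "Action": "s3:PutObject",
                "Resource": f"{bucket_arn}/*",
                "Condition": same_account,
            },
            {   # Lets Macie look up the bucket's Region.
                "Sid": "AllowMacieGetBucketLocation",
                "Effect": "Allow",
                "Principal": {"Service": "macie.amazonaws.com"},
                "Action": "s3:GetBucketLocation",
                "Resource": bucket_arn,
                "Condition": same_account,
            },
            {   # Blocks downloads and S3 Select on the Parquet copies for everyone but Macie.
                "Sid": "DenyGetObjectExceptMacie",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"{bucket_arn}/{data_prefix}/*",
                "Condition": {"StringNotLike": {"aws:PrincipalArn": macie_slr_arn}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(bucket_policy(BUCKET, ACCOUNT_ID, DATA_PREFIX, MACIE_SLR_ARN), indent=2))
```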

Create IAM roles and policies for the S3 bucket

In this step, you create IAM roles and policies for the S3 bucket where AWS DMS will store the RDS for Oracle data. First, create the policy.

On the IAM console, choose Policies in the navigation pane.

Choose Create policy.

On the JSON tab, choose Edit and enter the following policy:

Choose Next.

Enter a name for your policy.

Choose Create policy.
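As a point of reference, the AWS DMS documentation lists a small set of S3 permissions that an S3 target needs. The following Python sketch renders a policy along those lines; the bucket name is a placeholder, and you should confirm the action list against the current AWS DMS documentation rather than treat this as the exact policy used for this post.

```python
import json

BUCKET = "your-pii-bucket"  # placeholder


def dms_s3_target_policy(bucket: str) -> dict:
    """Permissions AWS DMS uses to write full-load files to an S3 target."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Object-level actions apply to the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:DeleteObject", "s3:PutObjectTagging"],
                "Resource": f"{bucket_arn}/*",
            },
            {   # ListBucket applies to the bucket itself.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": bucket_arn,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(dms_s3_target_policy(BUCKET), indent=2))
```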

Now you can create an IAM role that trusts AWS DMS and can access the S3 bucket.

On the IAM console, choose Roles in the navigation pane.

Choose Create role.

For Trusted entity type, choose AWS service.

For Use case, choose DMS, then choose Next.

For Add permission, choose the policy you created, then choose Next.

Enter a name for your role, then choose Next.
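Choosing DMS as the use case should give the role a trust policy equivalent to the following, shown here as a Python dict so you can compare it with the role’s Trust relationships tab. This is a sketch of the standard service trust pattern, not a copy of the console output.

```python
import json

# Trust policy that lets the AWS DMS service assume the role.
DMS_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "dms.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(DMS_TRUST_POLICY, indent=2))
```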

Because the S3 objects are encrypted using a customer managed KMS key (SSE-KMS encryption), permissions on this key have to be granted to Macie so that it can decrypt the files with PII data. Therefore, we add a statement to the KMS key policy that allows the Macie service-linked role and the IAM role you created for the S3 bucket to use the key to encrypt and decrypt data.

On the AWS KMS console, navigate to your key.

Switch to Policy view.

Edit the policy and add the following code:
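The following Python sketch shows statements of the kind this step adds; append them to the Statement array of the existing key policy rather than replacing the default statement that gives your account administrative access to the key. The account ID and the DMS role name are hypothetical placeholders, and the second statement, which lets the Macie service encrypt the discovery results it writes, is an assumption based on Macie’s requirements for encrypted discovery results.

```python
import json

ACCOUNT_ID = "111122223333"  # placeholder
MACIE_SLR_ARN = (f"arn:aws:iam::{ACCOUNT_ID}:role/aws-service-role/"
                 "macie.amazonaws.com/AWSServiceRoleForAmazonMacie")
DMS_S3_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/your-dms-s3-role"  # hypothetical role name


def key_policy_statements(principal_arns: list[str], account_id: str) -> list[dict]:
    return [
        {   # Lets the Macie service-linked role and the DMS role use the key.
            "Sid": "AllowMacieAndDmsRoleUseOfKey",
            "Effect": "Allow",
            "Principal": {"AWS": principal_arns},
            "Action": ["kms:Encrypt", "kms:Decrypt", "kms:ReEncrypt*",
                       "kms:GenerateDataKey*", "kms:DescribeKey"],
            "Resource": "*",  # in a key policy, "*" refers to this key
        },
        {   # Lets the Macie service encrypt the discovery results it writes.
            "Sid": "AllowMacieToEncryptDiscoveryResults",
            "Effect": "Allow",
            "Principal": {"Service": "macie.amazonaws.com"},
            "Action": ["kms:GenerateDataKey", "kms:Encrypt"],
            "Resource": "*",
            "Condition": {"StringEquals": {"aws:SourceAccount": account_id}},
        },
    ]


if __name__ == "__main__":
    print(json.dumps(key_policy_statements([MACIE_SLR_ARN, DMS_S3_ROLE_ARN], ACCOUNT_ID), indent=2))
```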

Create AWS DMS endpoints

In this step, you create endpoints for the source and target databases. For more information, refer to Creating source and target endpoints.

First, you create an endpoint that connects to the source database from which PII data has to be identified.

On the AWS DMS console, create an AWS DMS source endpoint with Oracle as the source engine.

Next, you create an endpoint for the target database where masked PII data will be migrated.

Create a target endpoint for the target Oracle database with Oracle as the target engine.

Next, you create a second target endpoint for Amazon S3 as the target. This connects to the S3 bucket as an intermediate location where the files with PII data are placed for Macie to access them.

Create a second target endpoint with Amazon S3 as the target engine.

For the service access role ARN, enter your IAM role ARN.

For the bucket name, enter the bucket you created.

For the bucket folder, enter a folder name (for this post, we use orcldata).

For the endpoint settings, select Use endpoint connection attributes.

In the Extra connection attributes section, enter the following:
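A plausible set of attributes for this setup writes Parquet and encrypts with your customer managed key. The sketch below assembles them in the semicolon-separated name=value form that the field expects. dataFormat, encryptionMode, and serverSideEncryptionKmsKeyId are AWS DMS S3 endpoint settings, but the selection and values here, including the key ARN, are assumptions to adapt.

```python
# Placeholder ARN; use the customer managed key you created for the bucket.
KMS_KEY_ARN = "arn:aws:kms:us-east-1:111122223333:key/your-key-id"


def s3_extra_connection_attributes(settings: dict[str, str]) -> str:
    """Render AWS DMS extra connection attributes as 'name=value;' pairs."""
    return "".join(f"{name}={value};" for name, value in settings.items())


S3_TARGET_SETTINGS = {
    "dataFormat": "parquet",                      # write Parquet instead of the default CSV
    "encryptionMode": "SSE_KMS",                  # encrypt objects with a KMS key
    "serverSideEncryptionKmsKeyId": KMS_KEY_ARN,  # the customer managed key
}

if __name__ == "__main__":
    print(s3_extra_connection_attributes(S3_TARGET_SETTINGS))
```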

Migrate the PII data from the source RDS for Oracle database to Amazon S3 using AWS DMS

To create your database migration task, complete the following steps:

On the AWS DMS console, choose Database migration tasks in the navigation pane.

Choose Create task.

For Task identifier, enter rds-to-s3.

For Replication instance, enter your replication instance.

For Source database endpoint, enter your source Oracle endpoint.

For Target database endpoint, enter your target Amazon S3 endpoint.

For Migration type, choose Migrate existing data.

Select Turn on CloudWatch Logs if monitoring is needed.

Set Target load and Target apply to Debug.

Other detailed debug and log options can be enabled; these are the basic recommended ones that can help troubleshoot most common issues.

For Table mappings, add a new selection rule.

For Schema, enter a schema.

For Source name, enter the source schema in your environment (for this post, we use AWS_TEST_USER).

For Source table name, enter %.

For Action, choose Include.

Choose Create task.
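If you prefer the JSON editor to the guided table mapping UI, the selection rule above corresponds to table mappings like the following, generated here with a small Python helper. The helper name is illustrative; the JSON keys follow the AWS DMS table-mapping format, where % is the wildcard for all tables.

```python
import json


def selection_rule(schema: str, table: str = "%", rule_id: int = 1) -> dict:
    """One AWS DMS selection rule that includes matching tables."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": str(rule_id),
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": "include",
    }


TABLE_MAPPINGS = {"rules": [selection_rule("AWS_TEST_USER")]}

if __name__ == "__main__":
    print(json.dumps(TABLE_MAPPINGS, indent=2))
```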

Configure a Macie job and AWS Glue crawler

In this section, we detail the steps to create and run a Macie job and AWS Glue crawler.

Enable Macie to analyze the Parquet files generated by AWS DMS

We enable Macie and set the S3 bucket details so that a jsonl.gz file is generated that has PII findings. We create a Data Catalog in AWS Glue from this file later.

On the Macie console, choose Discovery results in the navigation pane.

Choose your bucket (optionally, you can create a new bucket if you want to save the PII findings in another location).

For Encryption settings, choose your KMS key.

Choose Save.

Selecting the KMS key is important; without it, you can encounter a PutClassificationExportConfiguration error that prevents Macie from storing the discovery results.

Configure a Macie job to scan the RDS for Oracle Parquet files in Amazon S3

In this step, PII identification is based on managed identifiers in Macie. If managed identifiers aren’t sufficient to detect the PII values in your environment, you can create custom identifiers to match them. In this post, we create a custom identifier for passport numbers.

Complete the following steps to create your Macie job:

On the Macie console, choose Jobs in the navigation pane.

Choose Create job.

For Select S3 bucket, enter your bucket, then choose Next.

For Refine the scope, choose One-time job, then choose Next.

Select your data identifiers:

Choose Managed and then Next to use managed identifiers (recommended).

Choose Custom to open a new page to create a custom identifier.

Choose Create.

For Name, enter Passport.

For Regular expression, enter [C]\d{2}[A-Z]{2}\d{4} so that sample values like C53HL5134 match.

For Keywords, enter Passport, PASSPORT.

Choose Submit.

Return to the job configuration page and choose Passport, then choose Next.

For Job name, enter a name (for this post, we use rds-pii-macie-findings).

Choose Next.

Review the details, then choose Submit.
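Before you rely on the custom identifier, it can help to sanity-check the passport pattern locally. The following Python sketch tests whole values against the same expression. Python’s re module is not the engine Macie uses, and Macie searches for matches inside text and also weighs keyword proximity, so treat this only as a quick check of the pattern itself.

```python
import re

# The custom identifier's pattern, written as a raw string so the backslashes survive.
PASSPORT_PATTERN = re.compile(r"[C]\d{2}[A-Z]{2}\d{4}")


def looks_like_passport(value: str) -> bool:
    """True if the entire value matches the custom identifier's pattern."""
    return PASSPORT_PATTERN.fullmatch(value) is not None


if __name__ == "__main__":
    for sample in ("C53HL5134", "C5HL5134", "X53HL5134"):
        print(sample, looks_like_passport(sample))
```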

Create an AWS Glue crawler

We create an AWS Glue crawler to create a Data Catalog for the JSONL file created by Macie.

On the AWS Glue console, expand Data Catalog in the navigation pane and choose Crawlers.

Choose Create crawler.

For Crawler name, enter a name (for this post, we use dms-macie-pii).

Choose Next.

For Data sources and classifiers, choose Add a data source.

For Data source, choose S3.

For S3 path, enter s3://<YOUR BUCKET NAME>/AWSLogs.

Choose Add S3 data source.

On the Configure security settings page, in the IAM role section, choose Create new IAM role.

Enter a name for the role (for this post, AWSGlueServiceRole-dms-macie-crawler) and choose Next.

In the Output configuration, Target database section, choose Add database to open a new window.

For Database name, enter the name of your database.

Return to the crawler configuration page, refresh the database names, and choose your database.

For Table name prefix, enter dms-mask-.

Choose Next, then choose Create crawler.

Add an inline policy to the crawler IAM role

Now you add an inline policy to the IAM role you created (AWSGlueServiceRole-dms-macie-crawler). The policy allows the crawler to decrypt objects in the S3 bucket, which is encrypted with SSE-KMS.

On the IAM console, navigate to the AWSGlueServiceRole-dms-macie-crawler role.

In the Permissions section, choose Add permissions.

Choose Create inline policy.

On the JSON tab, enter the following contents:

Choose Review policy.

For Policy name, enter a name (for this post, we use dms-glue-inline-policy).

Choose Create policy.
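An inline policy of roughly this shape grants the decrypt permission. The sketch below assumes that the S3 read permissions come from the policy AWS Glue attached when it created the role, so only kms:Decrypt on your key is added; the key ARN is a placeholder.

```python
import json

KMS_KEY_ARN = "arn:aws:kms:us-east-1:111122223333:key/your-key-id"  # placeholder

# Inline policy letting the crawler role decrypt the SSE-KMS objects it reads.
GLUE_CRAWLER_INLINE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["kms:Decrypt"],
            "Resource": KMS_KEY_ARN,
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(GLUE_CRAWLER_INLINE_POLICY, indent=2))
```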

Run the AWS Glue crawler

To run the crawler, complete the following steps:

On the AWS Glue console, choose Crawlers in the navigation pane.

Select the crawler you created (dms-macie-pii).

Choose Run crawler.

Configure Athena to extract PII metadata

Now you’re ready to use Athena to query the PII metadata.

On the Athena console, launch the query editor.

For Data source, choose AwsDataCatalog.

For Database, choose your database.

Before you run your first query, set up the query result location in Amazon S3 as s3://<YOUR BUCKET NAME>/orcldata.

Select Encrypt query results.

For Encryption type, choose SSE_KMS.

Choose your KMS key.

Save your settings.

Create a view to get the subset of columns needed to identify PII:

Query the view that you created from the query editor:

The following screenshot shows our output.
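The view’s job is to turn each Macie discovery result into (schema_nm, table_nm, column_nm) rows. The Python sketch below performs the same extraction on individual records so you can follow the logic. It assumes a simplified subset of the Macie discovery result format (resourcesAffected.s3Object.key plus detections with jsonPath occurrences) and the folder/schema/table/file key layout that AWS DMS uses for full-load files; check both assumptions against the JSONL files in your own bucket.

```python
import re


def _record(key, sensitive=(), custom=()):
    """Build a minimal discovery result with an assumed, simplified shape."""
    def detections(paths):
        return [{"occurrences": {"records": [{"recordIndex": 0, "jsonPath": p}]}} for p in paths]
    result = {"sensitiveData": [{"detections": detections(sensitive)}],
              "customDataIdentifiers": {"detections": detections(custom)}}
    return {"resourcesAffected": {"s3Object": {"key": key}},
            "classificationDetails": {"result": result}}


# Hypothetical samples: SSN found by a managed identifier, PASSPORT by the custom one.
SAMPLE_RESULTS = [
    _record("orcldata/AWS_TEST_USER/CUSTOMER/LOAD00000001.parquet", sensitive=["$.SSN"]),
    _record("orcldata/AWS_TEST_USER/EMPLOYEE/LOAD00000001.parquet", custom=["$['PASSPORT']"]),
]

# Accepts both $.COLUMN and $['COLUMN'] forms, since the exact form is an assumption.
_JSON_PATH = re.compile(r"^\$(?:\.(\w+)|\['([^']+)'\])$")


def column_from_json_path(path: str) -> str:
    m = _JSON_PATH.match(path)
    if m is None:
        raise ValueError(f"unrecognized jsonPath: {path}")
    return m.group(1) or m.group(2)


def pii_locations(result: dict) -> set[tuple[str, str, str]]:
    """(schema_nm, table_nm, column_nm) tuples found in one discovery result."""
    key = result["resourcesAffected"]["s3Object"]["key"]
    _prefix, schema, table, _file = key.rsplit("/", 3)
    details = result["classificationDetails"]["result"]
    detections = [d for item in details.get("sensitiveData", []) for d in item.get("detections", [])]
    detections += details.get("customDataIdentifiers", {}).get("detections", [])
    return {(schema, table, column_from_json_path(rec["jsonPath"]))
            for det in detections
            for rec in det.get("occurrences", {}).get("records", [])}


if __name__ == "__main__":
    rows = set().union(*(pii_locations(r) for r in SAMPLE_RESULTS))
    for row in sorted(rows):
        print(row)
```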

Run an AWS DMS replication task to migrate the source data with PII data masked to the target RDS for Oracle database

In this step, we create the final transformation rules in an AWS DMS replication task, using the PII metadata returned by the Athena query.

On the AWS DMS console, create a new database migration task.

For Task identifier, enter dms-pii-masked-migration.

For Replication instance, enter your replication instance.

Select your source and target Oracle endpoints.

For Migration type, choose Migrate existing data.

Under Task settings, leave the default values or modify them as needed.

Under Table mappings, choose JSON editor and enter the following contents:

Create the task and run it.

In the preceding code, the values passed are derived from the output of the Athena query we ran earlier:

schema-name – We use AWS_TEST_USER, which is the value of the schema_nm column.

table-name – We use CUSTOMER and EMPLOYEE, which are values of the table_nm column.

rule-action – We use remove-column for PASSPORT and SSN, so the original columns that contain unmasked PII data are not created in the target tables.

rule-action – We use add-column for DMS_PASSPORT and DMS_SSN to add columns that hold the masked data.

The expression in the transformation rules is an example to show the logic of how PII data can be masked in the added column. You should use your own expression logic as needed.

Verify that the task completes without errors, and check that the tables in the target database contain masked PII data.

Post-migration steps

Now you can complete some post-migration steps.

First, in the target RDS for Oracle database, the masked PII data is stored in new columns with the DMS_ prefix. Rename those columns to remove the prefix.

In the SQL client, issue the following DDL commands:
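The rename statements follow Oracle’s ALTER TABLE … RENAME COLUMN syntax. The following Python sketch generates one statement per DMS_-prefixed column; the table-to-column map is a hypothetical input that you would build from the Athena output or from ALL_TAB_COLUMNS in the target.

```python
def rename_statements(table_columns: dict[str, list[str]], prefix: str = "DMS_") -> list[str]:
    """Build Oracle RENAME COLUMN statements that strip the DMS_ prefix."""
    stmts = []
    for table, columns in table_columns.items():
        for column in columns:
            if column.upper().startswith(prefix):
                new_name = column[len(prefix):]
                stmts.append(f"ALTER TABLE {table} RENAME COLUMN {column} TO {new_name};")
    return stmts


if __name__ == "__main__":
    # Hypothetical input: target table and its current column names.
    for stmt in rename_statements({"AWS_TEST_USER.CUSTOMER": ["CUST_ID", "NAME", "DMS_SSN"]}):
        print(stmt)
```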

If column order matters for your application, use the invisible option to hide columns and add the columns you need, then revert the invisible columns to visible again, so the column order is preserved.

Alternatively, for a column whose position matters, such as the Passport column in the Employee table, skip the rename and reorder the columns with the following steps.

In the source table, the Passport column has a Column_id of 3, but in the target the masked column is added as the last column.

To move it back to position 3, make every column from position 3 onward invisible in the target table:

Add the passport column to the employee table and verify the column order:

Now the Column_id for the Passport column is 3 when you query all_tab_cols.

Once verified, run the following command to revert the invisible columns back to visible:

Update the employee table to copy data from the dms_passport column to the newly created passport column, which is in the correct order:

Remove the dms_passport column after copying to the passport column:
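The whole reordering sequence can be generated from the target column list. The sketch below relies on Oracle’s documented behavior that a column made visible again is placed last in the column order, so making the tail columns visible one at a time, in their original order, restores that order after the new column. The table, columns, and VARCHAR2 length are hypothetical assumptions.

```python
def invisible_reorder_plan(table: str, target_columns: list[str], new_column: str,
                           data_type: str, position: int, masked_column: str) -> list[str]:
    """Oracle statements that put new_column at `position` (1-based), then drop masked_column."""
    tail = target_columns[position - 1:]  # every column from the desired position onward
    plan = [f"ALTER TABLE {table} MODIFY ({col} INVISIBLE);" for col in tail]
    plan.append(f"ALTER TABLE {table} ADD ({new_column} {data_type});")
    # A column made visible again is placed last, so re-enable the tail in its original order.
    plan += [f"ALTER TABLE {table} MODIFY ({col} VISIBLE);" for col in tail]
    plan.append(f"UPDATE {table} SET {new_column} = {masked_column};")
    plan.append("COMMIT;")
    plan.append(f"ALTER TABLE {table} DROP COLUMN {masked_column};")
    return plan


def expected_column_order(target_columns: list[str], new_column: str,
                          position: int, masked_column: str) -> list[str]:
    """Model of the final visible column order after the plan runs."""
    order = target_columns[:position - 1] + [new_column] + target_columns[position - 1:]
    return [col for col in order if col != masked_column]


if __name__ == "__main__":
    cols = ["EMP_ID", "NAME", "DEPT_ID", "DMS_PASSPORT"]  # hypothetical target layout
    for stmt in invisible_reorder_plan("AWS_TEST_USER.EMPLOYEE", cols, "PASSPORT",
                                       "VARCHAR2(9)", 3, "DMS_PASSPORT"):
        print(stmt)
```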

Clean up

To clean up, delete the database migration tasks so that their transformation rules can’t be used to reverse engineer which columns contain PII data:

On the AWS DMS console, choose Database migration tasks in the navigation pane.

Select the task that contains the PII masking transformation rules (dms-pii-masked-migration).

On the Action menu, choose Delete.

Select the task rds-to-s3.

On the Action menu, choose Delete.

You should also delete the other resources you created for this post to avoid incurring further charges.

Delete the AWS DMS replication instance.

Delete the S3 bucket.

Delete the Athena database.

Delete the AWS Glue crawler.

Delete the Macie job.

Delete the associated IAM roles and policies.

Delete the KMS key.

Conclusion

In this post, we showed how to set up Macie, an AWS Glue crawler, and Athena to discover PII data, and how to set up AWS DMS transformation rules to mask that data while migrating it from a source RDS for Oracle database to a target RDS for Oracle database.

For more information on masking data in PostgreSQL, see Data masking using AWS DMS.

As always, AWS welcomes feedback. If you have any comments or questions on this post, please share them in the comments section.

About the Authors

Chithra Krishnamurthy is a Database Consultant, with the Professional Services team working at Amazon Web Services. She works with enterprise customers to help them achieve their business outcomes by providing technical guidance for database migrations to AWS, guidance on setting up bi-directional replication.

Mansi Suratwala is a Sr Database Consultant working at Amazon Web Services. She works closely with companies from different domains to provide scalable and secure database solutions in the AWS Cloud. She is passionate about collaborating with customers to achieve their cloud adoption goals.
