An Amazon Aurora PostgreSQL-Compatible Edition cluster consists of a primary/writer node and up to 15 reader nodes in a Region. You may offload read-only workloads to reader nodes to scale-out reads. Long-running queries on reader nodes that are accessing tables with high transactional activities on the primary node can cause conflicts, and lead to undesirable effects. In this post, we go over the potential conflicts and share best practices for managing long-running read queries on Aurora PostgreSQL.

Storage differences between Amazon RDS and Aurora PostgreSQL

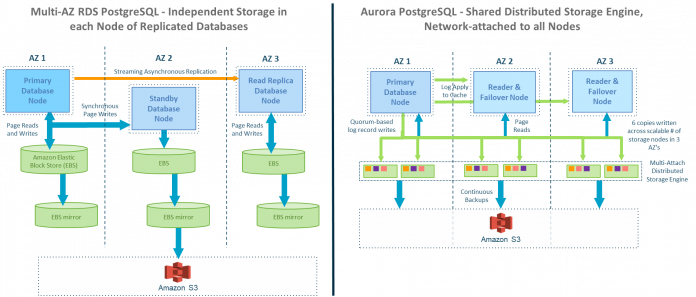

In Amazon Relational Database Service (Amazon RDS) for PostgreSQL, each read replica instance has its own independent copy of the database, kept in sync by logical replication from the primary node. In contrast, in Aurora PostgreSQL, there is a single shared database managed by a distributed storage engine redundantly stored on six storage nodes to which each database compute instance attaches. The following graphic shows the fundamental differences in these two approaches.

The shared storage model of Aurora has many advantages, such as minimal time to spin up new reader nodes because the new instance simply attaches to existing storage nodes over the network. However, with this approach, Aurora PostgreSQL must keep the different reader nodes’ cached copies of the same page more closely in sync than Amazon RDS for PostgreSQL because there is only one physical copy of the database shared by all the nodes of the Aurora PostgreSQL cluster.

Impacts of long-running queries on Aurora reader nodes

Normal DML operations (such as insert, update, and delete) on an Aurora primary node generate transaction log records that, in addition to being sent to all the Aurora storage nodes, are also sent to all the Aurora reader nodes in the cluster in case any reader node has a copy of the same page in its own buffer cache. In the preceding figure, these are the cache update data flows from the primary node to the reader nodes. If a reader node doesn’t have the page buffer in memory, those log records are ignored; otherwise they’re processed in transaction sequence once they’re committed. As queries are processed, either on the primary or reader nodes, sometimes they need to hold an internal lock on a page buffer in memory to ensure the page buffer contents don’t change underneath it. Generally, these are known as buffer pins and although they’re non-transactional, they can affect concurrency.

PostgreSQL tends to be smart about handling conflicts on the primary node itself because it understands all queries running on it at that moment. For example, the PostgreSQL engine on the primary node could choose to skip autovacuum of a page buffer if a different read session is holding a buffer pin on that page buffer. However, the primary node is oblivious to the workload and buffer cache contents in the reader nodes, so a common challenge in Aurora reader nodes is the potential for conflicts that block applying log records affecting the page buffers in memory in a timely manner.

When conflict occurs, if the conflicting query is short, it’s desirable to allow it to complete by delayed applying log records on the reader nodes. However, in the case of a long-running query on the reader node, allowing the transaction log apply process to wait indefinitely will cause the reader node to increasingly lag farther behind the primary node. To balance between running queries and high availability, Aurora PostgreSQL takes the following actions when there is conflict on the reader node that blocks applying the log records:

Delay application of the log records on the reader node to allow the conflicting query on the reader node to finish, then apply the log records. The amount of time to delay is determined by the configured max_standby_streaming_delay parameter value. Aurora PostgreSQL allows a configuration of max_standby_streaming_delay up to 30 seconds. If you’re running mixed OLTP and OLAP workloads, the OLAP queries may run for longer than 30 seconds. One of the objectives of this post is to share best practices to manage long-running queries and mitigate conflicts in this scenario.

Cancel the conflicting query on the reader node if the conflict lasts longer than max_standby_streaming_delay (maximum 30 seconds). This is different from Amazon RDS or self-managed PostgreSQL. With Amazon RDS or self-managed PostgreSQL, the instance has its own physical copy of the database, and you’re able to set the parameter max_standby_streaming_delay as high as you want to prevent query cancellation.

If the conflicting query can’t cancel in time, or if multiple long-running queries are causing the replication lag to go beyond 60 seconds, Aurora restarts the reader node to ensure it’s not lagging far behind the primary node.

Common causes of conflict in an Aurora reader node

There are several common scenarios that can cause conflicts when applying log records on the reader node:

Snapshot conflicts — A conflict can occur if the vacuum process removes dead tuples on the primary node, and a long-running query on the reader node started before the vacuum and has an older snapshot.

Lock conflicts – PostgreSQL’s MVCC protocol eliminates the need to lock entire page buffers in memory during normal DML commands. However, there are also heavier operations that affect a physical page, such as DDL (alter and drop table), vacuum full, lock table, truncating page from a relation, and some autovacuum operations where the operation must acquire an Access Exclusive lock. This lock then generates a XLOG_STANDBY_LOCK log record, which causes reader nodes to acquire the Access Exclusive lock in order to invalidate the memory structure in its buffer cache.

Buffer pin conflicts — This conflict occurs when you have an exclusive page lock on the primary node while a long-running read query on the reader node has the page pinned.

You can follow the steps in this post to reproduce a lock conflict scenario.

Prerequisites

Before you get started, make sure you have the following prerequisites:

An AWS account.

An Aurora PostgreSQL cluster with one reader node. The sample in this post deploys both the primary node and the reader node on db.r6g.large running PostgreSQL v13.6.

A client environment with connectivity set up to your Aurora cluster. The sample in this post uses psql as the client tool.

We assume that you’re familiar with working with Aurora PostgreSQL and a PostgreSQL client environment.

Generate test data

Open a new psql connection to the primary node and run the following code to create sample test data:

The following screenshot shows our output.

Verify max_standby_streaming_delay settings on the reader node

Open a new psql connection to the reader node and check the max_standby_streaming_delay parameter setting. The default value may change in different Aurora PostgreSQL versions. Aurora PostgreSQL now allows you to change the default value using a customized parameter groups. You can set max_standby_streaming_delay to any value between 1,000–30,000 milliseconds on both the cluster parameter groups or database instance parameter groups levels. If both places are set, the configuration setting on the cluster level takes precedence.

Check the parameter with the following code:

In this test, max_standby_streaming_delay is set to 14,000 milliseconds (14 seconds).

Run a long-running query

On an existing connection to your Aurora reader node, enable timing and run a long-running query and capture the query runtime when there is no replication conflict:

On my environment with a db.r6.large DB instance, the long-running query ran for about 72 seconds.

Rerun the long-running query with a conflict

On your existing connection to the Aurora reader node, rerun the long-running query from the previous step. This time, we manually generate a conflicting DDL operation from the Aurora primary node.

Observe the conflict on the primary node

Switch to the session with the existing connection to your Aurora primary node while the long-running query is running on the reader node. Run the following DROP INDEX statement and observe what happens to the query on the reader node:

Review the error message on the reader node

Switch to the Aurora reader session. If you wait a little bit, you should see an error message similar to the following screenshot.

The DROP INDEX operation on the primary node affected physical pages on Aurora shared storage. When the transaction log records are applied to the reader node, it causes the reader node to acquire the Access Exclusive lock in order to invalidate the memory structure in its buffer cache. However, the existing long-running query has the memory pages pinned. The DROP INDEX operation resulted in a lock conflict. In this scenario, Aurora PostgreSQL waited 14 seconds (the max_standby_streaming_delay value configured) for the query to finish before cancelling the query.

Considerations and best practices

Now that you have a better understanding of the Aurora shared storage architecture and scenarios that cause conflicts, you can take measurements to manage long-running queries to avoid or proactively handle a conflicting situation. The followings are options to consider:

Query tuning – Tune your query on the reader node to finish before reaching max_standby_streaming_delay. On an OLTP system, queries are typically expected to return within a short time. An unintended long-running query can be caused by a missing index. If you don’t expect your query to run over 30 seconds, you should set the database parameter for statement_timeout and log_min_error_statement to ERROR or lower on the reader nodes to proactively cancel any runaway queries and log the query statement in the PostgreSQL log for later tuning.

Implement table partitioning – If the size of table is large, you can consider table partitioning to reduce query runtime. Partition query pruning can reduce the amount of data Aurora PostgreSQL needs to process for a query and enhance query performance.

Implement manual vacuum – If you’re running mixed OLTP and OLAP workloads that include long-running queries on the reader nodes, consider turning off autovacuum and setting up operation methods (such as pg_cron) to manually run vacuum at appropriate times of the day or over the weekend when workload volume allows.

Implement retry logics in the application – The occurrence of a replication conflict depends on the nature of the operations that happened on the primary node and what’s running on the reader nodes. A query being canceled may very well be able to run successfully if it’s submitted again. You should implement retry logics in the application to catch a query being canceled and resubmit the query. You should also have application logics in place to reconnect and resubmit the query in the case of a connection failure.

Redirect the query to the Aurora primary node – To avoid long-running read queries being forcibly canceled or causing an unexpected Aurora reader restart, you can redirect the query to the primary node. When a query runs and holds resources for a long time on the primary node, other sessions may result in waiting. But the query will finish unless you take actions to cancel the query.

Offload the query to an Aurora fast clone – You can consider creating a clone of the database using the Aurora fast database cloning feature. Long-running queries that don’t need access to real-time production data may be offloaded to an Aurora clone (for example, a query used to generate quarterly reports).

Split the query into multiple smaller queries – Breaking down a complex long-running query into multiple shorter and smaller queries allows you to better utilize the horizontal scalability of the application layer and distribute queries to multiple application instances.

Keep your environment up to date on the latest minor version release – AWS makes continuous efforts on enhancements and bug fixes to mitigate conflicts. For example, Aurora PostgreSQL v12.9 and v13.5 (and higher) added an optimization to minimize the need to cancel some queries running longer than max_standby_streaming_delay under buffer pin conflicts. For more information on the enhancement, refer to Amazon Aurora PostgreSQL updates. It’s highly recommended that you keep your Aurora environment up to date on the latest minor releases.

It’s important to continue running heavy maintenance operations like vacuum or DDL at times when workload volumes are lighter or more tolerant of query cancellations.

Conclusion

In this post, we discussed common scenarios of long-running read queries on Aurora reader nodes that may cause conflicts with DML and DDL operations on the Aurora primary node. We shared best practices to proactively manage long-running read queries and mitigate impacts of potential conflicts.

We welcome your feedback. Share your experience and any questions in the comments.

Since you’re reading this post, you may also be interested in the following:

About the authors

Wanda He is a Sr. Database Specialist Solutions Architect at Amazon Web Services. She works with customers on design, deploy, and optimize relational databases on AWS.

Avi Jain is a Sr. Product Manager with the Technical Benchmarking team at Amazon. He is part of team that evaluates, measures, and drives improvements to the end-to-end customer experience (CX) of using Amazon technical products and services.

Read MoreAWS Database Blog