Generative AI, a category of artificial intelligence algorithms that can generate new content based on existing data, has been hailed as the next frontier for industries ranging from tech to financial services, e-commerce, and healthcare, and we’re already seeing it adopted in many ways. ChatGPT is one example: using it doesn’t require a background in machine learning (ML), and virtually anyone who can ask questions in plain English can use it. The driving force behind generative AI chatbots is their foundation models. These models are large neural networks trained on vast amounts of unstructured, unlabeled data in various formats, including text and audio. This broad training lets foundation models be applied across a wide range of tasks. In this post, we cover two use cases in the context of pgvector and Amazon Aurora PostgreSQL-Compatible Edition:

First, we build an AI-powered application that lets you ask questions based on content in your PDF files in natural language. We upload PDF files to the application and then type in questions in simple English. Our AI-powered application will process questions and return answers based on the content of the PDF files.

Next, we make use of the native integration between pgvector and Amazon Aurora Machine Learning. Machine learning integration with Aurora currently supports Amazon Comprehend and Amazon SageMaker. Aurora makes direct and secure calls to SageMaker and Comprehend that don’t go through the application layer. Aurora machine learning is based on the familiar SQL programming language, so you don’t need to build custom integrations, move data around or learn separate tools.

Overview of pgvector and large language models (LLMs)

pgvector is an open-source extension for PostgreSQL that adds the ability to store and search over ML-generated vector embeddings. pgvector provides different capabilities that let you identify both exact and approximate nearest neighbors. It’s designed to work seamlessly with other PostgreSQL features, including indexing and querying. Using ChatGPT and other LLM tooling often requires storing the output of these systems, i.e., vector embeddings, in a permanent storage system for retrieval at a later time. In the previous post, Building AI-powered search in PostgreSQL using Amazon SageMaker and pgvector, we provided an overview of storing vector embeddings in PostgreSQL using pgvector, and a sample implementation for an online retail store.

Large language models (LLMs) have become increasingly powerful and capable. You can use these models for a variety of tasks, including text generation, chatbots, text summarization, image generation, and natural language processing tasks such as question answering. Some of the benefits offered by LLMs include the ability to create more capable and compelling conversational AI experiences for customer service applications or bots, and improved employee productivity through more intuitive and accurate responses. LangChain is a Python framework that makes it simpler to build applications with LLMs. It provides a standard interface for accessing LLMs, and it supports a variety of them, including OpenAI’s GPT series, Hugging Face, Google’s BERT, and Facebook’s RoBERTa.

Although LLMs offer many benefits for natural language processing (NLP) tasks, they may not always provide factual or precisely relevant responses for specific domain use cases. This limitation can be especially crucial for enterprise customers with vast enterprise data who require highly precise and domain-specific answers. Organizations seeking to improve LLM performance for their own domains should look into effectively integrating their enterprise domain information into the LLM.

Solution overview

Use case 1: Build and deploy an AI-powered chatbot application

Prerequisites

Aurora PostgreSQL v15.3 with pgvector support.

Install Python with the required dependencies (in this post, we use Python v3.9). You can deploy this solution locally on your laptop or via Amazon SageMaker Notebooks.

This solution incurs costs. Refer to Amazon Aurora Pricing to learn more.

How it works

We use a combination of pgvector, open-source foundation models (flan-t5-xxl for text generation and all-mpnet-base-v2 for embeddings), LangChain packages for interfacing with these components, and Streamlit for building the bot front end. LangChain’s ConversationBufferMemory and ConversationalRetrievalChain add memory to the chatbot: they let it store and recall previous conversations and contextual information, resulting in more personalized and engaging interactions.

NLP question answering is a difficult task, but recent developments in transformer-based models have made it much more approachable. Hugging Face’s Transformers library offers pre-trained models and tools that simplify question-answering tasks. Streamlit is a widely used Python library for creating interactive web applications, and LangChain is a toolkit that helps retrieve relevant context from documents for a given question.

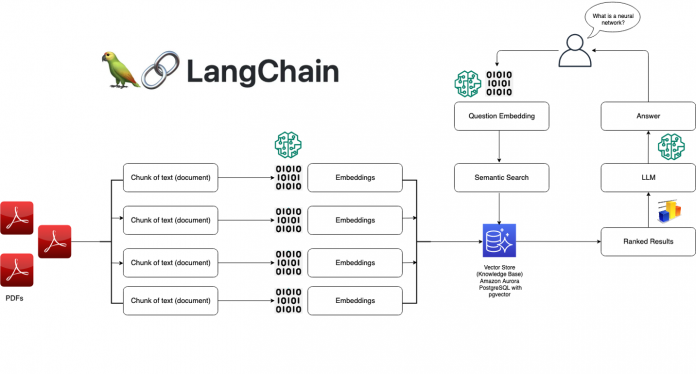

The following diagram illustrates how it works:

The application follows these steps to provide responses to your questions:

The app reads one or more PDF documents and extracts their text content.

The extracted text is divided into smaller chunks that can be processed effectively.

The application utilizes a language model to generate vector representations (embeddings) of the text chunks and stores the embeddings in pgvector (vector store).

When you ask a question, the app compares it with the text chunks and identifies the most semantically similar ones.

The selected chunks are passed to the language model, which generates a response based on the relevant content of the PDFs.
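The steps above can be sketched end to end in plain Python. This is only an illustration of the flow, not the application's code: the bag-of-words `embed` function stands in for a real embedding model such as all-mpnet-base-v2, the in-memory `store` list stands in for pgvector, and every function name here is hypothetical.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, chunk_size=80):
    """Group words into chunks of at most roughly chunk_size characters."""
    chunks, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) > chunk_size and current:
            chunks.append(current)
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def embed(text):
    """Toy embedding: word counts. A real app would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_chunks(question, store, k=2):
    """Return the k stored chunks most similar to the question."""
    query = embed(question)
    ranked = sorted(store, key=lambda item: cosine_similarity(query, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question, chunks):
    """Combine the retrieved chunks and the question into one LLM prompt."""
    context = "\n".join(chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Stand-in for text extracted from a PDF.
document = (
    "Aurora replicates data across three Availability Zones. "
    "The storage layer is distributed and self healing. "
    "Streamlit renders the chat interface in the browser."
)
# Stand-in for the vector store: (chunk, embedding) pairs.
store = [(chunk, embed(chunk)) for chunk in split_into_chunks(document, chunk_size=60)]

question = "How does Aurora replicate data?"
context = top_k_chunks(question, store, k=1)
prompt = build_prompt(question, context)  # this string would be sent to the LLM
```

In the real application, the embedding model, the vector store, and the LLM call are each provided by LangChain components; the control flow stays the same.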

Environment setup

To get started, we need to install the required dependencies. You can use pip to install the necessary packages either on your local laptop or via SageMaker Jupyter notebook:

Create the pgvector extension on your Aurora PostgreSQL database (DB) cluster:
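A minimal sketch of this step from Python, assuming a DB-API 2.0 driver such as psycopg2 and a database user with permission to create extensions. The helper name `enable_pgvector` is hypothetical; the SQL statement itself is the standard way to enable pgvector, whose extension name is `vector`.

```python
CREATE_EXTENSION_SQL = "CREATE EXTENSION IF NOT EXISTS vector;"


def enable_pgvector(connection):
    """Enable pgvector using any DB-API 2.0 connection (for example, psycopg2)."""
    cursor = connection.cursor()
    try:
        cursor.execute(CREATE_EXTENSION_SQL)
    finally:
        cursor.close()
    # Commit only if the statement succeeded.
    connection.commit()


# Example usage (assumes psycopg2 is installed and the placeholders are filled in):
# import psycopg2
# conn = psycopg2.connect(host="<cluster endpoint>", dbname="<database>",
#                         user="<user>", password="<password>")
# enable_pgvector(conn)
```

You can equally run the same statement directly in psql or another PostgreSQL client.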

Note: When you use HuggingFaceEmbeddings, you may get the following error: StatementError: (builtins.ValueError) expected 1536 dimensions, not 768.

This is a known issue (see pgvector does not work with HuggingFaceEmbeddings #2219). You can use the following workaround:

Update ADA_TOKEN_COUNT = 768 in local (site-packages) langchain/langchain/vectorstores/pgvector.py on line 22.

Update the vector type column for langchain_pg_embedding table on your Aurora PostgreSQL DB cluster:
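The column change can be expressed as a single `ALTER TABLE` statement. The sketch below only builds that statement; it assumes the vector column in `langchain_pg_embedding` is named `embedding`, and the helper name is hypothetical. The value 768 matches the dimension reported in the error message above.

```python
def alter_embedding_dimension_sql(dimensions, table="langchain_pg_embedding", column="embedding"):
    """Build the ALTER TABLE statement that resizes the pgvector column.

    The table and column names are interpolated directly, so pass only
    trusted identifiers, never user input.
    """
    if not isinstance(dimensions, int) or dimensions <= 0:
        raise ValueError("dimensions must be a positive integer")
    return f"ALTER TABLE {table} ALTER COLUMN {column} TYPE vector({dimensions});"


statement = alter_embedding_dimension_sql(768)
print(statement)
```

Run the resulting statement in psql or your preferred client before loading embeddings, so that the column size and the embedding size agree.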

Import libraries

Let’s begin by importing the necessary libraries:

To load the pre-trained question answering model and embeddings, we import HuggingFaceHub and HuggingFaceInstructEmbeddings from LangChain utilities. For storing vector embeddings, we import pgvector as a vector store, which has a direct integration with LangChain. Note that we’re using two additional important libraries – ConversationBufferMemory, which allows for storing of messages, and ConversationalRetrievalChain, which allows you to set up a chain to chat over documents with chat history for follow-up questions. We use RecursiveCharacterTextSplitter to split documents recursively by different characters, as we’ll see in our sample app. For the purpose of creating the web application, we additionally import Streamlit. For the demo, we use a popular whitepaper as the source PDF document – Amazon Aurora: Design considerations for high throughput cloud-native relational databases.

Create the Streamlit app

We start by creating the Streamlit app and setting the header:

This line sets the header of our web application to “GenAI Q&A with pgvector and Amazon Aurora PostgreSQL.”

Next, we take our PDFs as input and split them into chunks using RecursiveCharacterTextSplitter:
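To illustrate the idea behind recursive splitting, here is a simplified sketch in plain Python. It is not LangChain's implementation: it omits chunk overlap, and the function name and default separators are assumptions made for this example. The approach is to split on the coarsest separator first (paragraphs) and fall back to finer ones (lines, words, characters) only for pieces that are still too long.

```python
def recursive_split(text, chunk_size=100, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    separator, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(separator):
        candidate = piece if not current else current + separator + piece
        if len(candidate) <= chunk_size:
            # Keep merging pieces while they fit in one chunk.
            current = candidate
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: retry with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
    if current:
        chunks.append(current)
    return chunks


sample = (
    "Aurora separates compute from storage.\n\n"
    "The storage layer replicates data six ways across three Availability Zones.\n\n"
    "Short tail."
)
chunks = recursive_split(sample, chunk_size=50)
for chunk in chunks:
    print(len(chunk), repr(chunk))
```

Short paragraphs stay intact, while the long middle paragraph is broken at word boundaries, which tends to keep related sentences together in one chunk.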

Load the embeddings and LLM into Aurora PostgreSQL DB cluster

Next, we generate the question answering embeddings using the sentence transformer sentence-transformers/all-mpnet-base-v2 and load them into the Aurora PostgreSQL DB cluster, which serves as our vector database, using the pgvector vector store in LangChain:

Note that pgvector needs the connection string to the database. We load it from the environment variables.
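As a sketch of this step, the following helper assembles a SQLAlchemy-style PostgreSQL connection string from environment variables. The variable names (`PGVECTOR_HOST`, `PGVECTOR_USER`, and so on) and the function name are assumptions for illustration; use whatever names your own environment defines.

```python
import os
from urllib.parse import quote_plus


def connection_string_from_env(env=os.environ):
    """Build a connection URL such as postgresql+psycopg2://user:pass@host:5432/db."""
    driver = env.get("PGVECTOR_DRIVER", "psycopg2")
    host = env["PGVECTOR_HOST"]
    port = int(env.get("PGVECTOR_PORT", "5432"))
    database = env["PGVECTOR_DATABASE"]
    # URL-encode credentials so characters such as "@" do not break the URL.
    user = quote_plus(env["PGVECTOR_USER"])
    password = quote_plus(env["PGVECTOR_PASSWORD"])
    return f"postgresql+{driver}://{user}:{password}@{host}:{port}/{database}"


# Example with placeholder values instead of real environment variables.
example_env = {
    "PGVECTOR_HOST": "example-cluster.local",
    "PGVECTOR_DATABASE": "postgres",
    "PGVECTOR_USER": "app",
    "PGVECTOR_PASSWORD": "s3cret@1",
}
print(connection_string_from_env(example_env))
```

Keeping credentials in environment variables rather than in source code avoids committing secrets to version control.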

Next, we load the LLM. We use Google’s flan-t5-xxl LLM from the HuggingFaceHub repository:

By default, LLMs are stateless, meaning that each incoming query is processed independently of other interactions. The only thing that exists for a stateless agent is the current input. There are many applications where remembering previous interactions is very important, such as chatbots. Conversational memory allows us to do that. ConversationBufferMemory and ConversationalRetrievalChain allow us to provide the user’s question and conversation history to generate the chatbot’s response while allowing room for follow-up questions:
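To make the idea of conversational memory concrete, here is a minimal sketch in plain Python. It is not LangChain's implementation, and the class and function names are hypothetical. The key point is that the model itself stays stateless: the application keeps the history and sends it along with every new question.

```python
class BufferMemory:
    """Keep every (question, answer) turn of the conversation in order."""

    def __init__(self):
        self.turns = []

    def save(self, question, answer):
        self.turns.append((question, answer))

    def as_text(self):
        return "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in self.turns)


def build_prompt(memory, context_chunks, question):
    """Combine chat history, retrieved context, and the new question."""
    history = memory.as_text() or "(no previous turns)"
    context = "\n".join(context_chunks)
    return (
        f"Chat history:\n{history}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def ask(llm, memory, context_chunks, question):
    """Call the stateless llm with the full prompt, then record the turn."""
    answer = llm(build_prompt(memory, context_chunks, question))
    memory.save(question, answer)
    return answer


def fake_llm(prompt):
    """Stand-in for a real model: reports how many earlier turns it was shown."""
    return f"I was shown {prompt.count('Human:')} earlier turn(s)."


memory = BufferMemory()
chunks = ["Aurora is a relational database service."]
first = ask(fake_llm, memory, chunks, "What is Amazon Aurora?")
second = ask(fake_llm, memory, chunks, "How does it replicate data?")
print(first)
print(second)
```

Because the second call includes the first turn in its prompt, a real model could resolve "it" in the follow-up question to Amazon Aurora.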

User input and question answering

Now, we handle the user input and perform the question answering process:

Demonstration

Streamlit is an open-source Python library that makes it simple to create and share beautiful, custom web apps for machine learning and data science. In just a few minutes you can build and deploy powerful data apps. Let’s explore a demonstration of the app.

To install Streamlit:

The starting UI looks like the following screenshot:

Follow the instructions in the sidebar:

Browse and upload PDF files.

You can upload multiple PDFs because we set the parameter accept_multiple_files=True for the st.file_uploader function.

Once you’ve uploaded the files, click Process.

You should see a page like the following:

Start asking your questions in the search bar. For example, let’s start with a simple question – “What is Amazon Aurora?”

The following response is generated:

Let’s ask a different question, a bit more complex – “How does replication work in Amazon Aurora?”

The following response is generated:

Note that the conversation history is preserved by ConversationBufferMemory. In addition, ConversationalRetrievalChain lets you set up a chain that uses the chat history to handle follow-up questions.

We can also upload multiple files and ask questions. Suppose we upload another file, “Constitution of the United States”, and ask our app – “What is the first amendment about?”

The following is the response:

For full implementation details about the code sample used in the post, see the GitHub repo.

Use case 2: pgvector and Aurora Machine Learning for sentiment analysis

Prerequisites

Aurora PostgreSQL v15.3 with pgvector support.

Install Python with the required dependencies (in this post, we use Python v3.9).

Jupyter (available as an extension on VS Code or through Amazon SageMaker Notebooks).

AWS CLI installed and configured for use. For instructions, see Set up the AWS CLI.

This solution incurs costs. Refer to Amazon Aurora Pricing to learn more.

Amazon Comprehend is a natural language processing (NLP) service that uses machine learning to find insights and relationships in text. No prior machine learning experience is required. This example walks you through the process of integrating Aurora with the Comprehend Sentiment Analysis API and making sentiment analysis inferences via SQL commands. For our example, we use a sample dataset of fictitious hotel reviews. We use Hugging Face’s sentence-transformers/all-mpnet-base-v2 model to generate document embeddings and store the vector embeddings in our Aurora PostgreSQL DB cluster with pgvector.

Use Amazon Comprehend with Amazon Aurora

Create an IAM role to allow Aurora to interface with Comprehend.

Associate the IAM role with the Aurora DB cluster.

Install the aws_ml and vector extensions. For installing the aws_ml extension, see Installing the Aurora machine learning extension.

Set up the required environment variables.

Run through each cell in the given notebook pgvector_with_langchain_auroraml.ipynb.

Run Comprehend inferences from Aurora.

1. Create an IAM role to allow Aurora to interface with Comprehend

Run the following commands to create and attach an inline policy to the IAM role we just created:
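As a sketch of what this role needs, the following Python builds the two JSON documents involved: a trust policy that lets the RDS service assume the role, and an inline permissions policy that allows the Comprehend sentiment calls. The function names are hypothetical, and you may want to scope the policy more tightly for your environment.

```python
import json


def trust_policy_document():
    """Trust policy: allow the RDS service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "rds.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def comprehend_policy_document():
    """Inline policy: allow the sentiment detection calls made by Aurora."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "comprehend:DetectSentiment",
                    "comprehend:BatchDetectSentiment",
                ],
                "Resource": "*",
            }
        ],
    }


# Serialize the documents, for example to save them as files for the AWS CLI.
trust_json = json.dumps(trust_policy_document(), indent=2)
policy_json = json.dumps(comprehend_policy_document(), indent=2)
print(policy_json)
```

The serialized documents can then be supplied to the IAM commands that create the role and attach the inline policy.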

2. Associate the IAM role with the Aurora DB cluster

Associate the role with the DB cluster by using the following command:

Run the following command and wait until the output shows the status as available before moving on to the next step:

Validate that the IAM role is active by running the following command:

You should see an output similar to the following:

For more information or instructions on how to perform steps 1 and 2 using the AWS Console, see Setting up Aurora PostgreSQL to use Amazon Comprehend.

3. Connect to psql or your favorite PostgreSQL client and install the extensions

4. Set up the required environment variables

We use VS Code for this example. Create a .env file with the following environment variables:

5. Run through each cell in the given notebook pgvector_with_langchain_auroraml.ipynb

Import libraries

Begin by importing the necessary libraries:

Use LangChain’s CSVLoader class to load the CSV file, and generate embeddings using Hugging Face sentence transformers:

If the run is successful, you should see an output as follows:

Split the text using LangChain’s CharacterTextSplitter function and generate chunks:

If the run is successful, you should see an output as follows:

Create a table in Aurora PostgreSQL with the name of the collection. Make sure that the collection name is unique and the user has the permissions to create a table:

Run a similarity search using the similarity_search_with_score function from pgvector.

If the run is successful, you should see an output as follows:

Use the Cosine function to refine the results to the best possible match:

If the run is successful, you should see an output as follows:

Similarly, you can test results with other distance strategies such as Euclidean or Max Inner Product. Euclidean distance depends on a vector’s magnitude whereas cosine similarity depends on the angle between the vectors. The angle measure is more resilient to variations of occurrence counts between terms that are semantically similar, whereas the magnitude of vectors is influenced by occurrence counts and heterogeneity of word neighborhood. Hence for similarity searches or semantic similarity in text, the cosine distance gives a more accurate measure.
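The difference between these measures can be seen with a small worked example in plain Python. The vectors below are made up for illustration: `long_review` points in the same direction as `short_review` but has three times the magnitude, as if the same terms simply occurred more often. For reference, pgvector exposes these measures through the `<->` (Euclidean distance), `<=>` (cosine distance), and `<#>` (negative inner product) operators.

```python
import math


def inner_product(a, b):
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a, b):
    """1 minus the cosine of the angle between a and b."""
    norm_a = math.sqrt(inner_product(a, a))
    norm_b = math.sqrt(inner_product(b, b))
    return 1.0 - inner_product(a, b) / (norm_a * norm_b)


short_review = [1.0, 2.0, 0.0]
long_review = [3.0, 6.0, 0.0]   # same direction, three times the magnitude
other_review = [2.0, 0.0, 1.0]  # different direction, similar magnitude

for name, vector in (("long_review", long_review), ("other_review", other_review)):
    print(
        name,
        "euclidean:", round(euclidean_distance(short_review, vector), 3),
        "cosine:", round(cosine_distance(short_review, vector), 3),
    )
```

Euclidean distance ranks `other_review` as the closer match because of its similar magnitude, whereas cosine distance ranks `long_review` as closer because it ignores magnitude and compares direction only.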

6. Run Comprehend inferences from Aurora

Aurora has a built-in Comprehend function which can call the Comprehend service. It passes the inputs of the aws_comprehend.detect_sentiment function, in this case the values of the document column in the langchain_pg_embedding table, to the Comprehend service and retrieves sentiment analysis results (note that the document column is trimmed due to the long free form nature of reviews):
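A sketch of such a query, wrapped in a small Python helper. It assumes a DB-API 2.0 driver that uses `%s` placeholders (as psycopg2 does), English-language reviews (`'en'`), and trimming the document to 100 characters for display; the helper name and the `LIMIT` clause are additions for this example.

```python
SENTIMENT_SQL = """
SELECT LEFT(document, 100) AS document, s.sentiment, s.confidence
FROM langchain_pg_embedding,
     aws_comprehend.detect_sentiment(document, 'en') AS s
LIMIT %s;
"""


def fetch_sentiments(connection, limit=10):
    """Return (document, sentiment, confidence) rows inferred by Comprehend."""
    cursor = connection.cursor()
    try:
        cursor.execute(SENTIMENT_SQL, (limit,))
        return cursor.fetchall()
    finally:
        cursor.close()


# Example usage (assumes psycopg2 and an Aurora cluster set up as in steps 1-3):
# import psycopg2
# conn = psycopg2.connect(host="<cluster endpoint>", dbname="<database>",
#                         user="<user>", password="<password>")
# for document, sentiment, confidence in fetch_sentiments(conn, limit=5):
#     print(sentiment, confidence, document)
```

Each row passed to the function results in a call to Amazon Comprehend, so limiting the number of rows while experimenting helps control cost.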

You should see results as shown in the screenshot below. Observe the sentiment and confidence columns. Together, these two columns provide the inferred sentiment for the text in the document column and the confidence score of that inference.

For full implementation details about the code sample used in the post, see the GitHub repo.

Conclusion

In this post, we explored how to build an interactive chatbot app for question answering using LangChain and Streamlit, and how to use pgvector together with Aurora Machine Learning for sentiment analysis. With this sample chatbot app, users can input their questions and receive answers based on the provided information, making it a useful tool for information retrieval and knowledge exploration, especially in large enterprises with a massive knowledge corpus. Generating embeddings with LangChain and storing them in Amazon Aurora PostgreSQL-Compatible Edition with the open-source pgvector extension provides a powerful and efficient solution for many use cases, such as sentiment analysis, fraud detection, and product recommendations.

Now Available

The pgvector extension is available on Aurora PostgreSQL 15.3, 14.8, 13.11, 12.15 and higher in AWS Regions including the AWS GovCloud (US) Regions.

To learn more about this launch, you can also tune in to AWS On Air at 12:00pm PT on 7/21 for a live demo with our team! You can watch on Twitch or LinkedIn.

If you have questions or suggestions, leave a comment.

About the Author

Shayon Sanyal is a Principal Database Specialist Solutions Architect and a Subject Matter Expert for Amazon’s flagship relational database, Amazon Aurora. He has over 15 years of experience managing relational databases and analytics workloads. Shayon’s relentless dedication to customer success allows him to help customers design scalable, secure and robust cloud native architectures. Shayon also helps service teams with design and delivery of pioneering features.

Read more: AWS Database Blog