IPFS (InterPlanetary File System) is a storage protocol that allows content to be stored in a decentralized manner on the global IPFS network, without relying on any central entity. IPFS-enabled applications can benefit from a high level of redundancy and low latency, because content can be replicated, looked up, and downloaded from nearby IPFS nodes. The IPFS network is open and censorship-resistant. These properties make IPFS a medium of choice for blockchain applications to store information such as NFT-related data.

This series of posts provides a comprehensive introduction to running IPFS on AWS:

In Part 1, we introduce the IPFS concepts and test IPFS features on an Amazon Elastic Compute Cloud (Amazon EC2) instance

In Part 2, we propose a reference architecture and build an IPFS cluster on Amazon Elastic Kubernetes Service (Amazon EKS)

In Part 3, we deploy an NFT smart contract using Amazon Managed Blockchain and illustrate the use of IPFS as a decentralized store for NFT-related data

Solution overview

All the services used in this post are included in the AWS Free Tier.

There are multiple valid alternatives for deploying IPFS and Web3 applications on AWS. This series of posts is therefore not intended to be the single source of truth; it is an opinionated way of deploying such an architecture that favors Ubuntu, Kubernetes, Remix, and MetaMask as building blocks and Web3 tools. Alternatives based on Amazon Linux, Amazon Elastic Container Service (Amazon ECS), and Hardhat, for example, would be just as valid as the ones presented here.

In the following sections, we demonstrate how to deploy the IPFS server, install the AWS Command Line Interface (AWS CLI), install IPFS, add and view files, and archive data in Amazon Simple Storage Service (Amazon S3).

Prerequisites

The only prerequisite to follow the steps in this series is to have a working AWS account. We do not assume any prior knowledge of blockchain, nor any coding experience. However, we expect you to know how to navigate the AWS Management Console and to have a basic knowledge of Kubernetes.

Deploy the IPFS server

To understand the different components the IPFS daemon is made of, we start by installing IPFS on a virtual machine.

We will use the EC2 Instance Connect method to connect to the virtual machine, so we first need to look up the IP address range assigned to this service in your Region. Choose the CloudShell icon in the navigation pane and enter the following command:

Take note of the returned IP address range (18.202.216.48/29 in the case of the Ireland region, for example).
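The same lookup can be sketched in Python. This is a minimal illustration, assuming the ranges file published at https://ip-ranges.amazonaws.com/ip-ranges.json lists EC2 Instance Connect under the service name EC2_INSTANCE_CONNECT; the function names and the sample data are hypothetical, and the two entries marked as made up use documentation-only address ranges.

```python
import json
import urllib.request

IP_RANGES_URL = "https://ip-ranges.amazonaws.com/ip-ranges.json"


def instance_connect_prefixes(ip_ranges: dict, region: str) -> list[str]:
    """Return the IPv4 CIDR blocks listed for EC2 Instance Connect in a Region."""
    return [
        entry["ip_prefix"]
        for entry in ip_ranges.get("prefixes", [])
        if entry.get("service") == "EC2_INSTANCE_CONNECT"
        and entry.get("region") == region
    ]


def fetch_ip_ranges(url: str = IP_RANGES_URL) -> dict:
    """Download and decode the published ranges file (needs network access)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


# Trimmed sample in the same shape as the published file, for illustration only.
SAMPLE = {
    "prefixes": [
        {"ip_prefix": "18.202.216.48/29", "region": "eu-west-1",
         "service": "EC2_INSTANCE_CONNECT"},
        # The two entries below are made up to exercise the filter.
        {"ip_prefix": "203.0.113.0/24", "region": "eu-west-1", "service": "EC2"},
        {"ip_prefix": "198.51.100.0/29", "region": "us-east-1",
         "service": "EC2_INSTANCE_CONNECT"},
    ]
}

print(instance_connect_prefixes(SAMPLE, "eu-west-1"))  # ['18.202.216.48/29']
```

To query live data instead of the sample, pass the result of fetch_ip_ranges() as the first argument.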

On the Amazon EC2 console, choose Instances in the navigation pane.

Choose Launch an instance.

For Name, enter ipfs-server.

For Amazon Machine Image (AMI), choose Ubuntu Server 22.04 LTS (HVM), SSD Volume Type.

For Architecture, choose 64-bit (x86).

For Instance type, choose t2.micro.

For Key pair name, choose Proceed without a key pair.

For Allow SSH traffic from, choose Custom and enter the IP address range previously looked up.

Keep all other default parameters.

After you create the instance, on the Instances page, select it and choose Connect.

Connect to it using the EC2 Instance Connect method.

You will see a prompt similar to the following in a new tab:

Unless instructed otherwise, all commands in the following sections should be run from this session.

Install the AWS CLI

If you do not already have access keys for your user, create access keys (refer to Get your access keys).

Install the AWS CLI, which will be useful later on:

Refer to Setting up the AWS CLI if you’re not familiar with the AWS CLI configuration process.

Install IPFS

For instructions to install IPFS on Linux, refer to Install IPFS Kubo.

When done, initialize IPFS:

Then start the IPFS daemon (you can safely ignore the leveldb error):

The last output line should indicate Daemon is ready.

Add files to IPFS

Let’s start by adding a file to IPFS. Open a new EC2 Instance Connect prompt on the EC2 instance and enter the following commands:

We see in that output that IPFS computed a “CID” (a label used to point to material in IPFS) for this file:

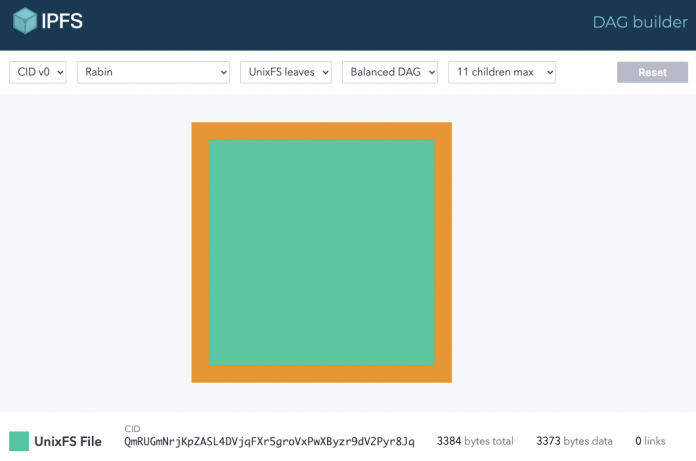

Let’s walk through what just happened. When dealing with files, IPFS splits the content of the file into chunks and links them in a Directed Acyclic Graph (DAG). The “CID” represents the root of this Merkle DAG. To illustrate this process, you can download the file locally on your computer, and upload it to dag.ipfs.io (make sure you choose the same parameters as shown in the following screenshot).

You can check that the generated CID matches the CID generated by the previous command. If you change the parameters in the DAG builder webpage, you will get a different CID. The same file can be represented by many different DAGs in IPFS. However, a given DAG (or CID) always represents exactly one piece of content. CIDs are used to look up content on the IPFS network; this is why we refer to “content addressing”, as opposed to location (IP) addressing.
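The relationship between content, chunking parameters, and the resulting identifier can be illustrated with a toy model in Python. This is a deliberately simplified sketch and not the actual CID algorithm: real IPFS nodes encode chunks and links in their own formats and may build deeper trees, whereas the hypothetical toy_root function below hashes each chunk with SHA-256 and then hashes the ordered list of chunk hashes once. The default chunk size of 256 KiB is an assumption.

```python
import hashlib


def chunk(data: bytes, size: int) -> list[bytes]:
    """Split data into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)] or [b""]


def toy_root(data: bytes, chunk_size: int = 256 * 1024) -> str:
    """Hash every chunk, then hash the ordered chunk hashes into a single root."""
    leaf_hashes = [hashlib.sha256(piece).digest() for piece in chunk(data, chunk_size)]
    return hashlib.sha256(b"".join(leaf_hashes)).hexdigest()


content = b"hello ipfs " * 1000  # 11,000 bytes of sample content

# Same content and same parameters always give the same root.
print(toy_root(content, 1024) == toy_root(content, 1024))  # True
# A different chunk size builds a different graph, hence a different root.
print(toy_root(content, 1024) == toy_root(content, 4096))  # False
# Any change to the content changes the root as well.
print(toy_root(content, 1024) == toy_root(content + b"!", 1024))  # False
```

The three comparisons mirror the points above: one file can map to several roots depending on the parameters, while a given root corresponds to exactly one piece of content.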

View files using an IPFS gateway

IPFS can be viewed as a public storage network. After we add the aws.png file to our IPFS server, anyone requesting the CID QmRUGmNrjKpZASL4DVjqFXr5groVxPwXByzr9dV2Pyr8Jq can access the corresponding DAG and rebuild the aws.png file. For more information about how IPFS deals with files, refer to the video How IPFS Deals With Files. The ipfs.io public gateway will handle the process of reconstructing the file from the CID when we access the https://ipfs.io/ipfs/QmRUGmNrjKpZASL4DVjqFXr5groVxPwXByzr9dV2Pyr8Jq URL.

An IPFS gateway is included in the IPFS install, so we can use our own gateway instead of the one from ipfs.io. By default, IPFS only exposes the gateway locally, so we need to edit the $HOME/.ipfs/config file and update the Gateway property under Addresses to the following value:
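As a hedged illustration, the Python sketch below applies such a change to the JSON configuration. It assumes the default gateway address is /ip4/127.0.0.1/tcp/8080 and that /ip4/0.0.0.0/tcp/8080 (all interfaces, port 8080) is the intended value; both are assumptions, and the function names are hypothetical.

```python
import json
from pathlib import Path

# Assumed value: listen on all interfaces, port 8080.
PUBLIC_GATEWAY_ADDR = "/ip4/0.0.0.0/tcp/8080"


def expose_gateway(config: dict, listen_addr: str = PUBLIC_GATEWAY_ADDR) -> dict:
    """Return a copy of the config whose Addresses.Gateway is set to listen_addr."""
    updated = json.loads(json.dumps(config))  # deep copy of plain JSON data
    updated.setdefault("Addresses", {})["Gateway"] = listen_addr
    return updated


def rewrite_config_file(path: Path, listen_addr: str = PUBLIC_GATEWAY_ADDR) -> None:
    """Apply the change to a config file on disk (stop the daemon first)."""
    config = json.loads(path.read_text())
    path.write_text(json.dumps(expose_gateway(config, listen_addr), indent=2) + "\n")


# Assumed shape of the relevant part of the default configuration.
local_only = {
    "Addresses": {
        "API": "/ip4/127.0.0.1/tcp/5001",
        "Gateway": "/ip4/127.0.0.1/tcp/8080",
    }
}

print(expose_gateway(local_only)["Addresses"]["Gateway"])  # /ip4/0.0.0.0/tcp/8080
```

On the instance, rewrite_config_file(Path.home() / ".ipfs" / "config") would apply the change in place. Editing the file by hand achieves the same result.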

We also need to modify the security group of the IPFS server instance to allow inbound traffic on port 8080. To do that, select the ipfs-server instance on the Instances page of the Amazon EC2 console and on the Security tab, choose the security group of your instance. Choose Edit inbound rules and add a rule as shown in the following screenshot.

Save this rule and restart the IPFS daemon. We can now connect to the IPFS gateway using the http://<ipfs_instance_public_address>:8080/ipfs/QmRUGmNrjKpZASL4DVjqFXr5groVxPwXByzr9dV2Pyr8Jq URL.

If you install the ipfs-companion extension or use a browser like Brave that supports IPFS natively, you can also open the ipfs://QmRUGmNrjKpZASL4DVjqFXr5groVxPwXByzr9dV2Pyr8Jq URL directly.
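The three ways of addressing the same content (public gateway, our own gateway, and the native scheme) follow one pattern, which the short Python sketch below makes explicit. The helper names are hypothetical, and 203.0.113.10 is a documentation-only placeholder for the public address of the instance.

```python
def gateway_url(base: str, cid: str, path: str = "") -> str:
    """Build a path-style gateway URL: <base>/ipfs/<cid>[/<path>]."""
    suffix = "/" + path.lstrip("/") if path else ""
    return f"{base.rstrip('/')}/ipfs/{cid}{suffix}"


def native_url(cid: str, path: str = "") -> str:
    """Build an ipfs:// URL for browsers or extensions that understand the scheme."""
    suffix = "/" + path.lstrip("/") if path else ""
    return f"ipfs://{cid}{suffix}"


CID = "QmRUGmNrjKpZASL4DVjqFXr5groVxPwXByzr9dV2Pyr8Jq"

print(gateway_url("https://ipfs.io", CID))
print(gateway_url("http://203.0.113.10:8080", CID))  # placeholder instance address
print(native_url(CID))
```

Only the base changes between gateways; the CID stays the same because it identifies the content rather than its location.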

Archive data on Amazon S3

IPFS offers the possibility to pin CIDs (which can be used to reconstruct files) that we want to keep. By default, all newly added files are pinned. We can double-check that this is the case for the file that we previously created:
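One way to script that check is sketched below in Python. It assumes that `ipfs pin ls` prints one pin per line as a CID followed by the pin type; the helper names and the second CID in the sample output are hypothetical.

```python
import subprocess


def pinned_cids(pin_ls_output: str) -> dict[str, str]:
    """Map each CID to its pin type, given `ipfs pin ls`-style output."""
    pins = {}
    for line in pin_ls_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            pins[parts[0]] = parts[1]
    return pins


def list_recursive_pins() -> dict[str, str]:
    """Query the local node (requires the ipfs binary on PATH)."""
    result = subprocess.run(
        ["ipfs", "pin", "ls", "--type=recursive"],
        capture_output=True, text=True, check=True,
    )
    return pinned_cids(result.stdout)


# Sample output in the assumed format; the second CID is a made-up placeholder.
SAMPLE_OUTPUT = """\
QmRUGmNrjKpZASL4DVjqFXr5groVxPwXByzr9dV2Pyr8Jq recursive
QmExamplePlaceholderCidForIllustrationOnly recursive
"""

pins = pinned_cids(SAMPLE_OUTPUT)
print("QmRUGmNrjKpZASL4DVjqFXr5groVxPwXByzr9dV2Pyr8Jq" in pins)  # True
```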

We can access the file that corresponds to this CID as long as the various blocks of the DAG are available on the IPFS network. To make sure this is the case, we can use an IPFS pinning service. Alternatively, we can simply archive the data on Amazon S3, and add it back to IPFS whenever needed.

Let’s work with the multi-page website from the IPFS documentation.

First, download the files provided in the documentation:

The following folder has been created:

Update the content of the file about.html (for example, by updating the Created by ___ line) so we have a unique version of the website (and therefore a CID that is not already on the IPFS network).

Add the entire folder to IPFS (you will see different CIDs):

Record the CID of the multi-page-website object:

We can query the website through the IPFS gateway using the http://<ipfs_instance_public_address>:8080/ipfs/<CID of multi-page-website e.g. QmV5wgziYJoTXhcyzNq8WzBuY6V5uYDMct22M3ugi585MU>/index.html URL.

Let’s archive this version of the website to Amazon S3.

To get an archive of the website, we use the get command against the website root CID:

A website-backup.tar.gz archive of the website has just been created. Keep in mind that the same content can correspond to multiple CIDs, depending on the parameters that were used while adding the content to IPFS. Therefore, we should not expect to get the same CID while adding this archive back to IPFS. Having said that, IPFS content is often advertised by its CID, so it might be useful to record the CID and its generation parameters in the metadata of the S3 backup object (refer to the IPFS documentation for the full list of parameters).
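The archive and upload steps can be sketched as two command builders in Python. This is a sketch under several assumptions: the metadata keys and the generation parameters shown (chunker, CID version, hash function) are illustrative values, not ones confirmed by this post; the bucket name is a placeholder; and `ipfs get` is assumed to append the .tar.gz suffix to the output name when archiving with compression.

```python
def ipfs_get_command(cid: str, output_name: str = "website-backup") -> list[str]:
    """Build an `ipfs get` call that writes a gzip-compressed tar archive."""
    # --archive writes a tar file and --compress gzips it; the .tar.gz suffix
    # is assumed to be appended to output_name by the CLI.
    return ["ipfs", "get", cid, "--archive", "--compress", "--output", output_name]


def s3_upload_command(archive: str, bucket: str, metadata: dict[str, str]) -> list[str]:
    """Build an `aws s3 cp` call that attaches user-defined metadata."""
    # AWS CLI shorthand for --metadata is key1=value1,key2=value2
    # (values must not contain commas in this form).
    pairs = ",".join(f"{key}={value}" for key, value in metadata.items())
    return ["aws", "s3", "cp", archive, f"s3://{bucket}/{archive}", "--metadata", pairs]


WEBSITE_CID = "QmV5wgziYJoTXhcyzNq8WzBuY6V5uYDMct22M3ugi585MU"

# Hypothetical metadata: the CID plus the parameters assumed to have produced it.
metadata = {
    "cid": WEBSITE_CID,
    "chunker": "size-262144",
    "cid-version": "0",
    "hash": "sha2-256",
}

print(" ".join(ipfs_get_command(WEBSITE_CID)))
print(" ".join(s3_upload_command("website-backup.tar.gz", "my-ipfs-backup-bucket", metadata)))
```

Each list can be passed to subprocess.run(..., check=True) on a machine where both CLIs are installed and configured. Storing the CID next to its parameters makes it possible to check later that a restored copy resolves to the advertised CID.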

Create an S3 bucket to store the backup:

Upload the backup with specific metadata:

Now let’s test our backup.

Delete the archive and unpin the CID of the website:

Unless someone else pinned the CID of the website (which is highly unlikely), our previous request to the website through the IPFS gateway should time out.

Let’s restore the website from the S3 archive:

We can check that the CID of the website has been rebuilt successfully and that we can access the webpage again.
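The local part of the restore (unpacking the downloaded archive and re-adding its content) can be sketched in Python as follows. The helper names are hypothetical. The sketch discovers the top-level folder of the archive instead of assuming its name, and it relies on the assumption that re-adding the extracted folder with the same parameters as the original add yields the same root CID.

```python
import tarfile
import tempfile
from pathlib import Path


def extract_backup(archive: Path, dest: Path) -> list[str]:
    """Extract a .tar.gz backup into dest and return its top-level entry names."""
    dest_root = dest.resolve()
    with tarfile.open(archive, "r:gz") as tar:
        members = tar.getmembers()
        for member in members:
            # Refuse entries that would be written outside the destination.
            target = (dest_root / member.name).resolve()
            if target != dest_root and dest_root not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        tar.extractall(dest_root, members=members)
    top_level = set()
    for member in members:
        parts = Path(member.name).parts
        if parts:
            top_level.add(parts[0])
    return sorted(top_level)


def readd_command(folder: Path) -> list[str]:
    """Build the `ipfs add -r` call that puts the restored folder back on IPFS."""
    return ["ipfs", "add", "-r", str(folder)]


# Self-contained demonstration with a tiny stand-in for the website archive.
with tempfile.TemporaryDirectory() as tmp:
    work = Path(tmp)
    site = work / "site"
    site.mkdir()
    (site / "index.html").write_text("<h1>hello</h1>")
    archive = work / "website-backup.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(site, arcname="site")

    restored = work / "restored"
    restored.mkdir()
    top = extract_backup(archive, restored)
    restored_ok = (restored / "site" / "index.html").read_text() == "<h1>hello</h1>"
    print(top, restored_ok)  # ['site'] True
```

On the instance, the archive would first be downloaded from the S3 bucket, and the list returned by readd_command would then be run against the extracted folder.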

Clean up

Delete the S3 bucket:

Delete the virtual machine:

On the Amazon EC2 console, choose Instances in the navigation pane.

Select the ipfs-server instance.

Select Instance state, and choose Terminate instance.

Conclusion

In this post, we installed an IPFS server on an Amazon EC2 instance, introduced several IPFS concepts, and tested the main IPFS features. In Part 2, we demonstrate how to deploy a production IPFS cluster on Amazon EKS.

About the Author

Guillaume Goutaudier is a Sr Partner Solution Architect at AWS. He helps companies build strategic technical partnerships with AWS. He is also passionate about blockchain technologies, and a member of the Technical Field Community for blockchain.
