Amazon DocumentDB (with MongoDB compatibility) is a scalable, highly durable, and fully managed database service for operating mission-critical MongoDB workloads without having to worry about managing the underlying infrastructure. As a document database, Amazon DocumentDB makes it easy to store, query, and index JSON data.

Amazon DocumentDB has added support for auditing Data Manipulation Language (DML) events. This support extends the existing Data Definition Language (DDL) auditing capabilities: with DML auditing, you can log read, insert, update, and delete operations to Amazon CloudWatch Logs. For the full list of supported events, refer to Supported Events.

Although Amazon DocumentDB doesn’t charge extra to enable DML auditing, you’re charged standard rates for using CloudWatch Logs. For more information, see Amazon CloudWatch pricing.

In this post, we discuss DML auditing, explain why it’s important for some organizations, and walk you through setting it up on Amazon DocumentDB. Then, using an example, we show how the native integration between Amazon DocumentDB and CloudWatch makes it easier to record and analyze audit trails (change events) from multiple instances in a cluster without additional tools.

Benefits of DML auditing

Some organizations that use Amazon DocumentDB and operate in highly regulated industry sectors may need to audit activity on the database for two main reasons: to comply with SOX, EFTA, PCI, HIPAA, and other governance and compliance regulations, and to enhance their security posture.

Compliance regulations – Maintaining an audit trail of data changes and access privileges for a specific duration is one of SOX’s requirements, and it helps you analyze any incidents involving regulated data. To identify who accessed or altered data, what changed, and when, where, and how it happened, the audit trail should contain the following information:

Time when change event happened

User who is responsible for the change event

Data or privilege that is changed

Origin of change event (for example, source IP)

What caused the change event (for example, insert, update, grant, and so on)

Enhance security posture – Database administrators managing sensitive database workloads may want to monitor database events so that they receive alerts when specific events, such as data deletions, bulk updates, or reads, take place. Without an audit trail of events, it can be difficult to assess the impact of such incidents and determine when and where a query originated. In some cases, these incidents are discovered only when a user complains, which can result in a poor user experience.

Let’s look at how the DML auditing capabilities of Amazon DocumentDB can help you achieve such compliance requirements and security needs for your organization.

Solution overview

Auditing is not enabled by default. You can enable it when you create a new cluster, or on an existing cluster by modifying its configuration through the AWS Management Console or AWS Command Line Interface (AWS CLI), without restarting your Amazon DocumentDB cluster. Enabling auditing is a two-step process: first, set the dynamic audit_logs parameter to one of the allowed values; second, enable Amazon DocumentDB to export logs to CloudWatch.

The audit_logs parameter is a comma-delimited list of events to log, specified in lowercase, which allows you to choose all events, specific events, or a combination of particular events. For example, you can choose to audit only DDL events, DML read events, or DML write events. Or you can select a combination of events like DDL and DML read, DDL and DML write, or DML read and DML write.

The audit_logs parameter can have one or a combination of the options outlined in the following table.

| Valid value | Description | Can be combined with other values |
|---|---|---|
| ddl | Enables auditing for DDL events | Yes |
| dml_read | Enables auditing for only DML read events | Yes |
| dml_write | Enables auditing for only DML write events | Yes |
| all | Enables auditing for all database events | No |
| none | Disables auditing for all events | No |

We don’t recommend using enabled or disabled as values for the audit_logs parameter, because they may be deprecated in the future. For detailed information about allowed values and combinations, refer to Supported Events.
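The rules in the table can be checked before you change the parameter. The following Python function is a minimal sketch that assumes only the values and combination rules listed above; the name validate_audit_logs is hypothetical, and Amazon DocumentDB performs its own validation when you save the parameter.

```python
# Values from the table above: the first three can be combined, the last two cannot.
COMBINABLE = {"ddl", "dml_read", "dml_write"}
STANDALONE = {"all", "none"}
# Not recommended because they may be deprecated; this sketch rejects them.
DISCOURAGED = {"enabled", "disabled"}


def validate_audit_logs(value: str) -> list:
    """Split a comma-delimited audit_logs value and raise ValueError if it breaks the table's rules."""
    events = value.split(",")
    for event in events:
        if not event:
            raise ValueError("empty entry in audit_logs value")
        if event != event.lower():
            raise ValueError(f"{event!r} must be lowercase")
        if event in DISCOURAGED:
            raise ValueError(f"{event!r} may be deprecated; use all or none instead")
        if event not in COMBINABLE | STANDALONE:
            raise ValueError(f"unknown audit_logs value {event!r}")
    # all and none must appear on their own.
    if len(events) > 1 and STANDALONE.intersection(events):
        raise ValueError("all and none cannot be combined with other values")
    return events


print(validate_audit_logs("ddl,dml_write"))  # ['ddl', 'dml_write']
```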

For this post, let’s use an Amazon DocumentDB cluster identified as dml-audit-demo to see DML auditing in action.

Prerequisites

You need a custom parameter group to start with. If you don’t have one already, create a new cluster parameter group and assign it to your Amazon DocumentDB cluster during creation. For instructions, refer to Creating Amazon DocumentDB Cluster Parameter Groups.

You can also modify an existing Amazon DocumentDB cluster to use a custom cluster parameter group.

Modify the audit_logs parameter

If you want to modify parameters in a custom parameter group using the AWS CLI, refer to Modifying Amazon DocumentDB Cluster Parameter Groups.

To modify the audit_logs parameter using the console, complete the following steps:

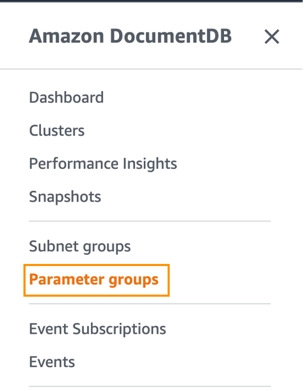

On the Amazon DocumentDB console, choose Parameter groups.

Locate and open your custom parameter group. Here we are using an existing custom parameter group docdb40dml.

Select the audit_logs parameter and choose Edit.

Set the value for the events you want to audit (for this post, we want to log all events, so we choose all).
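If you prefer to script this change, the same update can be made with the AWS SDK for Python (boto3) through the Amazon DocumentDB ModifyDBClusterParameterGroup API. This is a sketch that assumes boto3 is installed and your credentials allow modifying cluster parameter groups; docdb40dml is the parameter group from this post, and the helper names are hypothetical.

```python
def audit_logs_request(group_name: str, value: str) -> dict:
    """Build keyword arguments for the DocumentDB ModifyDBClusterParameterGroup call."""
    return {
        "DBClusterParameterGroupName": group_name,
        "Parameters": [
            {
                "ParameterName": "audit_logs",
                "ParameterValue": value,
                # audit_logs is a dynamic parameter, so the change can apply immediately.
                "ApplyMethod": "immediate",
            }
        ],
    }


def set_audit_logs(group_name: str, value: str) -> None:
    """Send the change to AWS; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without boto3

    client = boto3.client("docdb")
    client.modify_db_cluster_parameter_group(**audit_logs_request(group_name, value))


# Usage (requires AWS access):
# set_audit_logs("docdb40dml", "all")
```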

Enable CloudWatch Logs export

While you’re creating your cluster, make sure to enable audit log exports (as shown in the following screenshot). For more details, see Create a Cluster: Additional Configurations.

If you’re modifying an existing cluster using the console or AWS CLI, refer to the steps to enable exporting audit logs in Modifying an Amazon DocumentDB Cluster.
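Enabling the audit log export on an existing cluster can likewise be sketched with boto3’s modify_db_cluster call, which accepts a CloudwatchLogsExportConfiguration listing the log types to enable. This assumes boto3 and suitable credentials; dml-audit-demo is the cluster from this post, the helper names are hypothetical, and ApplyImmediately=True is our choice rather than a requirement stated in this post.

```python
def enable_audit_export_request(cluster_id: str) -> dict:
    """Build keyword arguments for ModifyDBCluster that turn on the audit log export."""
    return {
        "DBClusterIdentifier": cluster_id,
        # "audit" is the log type for audit events ("profiler" is the other DocumentDB log type).
        "CloudwatchLogsExportConfiguration": {"EnableLogTypes": ["audit"]},
        "ApplyImmediately": True,
    }


def enable_audit_export(cluster_id: str) -> None:
    """Send the change to AWS; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without boto3

    boto3.client("docdb").modify_db_cluster(**enable_audit_export_request(cluster_id))


# Usage (requires AWS access):
# enable_audit_export("dml-audit-demo")
```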

Access the audit records in CloudWatch

When auditing is enabled, Amazon DocumentDB creates a new CloudWatch log group for your cluster named /aws/docdb/cluster-identifier/audit, with its retention set to “never expire.” The log group contains a log stream for each of your cluster’s instances. Log stream names may begin with the instance name and end with a number (for example, dml-audit-demo2.1, where dml-audit-demo2 is the name of an instance in the Amazon DocumentDB cluster).

A user, group, or role with CloudWatch read permissions can access audit trails in CloudWatch Logs. You can change permissions by updating your AWS Identity and Access Management (IAM) policies. Refer to Customer managed policy examples for examples of IAM policies for CloudWatch. As a best practice, follow the principle of least privilege when creating IAM policies.

If you create an Amazon DocumentDB cluster using a cluster identifier that was used earlier by a cluster with profiling enabled, you may notice the new cluster sending its audit logs to the existing log groups, if they’re still present. This happens because deleting an Amazon DocumentDB cluster doesn’t delete its log groups.

Audit logs are recorded in JSON format and have the following main fields:

atype – Action type, which is one of the supported events

ts – Unix timestamp of the event

timestamp_utc – Time of the event in UTC format; not present for DDL events

remote_ip – IP address of the machine where the event originated, or the socket name if it’s a system event

users – Name of the user responsible for the event and the database on which the event was performed

param – A subdocument containing event details, including whether the event is an insert, delete, update, or other operation
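To map an audit record back to the who, what, when, and where questions from the compliance section, you can parse each record’s JSON. The following sketch uses only the Python standard library. The sample record is hypothetical: it carries just the fields listed above, and its users structure (a list of user and db pairs) and param values are assumptions for illustration, not copied from real output.

```python
import json

# Hypothetical audit record built from the fields described above (not real log output).
SAMPLE_RECORD = json.dumps({
    "atype": "authCheck",
    "ts": 1650000000000,
    "timestamp_utc": "2022-04-15 05:20:00.000",
    "remote_ip": "10.0.1.25:50512",
    "users": [{"user": "cases_user", "db": "catalog"}],
    "param": {"command": "insert", "ns": "catalog.products"},
})


def summarize(line: str) -> dict:
    """Answer who/what/when/where for one audit record."""
    record = json.loads(line)
    param = record.get("param") or {}
    return {
        # DDL records have no timestamp_utc, so fall back to the Unix timestamp.
        "when": record.get("timestamp_utc", record.get("ts")),
        "who": [u.get("user") for u in record.get("users", [])],
        "where": record.get("remote_ip"),
        # DML records carry the operation in param.command; otherwise use the action type.
        "what": param.get("command", record.get("atype")),
        "namespace": param.get("ns"),
    }


print(summarize(SAMPLE_RECORD)["what"])  # insert
```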

To access the audit record, complete the following steps:

Connect to your Amazon DocumentDB cluster and insert a document into a new collection called products in the catalog database using the following query:

From the list of log groups, locate and choose your log group /aws/docdb/yourClusterIdentifier/audit. The following screenshot shows the CloudWatch log group for the cluster dml-audit-demo.

To see the logs, select a particular log stream or choose Search all on the Log streams tab.

The following screenshot shows the DDL events generated by the creation of the catalog.products collection.

The following is the audit trail for the insert query (DML events are recorded under atype:authCheck):
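Besides the console, the audit trail can be read programmatically. The following sketch uses the boto3 filter_log_events paginator to search every instance’s log stream in the audit log group at once, much like Search all. It assumes boto3 and credentials that allow reading CloudWatch Logs; the function names are hypothetical, and the default filter pattern, which keeps only authCheck (DML) records, is our choice.

```python
def audit_log_group(cluster_id: str) -> str:
    """Log group that Amazon DocumentDB uses for a cluster's audit logs."""
    return f"/aws/docdb/{cluster_id}/audit"


def iter_audit_messages(cluster_id: str, pattern: str = '{ $.atype = "authCheck" }'):
    """Yield (log_stream_name, message) pairs from all instance log streams of the cluster."""
    import boto3  # imported lazily so audit_log_group works without boto3

    logs = boto3.client("logs")
    paginator = logs.get_paginator("filter_log_events")
    pages = paginator.paginate(logGroupName=audit_log_group(cluster_id), filterPattern=pattern)
    for page in pages:
        for event in page.get("events", []):
            yield event["logStreamName"], event["message"]


# Usage (requires AWS access):
# for stream, message in iter_audit_messages("dml-audit-demo"):
#     print(stream, message)
```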

Analyze the audit trail data

We use the cases dataset to show how you can analyze an Amazon DocumentDB audit trail to meet the compliance and security requirements mentioned earlier. Using mongoimport, we imported the dataset into the case_data collection in both the cases_db and reports_db databases in our dml-audit-demo Amazon DocumentDB cluster. The cluster has two users: cases_user and reports_user.

Consider a situation where data in cases_db is permanent, but data in reports_db can be deleted by its users. An extract, transform, and load (ETL) process refreshes reports_db data regularly from cases_db. In this scenario, the reports_db user accidentally deletes a set of records in cases_db rather than in reports_db.

With a mechanism that sends automated alerts in such cases, database administrators can act quickly and put preventive measures in place to avoid a recurrence. Let’s see how to configure a CloudWatch alarm that sends an alert when such an event occurs.

To do this, we create a metric filter in CloudWatch that searches for events of interest to us (delete events on cases_db). Then we use this filter to create a CloudWatch alarm. To see the CloudWatch alarm in action, we delete some documents in cases_db.
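Before creating the filter, it can help to see the condition it expresses: a record matches only when param.command is delete and param.ns is cases_db.case_data. The following Python function is a local stand-in for that condition, useful for testing sample records; it is not CloudWatch’s filter engine, and the function name is hypothetical.

```python
import json


def matches_delete_filter(line: str, namespace: str = "cases_db.case_data") -> bool:
    """Return True when an audit record is a delete on the given namespace."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return False  # a JSON filter pattern can only match JSON log events
    if not isinstance(record, dict):
        return False
    param = record.get("param")
    if not isinstance(param, dict):
        return False
    return param.get("command") == "delete" and param.get("ns") == namespace
```

A delete recorded against reports_db.case_data returns False, so legitimate deletes in reports_db don’t count toward the metric.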

Create a metric filter

To create a metric filter, complete the following steps:

On the CloudWatch console, open your log group /aws/docdb/dml-audit-demo/audit.

On the Actions menu, choose Create metric filter.

Because we’re interested in monitoring deletes on the case_data collection in the cases_db database, we define our filter pattern as { $.param.command = "delete" && $.param.ns = "cases_db.case_data" } and choose Next.

For more information on filter patterns with JSON log events, see Using metric filters to match terms and extract values from JSON log events.

Next, we assign metrics to our filter pattern.

For Filter name, enter deletes_on_cases_db.

For Metric namespace, enter DML_Audit_Namespace.

For Metric name, enter deletes_cases_db_metric.

For Metric value, enter 1.

Choose Next.

On the next page, review the details and choose Create metric filter.
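The same metric filter can be sketched with the boto3 CloudWatch Logs put_metric_filter call, reusing the names entered in the console steps above. This assumes boto3 and credentials that allow creating metric filters; the helper names are hypothetical. Note that the API takes metricValue as a string.

```python
FILTER_PATTERN = '{ $.param.command = "delete" && $.param.ns = "cases_db.case_data" }'


def metric_filter_request(cluster_id: str) -> dict:
    """Build keyword arguments for PutMetricFilter with the values used in this post."""
    return {
        "logGroupName": f"/aws/docdb/{cluster_id}/audit",
        "filterName": "deletes_on_cases_db",
        "filterPattern": FILTER_PATTERN,
        "metricTransformations": [
            {
                "metricName": "deletes_cases_db_metric",
                "metricNamespace": "DML_Audit_Namespace",
                "metricValue": "1",  # each matching event adds 1 to the metric
            }
        ],
    }


def create_metric_filter(cluster_id: str) -> None:
    """Send the request to AWS; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without boto3

    boto3.client("logs").put_metric_filter(**metric_filter_request(cluster_id))


# Usage (requires AWS access):
# create_metric_filter("dml-audit-demo")
```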

Create a CloudWatch alarm

To create your CloudWatch alarm, complete the following steps:

On the CloudWatch console, locate and open your log group /aws/docdb/dml-audit-demo/audit.

On the Metric filters tab, select the metric filter created in the previous step and choose Create alarm.

Next, we configure values for our alarm. We want the alarm to fire as soon as even a single delete event occurs, so we use the smallest values that don’t incur additional charges:

For Period, select 1 minute

For Whenever metric is, select Greater/Equal

For than…, enter 1

Choose Next.

In the Configure actions section, we create a new Amazon Simple Notification Service (Amazon SNS) topic with an email endpoint and leave all other options at their defaults. You can use an existing topic in your environment or create a new SNS topic. If you create a new SNS topic, make sure to confirm the subscription.

On the next page, enter a name and description for the alarm, then choose Next.

Review and confirm all the values provided for the alarm.
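The alarm can also be sketched with the boto3 CloudWatch put_metric_alarm call, mirroring the console values above (1-minute period, Greater/Equal, threshold 1). The Sum statistic, a single evaluation period, TreatMissingData set to notBreaching, the alarm name, and the SNS topic ARN are assumptions to adjust for your environment; boto3 and credentials are needed only for the final call.

```python
def alarm_request(topic_arn: str, alarm_name: str = "deletes-on-cases-db") -> dict:
    """Build keyword arguments for PutMetricAlarm on the metric from the previous section."""
    return {
        "AlarmName": alarm_name,  # hypothetical name
        "Namespace": "DML_Audit_Namespace",
        "MetricName": "deletes_cases_db_metric",
        "Statistic": "Sum",  # total deletes counted in each period
        "Period": 60,  # 1 minute, in seconds
        "EvaluationPeriods": 1,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # minutes with no deletes count as OK
        "AlarmActions": [topic_arn],  # SNS topic that emails the administrators
    }


def create_alarm(topic_arn: str) -> None:
    """Send the request to AWS; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without boto3

    boto3.client("cloudwatch").put_metric_alarm(**alarm_request(topic_arn))


# Usage with a hypothetical topic ARN (requires AWS access):
# create_alarm("arn:aws:sns:us-east-1:111122223333:dml-audit-alerts")
```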

Validate the alarm

We now connect to our Amazon DocumentDB cluster dml-audit-demo and simulate an accidental delete to trigger the alarm.

Connect to the cluster as reports_user and run queries against the case_data collection in cases_db to remove documents whose Cases value equals zero.

As shown in the following screenshot, out of 320,341 total documents in the collection, 192,093 documents were removed by the db.case_data.remove({Cases: {$eq: 0}}) query.

The remove query also created the following audit trail in CloudWatch Logs.

Because we set up the alarm to notify us in case of such an event, CloudWatch triggered the following email notification.

To meet compliance and security requirements, organizations can use Amazon DocumentDB DML auditing features to maintain and analyze their audit trails.

Disable DML auditing

To disable auditing, set the audit_logs parameter value in the cluster parameter group to none. If you want to disable only DML auditing and keep DDL auditing, set the audit_logs parameter value in the cluster parameter group to ddl.
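When auditing is configured with a combination of values, this rule can be applied mechanically. The following hypothetical helper returns the audit_logs value that stops DML auditing while keeping DDL auditing if it was on; it assumes the current value already follows the rules in the table earlier in this post.

```python
def without_dml(current: str) -> str:
    """Return the audit_logs value that turns off DML auditing but keeps any DDL auditing."""
    events = current.split(",")
    # all includes DDL events, so keeping DDL auditing means switching to ddl.
    if "all" in events or "ddl" in events:
        return "ddl"
    # Only DML categories (or none) were enabled, so nothing remains to audit.
    return "none"


print(without_dml("all"))  # ddl
```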

Note that DML auditing consumes database resources to record events, which may affect overall Amazon DocumentDB performance. The resources consumed depend on multiple factors, such as the workload on the Amazon DocumentDB instance and the event categories being audited.

Audit event logging is prioritized over database traffic. For efficient resource management, enable auditing only for the event categories you need.

Summary

In this post, we discussed the significance of DML auditing, showed how to set it up on Amazon DocumentDB, and demonstrated how to analyze audit trail data using a sample dataset.

For more information about the DML auditing feature, refer to Auditing Amazon DocumentDB Events.

About the authors

Kaarthiik Thota is a Senior DocumentDB Specialist Solutions Architect at AWS based out of London. He is passionate about database technologies and enjoys helping customers solve problems and modernize applications leveraging NoSQL databases. Before joining AWS, he worked extensively with relational databases, NoSQL databases, and business intelligence technologies for more than 14 years.

Dipti Ranjan Sahoo is a Senior Product Manager at AWS based out of Seattle. He has over 15 years of experience in building successful networking, analytics, and database products used by many enterprises worldwide. He is passionate about building internet-scale, high-performance products and solutions in the public cloud. Before joining AWS, he worked extensively on public cloud technologies, including databases, analytics, networking, and security, for more than 14 years.

AWS Database Blog