Amazon Timestream is a fully managed, scalable, and secure time series database designed for workloads such as infrastructure observability, user behavioral analytics, and Internet of Things (IoT). It’s built to handle trillions of events per day and designed to scale horizontally to meet your needs. With features like multi-measure records and scheduled queries, Timestream enables you to analyze time series data and gain valuable insights in a cost-effective way.

The flexible data model of Timestream allows you to store and query data in a way that makes sense for your use case. Whether you are tracking performance metrics for a fleet of devices, or analyzing customer behavior in real time, Timestream can address your needs. And now, with the introduction of customer-defined partition keys, you can access your time series data faster than before due to query optimizations tailored to your specific needs.

The need for customer-defined partition keys

Many customers and internal services, like our internal Alexa Voice Service (AVS) team, use Timestream because their use cases require highly scalable ingestion and optimized query execution. As their systems scaled over time, we learned that although the existing partitioning schema enabled scaling, there was still a need for a flexible option that could accommodate query patterns more attuned to their use cases. These patterns differed from the fleet-wide trend tracking and analysis on measures we had initially optimized for.

With this in mind, we are excited to announce the launch of customer-defined partition keys, a new feature that provides the flexibility you need to speed up your queries and derive insights more efficiently based on your specific time series data needs. Partitioning is a technique used to distribute data across multiple physical storage units, allowing for faster and more efficient data retrieval. With the customer-defined partition keys feature, you can create a partitioning schema that better aligns with your query patterns and use cases.

In Timestream, a record is a single data point in a time series. Each record contains three parts:

Timestamp – This usually indicates when data was generated for a given record

Set of dimensions – This is metadata that uniquely identifies an entity (for example, a device)

Measures – These represent the actual value being measured by a record and tracked in a time series
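As a minimal sketch of how these three parts fit together, the following Python function assembles one multi-measure record as a plain dictionary in the shape accepted by the Timestream `WriteRecords` API. The dimension names (`device_id`, `region`), the measure names, and the sample values are hypothetical and chosen only for illustration.

```python
import time


def build_record(device_id: str, region: str, cpu: float, mem: float, ts_ms: int) -> dict:
    """Assemble one multi-measure record as a plain dictionary."""
    return {
        # Timestamp: when the data point was generated (sent as a string).
        "Time": str(ts_ms),
        "TimeUnit": "MILLISECONDS",
        # Dimensions: metadata that identifies the entity (hypothetical names).
        "Dimensions": [
            {"Name": "device_id", "Value": device_id},
            {"Name": "region", "Value": region},
        ],
        # Measures: the values tracked over time (hypothetical names).
        "MeasureName": "device_metrics",
        "MeasureValueType": "MULTI",
        "MeasureValues": [
            {"Name": "cpu_utilization", "Value": str(cpu), "Type": "DOUBLE"},
            {"Name": "memory_utilization", "Value": str(mem), "Type": "DOUBLE"},
        ],
    }


record = build_record("device-0001", "us-east-1", 35.2, 61.0, int(time.time() * 1000))
```

A list of such dictionaries could then be passed as the `Records` argument of a write request.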

To learn more about Timestream key concepts, refer to Amazon Timestream concepts.

By allowing you to choose a specific dimension to partition your data on, Timestream reduces the amount of data scanned during queries, thereby substantially reducing query latency for access patterns that match your partitioning schema. For instance, filtering by customer ID, device ID, or location is a very common access pattern among our customers. By choosing any such dimension as your partition key, you can optimize your queries and get the most out of your time series data.

With this added flexibility, Timestream will be able to better adapt to specific customer workloads, and we are excited to see the innovative ways you will use this new feature to extract more value from your data.

How customer-defined partition keys work

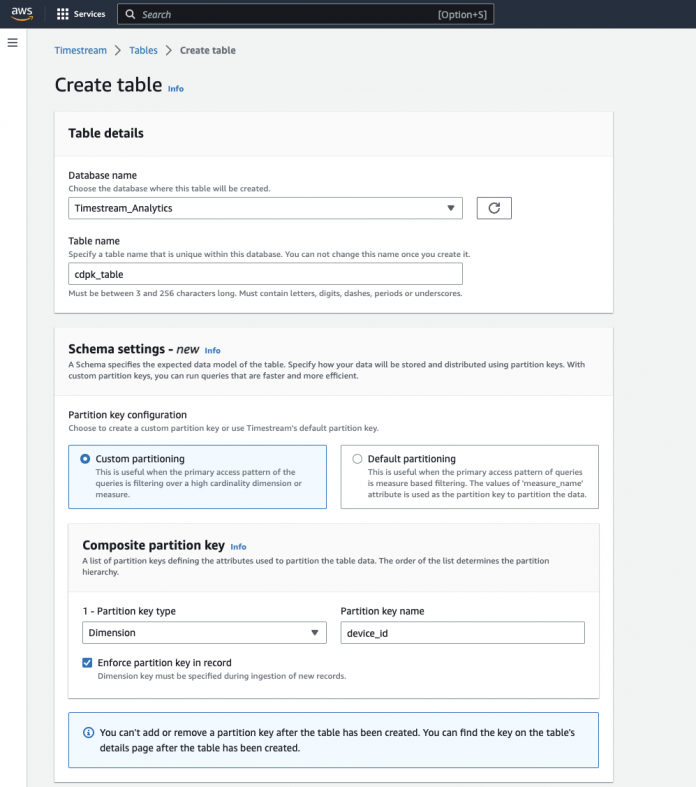

To get started, simply create a new table and select a specific partition key based on your most common access pattern. Usually, this is your main filtering condition in your query predicate (for example, customer ID or device ID). Identifying and selecting the right dimension by which to partition will ensure you get the best possible performance from the feature.

You can also optionally configure your Timestream table to accept only records containing non-null values for your partition key. This further optimizes how data is partitioned in your table for the best query performance.

When choosing the partition key type, don’t forget to choose Dimension if you want to choose your own dimension. If you choose Measure name, Timestream will adopt the default partitioning schema, which is better suited to analyzing fleet-wide variations of a specific measure over time.
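The same choice can be made programmatically. The following is a sketch, assuming the boto3 `timestream-write` client and its `create_table` `Schema` parameter; the database name, table name, and dimension name are hypothetical, and the parameter shapes should be checked against the current API reference before use.

```python
def build_create_table_request(database: str, table: str,
                               partition_dimension: str,
                               enforcement: str = "REQUIRED") -> dict:
    """Build keyword arguments for create_table with a dimension partition key."""
    if enforcement not in ("REQUIRED", "OPTIONAL"):
        raise ValueError("enforcement must be REQUIRED or OPTIONAL")
    return {
        "DatabaseName": database,
        "TableName": table,
        "Schema": {
            # Only one partition key can be chosen per table.
            "CompositePartitionKey": [
                {
                    "Type": "DIMENSION",  # the Dimension partition key type
                    "Name": partition_dimension,
                    "EnforcementInPartitionKey": enforcement,
                }
            ]
        },
    }


def create_table(request: dict) -> dict:
    """Send the request; needs the boto3 package and AWS credentials."""
    import boto3  # imported here so the builder above works without boto3
    client = boto3.client("timestream-write")
    return client.create_table(**request)


# Hypothetical names, for illustration only.
request = build_create_table_request("iot_db", "device_metrics", "DeviceModel")
```

Keeping the request builder separate from the call makes it possible to inspect or log the partitioning schema before the table is created.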

When we talk about common access patterns, we’re referring to the types of filters or predicates that are typically used when querying time series data. These filters may include things like a specific customer ID, device ID, or any other high-cardinality dimension that’s important to your business. For a hypothetical use case where the partition key is DeviceModel, a typical query would filter on DeviceModel in its WHERE clause.
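As a minimal sketch of such a predicate, the following Python helper builds a query string that filters on DeviceModel and a recent time range. The database name, table name, and model value are hypothetical; the point is only that the partition key appears as an equality filter.

```python
def build_device_model_query(database: str, table: str,
                             device_model: str, hours: int = 1) -> str:
    """Build a query whose main predicate is the partition key."""
    # Escape single quotes so the value stays inside the string literal.
    safe_model = device_model.replace("'", "''")
    return (
        f'SELECT * FROM "{database}"."{table}" '
        f"WHERE DeviceModel = '{safe_model}' "
        f"AND time > ago({int(hours)}h)"
    )


def run_query(query_string: str) -> dict:
    """Run the query; needs the boto3 package and AWS credentials."""
    import boto3  # imported here so the builder above works without boto3
    client = boto3.client("timestream-query")
    return client.query(QueryString=query_string)


# Hypothetical names and values, for illustration only.
query = build_device_model_query("iot_db", "device_metrics", "model-x", hours=6)
```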

For example, if you’re using Timestream to store data from smart devices or IoT applications, you might want to partition your data based on the device model. This would allow you to quickly retrieve data for a specific set of devices without having to scan through all of the data in the table. Similarly, if you’re storing data about customer interactions, consider partitioning your data based on the customer ID to quickly retrieve all interactions for a specific customer. This will also provide visibility of all customer-associated devices.

In cases where multiple access patterns could be derived from the same raw data, you could even use a scheduled query to populate a derived table with a different partition key for each use case, for example, one to understand behavior by device model and another for root cause analysis related to a specific customer issue.

By understanding your most common access patterns, you can choose the dimension that will be most beneficial as a partition key. This will allow Timestream to optimize query performance for those specific access patterns, leading to faster and more efficient analysis of your time series data.

After you choose your partitioning dimension, Timestream automatically partitions the ingested data to optimize the performance of queries that filter on that dimension. It’s important to carefully consider your query patterns and select the most appropriate dimension as the partition key, because the partition key cannot be changed after the table is created.

To ensure the accuracy of your data and maximize query efficiency, we are also launching a new capability called schema enforcement. It lets you configure the table to reject write requests that don’t contain the dimension being used as the partition key. This helps ensure that your data is properly partitioned, leading to improved query performance and faster insights. We recommend setting the enforcement level to REQUIRED, which ensures that you get the full benefits of your partitioning schema. However, we understand that there may be cases where a small amount of data doesn’t contain the partitioning dimension and you still prefer to accept those records. In such cases, setting the enforcement level to OPTIONAL can be useful. Records that don’t contain the partitioning dimension will still be written to the table, but will be collocated with one another. You can change the enforcement level at any time during the table’s lifetime.
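The following sketch illustrates both halves of this: building a request that changes the enforcement level on an existing table, and a client-side check for records that lack the partitioning dimension. It assumes the boto3 `timestream-write` client and its `update_table` `Schema` parameter; the table and dimension names are hypothetical, and the client-side check is an illustration only, not how Timestream enforces the schema internally.

```python
def build_enforcement_update(database: str, table: str,
                             partition_dimension: str, enforcement: str) -> dict:
    """Build keyword arguments for update_table that set the enforcement level."""
    if enforcement not in ("REQUIRED", "OPTIONAL"):
        raise ValueError("enforcement must be REQUIRED or OPTIONAL")
    return {
        "DatabaseName": database,
        "TableName": table,
        "Schema": {
            "CompositePartitionKey": [
                {
                    "Type": "DIMENSION",
                    "Name": partition_dimension,  # the key itself stays the same
                    "EnforcementInPartitionKey": enforcement,
                }
            ]
        },
    }


def records_missing_partition_key(records: list, partition_dimension: str) -> list:
    """Return the records that do not carry the partitioning dimension."""
    missing = []
    for record in records:
        names = {d["Name"] for d in record.get("Dimensions", [])}
        if partition_dimension not in names:
            missing.append(record)
    return missing


def apply_update(request: dict) -> dict:
    """Send the request; needs the boto3 package and AWS credentials."""
    import boto3  # imported here so the helpers above work without boto3
    return boto3.client("timestream-write").update_table(**request)


# Hypothetical sample data, for illustration only.
sample = [
    {"Dimensions": [{"Name": "DeviceModel", "Value": "model-x"}]},
    {"Dimensions": [{"Name": "region", "Value": "us-east-1"}]},
]
rejected_under_required = records_missing_partition_key(sample, "DeviceModel")
update = build_enforcement_update("iot_db", "device_metrics", "DeviceModel", "OPTIONAL")
```

Running a check like this before ingestion gives a rough idea of how many records would be rejected under REQUIRED, which can inform whether OPTIONAL is the more practical setting.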

Using customer-defined partition keys: A case study

Let’s look at an example of how our internal AVS device monitoring team benefited from the customer-defined partition keys feature to monitor the performance of millions of in-field connected devices. The AVS device monitoring team ingests data from millions of connected devices on a daily basis, including device metrics, system health metrics, and cloud-side metrics. The goal is to ensure a delightful customer experience across all their devices. With such a large volume of data, it’s important to have an efficient way of analyzing data at the entity level. In this particular use case, an entity is an individual device or all the devices belonging to a particular form factor segment. The device monitoring system needs to analyze device and cloud-side metrics in near real time, detect any anomalies, and proactively mitigate these issues to minimize long-lasting impact on customer engagement.

The following diagram illustrates the architecture that was implemented for the above use case.

One possible data model could include data for region, device type, health metric, measure, and more. Defining a partition key on device type, for example, optimizes entity-level queries, such as root cause analysis of issues or deviations based on this particular attribute. This partitioning schema collocates data in a way that closely matches the team’s query patterns, thereby reducing the amount of data scanned during queries. This improved query performance and latency, allowing for faster insight generation and issue detection so the team could act quickly.

Let’s look at an example data model.

| Column Name | Data Type | Column Type |
| --- | --- | --- |
| app_name | varchar | dimension |
| date_time_emitted | timestamp | time |
| date_time_received | timestamp | measure |
| time_spent | double | measure |
| event_id | varchar | dimension |
| event_type | varchar | dimension |
| device_type | varchar | dimension |
| device_model | varchar | dimension |
| segment | varchar | dimension |
| domain | varchar | dimension |
| metric_name | varchar | dimension |
| metric_value | double | measure |

This data model contains a few dimensions with a cardinality that could be in the millions. In this data model, the device monitoring team chose the device_type dimension as the partition key. Let’s look at a few examples that would see performance benefits if data is partitioned by app_name, device_type, or device_model (Timestream allows only one partition key to be chosen on a table). With this schema, we could get very valuable insights, such as the following:

If your primary use case requires finding all devices used by a specific application ranked by times used, your ideal partition key is app_name.

If your primary use case is to find all failed and successful request events for a specific segment and calculate the average latency or outcome experience by device type, your ideal partition key is segment.

If your primary use case is to calculate latencies related to successful interactions, responses, and successful prompt detection for all domain events, your ideal partition key is domain.

If your goal is to generate dashboards that show the most problematic device type, based on failed events around the world, your ideal partition key is device_type.
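One rough way to apply the guidance above is to tally which dimension each of your queries uses as its main filter and pick the most frequent one. The following Python sketch does this with a simple count. It is an illustrative heuristic rather than a Timestream feature, and the list of observed filters is made-up sample input.

```python
from collections import Counter


def suggest_partition_key(main_filters: list) -> str:
    """Return the dimension most often used as the main query filter."""
    counts = Counter(main_filters)
    if not counts:
        raise ValueError("no query filters were provided")
    # most_common(1) returns a list holding the single (dimension, count) pair.
    dimension, _count = counts.most_common(1)[0]
    return dimension


# Hypothetical workload: one entry per query, naming its main filter dimension.
observed_filters = [
    "device_type", "segment", "device_type",
    "app_name", "device_type", "domain",
]
suggested = suggest_partition_key(observed_filters)
```

Because a table accepts only one partition key, the remaining access patterns in such a tally are candidates for derived tables populated by scheduled queries.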

By using the customer-defined partition keys feature in conjunction with other features like scheduled queries, we have observed improved query performance and further cost optimization thanks to more efficient data access patterns. To learn more about scheduled queries, refer to Using scheduled queries in Timestream.

Now it’s time to optimize your queries!

The customer-defined partition keys feature can be a powerful tool for optimizing query performance on entity-level analysis. By understanding your most common access patterns and choosing a high-cardinality dimension that fits most of your data access needs, you can benefit from faster query times and improved insights. In the device monitoring use case above, optimizing queries for specific dimension-level analysis helped the team better understand their device performance and improve their service accordingly. With the added benefit of schema enforcement, you can ensure that your tables are properly configured for your specific needs by deciding whether to reject or allow write requests that don’t contain the dimension being used as the partition key. Get started with Amazon Timestream and customer-defined partition keys for free by taking advantage of our 30-day free trial, and start enhancing your time series workloads!

About the authors

Victor Servin is a Senior Product Manager for the Amazon Timestream team at AWS, bringing over 18 years of experience leading product and engineering teams in the Telco vertical. With an additional 5 years of expertise in supporting startups with Product Led Growth strategies and scalable architecture, Victor’s data-driven approach is well suited to drive the adoption of analytical products like Timestream. His extensive experience and commitment to customer success allow him to help customers achieve their goals efficiently.

Yogeesh Rajendra is a tenured Engineering Tech Lead at Alexa Amazon in Bellevue, WA, USA. With over 10 years of expertise in software development and AWS technologies, he excels in building highly scalable and robust distributed systems for customers. Yogeesh’s focus is on handling high-volume, high-variance, and high-velocity data, ensuring that customer experiences on Alexa-enabled devices worldwide are seamless and never compromised. His technical leadership and collaborative approach enable him to deliver innovative solutions, continuously enhancing the capabilities and performance of Alexa devices.

Ramsundar Muthusubramanian is a Senior Data Engineer at Alexa Amazon in Bellevue. With over 12 years of experience in data warehousing and analytics, Ram has delivered big data projects like Realtime Engine Telemetrics, Demand Forecasting Data Analytics, and Realtime Certification Analytics using a range of AWS services like Redshift, Kinesis Streams, DynamoDB, Kinesis Firehose, and Lambda. He excels in designing data lake architectures and solving data problems with best data modeling practices.

Seshadri Pillailokam is a Senior Software Dev Manager, Alexa Developer Experience and Customer Trust. He runs the engineering org that builds a few monitoring products for Alexa using various AWS technologies. For the last few years, he has been solving security and risk challenges through various big data technologies to keep his customers secure. Previously, he worked for over a decade on developer-facing tools and technologies for Amazon and others.

Brutus Martin is a Software Development Manager, Alexa Developer Experience and Customer Trust. His team is responsible for building the platforms, tools, and systems required to monitor 3P content and devices to ensure customer safety, trust, and a better experience. Brutus has 17 years of experience in building software services and products. His team’s main expertise is in building distributed, scalable, and extensible systems that detect anomalies and analyze digital content across text, image, audio, and video for policy, security, and functional infractions that could cause a trust-busting experience for customers. Brutus also manages a data team that integrates data from various systems across Alexa teams to build business and technical insights.

Read more: AWS Database Blog