With the simulated DNS caching, we again observed approximately 10 seconds of downtime, but then the client started reporting a connection to a “reader”, and never switched to the “writer”. It demonstrates that the client didn’t follow the DNS update, and kept sending queries to the old primary instance indefinitely. If the old primary was affected by a real issue, we would have seen connection errors instead of the instance responding as “reader”. This demonstrates our second challenge: a client that’s subject to DNS caching might be late to recognize the new writer, or it might not recognize it at all.

A similar issue can occur in real-world applications due to DNS caching in the application stack. It can be caused by a caching DNS resolver used in networking setup, a DNS cache running in the client’s operating system, or even the application’s execution environment settings (e.g., DNS TTL settings in Java Virtual Machines).

In real-world environments, you can identify potential issues caused by DNS propagation delays by observing application behavior during events that involve an endpoint change. You might see the following symptoms if DNS delays are involved:

In order to investigate a potential DNS-related connectivity issue, consult the RDS event records to confirm when the underlying event (e.g., a failover) started, and when it finished. Then, review the application logs and identify the time frame when the application experienced matching symptoms. If the application began experiencing symptoms at the start of the event, but didn’t recover until long after the event finished, it’s a sign of the application not picking up the DNS change.

If you believe that your application might be impacted by DNS propagation delays, there are several solutions you can consider.

As noted earlier, Aurora DNS zones use a Time to Live (TTL) of 5 seconds. You can confirm this by resolving an endpoint using the dig command. The ANSWER section of the response shows the endpoint’s IP address and the TTL of the record:

You’ll also find the DNS server address towards the bottom of the output. If the server is different than the default VPC DNS server (network range plus two, here it’s 172.31.0.2), the client is using a custom DNS server that might introduce delays on top of the default TTL. You’ll notice that as you run the command several times, the TTL will count down to zero before resetting to the base value. A base value different than 5 indicates that the client’s DNS server overrides the default TTL.

As an example, the following output indicates that the client uses a local DNS cache (indicated by the server address 127.0.0.1), and the TTL is 120 seconds (2 minutes). As a result, this client could experience up to 2 minutes of additional downtime during an Aurora failover:

[ec2-user@ip-XX-XX-XX-XX ~]$ dig <Aurora read/write endpoint>

…

;; ANSWER SECTION:

<Aurora read/write endpoint>. 120 IN A XX.XX.XX.XX

…

;; SERVER: 127.0.0.1#53(127.0.0.1)

…

There’s nothing fundamentally wrong with using a caching DNS resolver, and it might even be necessary in order to prevent DNS resolution throttling. That said, if you must use a DNS cache, keep the TTL as low as possible, and avoid overriding the default TTLs with higher custom values. When investigating DNS caching issues, remember to check all layers of the application stack, including virtual environments (e.g., JVM) and containers.

Remember that the DNS system is hierarchical, so any TTL value configured at the caching layer will count in addition to the existing 5-second TTL of the Aurora zones. As such, adjusting client settings can help avoid additional DNS delays (and thus, additional downtime) on top of the default DNS behavior, but the client will still rely on DNS to recognize changes in instance roles.

Use Amazon RDS Proxy

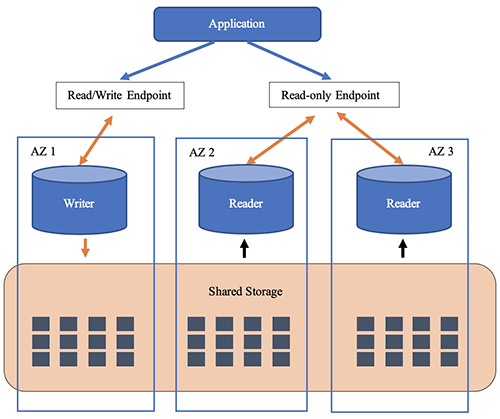

Amazon RDS Proxy is a managed proxy for Amazon Aurora and Amazon Relational Database Service (RDS). In addition to the common proxy features such as connection pooling and multiplexing, RDS Proxy helps minimize application downtime by connecting directly to database instances, bypassing the cluster DNS endpoints.

When paired with Aurora, RDS Proxy provides read/write and read-only DNS endpoints that can be used instead of the endpoints available with Aurora clusters. The key difference is that the proxy endpoints don’t change during database failovers, so there’s no DNS update that the clients could miss. The proxy continues to accept client connections under the same endpoint/IP, monitors the status of database instances, and automatically directs read and write queries to the available instances. It can even handle failovers without dropping idle connections, which further reduces impact on application’s availability.

Using RDS Proxy helps avoid all of the issues listed previously:

The clients no longer need to recognize changes in instance roles. The RDS Proxy tracks instance roles automatically based on the cluster metadata, without using DNS.

The Proxy provides a static endpoint that doesn’t change, so the clients won’t see delays or connection errors as a result of DNS changes, such as when instances are added, deleted, or renamed.

The Proxy considers the connection count on each replica before eastablishing new ones, so the risk of uneven workload distribution is greatly reduced.

For more information about using RDS Proxy with Aurora clusters, refer to Getting started with RDS Proxy.

Let’s demonstrate RDS Proxy using the client command we introduced earlier. This time, we’ll use two simultaneous client sessions for a side-by-side comparison. The commands are identical except for the DNS endpoints: one of the sessions pings the cluster’s writer endpoint, and the other session pings the proxy read/write endpoint. DNS cache is not used, and we removed the harcoded cluster IP from /etc/hosts.

Example command using the built-in cluster writer endpoint:

while true; do mysql -haurora-test.cluster-xxx.us-west-2.rds.amazonaws.com –batch –skip-column-names -e “select now(), if(@@innodb_read_only = 1, ‘reader’, ‘writer’)”; sleep 1; done;

Example command using a proxy endpoint:

while true; do mysql -htest-proxy.proxy-xxx.us-west-2.rds.amazonaws.com –batch –skip-column-names -e “select now(), if(@@innodb_read_only = 1, ‘reader’, ‘writer’)”; sleep 1; done;

With both commands running, initiate a failover and observe the outputs. The results of using the cluster writer endpoint look familiar:

2022-12-13 02:22:41 writer

2022-12-13 02:22:42 writer

2022-12-13 02:22:43 writer

ERROR 2003 (HY000): Can’t connect to MySQL server on ‘aurora-test.cluster-xxx.us-west-2.rds.amazonaws.com:3306’ (111)

ERROR 2003 (HY000): Can’t connect to MySQL server on ‘aurora-test.cluster-xxx.us-west-2.rds.amazonaws.com:3306’ (111)

ERROR 2003 (HY000): Can’t connect to MySQL server on ‘aurora-test.cluster-xxx.us-west-2.rds.amazonaws.com:3306’ (111)

ERROR 2003 (HY000): Can’t connect to MySQL server on ‘aurora-test.cluster-xxx.us-west-2.rds.amazonaws.com:3306’ (111)

ERROR 2003 (HY000): Can’t connect to MySQL server on ‘aurora-test.cluster-xxx.us-west-2.rds.amazonaws.com:3306’ (111)

ERROR 2003 (HY000): Can’t connect to MySQL server on ‘aurora-test.cluster-xxx.us-west-2.rds.amazonaws.com:3306’ (111)

ERROR 2003 (HY000): Can’t connect to MySQL server on ‘aurora-test.cluster-xxx.us-west-2.rds.amazonaws.com:3306’ (111)

2022-12-13 02:22:51 reader

2022-12-13 02:22:52 writer

2022-12-13 02:22:53 writer

And here’s the output for the proxy endpoint, annotated for readability:

2022-12-13 02:22:41 writer

2022-12-13 02:22:42 writer

2022-12-13 02:22:43 writer << downtime starts here

2022-12-13 02:22:45 writer << downtime ends here

2022-12-13 02:22:46 writer

2022-12-13 02:22:47 writer

2022-12-13 02:22:48 writer

2022-12-13 02:22:49 writer

2022-12-13 02:22:50 writer

2022-12-13 02:22:51 writer

Thanks to the proxy’s ability to quickly detect changes in instance roles, and with DNS updates out of the equation, the difference is quite striking. The client not only avoided connectivity errors, but the downtime window was merely two seconds long, which is 80% less than the downtime of DNS change (10 seconds).

Use AWS Database Drivers for MySQL and PostgreSQL

AWS Database Drivers are designed to reduce application downtime and avoid DNS propagation issues. The open source AWS JDBC Driver for MySQL and AWS JDBC Driver for PostgreSQL are great examples of a smart driver built for Java applications. When the driver connects to an Aurora cluster, it learns the cluster topology from the replication status tables. It then monitors the individual instances and updates its topology metadata accordingly. The topology metadata is used to direct client connections and queries to the appropriate instances.

The following diagram describes the interaction between the app, the AWS JDBC Driver for MySQL, and the database:

For each client session, the driver maintains a logical connection (app to driver) and a physical connection (driver to database). If a physical connection fails due to a failover, the driver notices the failure and initiates its own failover handling protocol while keeping the logical connection alive. It discovers a new writer instance as soon as one is promoted. Upon detecting a new writer, the driver opens a new physical connection and returns control to the client.

The AWS JDBC driver for MySQL uses a connection string URL preceded by jdbc:mysql:aws, but otherwise it can be used as a drop-in replacement for other MySQL drivers for Java:

jdbc:mysql:aws://<cluster name>.<cluster id>.<region>.rds.amazonaws.com:3306/<database name>?useSSL=<true>&characterEncoding=<utf-8>

The faster failover handling for Aurora is enabled by default, and can be disabled by adjusting driver parameters. Note that AWS JDBC Driver for MySQL is primarily useful in reducing the impact of failovers, but does not support connection multiplexing or load balancing which are available with RDS Proxy today. Since the driver operates on each client machine separately, the final result will partially depend on the client architecture and behavior.

To learn more about the failover performance of the AWS JDBC Driver compared to other drivers, read our post: Improve application availability with the AWS JDBC Driver for Amazon Aurora MySQL.

If your clients don’t use the JDBC interface, you can still use the driver’s failover handling logic as inspiration for building similar functionality into your application. When doing so, you can use the following building blocks:

The replication status tables for learning the cluster topology.

The innodb_read_only variable for determining instance role (writer or reader).

Health check queries with a timeout that’s short enough to detect issues quickly, but not short enough to cause false negative outcomes.

Improve application response in case of connectivity issues

The options described above can address availability and DNS propagation issues by resolving client configuration issues, or more broadly by introducing a smart abstraction layer between the client and the database. If you’re unable to use any of these options, there are still ways to improve application response to failovers and other connectivity-related events.

Examples:

Introduce explicit exception handling for query errors that indicate repeated read-only errors on connection that are expected to be writable. Upon detecting an error, the application could attempt remediation steps such as reopening the connection or restarting the application container.

Detect failovers and other availability events by subscribing to RDS events. Event subscriptions can be used to generate alerts for database operators, or to invoke automation routines such as application restarts.

Routinely analyze application logs to detect and investigate unexpected connection closures, timeouts, and other errors that suggest misalignment between actual database status, and the status assumed by the application.

Conclusion

The availability of business-critical workloads can be greatly improved by making the most of Aurora’s fast failover capability. In this post, we discussed issues that might prolong application downtime during failovers, and presented a number of solutions. In general, RDS Proxy is the most complete solution that should work well with a variety of applications and client types. AWS JDBC drivers are a good option if your application is using Java programming language. Lastly, targeted improvements in the application’s error handling logic may help in the absence of an abstraction layer such as a proxy.

If you have any comments or questions, leave them in the comments section.

About the Authors

Lili Ma is a Database Solutions Architect at AWS, with over 10 years of experience in the database industry. Lili has been involved in the R&D of the Hadoop/Hive NoSQL database, enterprise-level database DB2, distributed data warehouse Greenplum/Apache HAWQ, and Amazon’s cloud-native databases such as Amazon Aurora, Amazon ElastiCache and Amazon MemoryDB.

Szymon Komendera is a Database Solutions Architect at AWS, with nearly 20 years of experience in databases, software development, and application availability. He spent the majority of his 8-year AWS tenure developing Aurora MySQL, and supporting other AWS databases such as Amazon Redshift and Amazon ElastiCache.