R is a popular analytic programming language used by data scientists and analysts to perform data processing, conduct statistical analyses, create data visualizations, and build machine learning (ML) models. RStudio, the integrated development environment for R, provides open-source tools and enterprise-ready professional software for teams to develop and share their work across their organization. Building, securing, scaling, and maintaining RStudio yourself is, however, tedious and cumbersome.

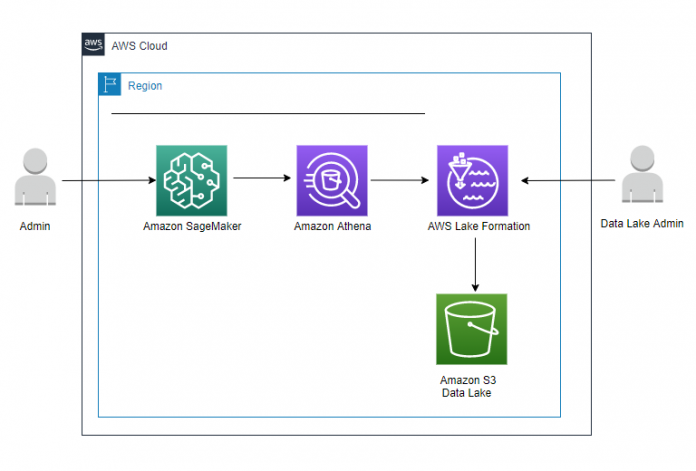

Implementing the RStudio environment in AWS provides elasticity and scalability that you don’t have when deploying on premises, and it removes the need to manage that infrastructure. You can select the desired compute and memory based on processing requirements, and you can scale up or down to work with analytical and ML workloads of different sizes without an upfront investment. This lets you quickly experiment with new data sources and code, and roll out new analytics processes and ML models to the rest of the organization. You can also integrate your data lake resources to make them available to developers and data scientists, and secure the data by using row-level and column-level access controls from AWS Lake Formation.

This post presents two ways to deploy and run RStudio on AWS to access data stored in a data lake:

Fully managed on Amazon SageMaker

RStudio on Amazon SageMaker is a managed service option that lets you avoid managing the underlying infrastructure for your RStudio environment. You can bring your own RStudio Workbench license using AWS License Manager.

You can also use the integration of RStudio on Amazon SageMaker with AWS Identity and Access Management (IAM) or AWS IAM Identity Center (successor to AWS Single Sign-On) to implement user-level access controls. As we show later in this post, you can secure your data lake by using row-level and column-level access controls from AWS Lake Formation.

RStudio on Amazon SageMaker enables you to dynamically choose an instance with desired compute and memory from a wide array of ML instances available on SageMaker.

Self-hosted on Amazon Elastic Compute Cloud (Amazon EC2)

You can choose to deploy the open-source version of RStudio using an EC2-hosted approach, which we also describe in this post. The self-hosted option requires the administrator to create an EC2 instance and install RStudio manually or by using an AWS CloudFormation template. There is also less flexibility for implementing user-access controls in this option, because all users have the same access level in this type of implementation.

RStudio on Amazon SageMaker

You can launch RStudio Workbench from SageMaker with a single click. With SageMaker, customers don’t bear the operational overhead of building, installing, securing, scaling, and maintaining RStudio. They don’t pay for the continuously running RStudio Server (if they use t3.medium), and they pay for RSession compute only when they use it. RStudio users can scale compute dynamically by switching instances on the fly. Running RStudio on SageMaker requires an administrator to establish a SageMaker domain and associated user profiles. You also need an appropriate RStudio license.

Within SageMaker, you can grant access at the RStudio administrator and RStudio user level, with differing permissions. Only user profiles granted one of these two roles can access RStudio in SageMaker. For more information about administrator tasks for setting up RStudio on SageMaker, refer to Get started with RStudio on Amazon SageMaker. That post also shows the process of selecting EC2 instances for each session, and how the administrator can restrict EC2 instance options for RStudio users.

Use Lake Formation row-level and column-level security access

In addition to allowing your team to launch RStudio sessions on SageMaker, you can also secure the data lake by using row-level and column-level access controls from Lake Formation. For more information, refer to Effective data lakes using AWS Lake Formation, Part 4: Implementing cell-level and row-level security.

Through Lake Formation security controls, you can make sure that each person has the right access to the data in the data lake. Consider the following two user profiles in the SageMaker domain, each with a different execution role:

| User Profile | Execution Role |
| --- | --- |
| rstudiouser-fullaccess | AmazonSageMaker-ExecutionRole-FullAccess |
| rstudiouser-limitedaccess | AmazonSageMaker-ExecutionRole-LimitedAccess |

The following screenshot shows the rstudiouser-limitedaccess profile details.

The following screenshot shows the rstudiouser-fullaccess profile details.

The dataset used for this post is a COVID-19 public dataset. The following screenshot shows an example of the data:

After you create the user profile and assign it to the appropriate role, you can access Lake Formation to crawl the data with AWS Glue, create the metadata and table, and grant access to the table data. For the AmazonSageMaker-ExecutionRole-FullAccess role, you grant access to all of the columns in the table, and for AmazonSageMaker-ExecutionRole-LimitedAccess, you grant access using the data filter USA_Filter. We use this filter to provide row-level and cell-level column permissions (see the Resource column in the following screenshot).

As shown in the following screenshot, the second role has limited access. Users associated with this role can only access the continent, date, total_cases, total_deaths, new_cases, new_deaths, and iso_code columns.

Fig6: AWS Lake Formation column-level permissions for the AmazonSageMaker-ExecutionRole-LimitedAccess role
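The console steps above can also be sketched in R with Paws, which may help when you need to repeat the grant across accounts. This is a minimal sketch, not the exact setup used in this post: the row filter expression `iso_code = 'USA'` is an assumption (the post only states that the filter returns USA data), and the account ID, database name, and table name are placeholders you supply.

```r
# Columns the limited-access role may read (taken from the post).
limited_columns <- c("continent", "date", "total_cases", "total_deaths",
                     "new_cases", "new_deaths", "iso_code")

# Build the TableData argument for a Lake Formation data cells filter.
# ASSUMPTION: the row filter expression is illustrative; adjust it to the
# column that identifies the country in your table.
build_usa_filter <- function(account_id, database, table,
                             row_expression = "iso_code = 'USA'") {
  list(
    TableCatalogId = account_id,
    DatabaseName   = database,
    TableName      = table,
    Name           = "USA_Filter",
    RowFilter      = list(FilterExpression = row_expression),
    ColumnNames    = as.list(limited_columns)
  )
}

# Create the filter and grant SELECT on it to the limited-access role.
# Needs the paws package and Lake Formation administrator permissions.
grant_limited_access <- function(role_arn, account_id, database, table) {
  lf <- paws::lakeformation()
  filter <- build_usa_filter(account_id, database, table)
  lf$create_data_cells_filter(TableData = filter)
  lf$grant_permissions(
    Principal = list(DataLakePrincipalIdentifier = role_arn),
    Resource = list(DataCellsFilter = list(
      TableCatalogId = account_id,
      DatabaseName   = database,
      TableName      = table,
      Name           = filter$Name
    )),
    Permissions = list("SELECT")
  )
}
```

Keeping the filter definition in a separate function makes it possible to inspect the request before anything is sent to Lake Formation.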

With role permissions attached to each user profile, we can see how Lake Formation enforces the appropriate row-level and column-level permissions. To open RStudio Workbench, find the user in the user list and choose RStudio from the Launch app drop-down menu.

In the following screenshot, we launch the app as the rstudiouser-limitedaccess user.

You can see the RStudio Workbench home page and a list of sessions, projects, and published content.

Choose a session name to start the session in SageMaker. Install Paws (see Access AWS services from RStudio later in this post) so that you can access the appropriate AWS services. Now you can run a query to pull all of the fields from the dataset via Amazon Athena, using the command SELECT * FROM "databasename"."tablename", and store the query output in an Amazon Simple Storage Service (Amazon S3) bucket.
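The query step above might look like the following when run from the RStudio session with Paws. Treat it as a sketch under stated assumptions: the database name, table name, and S3 output location are placeholders, and the helper names `build_query_request` and `run_athena_query` are ours, not part of Paws.

```r
# Build the arguments for Athena's StartQueryExecution call.
# database, table, and output_location are placeholders you supply.
build_query_request <- function(database, table, output_location) {
  list(
    QueryString = sprintf('SELECT * FROM "%s"."%s"', database, table),
    QueryExecutionContext = list(Database = database),
    ResultConfiguration = list(OutputLocation = output_location)
  )
}

# Start the query and poll until Athena reports a terminal state.
# Needs the paws package; credentials come from the session's role.
run_athena_query <- function(database, table, output_location,
                             poll_seconds = 2) {
  athena <- paws::athena()
  request <- build_query_request(database, table, output_location)
  started <- do.call(athena$start_query_execution, request)
  repeat {
    execution <- athena$get_query_execution(
      QueryExecutionId = started$QueryExecutionId
    )
    state <- execution$QueryExecution$Status$State
    if (state %in% c("SUCCEEDED", "FAILED", "CANCELLED")) break
    Sys.sleep(poll_seconds)
  }
  list(id = started$QueryExecutionId, state = state)
}
```

For example, `run_athena_query("databasename", "tablename", "s3://your-bucket/athena-results/")` would write the result files under that S3 prefix. Because the query runs with the user profile's execution role, Lake Formation applies that role's row and column permissions to the result.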

The following screenshot shows the output files in the S3 bucket.

The following screenshot shows the data in these output files using Amazon S3 Select.

Only USA data and columns continent, date, total_cases, total_deaths, new_cases, new_deaths, and iso_code are shown in the result for the rstudiouser-limitedaccess user.

Let’s repeat the same steps for the rstudiouser-fullaccess user.

You can see the RStudio Workbench home page and a list of sessions, projects, and published content.

Let’s run the same query, SELECT * FROM "databasename"."tablename", using Athena.

The following screenshot shows the output files in the S3 bucket.

The following screenshot shows the data in these output files using Amazon S3 Select.

As shown in this example, the rstudiouser-fullaccess user has access to all the columns and rows in the dataset.

Self-Hosted on Amazon EC2

If you want to start experimenting with RStudio’s open-source version on AWS, you can install RStudio on an EC2 instance. The CloudFormation template provided in this post provisions the EC2 instance and installs RStudio using the user data script. You can run the template multiple times to provision multiple RStudio instances as needed, and you can use it in any AWS Region. After you deploy the CloudFormation template, it provides you with a URL to access RStudio from a web browser. Amazon EC2 enables you to scale up or down to handle changes in data size and the necessary compute capacity to run your analytics.

Create a key pair for secure access

AWS uses public-key cryptography to secure the login information for your EC2 instance. You specify the name of the key pair in the KeyPair parameter when you launch the CloudFormation template. Then you can use the same key to log in to the provisioned EC2 instance later if needed.

Before you run the CloudFormation template, make sure that you have the Amazon EC2 key pair in the AWS account that you’re planning to use. If not, then refer to Create a key pair using Amazon EC2 for instructions to create one.

Launch the CloudFormation template

Sign in to the CloudFormation console in the us-east-1 Region and choose Launch Stack.

You must enter several parameters into the CloudFormation template:

InitialUser and InitialPassword – The user name and password that you use to log in to the RStudio session. The default values are rstudio and Rstudio@123, respectively.

InstanceType – The EC2 instance type on which to deploy the RStudio server. The template currently accepts all instances in the t2, m4, c4, r4, g2, p2, and g3 instance families, and can incorporate other instance families easily. The default value is t2.micro.

KeyPair – The key pair you use to log in to the EC2 instance.

VpcId and SubnetId – The Amazon Virtual Private Cloud (Amazon VPC) and subnet in which to launch the instance.

After you enter these parameters, deploy the CloudFormation template. When it’s complete, the following resources are available:

An EC2 instance with RStudio installed on it.

An IAM role with necessary permissions to connect to other AWS services.

A security group with rules to open up port 8787 for the RStudio Server.
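If you prefer to launch the stack from code rather than the console, the parameters above map onto a CloudFormation `create_stack` call. The sketch below uses Paws and makes several assumptions: the template URL and stack name are placeholders (the post does not give them here), and `CAPABILITY_IAM` is assumed to be sufficient because the template creates an IAM role; a template with named IAM resources would need `CAPABILITY_NAMED_IAM` instead.

```r
# Convert a named list of values into CloudFormation's Parameters format.
build_stack_parameters <- function(values) {
  lapply(names(values), function(key) {
    list(ParameterKey = key, ParameterValue = values[[key]])
  })
}

# Launch the stack in us-east-1. Needs the paws package.
# stack_name and template_url are placeholders you supply.
deploy_rstudio_stack <- function(stack_name, template_url, values) {
  cfn <- paws::cloudformation(config = list(region = "us-east-1"))
  cfn$create_stack(
    StackName    = stack_name,
    TemplateURL  = template_url,
    Parameters   = build_stack_parameters(values),
    Capabilities = list("CAPABILITY_IAM")  # ASSUMPTION: see the note above
  )
}

# Parameter names come from the post; the values shown are examples only.
example_values <- list(
  InitialUser     = "rstudio",
  InitialPassword = "choose-your-own-password",
  InstanceType    = "t2.micro",
  KeyPair         = "your-key-pair-name",
  VpcId           = "your-vpc-id",
  SubnetId        = "your-subnet-id"
)
```

Choosing your own InitialPassword instead of keeping the template default is a sensible precaution, because the RStudio login page is reachable on port 8787.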

Log in to RStudio

Now you’re ready to use RStudio! Go to the Outputs tab for the CloudFormation stack and copy the RStudio URL value (it’s in the format http://ec2-XX-XX-XXX-XX.compute-1.amazonaws.com:8787/). Enter that URL in a web browser. This opens your RStudio session, which you can log into using the same user name and password that you provided while running the CloudFormation template.
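The same URL can be read programmatically from the stack outputs. The sketch below assumes only what the post states, that the URL ends in port 8787, and searches the outputs for that port because the output key name is not given here. The stack name is a placeholder, and the helper names are ours.

```r
# Pick the first stack output whose value mentions port 8787.
# outputs is the Outputs list returned by CloudFormation's DescribeStacks.
find_rstudio_url <- function(outputs) {
  values <- vapply(outputs, function(o) o$OutputValue, character(1))
  matches <- values[grepl(":8787", values, fixed = TRUE)]
  if (length(matches) > 0) matches[[1]] else NA_character_
}

# Look up the stack and return its RStudio URL. Needs the paws package.
get_rstudio_url <- function(stack_name) {
  cfn <- paws::cloudformation(config = list(region = "us-east-1"))
  stack <- cfn$describe_stacks(StackName = stack_name)$Stacks[[1]]
  find_rstudio_url(stack$Outputs)
}
```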

Access AWS services from RStudio

After you access the RStudio session, you should install the R Package for AWS (Paws). This lets you connect to many AWS services, including the services and resources in your data lake. To install Paws, run install.packages("paws") in the RStudio console.

To use an AWS service, create a client and access the service’s operations from that client. When accessing AWS APIs, you must provide your credentials and Region. Paws searches for the credentials and Region using the AWS authentication chain:

Explicitly provided access key, secret key, session token, profile, or Region

R environment variables

Operating system environment variables

AWS shared credentials and configuration files in .aws/credentials and .aws/config

Container IAM role

Instance IAM role

Because you’re running on an EC2 instance with an attached IAM role, Paws automatically uses your IAM role credentials to authenticate AWS API requests.
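As a quick check that the credential chain resolves to the role you expect, you can ask AWS Security Token Service (AWS STS) who the caller is. This is a minimal sketch: the Region default is an assumption, and `role_from_arn` is a small helper of ours that parses the assumed-role ARN format.

```r
# Extract the role name from an assumed-role ARN, for example
# arn:aws:sts::<account>:assumed-role/<role-name>/<session-name>.
# Returns NA for ARNs that are not assumed roles (such as IAM users).
role_from_arn <- function(arn) {
  if (!grepl(":assumed-role/", arn, fixed = TRUE)) return(NA_character_)
  strsplit(arn, "/", fixed = TRUE)[[1]][[2]]
}

# Ask STS which identity Paws resolved from the credential chain.
# Needs the paws package; the Region default is an assumption.
current_role <- function(region = "us-east-1") {
  sts <- paws::sts(config = list(region = region))
  role_from_arn(sts$get_caller_identity()$Arn)
}
```

Calling `current_role()` on the EC2 instance should return the name of the IAM role that the CloudFormation template attached to it.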

For production environments, we recommend using the scalable RStudio solution outlined in this blog.

Conclusion

You learned how to deploy your RStudio environment in AWS. We demonstrated the advantages of using RStudio on Amazon SageMaker and how you can get started. You also learned how to quickly begin experimenting with the open-source version of RStudio with a self-hosted installation on Amazon EC2. We also demonstrated how to integrate RStudio into your data lake architectures and implement fine-grained access control on a data lake table using the row-level and cell-level security feature of Lake Formation.

In our next post, we will demonstrate how to containerize R scripts and run them using AWS Lambda.

About the authors

Venkata Kampana is a Senior Solutions Architect in the AWS Health and Human Services team and is based in Sacramento, CA. In that role, he helps public sector customers achieve their mission objectives with well-architected solutions on AWS.

Dr. Dawn Heisey-Grove is the public health analytics leader for Amazon Web Services’ state and local government team. In this role, she’s responsible for helping state and local public health agencies think creatively about how to address their analytics challenges and achieve their long-term goals. She’s spent her career finding new ways to use existing or new data to support public health surveillance and research.

Read More: AWS Machine Learning Blog