In this post, we test Oracle GoldenGate (OGG) bidirectional replication between two Amazon Relational Database Service (Amazon RDS) for Oracle instances. This can allow mission-critical applications to be highly available across Regions and provide data redundancy and resilience.

Active-active replication allows multiple database instances with the same application tables to support read/write operations running independently with changes synchronized between them.

A common scenario for active-active database replication is to deploy a single application across the globe to improve the performance of the database across different Regions. In this case, the user queries are routed to the nearest Region, and any data changes are asynchronously replicated to the other Regions by the database layer, ensuring both visibility of these changes globally and acting as high availability and disaster recovery copies in the case of outages. Active-active replication is a core component of Oracle’s platinum Maximum Availability Architecture definition, which can be used to achieve zero downtime and zero data loss.

OGG is a solution that allows you to replicate, filter, and transform data from one database to another database. Due to their existing OGG licenses or DBA expertise, many AWS customers are looking to use their existing OGG software and knowledge to augment and enhance their AWS database deployments.

This post covers the concepts and methodology to set up multiple RDS for Oracle instances and use OGG bidirectional replication to synchronize databases either within a single Region or across multiple Regions according your requirements. This can allow mission-critical applications to be highly available across Regions and provide data redundancy and resilience.

Solution overview

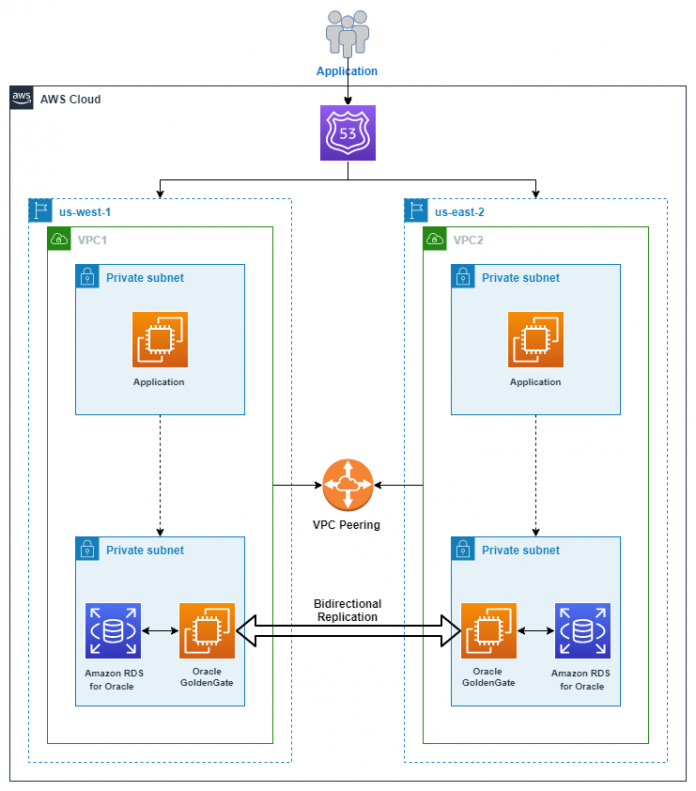

The following diagram shows the architecture for our solution.

The architecture consists of the following components:

An instance type. The one you choose depends on your workloads. In this post, we use us-west-1 and us-east-2 Regions.

OGG running on Amazon Elastic Compute Cloud (Amazon EC2) instances in the same Region as the databases.

An established VPC peering between two VPCs.

The establishment of Amazon Route 53, which connects users to the application.

Prerequisites

To follow along, complete the following prerequisites:

Create two EC2 instances (Amazon Linux 2) configured with OGG in the source and target database Regions.

Set up a VPC peering connection between VPCs in each Region.

Install the latest Oracle client on both EC2 instances. For instructions, refer to the Oracle client documentation.

Install OGG version 19c or above on both EC2 instances. For instructions, refer to Setting up Oracle GoldenGate.

Configure a security group to allow OGG processes in one Region to communicate with OGG processes in the other Regions. For instructions on installing OGG in a high availability configuration, see Implement Oracle GoldenGate high availability in the AWS Cloud.

Set up an Amazon Simple Storage Service (Amazon S3) bucket in the source Region and enable cross-Region replication to the target Region.

Create the AWS Identity and Access Management (IAM) role RDSS3IntegrationRole with PutObject, GetObject, and ListBucket access to Region-specific S3 buckets.

Oracle GoldenGate and some RDS for Oracle environments on AWS require the customer to provide their own license. For more information, refer to RDS for Oracle licensing options.

Review Considerations for an Active-Active Configuration when implementing an active-active application.

Set up RDS for Oracle databases in both Regions

Complete the followings steps to set up your databases:

Create an RDS for Oracle database in each Region. For instructions on creating database instances, see Creating an Oracle DB instance and connecting to a database on an Oracle DB instance.

Set up a source database for use with GoldenGate on Amazon RDS.

Set up a target database for use with GoldenGate on Amazon RDS.

Add the S3_INTEGRATION option to the RDS for Oracle database in the source and target Regions for the initial load using the Oracle Data Pump expdp/impdp process.

Assign the RDSS3IntegrationRole IAM role to the RDS for Oracle instance in the source and target Regions for the initial load of tables using expdp/impdp.

Configure OGG on the source database

In this section, we describe the tasks to configure OGG for the source database:

Log in to the Amazon EC2 host where OGG is installed.

Set the following environment variables in .bash_profile (Note: Change ORACLE_BASE, ORACLE_HOME and OGG_HOME as per your standards.):

# Oracle Settings

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.3.0.0/db_1

export PATH=$ORACLE_HOME/bin:$PATH

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export OGG_HOME=/u01/app/ogg

alias cdg=’cd $OGG_HOME’

Start the GoldenGate command line interface (ggsci) and run the following commands to create the necessary subdirectories:

cdg

./ggsci

GGSCI> create subdirs

Create a manager parameter file. Select an unused port for the manager process:

GGSCI> edit param mgr

port 7810

purgeoldextracts dirdat/*, usecheckpoints, minkeepdays 2

lagcriticalhours 1

laginfominutes 30

lagreportminutes 15

downreportminutes 30

autostart er *

autorestart er *, retries 5, waitminutes 2, resetminutes 10

# start the manager process

GGSCI >start mgr

To learn more about the parameters, refer to Summary of Manager Parameters.

Create the OGG wallet file to store encryption keys:

GGSCI> create wallet

Created wallet.

Opened wallet.

GGSCI> add masterkey

GGSCI> info masterkey

Master encryption key name: OGG_DEFAULT_MASTERKEY

Version Creation Date Status

1 2022-08-31T20:52:20.000+00:00 Current

Create credentialstore for securely storing database user passwords:

GGSCI> add credentialstore

GGSCI> alter credentialstore add user ggs_admin@west_orcl password xxxxx alias west_orcl

Credential store altered.

GGSCI> info credentialstore

Reading from credential store:

Default domain: OracleGoldenGate

Alias: west_orcl

Userid: ggs_admin@west_orcl

GGSCI> dblogin USERIDALIAS west_orcl

Successfully logged into database.

Add a heartbeat and checkpoint table:

GGSCI > add heartbeattable

GGSCI > add checkpointtable ggs_admin.checkpoint

Enable table-level supplemental logging on the schema:

GGSCI> ADD SCHEMATRANDATA dms_sample

Create an extract group to fetch incremental changes. The extract group name can be eight characters long and needs to be unique across a single configuration.The DMS_SAMPLE schema is a sample database for sporting event ticketing application. We’re using some of the tables in the application for demonstration purposes.

GGSCI> edit param esrc

setenv (ORACLE_HOME=”/u01/app/oracle/product/19.3.0.0/db_1″)

setenv (TNS_ADMIN=”/u01/app/oracle/product/19.3.0.0/db_1/network/admin”)

extract esrc

useridalias west_orcl

tranlogoptions integratedparams (max_sga_size 1024)

tranlogoptions excludeuser ggs_admin

exttrail dirdat/es

cachemgr cachesize 1gb, cachedirectory ./dirtmp

table dms_sample.seat;

table dms_sample.person;

table dms_sample.player;

table dms_sample.sporting_event &

getbeforecols(on update all , on delete all );

table dms_sample.sport_location;

table dms_sample.sport_team;

table dms_sample.seat_type;

table dms_sample.sport_type;

table dms_sample.ticket_purchase_hist &

getbeforecols(on update all , on delete all );

To learn more about OGG bidirectional replication for Oracle, see Configuring Oracle GoldenGate for Active-Active Configuration. To prevent data looping, we set tranlogoptions excludeuser ggs_admin in the extract parameter file for the extract process to be aware of the Replicat database user and exclude its transactions.

Before starting the OGG extract, you must register it with the corresponding database:

GGSCI> register extract esrc database

# Create extract

GGSCI> add extract esrc tranlog, integrated tranlog, begin now

# Create Local Trail File

GGSCI> add exttrail dirdat/es extract esrc, megabytes 10

Configure the OGG Pump process to send the extracted trail files to the target EC2 instance. We have decoupled the Extract and Pump processes to allow the extract to continue to pull changes even if there is a network issue between the source and target Regions. To create the Data Pump file, specify the target EC2 OGG hub instance host name/IP in the RMTHOST section:

GGSCI> edit param psrc

extract psrc

useridalias west_orcl

discardfile dirrpt/psrc.dsc, append, megabytes 10

rmthost <gg-remote-host>, mgrport 7810, timeout 30

rmttrail dirdat/es

passthru

table dms_sample.*;

# Create extract pump

GGSCI> add extract psrc, exttrailsource dirdat/es

# Create remote trail file location

GGSCI> add rmttrail dirdat/es, extract psrc

Start the Extract and Data Pump processes on the source database:

# Start Extract

GGSCI> start esrc

# Start Extract Pump

GGSCI> start psrc

Check the status of the processes:

GGSCI> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

EXTRACT RUNNING ESRC 00:00:00 00:00:02

EXTRACT RUNNING PSRC 00:00:00 00:00:05

Perform the initial data load from the source database to the target database

For OGG versions 12.2 and above, the FLASHBACK_SCN parameter is no longer required when the Replicat parameter DBOPTIONS ENABLE_INSTANTIATION_FILTERING is enabled. Data Pump and OGG have a tighter integration. The CSN for each table is captured on a Data Pump export. The CSN is then applied to system tables and views on the target database on an import. These views and system tables are referenced by Replicat when applying data to the target database.

Make sure there is sufficient undo space available to run through the export. If the production system is very busy and there is no disk space available to add any undo space, but you can configure a duplicate system, then you can use that duplicate system to run a transactionally consistent export. (Assuming the duplicate system will be idle, there’s no need to use FLASHBACK_SCN.)

During this initial load process, the source database (where all users are connected) can remain open for read/write activity. However, while we configure the bidirectional replication, users should not connect to the target database until all records are synchronized.

Export tables to be set up for active-active replication:

expdp admin@west_orcl DIRECTORY=DATA_PUMP_DIR DUMPFILE=filename_%U.dmp LOGFILE=schema_expdp.log schemas=dms_sample

Validate export datapump log file for any errors.

# Check instantiated scn

sqlplus admin@west_orcl

SQL> select table_name, scn from dba_capture_prepared_tables where table_owner = ‘DMS_SAMPLE’;

Until the first export of the tables, dba_capture_prepared_tables doesn’t get populated. The system change number (SCN) is the smallest SCN for which the table can be instantiated. It isn’t the export SCN.

Copy the file from DATA_PUMP_DIR to Amazon S3:

SELECT rdsadmin.rdsadmin_s3_tasks.upload_to_s3(

p_bucket_name => ‘<bucket_name>‘,

p_prefix => ”,

p_s3_prefix => ”,

p_directory_name => ‘DATA_PUMP_DIR’)

AS TASK_ID FROM DUAL;

Make sure that the upload to the S3 bucket is successful:

aws s3 ls s3://<bucket_name> –recursive –human-readable –summarize

When Amazon S3 replicates the data from the source to target Region, copy the Data Pump files from Amazon S3:

S3 to DATA_PUMP_DIR:

# Copy file from S3 in target region to DATA_PUMP_DIR

SELECT rdsadmin.rdsadmin_s3_tasks.download_from_s3(

p_bucket_name => ‘<bucket_name>‘,

p_s3_prefix => ”,

p_directory_name => ‘DATA_PUMP_DIR’)

AS TASK_ID FROM DUAL;

Make sure that the download from the S3 bucket is successful:

SELECT * FROM TABLE(rdsadmin.rds_file_util.listdir(p_directory => ‘PRODUCT_DESCRIPTIONS’));

Import data in the target Region:

impdp admin@east_orcl DIRECTORY=DATA_PUMP_DIR DUMPFILE=filename_%U.dmp LOGFILE=schema_impdp.log schemas=dms_sample

Check the import Data Pump log file for any errors.

Configure OGG on the target database

Repeat the steps to configure OGG on the source database on the target EC2 instance. Make sure to change the following configurations in the target OGG hub instance:

Use TGT instead of SRC as the suffix in the OGG process names

Point OGG to the Region-specific database instance

Set up Replicat in target database

To set up Replicat in the target database, use the following code:

Log in to the Amazon EC2 host where OGG is installed.

Set the following environment variables in .bash_profile (Note: Change ORACLE_BASE, ORACLE_HOME and OGG_HOME as per your standards.):

# Oracle Settings

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.3.0.0/db_1

export PATH=$ORACLE_HOME/bin:$PATH

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export OGG_HOME=/u01/app/ogg

alias cdg=’cd $OGG_HOME’

Start the GoldenGate command line interface (ggsci):

cdg

./ggsci

Set up the Replicat file on the target:

edit param rtgt

setenv (ORACLE_HOME=”/u01/app/oracle/product/19.3.0.0/db_1″)

setenv (TNS_ADMIN=”/u01/app/oracle/product/19.3.0.0/db_1/network/admin”)

replicat rtgt

useridalias east_orcl

discardfile dirrpt/rtgt.dsc, append, megabytes 10

reportcount every 30 minutes, rate

reportrollover at 00:01 on sunday

discardrollover at 00:01 on sunday

batchsql

dboptions enable_instantiation_filtering

— include ddl for mapped objects only

ddl include mapped;

ddloptions report

— Update metadata after DDL changes have been applied to source

ddloptions updatemetadata

map dms_sample.seat, target dms_sample.seat;

map dms_sample.person, target dms_sample.person;

map dms_sample.player, target dms_sample.player;

map dms_sample.sporting_event , target dms_sample.sporting_event, &

comparecols (on update keyincluding (start_date_time), on delete keyincluding (start_date_time)), &

resolveconflict (updaterowexists, (default, usemax (start_date_time))), &

resolveconflict (insertrowexists, (default, usemax (start_date_time))), &

resolveconflict (deleterowexists, (default, ignore)), &

resolveconflict (updaterowmissing, (default, overwrite)), &

resolveconflict (deleterowmissing, (default, discard));

map dms_sample.sport_location, target dms_sample.sport_location;

map dms_sample.sport_team, target dms_sample.sport_team;

map dms_sample.seat_type, target dms_sample.seat_type;

map dms_sample.sport_type, target dms_sample.sport_type;

map dms_sample.ticket_purchase_hist , target dms_sample.ticket_purchase_hist, &

comparecols (on update keyincluding (transaction_date_time), on delete keyincluding (transaction_date_time)), &

resolveconflict (updaterowexists, (default, usemax (transaction_date_time))), &

resolveconflict (insertrowexists, (default, usemax (transaction_date_time))), &

resolveconflict (deleterowexists, (default, ignore)), &

resolveconflict (updaterowmissing, (default, overwrite)), &

resolveconflict (deleterowmissing, (default, discard));

Add Replicat on the target:

GGSCI> add replicat rtgt, exttrail dirdat/es, checkpointtable ggs_admin.checkpoint

Start Replicat on the target:

GGSCI> start replicat rtgt

Check the status:

GGSCI (ip-10-5-3-135.us-east-2.compute.internal) 4> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

EXTRACT RUNNING ETGT 00:00:00 00:00:01

EXTRACT RUNNING PTGT 00:00:00 00:00:02

REPLICAT RUNNING RTGT 00:00:00 00:00:06

Set up Replicat in the source database

Use the following code to set up Replicat in the source database:

Log in to the Amazon EC2 host where OGG is installed.

Set the following environment variables in .bash_profile (Note: Change ORACLE_BASE, ORACLE_HOME and OGG_HOME as per your standards.):

# Oracle Settings

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.3.0.0/db_1

export PATH=$ORACLE_HOME/bin:$PATH

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export OGG_HOME=/u01/app/ogg

alias cdg=’cd $OGG_HOME’

Start the GoldenGate command line interface (ggsci):

Setup replicat file on source

cdg

./ggsci

Set up the Replicat file on the source:

edit param rsrc

setenv (ORACLE_HOME=”/u01/app/oracle/product/19.3.0.0/db_1”)

setenv (TNS_ADMIN=”/u01/app/oracle/product/19.3.0.0/db_1/network/admin”)

replicat rsrc

useridalias west_orcl

discardfile dirrpt/rsrc.dsc, append, megabytes 10

reportcount every 30 minutes, rate

reportrollover at 00:01 on Sunday

discardrollover at 00:01 on Sunday

dboptions enable_instantiation_filtering

— include ddl for mapped objects only

ddl include mapped;

ddloptions report

— Update metadata after DDL changes have been applied to source

ddloptions updatemetadata

map dms_sample.seat, target dms_sample.seat;

map dms_sample.person, target dms_sample.person;

map dms_sample.player, target dms_sample.player;

map dms_sample.sporting_event , target dms_sample.sporting_event, &

comparecols (on update keyincluding (start_date_time), on delete keyincluding (start_date_time)), &

resolveconflict (updaterowexists, (default, usemax (start_date_time))), &

resolveconflict (insertrowexists, (default, usemax (start_date_time))), &

resolveconflict (deleterowexists, (default, ignore)), &

resolveconflict (updaterowmissing, (default, overwrite)), &

resolveconflict (deleterowmissing, (default, discard));

map dms_sample.sport_location, target dms_sample.sport_location;

map dms_sample.sport_team, target dms_sample.sport_team;

map dms_sample.seat_type, target dms_sample.seat_type;

map dms_sample.sport_type, target dms_sample.sport_type;

map dms_sample.ticket_purchase_hist , target dms_sample.ticket_purchase_hist, &

comparecols (on update keyincluding (transaction_date_time), on delete keyincluding (transaction_date_time)), &

resolveconflict (updaterowexists, (default, usemax (transaction_date_time))), &

resolveconflict (insertrowexists, (default, usemax (transaction_date_time))), &

resolveconflict (deleterowexists, (default, ignore)), &

resolveconflict (updaterowmissing, (default, overwrite)), &

resolveconflict (deleterowmissing, (default, discard)); resolveconflict (deleterowexists, (default, ignore)), &

resolveconflict (updaterowmissing, (default, overwrite)), &

resolveconflict (deleterowmissing, (default, discard));

Add Replicat on the source:

GGSCI> add replicat rsrc, exttrail dirdat/et, checkpointtable ggs_admin.checkpoint

Start Replicat on the source:

GGSCI> start replicat rsrc

Check the status:

GGSCI (ip-10-10-5-109.us-west-1.compute.internal) 4> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

EXTRACT RUNNING ESRC 00:00:00 00:00:01

EXTRACT RUNNING PSRC 00:00:00 00:00:05

REPLICAT RUNNING RSRC 00:00:00 00:00:06

To enable conflict detection and resolution, you need to make a few changes to the Extract and Replicat configuration.

Extract changes

Edit the extract parameter file and set the GetBeforeCols option of the extract table parameter to specify columns for which you want the extract to capture the before image of an update. In the following code, we tell OGG to extract a before image for all columns on update:

table dms_sample.sporting_event &

getbeforecols(on update all , on delete all );

Replicat changes

We add the COMPARECOLS option to specify which columns are used to detect updates and delete conflicts. We use the RESOLVECONFLICT option to specify the conflict resolution method. In the following example, OGG persists the record with the latest transaction_date_time value:

map dms_sample.sporting_event , target dms_sample.sporting_event, &

comparecols (on update keyincluding (start_date_time), on delete keyincluding (start_date_time)), &

resolveconflict (updaterowexists, (default, usemax (start_date_time))), &

resolveconflict (insertrowexists, (default, usemax (start_date_time))), &

resolveconflict (deleterowexists, (default, ignore)), &

resolveconflict (updaterowmissing, (default, overwrite)), &

resolveconflict (deleterowmissing, (default, discard));

For more information about these rules, refer to CDR Example 1: All Conflict Types with USEMAX, OVERWRITE, DISCARD.

Testing replication

Create the following tables for testing:

CREATE TABLE dms_sample.person(

id DOUBLE NOT NULL,

full_name varchar(60) NOT NULL,

last_name varchar(30),

first_name varchar(30)

);

create table sporting_event

(id NUMBER NOT NULL,

sport_type_name VARCHAR2(15) NOT NULL

constraint se_sport_type_fk references sport_type(name),

home_team_id NUMBER NOT NULL

constraint se_home_team_id_fk references sport_team(id),

away_team_id NUMBER NOT NULL

constraint se_away_team_id_fk references sport_team(id),

location_id NUMBER NOT NULL

constraint se_location_id_fk references sport_location(id),

start_date_time DATE NOT NULL,

sold_out NUMBER(1) DEFAULT 0 NOT NULL,

constraint chk_sold_out CHECK (sold_out IN (0,1)),

constraint sporting_event_pk primary key(id)

);

Insert test

Use the dms_sample.person table for your one-way replication tests.

Connect to the source database and insert one row:

WEST_ORCL> insert into dms_sample.person values (7055279,’Sean Adams’,’Adams’,’Sean’);

1 row created.

WEST_ORCL> commit;

Commit complete.

WEST_ORCL> select * from dms_sample.person where id=7055279;

ID FULL_NAME LAST_NAME FIRST_NAME

———- ———— ————- ————–

7055279 Sean Adams Adams Sean

On the target database, verify the replicated data:

EAST_ORCL> select * from dms_sample.person where id=7055279;

ID FULL_NAME LAST_NAME FIRST_NAME

———- ———— ————- ————–

7055279 Sean Adams Adams Sean

Now we test the reverse replication and insert data at the target database and check if it gets replicated to the source.

Connect to the target database and insert the following data:

EAST_ORCL> insert into dms_sample.person values (7055280,’John Doe’,’Doe’,’John’);

1 row created.

EAST_ORCL>commit;

Commit complete.

Verify the data on the source:

WEST_ORCL> select * from dms_sample.person where id=7055280;

ID FULL_NAME LAST_NAME FIRST_NAME

———- —————- —————- ——————

7055280 John Doe Doe John

Conflict detection and resolution test

Use the dms_sample.sporting_event table for our conflict detection and resolution tests.

Connect to the source database and update a record but don’t commit:

WEST_ORCL> select * from SPORTING_EVENT where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

ID SPORT_TYPE_NAME HOME_TEAM_ID AWAY_TEAM_ID LOCATION_ID START_DATE_TIME SOLD_OUT

———- ————— ———— ———— ———– ——————- ———-

1 baseball 1 11 28 04/03/2021 17:00:00 0

WEST_ORCL> update SPORTING_EVENT set SOLD_OUT=1,START_DATE_TIME=sysdate where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

1 row updated.

WEST_ORCL> select * from SPORTING_EVENT where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

ID SPORT_TYPE_NAME HOME_TEAM_ID AWAY_TEAM_ID LOCATION_ID START_DATE_TIME SOLD_OUT

———- ————— ———— ———— ———– ——————- ———-

1 baseball 1 11 28 01/05/2023 21:34:37 1

Connect to the target database and update the same record but don’t commit:

EAST_ORCL> select * from SPORTING_EVENT where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

ID SPORT_TYPE_NAME HOME_TEAM_ID AWAY_TEAM_ID LOCATION_ID START_DATE_TIME SOLD_OUT

———- ————— ———— ———— ———– ——————- ———-

1 baseball 1 11 28 04/03/2021 17:00:00 0

EAST_ORCL> update SPORTING_EVENT set SOLD_OUT=1,START_DATE_TIME=sysdate where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

1 row updated.

EAST_ORCL> select * from SPORTING_EVENT where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

ID SPORT_TYPE_NAME HOME_TEAM_ID AWAY_TEAM_ID LOCATION_ID START_DATE_TIME SOLD_OUT

———- ————— ———— ———— ———– ——————- ———-

1 baseball 1 11 28 01/05/2023 21:35:13 1

The Extract, Pump, and Replicat processes are in the RUNNING state in the source and target environments. The UPDATE statement has the same ID and will cause a conflict. For a resolution mechanism, we used USEMAX(start_date_time). Because the start_date_time is most recent in the EAST_ORCL Region, the Replicat process applies this change to the database in the WEST_ORCL Region. USEMAX will force it to use the most recent start_date_time for an insert or update.

Run COMMIT in both the databases.

The row in the WEST_ORCL Region is updated with the latest change:

WEST_ORCL> select * from SPORTING_EVENT where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

ID SPORT_TYPE_NAME HOME_TEAM_ID AWAY_TEAM_ID LOCATION_ID START_DATE_TIME SOLD_OUT

———- ————— ———— ———— ———– ——————- ———-

1 baseball 1 11 28 01/05/2023 21:35:13 1

EAST_ORCL> select * from SPORTING_EVENT where HOME_TEAM_ID=1 and AWAY_TEAM_ID=11;

ID SPORT_TYPE_NAME HOME_TEAM_ID AWAY_TEAM_ID LOCATION_ID START_DATE_TIME SOLD_OUT

———- ————— ———— ———— ———– ——————- ———-

1 baseball 1 11 28 01/05/2023 21:35:13 1

The following code block shows the status of the CDR in OGG:

GGSCI (ip-10-10-5-109.us-west-1.compute.internal) 13> stats rsrc latest reportcdr

Sending STATS request to Replicat group RSRC …

Start of statistics at 2023-01-05 21:41:19.

DDL replication statistics:

*** Total statistics since replicat started ***

Operations 0.00

Mapped operations 0.00

Unmapped operations 0.00

Other operations 0.00

Excluded operations 0.00

Errors 0.00

Retried errors 0.00

Discarded errors 0.00

Ignored errors 0.00

Replicating from DMS_SAMPLE.SPORTING_EVENT to DMS_SAMPLE.SPORTING_EVENT:

*** Latest statistics since 2023-01-05 21:35:50 ***

Total inserts 0.00

Total updates 1.00

Total deletes 0.00

Total upserts 0.00

Total discards 0.00

Total operations 1.00

Total CDR conflicts 1.00

CDR resolutions succeeded 1.00

CDR UPDATEROWEXISTS conflicts 1.00

End of statistics.

Monitor with Amazon CloudWatch

To set up Amazon CloudWatch monitoring, use the following code:

Install the CloudWatch agent:

[ec2-user@ip-10-10-5-109 ~]$ sudo yum install amazon-cloudwatch-agent

Loaded plugins: extras_suggestions, langpacks, priorities, update-motd

amzn2-core | 3.7 kB 00:00:00

1 packages excluded due to repository priority protections

Package matching amazon-cloudwatch-agent-1.247354.0b251981-1.amzn2.x86_64 already installed. Checking for update.

Nothing to do

[ec2-user@ip-10-10-5-109 ~]$ rpm -qa |grep cloudwatch

amazon-cloudwatch-agent-1.247355.0b252062-1.x86_64

[ec2-user@ip-10-10-5-109 ~]$

Use the following CloudWatch config file for ggserr.log monitoring:

cat /opt/aws/amazon-cloudwatch-agent/bin/config.json

{

“agent”: {

“metrics_collection_interval”: 60,

“run_as_user”: “cwagent”

},

“logs”: {

“logs_collected”: {

“files”: {

“collect_list”: [

{

“file_path”: “/u01/app/ogg/ggserr.log”,

“log_group_name”: “GoldenGateLogs”,

“log_stream_name”: “{instance_id}”

}

]

}

}

}

}

Start CloudWatch monitoring for the ggserr.log file:

sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -a fetch-config -m ec2 -s -c file:/opt/aws/amazon-cloudwatch-agent/bin/config.json

The following screenshot shows the ggserr.log output in the CloudWatch log group.

Clean up

We recommend that you clean up all the resources that aren’t in use by deleting the RDS instances and deleting the EC2 instances.

Conclusion

In this post, we covered how to implement bidirectional replication for Amazon RDS for Oracle databases running in two different Regions with Oracle GoldenGate 19c.

If you have questions or suggestions, leave a comment.

About the authors

Jay Singh is a Database Consultant with the Professional Services Team at Amazon Web Services. He works as database migration specialist to help Amazon customers to move their on-premises database environments to AWS Cloud database solutions.

Chandan Acharya is a Database Consultant with the Professional Services Team at Amazon Web Services. He works as database migration specialist to help Amazon customers move their on-premises database environment to AWS cloud database solutions.

Wajid Ali Mir is a Database Consultant with AWS ProServe, Global Competency Centre. His background spans a wide depth and breadth of expertise and experience in SQL and NoSQL database technologies. He is an AWS Database Migration Service expert and has developed many successful database solutions addressing challenging business requirements for moving databases from on premises to Amazon RDS and Amazon Aurora PostgreSQL/MySQL.

Kishore Kumar Balasundaram is a Sr. Solutions Architect with WWPS. He has passion to help customers design modern cloud architecture and recommend the right services for their requirements. He understands business use cases and translates them to secured, scalable, and resilient IT solutions.

AWS Database Blog