Amazon Relational Database Service (Amazon RDS) Custom is a managed database service for legacy, custom, and packaged applications that require access to the underlying OS and DB environment. With RDS Custom for Oracle, you can now access and customize your database server host and operating system, for example by applying special patches and changing the database software settings to support third-party applications that require privileged access. Through the time-saving benefits of a managed service, Amazon RDS Custom for Oracle allows you to focus on more important business-impacting, strategic activities. By automating backups and other operational tasks, you can rest easy knowing your data is safe and ready to be recovered if needed. Finally, Amazon RDS Custom cloud-based scalability can help your database infrastructure keep pace as their business grows. For more information about Amazon RDS Custom, refer to Amazon RDS Custom for Oracle – New Control Capabilities in Database Environment.

Currently, you can achieve high availability (HA) on Amazon RDS Custom for Oracle by creating read replicas, which you can also promote to standalone databases if the need arises.

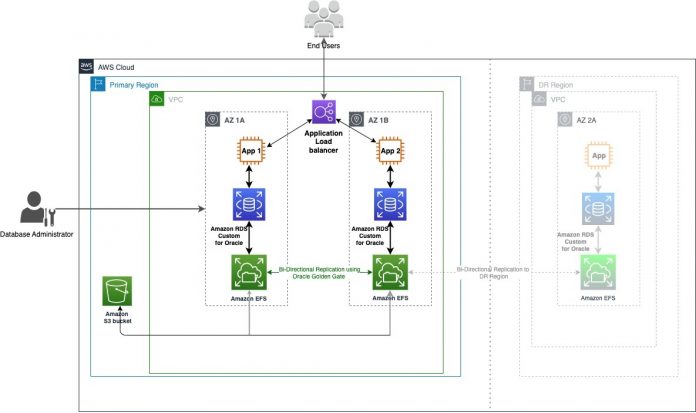

In this post, we show you how to use Oracle GoldenGate (GoldenGate) to set up bi-directional replication on Amazon RDS Custom for Oracle. GoldenGate collects, replicates, and manages transactional data between databases. It is a log-based change data capture (CDC) and replication software package used with Oracle databases for online transaction processing (OLTP) systems. GoldenGate creates trail files that contain the most recent changed data from the source database and then pushes these files to the target database. We also use Amazon Elastic File System (Amazon EFS) along with GoldenGate to provide high availability (HA) and disaster recovery (DR) with near zero data loss in case of an Availability Zone (AZ) failure.

Amazon EFS is designed to provide 99.999999999% (11 9’s) of durability over a given year. You can use Amazon EFS replication or AWS Backup to guard your Amazon EFS Single-AZ file system against the loss of an Availability Zone.

You can further scale the architecture by creating up to five read replicas of each RDS Custom instance, and you can also configure manual HA with Oracle Data Guard.

Amazon RDS Custom for Oracle is a bring your own license (BYOL) service. You should make sure you have GoldenGate license along with an Oracle EE license.

For more information about Amazon RDS Custom for Oracle and its features, refer to Working with Amazon RDS Custom.

Solution overview

We have two RDS Custom for Oracle instances running in two different AZ’s within the same AWS Region, which provides high availability. We can also create a cross-region solution for DR purposes that would have different design considerations and support different recovery objectives.

Our solution has the following components:

Two RDS Custom for Oracle instances running in two different AZ’s.

Two Amazon EFS file systems attached to each RDS Custom for Oracle instance.

Oracle GoldenGate binaries installed on each of the instances, and residing on an Amazon Elastic Block Store (Amazon EBS) volume.

The GoldenGate shared files are stored on an Amazon EFS (EFS) volume on each RDS Custom for Oracle instance.

GoldenGate can also be configured on EBS without using EFS, but using the EFS filesystem for trailfiles provides the following benefits

EFS provides the High Availability in the event of instance failure. By default, every EFS file system object (such as directory, file, and link) is redundantly stored across multiple AZs for file systems using Standard storage classes.

EFS mount point can be mounted across several EC2 instances within the region.

As EFS is Highly Available EFS provides near Zero RPO, EBS RPO is dependent on the restore time of the Snapshot.

When deciding if you should be using file or block storage for your cloud application deployment, there are many factors to consider. The table below compares performance and storage characteristics for Amazon’s highest performing file and block cloud storage offerings.

.

.

File

Amazon EFS

Block

Amazon EBS

Performance

Per-operation latency

Low, consistent

Lowest, consistent

Throughput scale

Multiple GBs per second

Single GB per second

Characteristics

Data Availability/Durability

Stored redundantly across multiple AZs

Stored redundantly in a single AZ

Access

One to thousands of EC2 instances or on-premises servers, from multiple AZs, concurrently

Single EC2 instance in a single AZ

Use Cases

Web serving and content management, enterprise applications, media and entertainment, home directories, database backups, developer tools, container storage, big data analytics

Boot volumes, transactional and NoSQL databases, data warehousing & ETL

The following diagram illustrates the architecture for the RDS Custom for Oracle instance in a disaster recovery Region.

We have used in-Region Amazon RDS Custom for Oracle to implement HA, but we can also implement this as an HA/DR solution by creating the second Amazon RDS Custom instance in another Region .

This solution has the following advantages:

Active-active RDS Custom for Oracle database instances support multiple writers in different geographies.

Amazon EFS helps achieve a Recovery Point Objective (RPO) of near zero, meaning no data loss, because it holds the trail files even in case of AZ failure.

You have an RPO of near zero by using Oracle bi-directional replication and Amazon EFS.

With Amazon RDS Custom for Oracle, you can create up to five managed Oracle replicas of your RDS Custom for Oracle source DB instance in the same Region, which can provide read scaling or additional HA. You can also create your own manually configured (external) Oracle replicas in the same region or across regions which can provide HA/DR and read scaling. External replicas don’t count toward your managed replica limit.

Considerations for an active-active configuration for Amazon RDS Custom for Oracle

The following considerations apply in an active-active configuration. For detailed explanations, refer to Considerations for an Active-Active Configuration.

TRUNCATES

Bi-directional replication of TRUNCATES is not supported, but you can configure these operations to be replicated in one direction, while data is replicated in both directions. To replicate TRUNCATES (if supported by Oracle GoldenGate for the database) in an active-active configuration, the TRUNCATES must originate only from one database, and only from the same database each time.

Application design

When using active-active replication, the time zones must be the same on both systems so that timestamp-based conflict detection and resolution can operate.

Active-active replication is not recommended for use with commercially available packaged business applications, unless the application is designed to support it.

Keys

For accurate detection of conflicts, all records must have a unique, not-null identifier. If possible, create a primary key. If that is not possible, use a unique key or create a substitute key with a KEYCOLS option of the MAP and TABLE parameters. In the absence of a unique identifier, Oracle GoldenGate uses all the columns that are valid in a WHERE clause, but this will degrade performance if the table contains numerous columns.

Triggers and cascaded deletes

Triggers and ON DELETE CASCADE constraints generate DML operations that can be replicated by Oracle GoldenGate. To prevent the local DML from conflicting with the replicated DML from these operations, do the following:

Modify triggers to ignore DML operations that are applied by GoldenGate Replicat. If the target is an Oracle database, Replicat handles triggers without any additional configuration when in integrated mode. Parameter options are available for a nonintegrated Replicat for Oracle. For more information, see 12.1 Disabling Triggers and Referential Cascade Constraints on Target Tables.

Disable ON DELETE CASCADE constraints and use a trigger on the parent table to perform the required record deletion on the child tables. Create it as a BEFORE trigger so that the child tables are deleted before the delete operation is performed on the parent table. This reverses the logical order of a cascaded delete but is necessary so that the operations are replicated in the correct order to prevent “table not found” errors on the target. For Oracle targets, if Replicat is in integrated mode, constraints are handled automatically without special configuration.

Database-generated values

Do not replicate database-generated sequential values, such as Oracle sequences, in a bi-directional configuration. The range of values must be different on each system, with no chance of overlap. For example, in a two-database environment, you can have one server generate even values, and the other odd. For an n-server environment, start each key at a different value and increment the values by the number of servers in the environment. This method may not be available to all types of applications or databases. If the application permits, you can add a location identifier to the value to enforce uniqueness.

Database configuration

One of the databases must be designated as the trusted source. This is the source database and its host system from which the other database is derived in the initial synchronization phase and in any subsequent resynchronizations that become necessary. Maintain frequent backups of the trusted source data.

Prevent data looping

In a bi-directional configuration, SQL changes that are replicated from one system to another must be prevented from being replicated back to the first system. Otherwise, it moves back and forth in an endless loop, as in this example:

A user application updates a row on system A.

GoldenGate Extract captures the change on system A and sends it to system B.

Replicat updates the row on system B.

GoldenGate Extract captures the change on system B and sends it back to system A.

The row is applied on system A (for the second time).

This loop continues endlessly.

To prevent data loopback, you may need to provide instructions that do the following:

Prevent the capture of SQL operations that are generated by Replicat, but enable the capture of SQL operations that are generated by business applications if they contain objects that are specified in the Extract parameter file

Identify local Replicat transactions, in order for the Extract process to ignore them

There are multiple ways to identify Replicat transaction in an Oracle environment. When Replicat is in classic or integrated mode, you use the following parameters:

Use DBOPTIONS with the SETTAG option in the Replicat parameter file. Replicat tags the transactions being applied with the specified value, which identifies those transactions in the redo stream. The default SETTAG value is 00. Valid values are a single TAG value consisting of hexadecimal digits. For more information about tags, see Reference for Oracle GoldenGate for Windows and UNIX.

Use the TRANLOGOPTIONS parameter with the EXCLUDETAG option in the Extract parameter file. The logmining server associated with that Extract excludes redo that is tagged with the SETTAG value.

If you are excluding multiple tags, each must have a separate TRANLOGOPTIONS EXCLUDETAG statement specified.

You can also use the transaction name or user ID of the Replicat user to identify Replicat transactions.

Manage conflicts

Uniform conflict-resolution procedures must be in place on all systems in an active-active configuration. Conflicts should be identified immediately and handled with as much automation as possible; however, different business applications present their own unique set of requirements in this area.

Because Oracle GoldenGate is an asynchronous solution, conflicts can occur when modifications are made to identical sets of data on separate systems at (or almost at) the same time.

To avoid conflicts, replication latency must be kept as low as possible. When conflicts are unavoidable, they must be identified immediately and resolved using the Oracle GoldenGate Conflict Detection and Resolution (CDR) feature, or through methods developed on your own. Custom methods can be integrated into Oracle GoldenGate processing through the SQLEXEC and user exit functionality. See Configuring Conflict Detection and Resolution for more information about using Oracle GoldenGate to handle conflicts.

We walk you through the following high-level steps:

Integrate an RDS Custom for Oracle instance with Amazon EFS.

Install GoldenGate on Amazon RDS Custom using Amazon EFS.

Set up a source database for use with GoldenGate on Amazon RDS Custom for Oracle.

Configure replication from the source RDS Custom for Oracle instance to the target RDS Custom for Oracle instance.

Configure replication from the target RDS Custom for Oracle instance to the source RDS Custom for Oracle instance.

Prerequisites

In order to follow along you must have the following:

Two RDS Custom for Oracle instances running on Amazon Elastic Compute Cloud (Amazon EC2) with Oracle Linux, and the same Oracle Database software versions. The instances can be running in two different AZ’s or different Regions. For instructions, see Working with Amazon RDS Custom.

The solution is applicable when the source database instance is on premises as long as you have appropriate network connectivity between the on-premises network and your Amazon Virtual Private Cloud (Amazon VPC).

Connectivity between the two RDS Custom for Oracle instances, and the necessary ports opened for GoldenGate replication.

We walk you through the steps to install Oracle GoldenGate on Amazon RDS Custom for Oracle. We also cover the steps to configure bi-directional replication between two RDS Custom instances.

Follow the steps in Creating and connecting to a DB instance for Amazon RDS Custom for Oracle to create your two instances.

Implement Oracle GoldenGate on Amazon RDS Custom using Amazon EFS

Oracle GoldenGate collects, replicates, and manages transactional data between databases. We can use GoldenGate with Amazon RDS custom to do the following:

Active-active database replication

Disaster recovery

Data protection

In-Region and cross-Region replication

Zero-downtime migration and upgrades

We can directly install GoldenGate on an RDS Custom for Oracle EC2 instance, but in case of failover, the RPO can be up to 5 minutes.

To achieve zero RPO, we integrate Amazon EFS with Amazon RDS Custom for Oracle, so that all the GoldenGate files that are required to replay the database transactions are stored on Amazon EFS. In a failover scenario, we can create a new read replica from the surviving database, mount the same Amazon EFS file system again, and start the bi-directional replication of GoldenGate to reestablish high availability.

You can also achieve the same resiliency if you decide to use one file system mounted across the two RDS Custom for Oracle DB instances. For this solution, we use two different Amazon EFS mount points, one for the source and one for the target, which helps make the management of GoldenGate easier and also makes it easier to troubleshoot.

Integrate an RDS Custom for Oracle instance with Amazon EFS

This integration has the following key benefits:

Amazon EFS is used to store GoldenGate shared files.

In failover scenarios, the same file system can be mounted on the new DB instance without risk of losing any data.

Amazon EFS mounted on RDS Custom for Oracle DB instances as a landing zone for various Oracle files required for migration or data transfer. Since, Amazon EFS can be mounted across different AZ’s and replicated cross regions enabling you to achieve faster migration.

Using Amazon EFS as a landing zone also helps to save allocation of extra storage space on the instance to hold the files.

Amazon EFS provides consistent performance to each compute instance. It’s designed to be highly durable and highly available.

The file systems can automatically scale from gigabytes to petabytes of data without needing to provision storage.

For integration instructions, refer to Integrate Amazon RDS Custom for Oracle with Amazon EFS.

The following screenshot shows the Amazon RDS Custom integration with Amazon EFS on the source instance.

The following screenshot shows the Amazon RDS Custom integration with Amazon EFS on the target instance.

Install Golden Gate on the source and target RDS Custom for Oracle instances

Follow the steps in this section for both instances.

Download Oracle GoldenGate compatible release version from Obtaining the Oracle GoldenGate Distribution. To download the binaries for Oracle GoldenGate using WGET directly on to RDS Custom instance, follow the support doc WGET to download My Oracle Support (MOS) Doc ID 980924.1

Download the file gg_download.sh in user home and run the script to download the Oracle GoldenGate 19c software. This download require approximate 1 GB of additional space in the filesystem. Make sure the RDS Custom EC2 instance has internet access. For more information, refer to Connect to the internet using an internet gateway.

The script prompts for the Oracle SSO user name and the password:

Move the downloaded Zip file to the directory where you want to install the Golden binary files and then unzip the file as shown.

To install the GoldenGate software, we use the ./runInstaller graphical user interface (GUI). Alternatively, silent mode installation allows us to configure necessary Oracle components without using a GUI. We can use the response file to provide all the required information for the installation, so no additional user input is required.

Take a backup of the original response file:

To prepare the response file for GoldenGate installation, edit the following parameters and save the changes to the response file. Make sure to create required directories and provide the appropriate local filesystem location for the parameters.

Now you can complete GoldenGate silent installation by running the following script. We also include the output of the silent installation.

Run the following command as root user.

Validate the golden gate installation by running the ggsci command.

After the GoldenGate installation we need to create required subdirectories through the GoldenGate Software Command Interface (GGSCI) using create subdirs command. Since we are using EFS, these subdirectories must be created on Amazon EFS manually and then create symbolic links from GoldenGate home (also known as soft links) that point to Amazon EFS.

There is a two-step process to create the required 15 symbolic links.

Step 1:

Create the subdirectories on Amazon EFS. The following commands shows how to create the subdirectories. You should run these commands using the RDS Custom for Oracle operating system user rdsdb. We assume the default owners and paths, make sure to adjust as appropriate for your Custom Oracle home. This operating system user is not the root user and doesn’t require root privileges. For more information about required privileges, see Operating System Privileges. In the following screenshot we created a directory gg_data under the EFS location and then created the directories under gg_data.

Step 2:

Create the symbolic links from the GoldenGate home directory.

Create a soft link for each with the same name pointing to the Amazon EFS location where we want the trail files to get generated. Make sure these directories are deleted from default GoldenGate home before running these commands. For example:

The following screenshot shows how the soft link looks like from the Oracle GoldenGate home directory to the Amazon EFS file system.

The higher-level gg directory can’t be symbolically linked. The symbolic links must only be created for the dir* directories shown in the preceding screenshot. There are files specific to the GoldenGate installation that must not be shared across GoldenGate environments, specifically GoldenGate error logs, libraries, and executables.

Symbolic links are supported for GoldenGate subdirectories. Oracle has published a My Oracle Support (MOS) document validating this configuration. The MOS Doc ID is 1366597.1.

Perform the GoldenGate binary configuration steps on second instance following the same steps.

Set up a source database for use with GoldenGate on Amazon RDS Custom for Oracle

Complete the following prerequisite configurations for Oracle Database in order to set up GoldenGate on the source and target database. The following are the database-related parameters that you must configure before setting up GoldenGate.

You must also create the administrator and user or schema owners on both the source and target database and grant required privileges. The following code grants the necessary account privileges to the GoldenGate user (for this post, gguser) for the RDS Custom for Oracle source and target environments. The best practice is to grant least access for the GoldenGate user for the RDS Custom for Oracle database. For more information, visit Granting the Appropriate User Privileges.

Add the following entries to $ORACLE_HOME/network/admin/tnsnames.ora in the Oracle home to be used by the EXTRACT process. For more information on the tnsnames.ora file, see Local Naming Parameters in the tnsnames.ora File.

Set up the manager process on the source database:

Set up the manager process on the target database:

Configure a GoldenGate credential store to maintain encrypted database passwords and user IDs.

Use the following code for the source database:

Use the following code for the target:

Create an Oracle GoldenGate checkpoint table on the source and target by running the following code on each environment:

Set up the demo schema and sample table (Heartbeat) by running the following SQL*Plus commands on each environment.

The GoldenGate ADD SCHEMATRANDATA command acts on all current and future tables in a given schema to automatically enable schema-level supplemental logging. ADD TRANDATA for Oracle enables the unconditional logging of the primary key and the conditional supplemental logging of all unique keys and foreign keys of the specified table. If possible, use the ADD SCHEMATRANDATA command rather than the ADD TRANDATA command. The ADD SCHEMATRANDATA command ensures replication continuity should DML occur on an object for which DDL has just been performed

The following excerpt is for the source, using ADD SCHEMATRANDATA:

The following excerpt is for the target, using ADD SCHEMATRANDATA:

Validate the log group created in source and target database using following SQL*Plus commands:

Lastly, edit the GLOBALS parameter file on both the source and target GoldenGate home directory form the operating system. The GLOBALS file stores parameters that relate to the Oracle GoldenGate instance as a whole. The parameters in the GLOBALS file apply to all processes in the Oracle GoldenGate instance, but can be overridden by specific process parameters. From the Oracle GoldenGate installation location, run GGSCI and enter the following command, or open a file in a text editor.

Configure Replication from the source to target instance

To configure the source Extract group, use the EDIT PARAMS command to create a parameter file for the ECUST_S group. Include the following parameters plus any others that apply to your database environment. For possible additional required parameters, see the Oracle GoldenGate installation and setup guide for your database.

Run the following command on GGSCI prompt.

To configure the data pump extract process, use the following GGSCI commands:

Run the following command on GGSCI prompt.

To configure the Replicat group, use the following GGSCI commands:

Run the following command on GGSCI prompt.

Configure Replication from the target to source instance

To configure the target Extract group, use the EDIT PARAMS command to create a parameter file for the ECUST_T group. Include the following parameters plus any others that apply to your database environment. For possible additional required parameters, see the Oracle GoldenGate installation and setup guide for your database.

Run the following command on GGSCI prompt.

To configure the data pump extract process on the target, use the EDIT PARAMS GGSCI command to create a parameter file for the PCUST_T group:

Run the following command on GGSCI prompt

To configure the Replicat group, use the EDIT PARAMS command to create a parameter file for the RCUST_S group. Include the following parameters plus any others that apply to your database environment. For possible additional required parameters, see the Oracle GoldenGate installation and setup guide for your database.

Run the following command on GGSCI prompt

Start all the Extract and Replicat processes on the source and target instances and verify that there’s no lag for the output column Lag at chkpt and Time since chkpt. Use the following GGSCI commands to start the processes on source.

The following screenshot refers to the GoldenGate processes (Manager, Extract, Pump, and Replicat) running from the source RDS Custom for Oracle instance.

Use the following GGSCI commands to start the processes on target.

The following screenshot refers to the GoldenGate processes running from the target instance.

Perform bi-directional replication validation between the source and target with the following code:

Perform sample data insertion in source and target database using following SQL*Plus commands:

Insert data on Source:

Insert data on target:

Make sure the GoldenGate processes are running without any lag or errors. If the process is not RUNNING, verify the error by using GGSCI command View Report <Process_name>.

The following screenshot shows data validation from the source instance using SQL*Plus.

The following screenshot shows data validation from the target instance using SQL*Plus.

To monitor GoldenGate processes for any replication lag, refer to the following GGSCI commands.

The following shows the replication lag monitoring on the source instance using GGSCI commands.

The following shows the replication lag monitoring on the target instance using GGSCI commands.

What happens during failover

In a failover scenario, one of the RDS Custom for Oracle instances is unavailable. If we’re using Route 53 or a proxy as a solution, it automatically directs all the application traffic to the RDS Custom instance that is available. Because this solution uses Oracle GoldenGate bi-directional replication, the RPO is 0.

At this point, Amazon RDS Custom for Oracle is running as a standalone database, without any HA or DR. After the application failover is complete and tested, we can focus on recreating the HA architecture.

The following are the high-level steps to recreate the HA architecture after failover:

If the RDS Custom source instance in AZ1 goes down, you can divert the application traffic to the RDS Custom target instance in AZ2. If you’re using Route 53 or a proxy, then it is handled automatically.

Create the new RDS instance in AZ1 as a read replica from the running RDS Custom for Oracle instance in AZ2.

Set the name to that of the instance that failed .

At this point, the Oracle Golden Gate Capture process keeps capturing all the transactions to trail files from the RDS Custom instance running in AZ2, but the pump (Extract) process goes into the ABENDED state because the manager process is down currently not running because RDS Custom instance in AZ1 in not running.

Promote the read replica to a standalone RDS Custom for Oracle instance in AZ1.

The Amazon EFS that was mounted on Failed RDS Custom on AZ1 will still have all the trail files and GoldenGate shared files.

After the instance is successfully promoted, mount the same Amazon EFS file system that was mounted on the failed RDS Custom instance in AZ1

Reconfigure all the GoldenGate processes on RDS Custom instance in AZ1 and start the Extract (pump) process on the GoldenGate running on RDS Custom instance in AZ2.

After all the GoldenGate processes are started successfully on RDS Custom instance in AZ1 and AZ2, GoldenGate starts receiving all the backlog Replicat trail files on the RDS Custom instance in AZ1.

Replicat makes checkpoints in the trail file to mark its last read position.

When the replication lag is zero, all the pending transactions are applied to AZ1 to make sure the source and target are in sync.

What to expect during database instance recovery

You could experience scenarios like database point-in-time recovery, recovery from failed instances, read replica promotions, and so on, when you may have to perform database restore and recovery steps on the RDS Custom instance. Use the following high level checklist to validate the configuration after the recovery.

After the database restore has been completed, you must reconfigure and validate database-related parameters before restarting the Oracle Golden Gate processes. For example, ENABLE_GOLDENGATE_REPLICATION must be TRUE.

Validate and verify the Amazon EFS file system is mounted to the restored instance. Refer to Integrate Amazon RDS Custom for Oracle with Amazon EFS for more information.

Validate the configuration of the automatic remount of the Amazon EFS file system on reboot of the instance under /etc/fstab.

Validate the symbolic links from the Oracle GoldenGate home directory to the Amazon EFS file system have been reestablished after restore.

Validate the Oracle GoldenGate binaries are accessible on new instance.

Update the Oracle Home TNS entries with the updated host endpoint on both the source and target DB instances under $ORACLE_HOME/network/admin/tnsnames.ora.

Invoke GGSCI> on the newly restored instance and validate the CREDENTIALSTORE for the successful connection by making dblogin USERIDALIAS.

Start the GoldenGate Manager process on the newly restored RDS Custom instances.

Start the GoldenGate ABENDED/STOPPED Extract process on newly restored RDS Custom instances. Whenever we start the ABENDED Extract processes, the process is getting recovered from the last ABEND point. The Extract process searches back to its online redo log file or archive log file record for the opened transactions when it crashed. Make sure the archive files are available for Extract process recovery to avoid any data loss. If needed, register the Extract to return to an existing LogMiner data dictionary build with a specified SCN. This allows for faster creation captures by using existing dictionary builds. See Restarting a Primary Extract after System Failure or Corruption for more details.

Start the GoldenGate extract Pump process by updating the rmthost Pump process parameter with the updated host entry. The extract Pump process will ABEND with the following error if the host address isn’t updated in the GoldenGate parameter files: TCP/IP error 111 (Connection refused), endpoint.

Start the Replicat process and Oracle GoldenGate will pick up the trail file information from the checkpoint table. See Starting Replicat after Point In Time Restore Of Database for more information.

In case of any error, follow the Oracle GoldenGate troubleshooting best practices.

Conclusion

In this post, we showed you how to configure and use Oracle GoldenGate bi-directional replication with Amazon RDS Custom for Oracle to achieve near zero HA and/or DR with near zero data loss and minimal downtime using Amazon EFS.

In Part 2 of this series, we discuss how to make this this architecture even more resilient and provide HA and DR with zero Data loss in the event of a AZ failure and near zero data loss in the event of a Region failure.

To learn more about Amazon RDS Custom and Amazon EFS, refer to the following resources:

Amazon RDS Custom pricing

Amazon RDS Custom User Guide

Amazon Elastic File System Documentation

Implement Oracle GoldenGate high availability in the AWS Cloud

About the authors

Vishal Srivastava is a Senior Partner Solutions Architect specializing in databases at AWS. In his role, Vishal works with ISV Partners to provide guidance and technical assistance on database projects, helping them improve the value of their solutions when using AWS. He also works with Public Sector ISV Partners to help them design scalable, secure, performant, robust database architectures in the AWS Cloud.

Wasim Shaikh is a Senior Partner Solutions Architect specializing in databases at AWS. He works with customers to provide guidance and technical assistance about various database and analytical projects, helping them improving the value of their solutions when using AWS.

Vineet Agarwal is a Senior Database Specialist Solutions Architect with Amazon Web Services (AWS). Prior to AWS, Vineet has worked for large enterprises in financial, retail and healthcare verticals helping them with database and solutions architecture. In his spare time, you’ll find him playing poker, trying a new activity or a DIY project.

Maharshi Desai is a Worldwide GTM Specialist for RDS with Amazon Web Services. He defines strategies, plans and execute it to drive the growth for RDS business. He is also leading the Healthcare and Lifesciences domain to drive RDS strategies to align with Industry use cases.

Read MoreAWS Database Blog