Amazon SageMaker JumpStart is the machine learning (ML) hub of SageMaker. It provides pre-trained, publicly available models for a wide range of problem types to help you get started with machine learning.

JumpStart also offers example notebooks that use Amazon SageMaker features like Spot Instance training and experiments across a large variety of model types and use cases. These example notebooks contain code that shows how to apply ML solutions by using SageMaker and JumpStart. You can adapt them to your own needs to speed up application development.

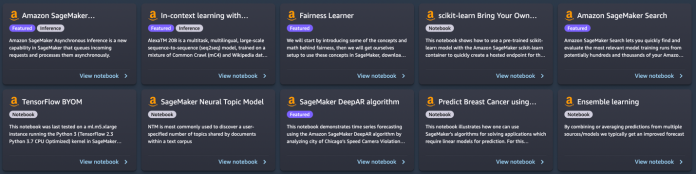

Recently, we added 10 new notebooks to JumpStart in Amazon SageMaker Studio. This post focuses on these new notebooks. As of this writing, JumpStart offers 56 notebooks, ranging from using state-of-the-art natural language processing (NLP) models to fixing bias in datasets when training models.

The 10 new notebooks can help you in the following ways:

They offer example code that you can run as is from the JumpStart UI in Studio to see how the code works

They show how to use various SageMaker and JumpStart APIs

They offer a technical solution that you can further customize based on your own needs

The number of notebooks offered through JumpStart increases regularly as more notebooks are added. These notebooks are also available on GitHub.

Notebooks overview

The 10 new notebooks are as follows:

In-context learning with AlexaTM 20B – Demonstrates how to use AlexaTM 20B for in-context learning with zero-shot and few-shot learning on five example tasks: text summarization, natural language generation, machine translation, extractive question answering, and natural language inference and classification.

Fairness linear learner in SageMaker – There have recently been concerns about bias in ML algorithms as a result of mimicking existing human prejudices. This notebook applies fairness concepts to adjust model predictions appropriately.

Manage ML experimentation using SageMaker Search – Amazon SageMaker Search lets you quickly find and evaluate the most relevant model training runs from potentially hundreds or thousands of SageMaker model training jobs.

SageMaker Neural Topic Model – SageMaker Neural Topic Model (NTM) is an unsupervised learning algorithm that attempts to describe a set of observations as a mixture of distinct categories.

Predict driving speed violations – The SageMaker DeepAR algorithm can be used to train a model for multiple streets simultaneously, and predict violations for multiple street cameras.

Breast cancer prediction – This notebook uses UCI’s breast cancer diagnostic dataset to build a predictive model of whether a breast mass image indicates a benign or malignant tumor.

Ensemble predictions from multiple models – By combining or averaging predictions from multiple sources and models, we typically get an improved forecast. This notebook illustrates this concept.

SageMaker asynchronous inference – Asynchronous inference is a new inference option for near-real-time inference needs. Requests can take up to 15 minutes to process and have payload sizes of up to 1 GB.

TensorFlow bring your own model – This notebook shows how to train a TensorFlow model locally and deploy it on SageMaker.

Scikit-learn bring your own model – This notebook shows how to use a pre-trained Scikit-learn model with the SageMaker Scikit-learn container to quickly create a hosted endpoint for that model.

Prerequisites

To use these notebooks, make sure that you have access to Studio with an execution role that allows you to run SageMaker functionality. The short video below will help you navigate to JumpStart notebooks.

In the following sections, we go through each of the 10 new solutions and discuss some of their interesting details.

In-context learning with AlexaTM 20B

AlexaTM 20B is a multitask, multilingual, large-scale sequence-to-sequence (seq2seq) model, trained on a mixture of Common Crawl (mC4) and Wikipedia data across 12 languages using denoising and causal language modeling (CLM) tasks. It achieves state-of-the-art performance on common in-context language tasks such as one-shot summarization and one-shot machine translation, outperforming decoder-only models such as OpenAI’s GPT-3 and Google’s PaLM, which are over eight times bigger.

In-context learning, also known as prompting, refers to a method where you use an NLP model on a new task without having to fine-tune it. A few task examples are provided to the model only as part of the inference input, a paradigm known as few-shot in-context learning. In some cases, the model can perform well without any training data at all, only given an explanation of what should be predicted. This is called zero-shot in-context learning.
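The difference between zero-shot and few-shot in-context learning lies entirely in the input text, so it can be sketched as plain string construction. In this minimal sketch, the `build_prompt` helper and its "Input:"/"Output:" layout are hypothetical choices for illustration, not AlexaTM 20B's documented prompt format; the notebook sends its own prompts to the deployed endpoint.

```python
# A minimal sketch of zero-shot vs. few-shot prompt construction.
# The layout (instruction, then "Input:"/"Output:" pairs) is a hypothetical
# format chosen for illustration, not AlexaTM 20B's documented prompt format.


def build_prompt(instruction, query, examples=()):
    """Return a prompt string: zero-shot with no examples, few-shot otherwise."""
    parts = [instruction]
    for source, target in examples:
        # Demonstrations live only in the input text; model weights never change.
        parts.append(f"Input: {source}\nOutput: {target}")
    # The query ends with an open "Output:" for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


zero_shot = build_prompt("Translate English to German.", "Good morning.")
one_shot = build_prompt(
    "Translate English to German.",
    "Good morning.",
    examples=[("Thank you.", "Danke.")],
)
print(one_shot)
```

Adding more `(source, target)` pairs turns the one-shot prompt into a few-shot prompt without any fine-tuning.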

This notebook demonstrates how to deploy AlexaTM 20B through the JumpStart API and run inference. It also demonstrates how AlexaTM 20B can be used for in-context learning with five example tasks: text summarization, natural language generation, machine translation, extractive question answering, and natural language inference and classification.

The notebook demonstrates the following:

One-shot text summarization, natural language generation, and machine translation using a single training example for each of these tasks

Zero-shot question answering and natural language inference plus classification using the model as is, without the need to provide any training examples.

Try running your own text against this model and see how it summarizes text, extracts Q&A, or translates from one language to another.

Fairness linear learner in SageMaker

There have recently been concerns about bias in ML algorithms as a result of mimicking existing human prejudices. Many ML methods now have strong social implications; for example, they are used in predictions for bank loans, insurance rates, and advertising. Unfortunately, an algorithm that learns from historical data will naturally inherit past biases. This notebook shows how to address this problem by using SageMaker and fair algorithms in the context of linear learners.

It starts by introducing some of the concepts and math behind fairness, then downloads data, trains a model, and finally applies fairness concepts to adjust the model predictions appropriately.

The notebook demonstrates the following:

Running a standard linear model on UCI’s Adult dataset.

Showing unfairness in model predictions

Fixing data to remove bias

Retraining the model

Try running this example code on your own data to detect whether there is bias. If there is, try removing it from your dataset using the functions provided in the example notebook.
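Before running the notebook on your own data, it can help to see what a bias check computes. This sketch uses two generic group-fairness metrics, the demographic parity gap (difference in positive-prediction rates) and the equal opportunity gap (difference in true positive rates), on hypothetical binary labels and a binary sensitive attribute. These are standard metrics swapped in for illustration; the notebook's own fairness functions may be defined differently.

```python
# Generic group-fairness checks for binary labels and predictions.
# Demographic parity and equal opportunity are standard metrics used here for
# illustration; the notebook's own fairness functions may differ.


def _rate(values):
    """Fraction of 1s in a list of 0/1 values (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def group_rates(y_true, y_pred, group):
    """Per group: (positive prediction rate, true positive rate)."""
    rates = {}
    for g in sorted(set(group)):
        preds = [p for p, s in zip(y_pred, group) if s == g]
        # The true positive rate only looks at examples whose true label is 1.
        preds_on_positives = [
            p for p, t, s in zip(y_pred, y_true, group) if s == g and t == 1
        ]
        rates[g] = (_rate(preds), _rate(preds_on_positives))
    return rates


def fairness_gaps(y_true, y_pred, group):
    """Return (demographic parity gap, equal opportunity gap) for two groups."""
    rates = group_rates(y_true, y_pred, group)
    if len(rates) != 2:
        raise ValueError("this sketch expects exactly two groups")
    (pr_a, tpr_a), (pr_b, tpr_b) = rates.values()
    return abs(pr_a - pr_b), abs(tpr_a - tpr_b)


# Hypothetical labels, predictions, and sensitive-attribute values.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["A"] * 4 + ["B"] * 4
parity_gap, opportunity_gap = fairness_gaps(y_true, y_pred, group)
print(parity_gap, opportunity_gap)  # 0.5 0.5
```

A gap near zero means the two groups are treated similarly under that metric; which metric matters most depends on the application.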

Manage ML experimentation using SageMaker Search

SageMaker Search lets you quickly find and evaluate the most relevant model training runs from potentially hundreds or thousands of SageMaker model training jobs. Developing an ML model requires continuous experimentation, trying new learning algorithms, and tuning hyperparameters, all while observing the impact of such changes on model performance and accuracy. This iterative exercise often leads to an explosion of hundreds of model training experiments and model versions, slowing down the convergence and discovery of a winning model. In addition, the information explosion makes it very hard down the line to trace back the lineage of a model version: the unique combination of datasets, algorithms, and parameters that produced that model in the first place.

This notebook shows how to use SageMaker Search to quickly and easily organize, track, and evaluate your model training jobs on SageMaker. You can search on all the defining attributes, such as the learning algorithm used, hyperparameter settings, training datasets used, and even the tags you have added to the model training jobs. You can also quickly compare and rank your training runs based on their performance metrics, such as training loss and validation accuracy, creating leaderboards that identify the winning models to deploy into production environments. SageMaker Search can also trace the complete lineage of a model version deployed in a live environment, all the way back to the datasets used to train and validate the model.
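The leaderboard idea can be sketched as ranking search results by a final metric. This sketch assumes the results follow the shape of the `Results` list returned by boto3's SageMaker `search` call with `Resource="TrainingJob"` (each record holding a `TrainingJob` with `TrainingJobName` and `FinalMetricDataList`). The job names and metric values below are hypothetical, and the call to AWS itself is not shown.

```python
# Ranks training jobs by one final metric, assuming records shaped like the
# "Results" list from boto3's SageMaker search(Resource="TrainingJob").


def leaderboard(results, metric_name, higher_is_better=True):
    """Return [(job_name, metric_value), ...] sorted best first."""
    rows = []
    for record in results:
        job = record["TrainingJob"]
        metrics = {
            m["MetricName"]: m["Value"] for m in job.get("FinalMetricDataList", [])
        }
        if metric_name in metrics:  # skip jobs that never reported this metric
            rows.append((job["TrainingJobName"], metrics[metric_name]))
    rows.sort(key=lambda row: row[1], reverse=higher_is_better)
    return rows


# Hypothetical search results for three linear learner runs.
sample_results = [
    {"TrainingJob": {"TrainingJobName": "linear-run-1",
                     "FinalMetricDataList": [
                         {"MetricName": "validation:objective_loss", "Value": 0.42}]}},
    {"TrainingJob": {"TrainingJobName": "linear-run-2",
                     "FinalMetricDataList": [
                         {"MetricName": "validation:objective_loss", "Value": 0.35}]}},
    {"TrainingJob": {"TrainingJobName": "linear-run-3",
                     "FinalMetricDataList": []}},
]
board = leaderboard(sample_results, "validation:objective_loss", higher_is_better=False)
print(board)  # [('linear-run-2', 0.35), ('linear-run-1', 0.42)]
```

For a loss metric, lower is better, so the sketch sorts ascending; for an accuracy metric you would keep the default `higher_is_better=True`.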

The notebook demonstrates the following:

Training a linear model three times

Using SageMaker Search to organize and evaluate these experiments

Visualizing the results in a leaderboard

Deploying a model to an endpoint

Tracing lineage of the model starting from the endpoint

In your own development of predictive models, you may run many experiments. Try using SageMaker Search to organize, compare, and trace those experiments.

SageMaker Neural Topic Model

SageMaker Neural Topic Model (NTM) is an unsupervised learning algorithm that attempts to describe a set of observations as a mixture of distinct categories. NTM is most commonly used to discover a user-specified number of topics shared by documents within a text corpus. Here each observation is a document, the features are the presence (or occurrence count) of each word, and the categories are the topics. Because the method is unsupervised, the topics aren’t specified up-front and aren’t guaranteed to align with how a human may naturally categorize documents. The topics are learned as a probability distribution over the words that occur in each document. Each document, in turn, is described as a mixture of topics.
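The mixture view described above (documents as word-count vectors, topics as distributions over words, documents as mixtures of topics) can be illustrated with a few lines of arithmetic. This is not how NTM is trained; the vocabulary, topic-word table, and mixture weights are made-up values used only to show what the learned quantities mean.

```python
# Illustrates the "documents as mixtures of topics" view described above.
# This is not NTM's training procedure; all values are made up.


def bag_of_words(doc, vocabulary):
    """Count how often each vocabulary word occurs in a whitespace-tokenized doc."""
    tokens = doc.lower().split()
    return [tokens.count(word) for word in vocabulary]


def word_distribution(topic_mixture, topic_word):
    """p(word | doc) = sum over topics k of p(topic k | doc) * p(word | topic k)."""
    n_words = len(topic_word[0])
    return [
        sum(theta * beta[w] for theta, beta in zip(topic_mixture, topic_word))
        for w in range(n_words)
    ]


vocabulary = ["game", "team", "vote", "election"]
counts = bag_of_words("Team wins game as team celebrates", vocabulary)
print(counts)  # [1, 2, 0, 0]

# Two hypothetical topics ("sports", "politics"), each a distribution over words.
topic_word = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]
doc_mix = word_distribution([0.75, 0.25], topic_word)
print(doc_mix)  # [0.375, 0.375, 0.125, 0.125]
```

A document that is 75% "sports" and 25% "politics" puts most of its probability mass on sports words, which is how learned topics are read when exploring a trained model.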

This notebook uses the SageMaker NTM algorithm to train a model on the 20 Newsgroups dataset, which has been widely used as a topic modeling benchmark.

The notebook demonstrates the following:

Creating a SageMaker training job on a dataset to produce an NTM model

Using the model to perform inference with a SageMaker endpoint

Exploring the trained model and visualizing learned topics

You can easily modify this notebook to run on your text documents and divide them into various topics.

Predict driving speed violations

This notebook demonstrates time series forecasting using the SageMaker DeepAR algorithm by analyzing the City of Chicago’s Speed Camera Violation dataset. The dataset is hosted by Data.gov, which is managed by the U.S. General Services Administration, Technology Transformation Service.

These violations are captured by camera systems and made available through the City of Chicago data portal to improve the lives of the public. The Speed Camera Violation dataset can be used to discern patterns in the data and gain meaningful insights.

The dataset contains multiple camera locations and daily violation counts. Each daily violation count for a camera can be considered a separate time series. You can use the SageMaker DeepAR algorithm to train a model for multiple streets simultaneously, and predict violations for multiple street cameras.
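Treating each camera as its own time series maps naturally onto DeepAR's JSON Lines input, where each line holds a `start` timestamp and a `target` array (plus optional fields such as `cat` for categorical features, and `null` for missing values). This sketch assumes the raw data has already been reduced to `(camera_id, date, count)` rows; the camera IDs and counts are hypothetical, and the notebook's own preprocessing may differ.

```python
import json
from collections import defaultdict
from datetime import date, timedelta


def to_deepar_jsonlines(rows):
    """Convert (camera_id, 'YYYY-MM-DD', count) rows into DeepAR JSON Lines text.

    Each camera becomes one daily time series; days with no record become
    null (missing values), and "cat" holds a per-camera category index.
    """
    series = defaultdict(dict)
    for camera, day, count in rows:
        series[camera][date.fromisoformat(day)] = count

    lines = []
    for cat_index, camera in enumerate(sorted(series)):
        counts = series[camera]
        start, end = min(counts), max(counts)
        n_days = (end - start).days + 1
        target = [counts.get(start + timedelta(days=i)) for i in range(n_days)]
        record = {
            "start": f"{start.isoformat()} 00:00:00",
            "target": target,
            "cat": [cat_index],
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


# Hypothetical camera IDs and counts, not taken from the real dataset.
rows = [
    ("CAM-A", "2021-07-01", 12),
    ("CAM-A", "2021-07-03", 9),
    ("CAM-B", "2021-07-01", 4),
    ("CAM-B", "2021-07-02", 6),
]
jsonl = to_deepar_jsonlines(rows)
print(jsonl)
```

Because all cameras share one file, a single DeepAR training job learns from every street at once, which is what lets one model forecast violations for multiple cameras.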

The notebook demonstrates the following:

Training the SageMaker DeepAR algorithm on the time series dataset using spot instances

Running inference with the trained model to predict traffic violations

With this notebook, you can learn how time series problems can be solved using the DeepAR algorithm in SageMaker and try applying it on your own time series datasets.

Breast cancer prediction

This notebook works through a breast cancer prediction example using UCI’s breast cancer diagnostic dataset. It uses this dataset to build a predictive model of whether a breast mass image indicates a benign or malignant tumor.

The notebook demonstrates the following:

Basic setup for using SageMaker

Converting datasets to Protobuf format used by the SageMaker algorithms and uploading to Amazon Simple Storage Service (Amazon S3)

Training a SageMaker linear learner model on the dataset

Hosting the trained model

Scoring using the trained model
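For the scoring step, predicted labels are usually summarized with a confusion matrix. This sketch assumes you have already extracted 0/1 predicted labels from the endpoint responses, with 1 meaning malignant (an assumption about the label encoding); the labels below are hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Confusion-matrix counts plus accuracy, precision, and recall for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "tp": tp, "tn": tn, "fp": fp, "fn": fn,
        "accuracy": (tp + tn) / len(pairs),
        # Guard against division by zero when a class is never predicted/present.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical true labels (1 = malignant, 0 = benign) and predicted labels.
metrics = binary_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print(metrics)
```

In a diagnostic setting, recall (how many malignant cases are caught) is often weighed more heavily than overall accuracy, which is why the counts are reported alongside the ratios.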

You can go through this notebook to learn how to solve a business problem using SageMaker and to understand the steps involved in training and hosting a model.

Ensemble predictions from multiple models

In practical applications of ML on predictive tasks, one model often doesn’t suffice. Most prediction competitions require combining forecasts from multiple sources, because combining or averaging predictions from multiple sources or models typically produces an improved forecast. This happens because there is considerable uncertainty in the choice of model, and in many practical applications there is no one true model. Therefore, it’s beneficial to combine predictions from different models. In the Bayesian literature, this idea is referred to as Bayesian model averaging, and it has been shown to work much better than just picking one model.
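The simplest form of combining predictions is a (weighted) average of each model's predicted probabilities. This sketch uses plain averaging, which is simpler than full Bayesian model averaging (where weights come from posterior model probabilities); the model names and probabilities are hypothetical stand-ins for XGBoost and linear learner outputs.

```python
def average_predictions(prob_lists, weights=None):
    """Weighted average of per-example probabilities from several models."""
    n_models = len(prob_lists)
    if weights is None:
        weights = [1.0] * n_models  # equal weights by default
    total = sum(weights)
    n_examples = len(prob_lists[0])
    return [
        sum(w * probs[i] for w, probs in zip(weights, prob_lists)) / total
        for i in range(n_examples)
    ]


# Hypothetical probabilities that a person earns over $50,000.
xgb_probs = [0.9, 0.4, 0.2]
linear_probs = [0.7, 0.5, 0.1]
combined = average_predictions([xgb_probs, linear_probs])
labels = [int(p >= 0.5) for p in combined]
print(combined, labels)
```

Equal weights are a reasonable starting point; you could instead set `weights` from each model's validation performance.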

This notebook presents an illustrative example to predict if a person makes over $50,000 a year based on information about their education, work experience, gender, and more.

The notebook demonstrates the following:

Preparing your SageMaker notebook

Loading a dataset from Amazon S3 using SageMaker

Investigating and transforming the data so that it can be fed to SageMaker algorithms

Estimating a model using the SageMaker XGBoost (Extreme Gradient Boosting) algorithm

Hosting the model on SageMaker to make ongoing predictions

Estimating a second model using the SageMaker linear learner method

Combining the predictions from both models and evaluating the combined prediction

Generating final predictions on the test dataset

Try running this notebook on your own dataset with multiple algorithms. Experiment with various combinations of the models offered by SageMaker and JumpStart to see which ensemble gives the best results on your data.

SageMaker asynchronous inference

SageMaker asynchronous inference is a new capability in SageMaker that queues incoming requests and processes them asynchronously. Besides asynchronous inference, SageMaker offers a real-time option for low-latency workloads, and batch transform, an offline option to process inference requests on batches of data available up front. Real-time inference is suited for workloads with payload sizes of less than 6 MB that require inference requests to be processed within 60 seconds. Batch transform is suitable for offline inference on batches of data.

Asynchronous inference is a new inference option for near-real-time inference needs. Requests can take up to 15 minutes to process and have payload sizes of up to 1 GB. Asynchronous inference is suitable for workloads that have relaxed latency requirements and don’t need subsecond responses. For example, you might need to process an inference on a large image of several MB within 5 minutes. In addition, asynchronous inference endpoints let you control costs by scaling down the endpoint instance count to zero when they’re idle, so you only pay when your endpoints are processing requests.
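The choice between the three options can be sketched as a small decision function using the limits quoted in this post. Those limits may have changed since this writing, and treating MB and GB as binary units is an assumption; the function is a hypothetical helper, not part of any SageMaker API.

```python
# Limits as quoted in this post; check current SageMaker documentation before
# relying on them. MB/GB are treated as binary units here (an assumption).
MB = 1024 ** 2
GB = 1024 ** 3
REALTIME_MAX_PAYLOAD_BYTES = 6 * MB
REALTIME_MAX_SECONDS = 60
ASYNC_MAX_PAYLOAD_BYTES = 1 * GB
ASYNC_MAX_SECONDS = 15 * 60


def choose_inference_option(payload_bytes, processing_seconds, offline_batch=False):
    """Pick an inference option from payload size and expected processing time."""
    if offline_batch:
        # Data available up front and no per-request latency target.
        return "batch transform"
    if (payload_bytes < REALTIME_MAX_PAYLOAD_BYTES
            and processing_seconds <= REALTIME_MAX_SECONDS):
        return "real-time"
    if (payload_bytes <= ASYNC_MAX_PAYLOAD_BYTES
            and processing_seconds <= ASYNC_MAX_SECONDS):
        return "asynchronous"
    raise ValueError("request exceeds the asynchronous limits quoted in this post")


# The post's example: a large image of several MB processed within 5 minutes.
print(choose_inference_option(8 * MB, 5 * 60))  # asynchronous
```

In the SageMaker Python SDK, the endpoint itself is made asynchronous by passing an `AsyncInferenceConfig` (from `sagemaker.async_inference`) as the `async_inference_config` argument of `Model.deploy`; refer to the SDK documentation for its options.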

The notebook demonstrates the following:

Creating a SageMaker model

Creating an endpoint using this model and asynchronous inference configuration

Making predictions against this asynchronous endpoint

This notebook shows you a working example of putting together an asynchronous endpoint for a SageMaker model.

TensorFlow bring your own model

In this notebook, a TensorFlow model is trained locally on a classification task, on the same instance where the notebook runs, and is then deployed on a SageMaker endpoint.

The notebook demonstrates the following:

Training a TensorFlow model locally on the Iris dataset

Importing that model into SageMaker

Hosting it on an endpoint

If you have TensorFlow models that you developed yourself, this example notebook can help you host your model on a SageMaker managed endpoint.
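To host a locally trained model, the exported SavedModel is typically packaged as a `model.tar.gz` archive and uploaded to Amazon S3. This sketch shows only the packaging step with the standard library. The `<model_name>/<version>/` layout inside the archive, and the names `model` and `1`, are assumptions to check against the SageMaker TensorFlow Serving container documentation; the placeholder files are not a real model.

```python
import tarfile
import tempfile
from pathlib import Path


def package_saved_model(saved_model_dir, output_path, model_name="model", version="1"):
    """Pack an exported SavedModel directory into a gzipped tar archive.

    Files are stored under <model_name>/<version>/ inside the archive, which
    is an assumed layout; verify it against the serving container's docs.
    """
    with tarfile.open(output_path, "w:gz") as tar:
        # tar.add is recursive, so saved_model.pb and variables/ are included.
        tar.add(str(saved_model_dir), arcname=f"{model_name}/{version}")
    return output_path


# Dry run with placeholder files standing in for a real SavedModel export.
with tempfile.TemporaryDirectory() as tmp:
    export_dir = Path(tmp, "export")
    (export_dir / "variables").mkdir(parents=True)
    (export_dir / "saved_model.pb").write_bytes(b"")
    (export_dir / "variables" / "variables.index").write_bytes(b"")
    archive = package_saved_model(export_dir, Path(tmp, "model.tar.gz"))
    with tarfile.open(archive) as tar:
        archived_names = tar.getnames()
print(archived_names)
```

After uploading the archive, the SageMaker Python SDK's `sagemaker.tensorflow.TensorFlowModel` can point at its S3 location through `model_data` and be deployed to an endpoint.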

Scikit-learn bring your own model

SageMaker includes functionality to support a hosted notebook environment, distributed, serverless training, and real-time hosting. It works best when all three of these services are used together, but they can also be used independently. Some use cases may only require hosting; for example, the model may have been trained in a different service before SageMaker existed.

The notebook demonstrates the following:

Using a pre-trained Scikit-learn model with the SageMaker Scikit-learn container to quickly create a hosted endpoint for that model

If you have Scikit-learn models that you developed yourself, this example notebook can help you host your model on a SageMaker managed endpoint.
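The SageMaker Scikit-learn container loads a pre-trained model through a `model_fn(model_dir)` function in your entry point script and can optionally use a `predict_fn(input_data, model)` override. This sketch uses `pickle` so it runs with only the standard library (scikit-learn models are more commonly saved with `joblib`). The file name `model.pkl` and the `ThresholdModel` stand-in class are hypothetical.

```python
import os
import pickle
import tempfile


def model_fn(model_dir):
    """Load the model from the directory where SageMaker extracts model.tar.gz."""
    with open(os.path.join(model_dir, "model.pkl"), "rb") as f:  # hypothetical name
        return pickle.load(f)


def predict_fn(input_data, model):
    """Delegate to the estimator's predict method."""
    return model.predict(input_data)


class ThresholdModel:
    """Hypothetical stand-in for a fitted scikit-learn estimator."""

    def predict(self, rows):
        return [int(sum(row) > 1.0) for row in rows]


# Local dry run: save the stand-in model, then load and score it the same way
# the hosted container would call these functions.
with tempfile.TemporaryDirectory() as model_dir:
    with open(os.path.join(model_dir, "model.pkl"), "wb") as f:
        pickle.dump(ThresholdModel(), f)
    model = model_fn(model_dir)
    predictions = predict_fn([[0.2, 0.3], [0.9, 0.8]], model)
print(predictions)  # [0, 1]
```

With the SageMaker Python SDK, a script like this is typically passed as the `entry_point` of a `sagemaker.sklearn.model.SKLearnModel` whose `model_data` points at the uploaded `model.tar.gz`.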

Clean up resources

After you’re done running a notebook in JumpStart, make sure to delete all the resources that you created in the process so that billing for them stops. The last cell in these notebooks usually deletes the endpoints that were created.
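If a notebook is stopped before its last cell runs, you can clean up an endpoint yourself. This sketch uses the boto3 SageMaker client methods `describe_endpoint`, `describe_endpoint_config`, `delete_endpoint`, `delete_endpoint_config`, and `delete_model`, but takes the client as a parameter so it can be dry-run with the hypothetical `DryRunClient` below. It does not remove S3 objects or other resources a notebook may have created.

```python
def delete_endpoint_resources(sagemaker_client, endpoint_name):
    """Delete an endpoint, its endpoint configuration, and the models it serves."""
    endpoint = sagemaker_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sagemaker_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [variant["ModelName"] for variant in config["ProductionVariants"]]

    # Delete the endpoint first; its running instances accrue hosting charges.
    sagemaker_client.delete_endpoint(EndpointName=endpoint_name)
    sagemaker_client.delete_endpoint_config(EndpointConfigName=config_name)
    for model_name in model_names:
        sagemaker_client.delete_model(ModelName=model_name)
    return config_name, model_names


class DryRunClient:
    """Hypothetical stand-in for boto3.client("sagemaker") that records calls."""

    def __init__(self):
        self.calls = []

    def describe_endpoint(self, EndpointName):
        return {"EndpointConfigName": f"{EndpointName}-config"}

    def describe_endpoint_config(self, EndpointConfigName):
        return {"ProductionVariants": [{"ModelName": "demo-model"}]}

    def delete_endpoint(self, **kwargs):
        self.calls.append(("delete_endpoint", kwargs))

    def delete_endpoint_config(self, **kwargs):
        self.calls.append(("delete_endpoint_config", kwargs))

    def delete_model(self, **kwargs):
        self.calls.append(("delete_model", kwargs))


client = DryRunClient()
print(delete_endpoint_resources(client, "demo-endpoint"))
# With AWS credentials configured, pass boto3.client("sagemaker") instead.
```

If you still hold the SageMaker Python SDK `Predictor` object from the notebook, its `delete_endpoint()` and `delete_model()` methods achieve the same cleanup.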

Summary

This post walked you through 10 new example notebooks that were recently added to JumpStart. Although this post focused on these 10 new notebooks, there are a total of 56 available notebooks as of this writing. We encourage you to log in to Studio, explore the JumpStart notebooks yourself, and start getting value out of them right away. For more information, refer to Amazon SageMaker Studio and SageMaker JumpStart.

About the Author

Dr. Raju Penmatcha is an AI/ML Specialist Solutions Architect in AI Platforms at AWS. He received his PhD from Stanford University. He works closely on the low-code/no-code services in SageMaker that help customers easily build and deploy machine learning models and solutions.

AWS Machine Learning Blog