This post is written in collaboration with Dima Zadorozhny and Fuad Babaev from VirtuSwap.

VirtuSwap is a startup company developing innovative technology for decentralized exchange of assets on blockchains. VirtuSwap’s technology provides more efficient trading for assets that don’t have a direct pair between them. The absence of a direct pair leads to costly indirect trading, meaning that two or more trades are required to complete a desired swap, leading to double or triple trading costs. VirtuSwap’s Reserve-based Virtual Pools technology solves the problem by making every trade direct, saving up to 50% of trading costs. Read more at virtuswap.io.

In this post, we share how VirtuSwap used the bring-your-own-container feature in Amazon SageMaker Studio to build a robust environment to host their GPU-intensive simulations to solve linear optimization problems.

The challenge

The VirtuSwap Minerva engine creates recommendations for the optimal distribution of liquidity between different liquidity pools. It takes into account multiple parameters, such as trading volumes, current market liquidity, and volatilities of traded assets, and is constrained by the total amount of liquidity available for distribution. To provide these recommendations, VirtuSwap Minerva runs thousands of historical trading pairs through various liquidity configurations in simulation to find the optimal distribution of liquidity, pool fees, and more.

The initial implementation was coded using pandas dataframes. However, as the simulation data and the size of the problem grew, the runtime nearly quadrupled. As a result, iterations slowed down, and it became almost impossible to run higher-dimensionality tasks. VirtuSwap realized that they needed GPU instances to get simulation results faster.

VirtuSwap needed a GPU-compatible pandas-like library to run their simulation and chose cuDF, a GPU DataFrame library by Rapids. cuDF loads, joins, aggregates, filters, and otherwise manipulates data through a pandas-like API, and uses CUDA to run these dataframe operations significantly faster than pandas.
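To make "pandas-like" concrete, here is a minimal sketch; it is not VirtuSwap's simulation code. It assumes a hypothetical trades table with `pool` and `volume` columns, and it falls back to pandas when cuDF is not installed, so the same dataframe calls run on either CPU or GPU.

```python
def load_dataframe_lib():
    """Return cuDF when it is installed, otherwise fall back to pandas."""
    try:
        import cudf  # GPU DataFrame library; needs an NVIDIA GPU at runtime
        return cudf
    except ImportError:
        import pandas
        return pandas


def total_volume_by_pool(xdf, trades):
    """Sum traded volume per pool using the API shared by pandas and cuDF."""
    df = xdf.DataFrame(trades)
    totals = df.groupby("pool")["volume"].sum()
    # cuDF results live in GPU memory; copy them back to the host if needed.
    if hasattr(totals, "to_pandas"):
        totals = totals.to_pandas()
    return totals.to_dict()


# Hypothetical sample data, for illustration only.
SAMPLE_TRADES = {
    "pool": ["A/B", "A/B", "B/C"],
    "volume": [10.0, 5.0, 7.0],
}
```

Calling `total_volume_by_pool(load_dataframe_lib(), SAMPLE_TRADES)` gives a per-pool total (15.0 for `A/B` and 7.0 for `B/C`) whichever library is loaded. That is the property that makes moving an existing pandas code base to the GPU practical.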

Solution overview

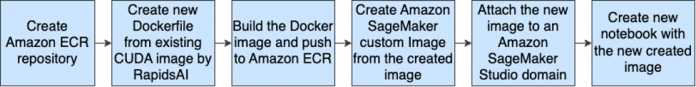

VirtuSwap chose SageMaker Studio for end-to-end development, starting with iterative, interactive development in notebooks. Due to the flexibility of SageMaker Studio, they decided to use it for their simulation as well, taking advantage of Amazon SageMaker custom images, which allow VirtuSwap to bring their own custom libraries and software needed, such as cuDF. The following diagram illustrates the solution workflow.

In the following sections, we share the step-by-step instructions to build and use a Rapids cuDF image in SageMaker.

Prerequisites

To run this step-by-step guide, you need an AWS account with permissions to SageMaker, Amazon Elastic Container Registry (Amazon ECR), AWS Identity and Access Management (IAM), and AWS CodeBuild. In addition, you need to have a SageMaker domain ready.

Create IAM roles and policies

To build the SageMaker custom image, we used AWS CloudShell, which provides all the packages required for the build. In CloudShell, we used SageMaker Docker Build, a CLI for building Docker images for and in SageMaker Studio. The CLI can create the repository in Amazon ECR and build the container using CodeBuild. To do so, the tool needs an IAM role with the proper permissions. Complete the following steps:

Sign in to the AWS Management Console and open the IAM console.

In the navigation pane on the left, choose Policies.

Create a policy named sm-build-policy with the following permissions:

The permissions provide the ability to utilize the utility in full: create repositories, create a CodeBuild job, use Amazon Simple Storage Service (Amazon S3), and send logs to Amazon CloudWatch.

Create a role named sm-build-role with the following trust policy, and add the policy sm-build-policy that you created earlier:
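As a rough illustration of what the two documents in these steps might contain, the following sketch builds them as Python dictionaries and prints the JSON. It is not the exact policy from this walkthrough. The action lists cover only the four areas named above (ECR repositories, CodeBuild, Amazon S3, and CloudWatch Logs), the resource patterns are assumptions, and the trust policy assumes that CodeBuild is the service that assumes sm-build-role. Your account may need more or fewer permissions.

```python
import json

# Illustrative permissions policy (assumed actions and resource patterns).
BUILD_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {   # Create and run the CodeBuild project that builds the image
            "Effect": "Allow",
            "Action": [
                "codebuild:CreateProject",
                "codebuild:DeleteProject",
                "codebuild:StartBuild",
                "codebuild:BatchGetBuilds",
            ],
            "Resource": "arn:aws:codebuild:*:*:project/*",
        },
        {   # Create the ECR repository and push image layers to it
            "Effect": "Allow",
            "Action": [
                "ecr:CreateRepository",
                "ecr:DescribeRepositories",
                "ecr:BatchCheckLayerAvailability",
                "ecr:InitiateLayerUpload",
                "ecr:UploadLayerPart",
                "ecr:CompleteLayerUpload",
                "ecr:PutImage",
            ],
            "Resource": "arn:aws:ecr:*:*:repository/*",
        },
        {   # Registry login tokens are not scoped to a single repository
            "Effect": "Allow",
            "Action": "ecr:GetAuthorizationToken",
            "Resource": "*",
        },
        {   # Stage the build context in Amazon S3
            "Effect": "Allow",
            "Action": ["s3:CreateBucket", "s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
            "Resource": ["arn:aws:s3:::sagemaker-*", "arn:aws:s3:::sagemaker-*/*"],
        },
        {   # Send build logs to Amazon CloudWatch
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents",
                "logs:GetLogEvents",
            ],
            "Resource": "*",
        },
    ],
}

# Illustrative trust policy (assumes CodeBuild assumes the role).
BUILD_ROLE_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "codebuild.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

print(json.dumps(BUILD_POLICY, indent=2))
print(json.dumps(BUILD_ROLE_TRUST_POLICY, indent=2))
```

You can paste the printed JSON into the policy editor on the IAM console. Tightening the wildcard resources to your own account, Region, and resource names is a sensible next step.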

Now, let’s review the steps in CloudShell.

Create a cuDF Docker image in CloudShell

For our purposes, we needed a Rapids CUDA image, which also includes an ipykernel, so that the image can be used in a SageMaker Studio notebook.

We use an existing CUDA image by RapidsAI that is available in the official Rapids AI Docker hub, and add the ipykernel installation.

In a CloudShell terminal, run the following command:

This will create the Dockerfile that will build our custom Docker image for SageMaker.
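For readers who want to see the shape of such a Dockerfile, here is a hedged sketch that renders one from Python. The base image is deliberately left as a parameter, because the exact Rapids image and tag are not given here. The user name, UID 1000, and GID 100 match the values entered later in the SageMaker console. The helper names `render_dockerfile` and `write_dockerfile` are hypothetical.

```python
from pathlib import Path


def render_dockerfile(base_image: str) -> str:
    """Return Dockerfile text that adds ipykernel and a Studio-compatible user."""
    lines = [
        f"FROM {base_image}",
        "RUN pip install ipykernel && \\",
        "    python -m ipykernel install --sys-prefix && \\",
        "    useradd --create-home --shell /bin/bash --gid 100 --uid 1000 sagemaker-user",
        "USER sagemaker-user",
        "",
    ]
    return "\n".join(lines)


def write_dockerfile(base_image: str, directory: str = ".") -> Path:
    """Write the rendered Dockerfile into `directory` and return its path."""
    path = Path(directory) / "Dockerfile"
    path.write_text(render_dockerfile(base_image))
    return path


# Placeholder only: substitute the Rapids image and tag you intend to use.
print(render_dockerfile("<rapids-image>:<tag>"))
```

Installing ipykernel into the image's own Python environment is what lets SageMaker Studio start a notebook kernel from the container.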

Build and push the image to a repository

As mentioned, we used the SageMaker Docker Build library, which allows data scientists and developers to easily build custom container images. For more information, refer to Using the Amazon SageMaker Studio Image Build CLI to build container images from your Studio notebooks.

The following command uses sm-docker to create an ECR repository (if one doesn’t already exist), build the new Docker image, and push it to that repository:

In case you are missing sm-docker in your CloudShell, run the following code:

On completion, the ECR image URI will be returned.
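The build and install steps can be sketched from Python as follows. This is a sketch under stated assumptions rather than the exact commands used in this walkthrough. The repository name and tag `rapids:v1` are assumptions, `sm-build-role` is the role created earlier, and the helper names are hypothetical. The `sagemaker-studio-image-build` package is the one that provides the `sm-docker` command.

```python
import shutil
import subprocess

# Install command to try when sm-docker is missing from the environment.
INSTALL_HINT = "pip3 install sagemaker-studio-image-build"


def build_command(repository: str, role: str, context: str = ".") -> list:
    """Return the sm-docker invocation as an argument list."""
    return ["sm-docker", "build", context, "--repository", repository, "--role", role]


def run_build(repository: str, role: str) -> subprocess.CompletedProcess:
    """Run the build, or fail with an install hint if sm-docker is not on PATH."""
    if shutil.which("sm-docker") is None:
        raise FileNotFoundError(f"sm-docker not found; try: {INSTALL_HINT}")
    return subprocess.run(build_command(repository, role), check=True)


# Print the command without running it (assumed repository name and tag).
print(" ".join(build_command("rapids:v1", "sm-build-role")))
```

Run `run_build("rapids:v1", "sm-build-role")` from the directory that contains the Dockerfile.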

Create a SageMaker custom image

After you have created a custom Docker image and pushed it to your container repository (Amazon ECR), you can configure SageMaker to use that custom Docker image. Complete the following steps:

On the SageMaker console, choose Images in the navigation pane.

Choose Create image.

Enter the image URI output from the previous section, then choose Next.

For Image name and Image display name, enter rapids.

For Description, enter a description.

For IAM role, choose the proper IAM role for your SageMaker domain.

For EFS mount path, enter /home/sagemaker-user (default).

Expand Advanced configuration.

For User ID, enter 1000.

For Group ID, enter 100.

In the Image type section, select SageMaker Studio Image.

Choose Add kernel.

For Kernel name, enter conda-env-rapids-py.

For Kernel display name, enter rapids.

Choose Submit to create the SageMaker image.
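If you prefer scripting to the console, the same fields map onto three SageMaker API calls. The sketch below only builds the request payloads, so you can inspect them, and applies them with boto3 in a separate function. The app image config name `rapids` is an assumption (the console manages this for you), the description text is a placeholder, and the image URI and role ARN are inputs you supply.

```python
def image_requests(image_uri: str, role_arn: str) -> dict:
    """Build request payloads that mirror the console fields above."""
    return {
        "create_image": {
            "ImageName": "rapids",
            "DisplayName": "rapids",
            "Description": "Rapids cuDF image for SageMaker Studio",  # placeholder
            "RoleArn": role_arn,
        },
        "create_image_version": {
            "ImageName": "rapids",
            "BaseImage": image_uri,  # ECR image URI returned by the build step
        },
        "create_app_image_config": {
            "AppImageConfigName": "rapids",  # assumed name
            "KernelGatewayImageConfig": {
                "KernelSpecs": [
                    {"Name": "conda-env-rapids-py", "DisplayName": "rapids"}
                ],
                "FileSystemConfig": {
                    "MountPath": "/home/sagemaker-user",
                    "DefaultUid": 1000,
                    "DefaultGid": 100,
                },
            },
        },
    }


def apply_image_requests(requests: dict) -> None:
    """Send the payloads to SageMaker; requires boto3 and AWS credentials."""
    import boto3

    sm = boto3.client("sagemaker")
    sm.create_image(**requests["create_image"])
    # The image may need a moment to finish creating before a version is added.
    sm.create_image_version(**requests["create_image_version"])
    sm.create_app_image_config(**requests["create_app_image_config"])
```

Keeping the payloads separate from the API calls makes it easy to review the kernel name and file system settings before anything is created.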

Attach the new image to your SageMaker Studio domain

Now that you have created the custom image, you need to make it available to use by attaching the image to your domain. Complete the following steps:

On the SageMaker console, choose Domains in the navigation pane.

Choose your domain. This step is optional; you can create and attach the custom image directly from the domain and skip this step.

On the domain details page, choose the Environment tab, then choose Attach image.

Select Existing image and select the new image (rapids) from the list.

Choose Next.

Review the custom image configuration and make sure to set Image type as SageMaker Studio Image, as in the previous step, with the same kernel name and kernel display name.

Choose Submit.

The custom image is now available in SageMaker Studio and ready for use.
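The attach step also has an API equivalent, sketched below with boto3's `update_domain`. The domain ID is an input you supply, the app image config name `rapids` is an assumption, and the helper names are hypothetical. The sketch lists only the new image, so add any custom images already attached to your domain to the same list.

```python
def attach_image_request(domain_id: str, image_version: int = 1) -> dict:
    """Build an update_domain payload that attaches the rapids image."""
    return {
        "DomainId": domain_id,
        "DefaultUserSettings": {
            "KernelGatewayAppSettings": {
                "CustomImages": [
                    {
                        "ImageName": "rapids",
                        "ImageVersionNumber": image_version,
                        "AppImageConfigName": "rapids",  # assumed name
                    }
                ]
            }
        },
    }


def attach_image(domain_id: str, image_version: int = 1) -> None:
    """Apply the payload; requires boto3 and AWS credentials."""
    import boto3

    boto3.client("sagemaker").update_domain(
        **attach_image_request(domain_id, image_version)
    )
```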

Create a new notebook with the image

For instructions to launch a new notebook, refer to Launch a custom SageMaker image in Amazon SageMaker Studio. Complete the following steps:

On the SageMaker Studio console, choose Open launcher.

Choose Change environment.

For Image, choose the newly created image, rapids v1.

For Kernel, choose rapids.

For Instance type, choose your instance.

SageMaker Studio lets you customize your computing power by choosing an instance from the AWS accelerated compute, general purpose compute, compute optimized, or memory optimized families. This flexibility allows you to seamlessly transition between CPUs and GPUs, and to scale instance sizes up or down as needed. For our notebook, we used the ml.g4dn.2xlarge instance type to test cuDF performance with a GPU accelerator.

Choose Select.

Select your environment and choose Create notebook, then wait until the notebook kernel becomes ready.

Validate your custom image

To validate that your custom image was launched and cuDF is ready to use, create a new cell, enter import cudf, and run it.
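A slightly fuller check than a bare import is to run one small operation on the GPU. The following sketch reports the outcome instead of raising when cuDF is unavailable. The helper name `check_cudf` is hypothetical.

```python
def check_cudf():
    """Return (ok, message) describing whether cuDF imports and runs."""
    try:
        import cudf
    except ImportError as exc:
        return False, f"cuDF is not importable: {exc}"
    # Run a tiny computation to confirm the GPU path works end to end.
    total = cudf.Series([1, 2, 3]).sum()
    return True, f"cuDF {cudf.__version__} is working; 1 + 2 + 3 = {int(total)}"


ok, message = check_cudf()
print(message)
```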

Clean up

Power off the Jupyter instance running the test notebook in SageMaker Studio by choosing Running Terminals and Kernels and powering off the running instance.

Runtime comparison results

We conducted a runtime comparison of our code using both CPU and GPU on SageMaker g4dn.2xlarge instances, with a time complexity of O(N). The results, as shown in the following figure, reveal the efficiency of using GPUs over CPUs.

The main advantage of GPUs lies in their ability to perform parallel processing. As we increase the value of N, the runtime on CPUs increases at a rate of 3N. On the other hand, with GPUs, the rate of increase can be described as 2N, as illustrated in the preceding figure. The larger the problem size, the more efficient the GPU becomes. In our case, using a GPU was at least 20 times faster than using a CPU. This highlights the growing importance of GPUs in modern computing, especially for tasks that require large amounts of data to be processed quickly.
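One simple way to produce this kind of comparison is to time the same function once with pandas and once with cuDF. The sketch below is a generic timing helper, not the benchmark used for the results above, and the function names are hypothetical. Taking the fastest of several runs reduces the effect of one-time costs such as GPU initialization on the first call.

```python
import time


def best_runtime(fn, repeats: int = 3) -> float:
    """Return the fastest wall-clock time, in seconds, over several runs of fn()."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return min(timings)


def speedup(cpu_seconds: float, gpu_seconds: float) -> float:
    """Return how many times faster the GPU run was than the CPU run."""
    return cpu_seconds / gpu_seconds


# Stand-in workload; replace with your pandas- and cuDF-based functions.
elapsed = best_runtime(lambda: sum(range(100_000)))
print(f"best of 3: {elapsed:.6f} s")
```

Repeating the measurement for increasing problem sizes shows how the gap between CPU and GPU changes with N.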

With SageMaker GPU instances, VirtuSwap is able to dramatically increase the dimensionality of the solved problems and find solutions faster.

Conclusion

In this post, we showed how VirtuSwap customized SageMaker Studio by using a custom image to solve a complex problem. With the ability to easily change the run environment and switch between different instances, sizes, and kernels, VirtuSwap was able to experiment quickly, speed up the runtime by 15x, and deliver a scalable solution.

As a next step, VirtuSwap is considering broadening their usage of SageMaker and running their processing in Amazon SageMaker Processing to process the massive data they’re collecting from various blockchains into their platform.

About the Authors

Adir Sharabi is a Principal Solutions Architect with Amazon Web Services. He works with AWS customers to help them architect secure, resilient, scalable, and high-performance applications in the cloud. He is also passionate about data and helping customers get the most out of it.

Omer Haim is a Senior Startup Solutions Architect at Amazon Web Services. He helps startups with their cloud journey, and is passionate about containers and ML. In his spare time, Omer likes to travel, and occasionally game with his son.

Dmitry Zadorozhny is a data analyst at VirtuSwap (virtuswap.io). He is responsible for data mining, processing, and storage, as well as integrating cloud services such as AWS. Prior to joining VirtuSwap, he worked in the data science field and was an analytics ambassador lead at the dYdX Foundation. Dima has an M.Sc. in Computer Science and enjoys playing computer games in his spare time.

Fuad Babaev serves as a Data Science Specialist at VirtuSwap (virtuswap.io). He brings expertise in tackling complex optimization challenges, crafting simulations, and architecting models for trade processes. Outside of his professional career, Fuad has a passion for playing chess.
