Twilio is a customer engagement platform that drives real-time, personalized experiences for leading brands. Twilio has democratized communications channels like voice, text, chat, and video by virtualizing the world’s telecommunications infrastructure through APIs that are simple enough for any developer to use, yet robust enough to power the world’s most demanding applications.

Twilio supports omnichannel engagement across phone, SMS, online chat, and email. At every stage of the customer journey, Twilio Messaging lets businesses reach their customers on their preferred application. With the programmable messaging API, businesses can proactively inform customers about account activity, purchase confirmations, and shipping notifications.

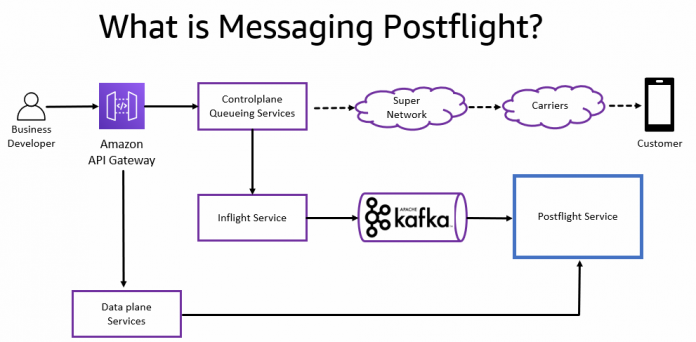

The Twilio Messaging data pipeline lifecycle for messages is split into two states:

Inflight – Throughout the lifecycle of a message, an event must transition through various inflight states (such as queued, sent or delivered) until it’s complete. Due to the asynchronous nature of the Twilio Messaging service, the inflight states must be managed and persisted somewhere in the system.

Postflight – Upon reaching a postflight state, the message record must be persisted to long-term storage for later retrieval and analytical processing.
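The split between the two states can be sketched in a few lines of Python. This is only an illustration, not Twilio's implementation: the in-memory dictionaries stand in for real data stores, the `route` function and the `finalized` status label are hypothetical, and the inflight statuses are the examples named above.

```python
# Example inflight statuses taken from the text above.
INFLIGHT_STATUSES = {"queued", "sent", "delivered"}
# Assumed label for a completed message; the real postflight states are not listed.
FINAL_STATUS = "finalized"


def route(event, inflight_store, postflight_store):
    """Persist an event in the store that matches its lifecycle stage."""
    message_id = event["message_id"]
    status = event["status"]
    if status == FINAL_STATUS:
        # Merge whatever was tracked inflight into the long-term record.
        record = inflight_store.pop(message_id, {})
        record.update(event)
        postflight_store[message_id] = record
        return "postflight"
    if status in INFLIGHT_STATUSES:
        # Keep the latest inflight state until the message completes.
        inflight_store.setdefault(message_id, {}).update(event)
        return "inflight"
    raise ValueError(f"unknown status: {status!r}")
```

Calling `route` with a `queued` event and then a `finalized` event for the same `message_id` leaves the inflight dictionary empty and a single merged record in the postflight dictionary.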

Historically, all Twilio Messaging data was placed in the same data store regardless of where the message was in its lifecycle.

Twilio Messaging Postflight is a backend system at Twilio that powers the data plane for all messaging traffic (SMS, MMS, and OTT such as WhatsApp) handled by Twilio. It allows both users and internal systems to query, update, and delete message log data through the Postflight API. The service is responsible for long-term storage and retrieval of finalized messages, diverse query patterns, privacy, and custom data retention.

In this post, we discuss how Twilio used Amazon DynamoDB to modernize its Messaging Postflight service data store. DynamoDB is a fully managed, serverless, key-value NoSQL database designed to run high-performance applications at any scale. DynamoDB offers built-in security, continuous backups, automated multi-Region replication, in-memory caching, and data import/export tools.

This modernization removed the operational complexity and over-provisioning challenges Twilio had experienced with their legacy architecture. After the modernization, Twilio saw processing delay incidents decrease from approximately one per month to zero. With the legacy MySQL data store, Twilio experienced a delay of 10–19 hours during their 2020 Black Friday and Cyber Monday peak period. With DynamoDB, Twilio experienced zero delays during the same peak period in 2021. Twilio has now decommissioned their entire 90-node MySQL database, resulting in a $2.5 million annualized cost savings.

Legacy architecture overview

The Postflight data store serves access patterns spanning tens of billions of records associated with the top Twilio accounts. The legacy architecture handled 450 million messages per day, stored 260 TB of data, and peaked at 40,000 write requests per second (RPS) and 12,000 read RPS.
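A quick back-of-the-envelope calculation shows how far the peak sits above the daily average. The sketch below assumes, for illustration only, that each message corresponds to one write; the actual ratio of writes to messages is not stated here.

```python
SECONDS_PER_DAY = 24 * 60 * 60

messages_per_day = 450_000_000      # daily volume from the text
peak_writes_per_second = 40_000     # peak write rate from the text

# Assumption: one write per message, so the daily volume approximates the average write rate.
average_per_second = messages_per_day / SECONDS_PER_DAY
peak_to_average = peak_writes_per_second / average_per_second

print(f"average: {average_per_second:,.0f} messages/s")
print(f"peak-to-average ratio: {peak_to_average:.1f}x")
```

Under that assumption the average is roughly 5,200 messages per second, so a cluster sized for the peak carries several times more capacity than it needs for most of the day.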

Twilio Messaging Postflight had been using a sharded MySQL cluster architecture to process and store 260 TB of current and historical message log data. Although the architecture is scalable in theory, from a practical standpoint it wasn’t nimble enough to seamlessly accommodate spikes in customer traffic.

The legacy architecture had many problems, especially at scale. Historical challenges included the operational complexity of managing the 90-node MySQL cluster, high write contention during traffic spikes, data delays, over-provisioning of capacity during off-peak hours, and noisy neighbor problems, where traffic spikes from large customers caused write contention and degraded MySQL database availability.

The legacy data store had the following attributes and requirements:

Writing into one MySQL table, with each record less than 1 KB. Data is ingested into the MySQL database from the Kafka messaging bus.

Regionalization of the MySQL database involves creating new clusters in new Regions.

Write traffic ranges from a thousand to tens of thousands of writes per second and is spiky.

Data is retained in the database for several months.

A minimum of 1 million active customers.

Data is expected to be accessible to customers within a few minutes.

85 percent write traffic and 15 percent read traffic.

Each shard in the MySQL cluster consists of one primary and three replicas.

Account-based sharding.

The MySQL cluster runs on several Amazon Elastic Compute Cloud (Amazon EC2) I3en instances.

The MySQL database currently contains approximately 100 billion records.

The Twilio Messaging data pipeline involves messages written to an Apache Kafka topic. An intermediate service transforms the messages, and another service ingests the message into the MySQL database.

The following figure illustrates the legacy architecture.

Twilio faced the following challenges regarding scaling and maintaining their legacy self-managed MySQL data store:

The sharded MySQL design consisted of multiple self-managed clusters running on Amazon EC2.

Account-based sharding was sensitive to spikes in traffic from large customers, especially noisy neighbors.

Shard splitting is time consuming and risky, considering its impact on live traffic.

Large spikes in customer traffic require throttling of processing speed for the whole shard to prevent database failure. This can cause data delays for all customers on the shard, an increase in support tickets, and loss of customer trust.

Provisioning database clusters for peak load is inefficient because the capacity is lightly used most of the time.
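The noisy neighbor effect of account-based sharding can be illustrated with a small simulation. The sketch below is hypothetical: the shard count, the hash-based mapping, and the account IDs are assumptions made for the example, and the legacy system may have assigned accounts to shards differently.

```python
import hashlib
from collections import Counter
from typing import Dict

NUM_SHARDS = 8  # hypothetical shard count for the example


def shard_for_account(account_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map an account to one shard, so all of its writes land on the same shard."""
    digest = hashlib.sha256(account_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def writes_per_shard(traffic: Dict[str, int]) -> Counter:
    """Sum the writes per second that each shard receives."""
    load = Counter()
    for account_id, writes in traffic.items():
        load[shard_for_account(account_id)] += writes
    return load


# 1,000 small accounts at 10 writes/s each, plus one large account that spikes.
traffic = {f"AC{i:04d}": 10 for i in range(1000)}
traffic["AC_big"] = 30_000

load = writes_per_shard(traffic)
hot_shard = shard_for_account("AC_big")
print(f"hot shard {hot_shard} receives {load[hot_shard]:,} writes/s")
```

Because every write for an account goes to a single shard, the spike from `AC_big` cannot be spread out, and the small accounts that share its shard are throttled along with it.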

In the following sections, we describe the design considerations that led to choosing DynamoDB as the storage layer, and the steps involved in building, scaling, and migrating the data processing pipeline to DynamoDB.

To help identify the right purpose-built AWS database, AWS Professional Services reviewed the access pattern of the Twilio Postflight workload and recommended DynamoDB as a good fit based on the use case. AWS Professional Services is a global team of experts that can help you realize your desired business outcomes when using the AWS Cloud.

Solution overview

Twilio Messaging chose DynamoDB for the following reasons:

The Postflight query patterns can be transformed to range queries in an ordered key-value store.

DynamoDB supports low-latency lookups and range queries that meet Twilio’s latency requirement of hundreds of milliseconds.

DynamoDB provides predictable performance with seamless scalability. While a single DynamoDB partition can provide 3,000 read capacity units (RCUs) and 1,000 write capacity units (WCUs), with a good partition key design maximum throughput is effectively unlimited through horizontal scaling. See Scaling DynamoDB: How partitions, hot keys, and split for heat impact performance (Part 1: Loading) to learn more.

DynamoDB has auto scaling features that dynamically adjust provisioned throughput capacity based on user traffic, so Twilio doesn’t have to over-provision for peak workloads as it did with the legacy MySQL database.

DynamoDB is serverless and has no operational complexities compared to self-managed MySQL. With DynamoDB you don’t have to worry about hardware provisioning, setup and configuration, replication, software patching, or cluster scaling.
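To make the range query idea concrete, the following sketch builds the parameters for a DynamoDB `Query` request that fetches one account's messages within a time window. The table name `message_log` and the key attributes `account_id` and `date_sent` are hypothetical; the actual Postflight schema is not described in this post.

```python
def build_range_query(account_id, start_iso, end_iso, limit=50):
    """Build Query parameters for one partition key and a sort key range."""
    return {
        "TableName": "message_log",  # hypothetical table name
        # Equality on the partition key, range condition on the sort key.
        "KeyConditionExpression": (
            "account_id = :acct AND date_sent BETWEEN :start AND :end"
        ),
        "ExpressionAttributeValues": {
            ":acct": {"S": account_id},
            ":start": {"S": start_iso},
            ":end": {"S": end_iso},
        },
        "ScanIndexForward": False,  # return the newest items first
        "Limit": limit,
    }


params = build_range_query(
    "AC123", "2021-11-26T00:00:00Z", "2021-11-30T00:00:00Z"
)
```

The resulting dictionary can be passed to the low-level boto3 client, for example `boto3.client("dynamodb").query(**params)`. Storing timestamps as ISO 8601 strings in a single format and time zone keeps them in chronological order under string comparison, which is what makes the `BETWEEN` condition behave as a time range.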

To ensure DynamoDB would meet Twilio’s requirements as a replacement database for the legacy MySQL cluster, certain success criteria had to be met:

Ensure write traffic can be scaled to 100,000 requests per second (RPS) to the base table with latency in the tens of milliseconds

Ensure the range queries can be served with a reasonable latency for 1,000 RPS

Ensure writes to the base table replicate to the index with a lag of 1 second or less
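The write target can be related to the per-partition limit mentioned earlier with simple arithmetic. This sketch assumes standard (non-transactional) writes, items of at most 1 KB, and perfectly even key distribution, so the result is a lower bound rather than a sizing recommendation.

```python
import math

WCU_PER_PARTITION = 1_000            # per-partition write limit cited above
TARGET_WRITES_PER_SECOND = 100_000   # success criterion for the base table
ITEM_SIZE_KB = 1                     # upper bound: each record is under 1 KB


def wcus_per_write(item_size_kb: float) -> int:
    """A standard write consumes 1 WCU per 1 KB of item size, rounded up."""
    return max(1, math.ceil(item_size_kb))


required_wcus = TARGET_WRITES_PER_SECOND * wcus_per_write(ITEM_SIZE_KB)
min_partitions = math.ceil(required_wcus / WCU_PER_PARTITION)

print(f"required WCUs: {required_wcus:,}")
print(f"minimum partitions with even spread: {min_partitions}")
```

With these numbers, the writes have to be spread over at least 100 partitions, which is why the partition key design matters more than any single-partition limit.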

Twilio Messaging’s lead architect, with help from AWS Professional Services, performed a load test to ensure DynamoDB met Twilio’s performance and latency requirements. AWS Professional Services used Locust, an open-source load testing tool, to build a test environment that generated synthetic random workloads from multiple applications writing into DynamoDB and scaled up the write traffic quickly.
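The data generation side of such a load test might look like the following sketch. It uses only the Python standard library and does not use the Locust API; the field names, value ranges, and the `synthetic_records` helper are hypothetical and are not taken from the actual test harness.

```python
import random
import uuid
from datetime import datetime, timedelta, timezone


def synthetic_records(count, num_accounts=1000, seed=None):
    """Yield random message-log records, each well under 1 KB."""
    rng = random.Random(seed)  # seedable so that a test run can be repeated
    base = datetime(2021, 11, 26, tzinfo=timezone.utc)
    for _ in range(count):
        sent_at = base + timedelta(seconds=rng.randrange(86_400))
        yield {
            "account_id": f"AC{rng.randrange(num_accounts):06d}",
            "message_id": uuid.UUID(int=rng.getrandbits(128)).hex,
            "date_sent": sent_at.isoformat(),
            "status": rng.choice(["queued", "sent", "delivered"]),
            "body": "x" * rng.randrange(1, 160),
        }


for record in synthetic_records(3, seed=42):
    print(record["account_id"], record["date_sent"], record["status"])
```

In a load test, each yielded record would be written to the table from a load-generating task, and the number of concurrent tasks would be ramped up to reach the target write rate.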

AWS Professional Services reviewed the DynamoDB data model (schema design) derived from the legacy MySQL data structure to ensure it can scale seamlessly to peak traffic requirements without reaching per-partition throughput limits.

The partition key design supports KeyConditions queries through range lookups and uses synthetic partition key suffixes to protect against write hotspots. Sharding using calculated suffixes is another recommendation. By default, every partition in a DynamoDB table will strive to deliver the full capacity of 3,000 RCU and 1,000 WCU. If a workload has a sort key that isn’t monotonically increasing, split for heat can provide additional capacity to a single partition key without write sharding. In other cases, write sharding can be used to provide additional capacity for low-cardinality partition keys.
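Write sharding with calculated suffixes can be sketched as follows. The key format `account_id#suffix`, the suffix count, and the function names are assumptions made for illustration; the actual key design used by Twilio is not shown in this post.

```python
import hashlib

NUM_SUFFIXES = 10  # hypothetical number of shards per logical key


def sharded_partition_key(account_id, message_id, num_suffixes=NUM_SUFFIXES):
    """Derive a stable suffix from the message ID and append it to the account ID."""
    digest = hashlib.sha256(message_id.encode("utf-8")).digest()
    suffix = int.from_bytes(digest[:4], "big") % num_suffixes
    return f"{account_id}#{suffix}"


def all_partition_keys(account_id, num_suffixes=NUM_SUFFIXES):
    """List every sharded key for an account; reads must query each of them."""
    return [f"{account_id}#{s}" for s in range(num_suffixes)]


key = sharded_partition_key("AC123", "SM0001")
print(key)
print(all_partition_keys("AC123"))
```

Because the suffix is calculated from the message ID rather than chosen at random, a lookup for a known message can recompute its partition key directly. A range query across the whole account has to fan out over every key returned by `all_partition_keys` and merge the results, which is the read-side cost of spreading writes.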

AWS Professional Services confirmed through the load test that DynamoDB can scale to meet Twilio’s requirements. AWS and Twilio reviewed the load test results and confirmed the results met Twilio’s success criteria.

The following figure shows the new solution, in which DynamoDB replaces Twilio Messaging Postflight’s multi-node, self-managed MySQL data store running on EC2 I3en instances.

Conclusion

In this post, you saw how Twilio modernized their 90-node MySQL cluster with DynamoDB and reduced their data processing delays to zero, thereby removing the operational complexity and over-provisioning challenges and significantly reducing costs.

Modernize your existing and new workloads to gain the benefits of performance at scale using a cost-effective, fully managed, and reliable datastore by using an AWS purpose-built database. Consider using one of the three options available to help you through your migration: a self-service migration using a purpose-built database, AWS Professional Services and partners, or the Database Freedom migration program.

About the Authors

ND Ngoka is a Sr. Solutions Architect with AWS. He has over 18 years of experience in the storage field. He helps AWS customers build resilient and scalable solutions in the cloud. ND enjoys gardening, hiking, and exploring new places whenever he gets a chance.

Nikolai Kolesnikov is a Principal Data Architect and has over 20 years of experience in data engineering, architecture, and building distributed applications. He helps AWS customers build highly scalable applications using Amazon DynamoDB and Amazon Keyspaces. He also leads Amazon Keyspaces ProServe migration engagements.

Greg Krumm is a Sr. DynamoDB Specialist SA with AWS. He helps AWS customers architect highly available, scalable, and performant systems on Amazon DynamoDB. In his spare time, Greg likes spending time with family and hiking in the forests around Portland, OR.

Vijay Bhat is a Principal Software Engineer at Twilio, working on core data backend systems powering the Communications Platform. He has deep industry experience building high performance data systems for machine learning and analytics applications at scale. Prior to Twilio, he led teams building marketing automation and incentive program systems at Lyft, and also led consulting engagements at Facebook, Intuit and Capital One. Outside of work, he enjoys playing with his two young daughters, traveling and playing guitar.
