When you’re migrating from a MongoDB or Amazon DocumentDB (with MongoDB compatibility) database using AWS Database Migration Service (AWS DMS) in full load mode, a primary consideration is how quickly you can migrate the data. By default, AWS DMS unloads each collection with a single thread. For a large database, this single-threaded approach can make the migration slow, and slow performance can affect your entire migration experience.

We’re excited to announce a new feature of AWS DMS that automatically performs a segmented (multi-threaded) unload from a MongoDB or Amazon DocumentDB collection to any supported target for a full load migration. It complements the existing range segmentation feature, which performs a segmented unload based on range boundaries that you provide. Like range segmentation, auto segmentation can make a migration up to three times faster.

In this post, we show you how to use auto segmentation and range segmentation to migrate data from MongoDB and Amazon DocumentDB source endpoints in AWS DMS.

Prerequisites

You should have a basic understanding of how AWS DMS works. If you’re just getting started with DMS, review the AWS DMS documentation. You should also have a MongoDB or an Amazon DocumentDB source cluster and a supported AWS DMS target to perform a migration.

Using auto segmentation with AWS DMS

AWS DMS auto segmentation for MongoDB and Amazon DocumentDB loads data in parallel during the full load phase of the migration, based on the segmentation parameters that you provide. Auto segmentation performs best when document sizes in the collection are uniformly distributed rather than skewed. When the dataset is skewed and document sizes vary greatly within the collection, range segmentation might be the better choice.

With auto segmentation, you can provision multiple threads, each responsible for transferring one chunk (segment) of data from the source collection to the target. AWS DMS defines each segment, a set of documents ordered by ObjectId, by its lower boundary. To find these boundaries, AWS DMS counts the documents in the collection, sorts the collection by ObjectId, and performs paginated skips through the sorted documents. You can specify the maximum number of documents to skip in each pagination step.
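The boundary search can be modeled in a few lines of Python. This is a simplified sketch, not AWS DMS source code: it assumes the _id values are already sorted in memory, that every segment holds ceil(count / partitions) documents, and that each skip advances the cursor by at most max_skip positions (the role played by max-records-skip-per-page). The function name segment_lower_bounds is hypothetical.

```python
import math
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def segment_lower_bounds(
    sorted_ids: Sequence[T], num_partitions: int, max_skip: int
) -> Tuple[List[T], int]:
    """Return each segment's lower _id boundary and the number of skip calls used.

    Simplified model, not AWS DMS code: ids are pre-sorted, each segment holds
    ceil(count / num_partitions) documents, and one skip moves at most max_skip.
    """
    count = len(sorted_ids)  # stands in for the collection document count
    if count == 0:
        return [], 0
    per_segment = math.ceil(count / num_partitions)
    bounds = [sorted_ids[0]]  # the first segment starts at the minimum _id
    position, skip_calls = 0, 0
    while position + per_segment < count:
        target = position + per_segment  # first document of the next segment
        while position < target:
            position += min(max_skip, target - position)  # one paginated skip
            skip_calls += 1
        bounds.append(sorted_ids[position])
    return bounds, skip_calls


if __name__ == "__main__":
    ids = list(range(100, 115))  # 15 hypothetical, already-sorted _id values
    print(segment_lower_bounds(ids, num_partitions=3, max_skip=2))   # ([100, 105, 110], 6)
    print(segment_lower_bounds(ids, num_partitions=3, max_skip=10))  # ([100, 105, 110], 2)
```

Both runs find the same boundaries, but the larger skip limit reaches them in fewer round trips, which illustrates why a higher skip value tends to speed up boundary computation.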

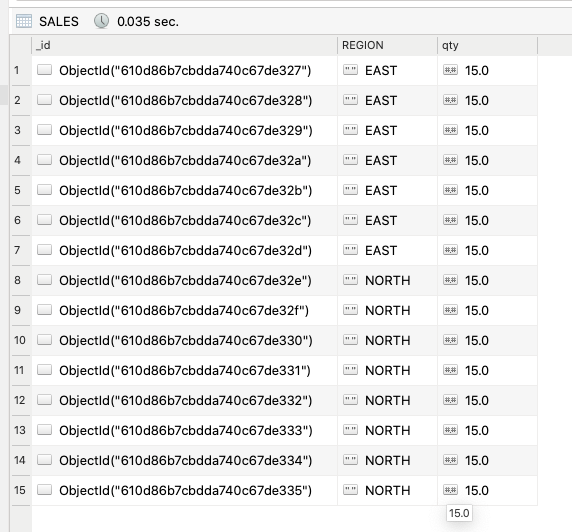

Consider the following SALES collection in the HR database, which contains 15 documents with an ObjectId in the _id field.

The following JSON block shows an example parallel load rule that enables auto segmentation in the AWS DMS table mappings of a full load replication task:

The parallel load rule has the following parameters:

type – Required. Set type to partitions-auto to enable auto segmentation.

number-of-partitions – The total number of partitions (segments) that AWS DMS uses for the migration. Optional; the default is 16, the minimum is 1, and the maximum is 49. The MaxFullLoadSubTasks value in the task settings must be greater than or equal to the number-of-partitions value that you specify. For more information, see Full-load task settings.

collection-count-from-metadata – When set to true, AWS DMS uses collection.estimatedDocumentCount() to fetch the estimated number of documents in the collection from the collection metadata. When set to false, AWS DMS uses db.collection.count(), which returns the number of documents that match an empty query predicate. AWS DMS uses this document count to segment the load across multiple threads. Optional; the default is true.

max-records-skip-per-page – The maximum number of documents to skip at a time during segmentation. For each segment, AWS DMS sorts the collection and iteratively applies paginated skips to find the segment's lower boundary. Optional; the default is 10,000, the minimum is 10, and the maximum is 5,000,000. A higher value generally gives better performance; a lower value is useful when source latency is high or cursors time out.

batch-size – The maximum number of documents that AWS DMS retrieves in each batch of the response from the database. Optional; the default is 0, which means that AWS DMS uses the maximum batch size defined on the source MongoDB or Amazon DocumentDB server to retrieve the documents.
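To keep these limits in one place, the following hypothetical Python helper builds the parallel-load object from the parameters above and rejects out-of-range values before you paste the JSON into a table mapping. It only checks the documented ranges and the MaxFullLoadSubTasks constraint; it does not call AWS. Writing collection-count-from-metadata as the string "true"/"false" is an assumption, so confirm the expected value type in the AWS DMS documentation.

```python
import json
from typing import Any, Dict, Optional


def auto_parallel_load(
    number_of_partitions: int = 16,
    collection_count_from_metadata: bool = True,
    max_records_skip_per_page: int = 10000,
    batch_size: int = 0,
    max_full_load_subtasks: Optional[int] = None,
) -> Dict[str, Any]:
    """Build an auto segmentation parallel-load object, enforcing the documented limits."""
    if not 1 <= number_of_partitions <= 49:
        raise ValueError("number-of-partitions must be between 1 and 49")
    if not 10 <= max_records_skip_per_page <= 5_000_000:
        raise ValueError("max-records-skip-per-page must be between 10 and 5000000")
    # MaxFullLoadSubTasks (task settings) must be >= number-of-partitions.
    if max_full_load_subtasks is not None and max_full_load_subtasks < number_of_partitions:
        raise ValueError("MaxFullLoadSubTasks must be >= number-of-partitions")
    return {
        "type": "partitions-auto",  # required to enable auto segmentation
        "number-of-partitions": number_of_partitions,
        # Assumption: written as the string "true"/"false"; confirm the expected type.
        "collection-count-from-metadata": str(collection_count_from_metadata).lower(),
        "max-records-skip-per-page": max_records_skip_per_page,
        "batch-size": batch_size,  # 0 means use the source server's maximum batch size
    }


if __name__ == "__main__":
    print(json.dumps(auto_parallel_load(number_of_partitions=3, max_full_load_subtasks=8), indent=2))
```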

The following is an example table mapping for migrating the preceding SALES collection in the HR database:

The preceding configuration migrates the example data in three segments (three threads in parallel):

The first segment migrates the five documents with _id >= 610d86b7cbdda740c67de327 and _id < 610d86b7cbdda740c67de32c.

The second segment migrates the five documents with _id >= 610d86b7cbdda740c67de32c and _id < 610d86b7cbdda740c67de331.

The third segment migrates the five documents with _id >= 610d86b7cbdda740c67de331.
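One way to picture these three segments is as half-open _id ranges, where each segment stops just before the next segment's lower boundary. The sketch below assumes the 15 ObjectIds are consecutive (consistent with the boundaries above), compares them as lowercase hex strings instead of bson.ObjectId values, and uses hypothetical helper names; it doesn't reproduce the queries that AWS DMS actually issues.

```python
from typing import Dict, List

# Lower boundaries from the SALES example.
BOUNDS = [
    "610d86b7cbdda740c67de327",
    "610d86b7cbdda740c67de32c",
    "610d86b7cbdda740c67de331",
]


def segment_filters(bounds: List[str]) -> List[Dict[str, Dict[str, str]]]:
    """Turn sorted lower boundaries into half-open, MongoDB-style _id filters."""
    filters = []
    for i, lower in enumerate(bounds):
        condition = {"$gte": lower}
        if i + 1 < len(bounds):
            condition["$lt"] = bounds[i + 1]  # stop before the next segment begins
        filters.append({"_id": condition})
    return filters


def hypothetical_ids(first_hex: str, count: int) -> List[str]:
    """Consecutive 24-digit hex ids; assumed to mirror the 15-document collection."""
    start = int(first_hex, 16)
    return [format(start + n, "024x") for n in range(count)]


def matches(doc_id: str, condition: Dict[str, str]) -> bool:
    # Equal-length lowercase hex strings sort in the same order as ObjectId bytes.
    return condition["$gte"] <= doc_id and (
        "$lt" not in condition or doc_id < condition["$lt"]
    )


if __name__ == "__main__":
    ids = hypothetical_ids(BOUNDS[0], 15)
    for f in segment_filters(BOUNDS):
        print(f, sum(matches(doc_id, f["_id"]) for doc_id in ids))  # 5 per segment
```

Because the last segment has no upper bound, any document above the final boundary still lands in exactly one segment.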

After specifying the table mappings, create and start a migration task to migrate the data to the target. For more information, see Creating a task.
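If you create tasks programmatically, the following sketch assembles a table mapping and task settings for the SALES example and passes them to the boto3 DMS client's create_replication_task and start_replication_task calls. The ARNs, task identifier, and rule names are placeholders, and only FullLoadSettings.MaxFullLoadSubTasks is supplied, on the assumption that AWS DMS fills in defaults for the remaining settings. boto3 is imported inside create_and_start so the builder can be tried without AWS credentials.

```python
import json
from typing import Any, Dict


def auto_segmentation_task_args(
    task_id: str,
    source_arn: str,
    target_arn: str,
    instance_arn: str,
    database: str = "HR",
    collection: str = "SALES",
    partitions: int = 3,
) -> Dict[str, Any]:
    """Keyword arguments for boto3's DMS create_replication_task (placeholder values)."""
    locator = {"schema-name": database, "table-name": collection}
    table_mappings = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-collection",
                "object-locator": locator,
                "rule-action": "include",
            },
            {
                "rule-type": "table-settings",
                "rule-id": "2",
                "rule-name": "auto-segment-collection",
                "object-locator": locator,
                "parallel-load": {"type": "partitions-auto", "number-of-partitions": partitions},
            },
        ]
    }
    # MaxFullLoadSubTasks must be >= number-of-partitions; keep at least the default of 8.
    task_settings = {"FullLoadSettings": {"MaxFullLoadSubTasks": max(partitions, 8)}}
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": "full-load",
        "TableMappings": json.dumps(table_mappings),
        "ReplicationTaskSettings": json.dumps(task_settings),
    }


def create_and_start(args: Dict[str, Any]) -> str:
    import boto3  # imported lazily so the builder above runs without AWS dependencies

    dms = boto3.client("dms")
    arn = dms.create_replication_task(**args)["ReplicationTask"]["ReplicationTaskArn"]
    # A new task must reach the "ready" state before it can be started.
    dms.get_waiter("replication_task_ready").wait(
        Filters=[{"Name": "replication-task-arn", "Values": [arn]}]
    )
    dms.start_replication_task(ReplicationTaskArn=arn, StartReplicationTaskType="start-replication")
    return arn
```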

Limitations of auto segmentation

Auto segmentation has the following limitations:

Computing the segment boundaries requires AWS DMS to sort the collection by the primary key _id and paginate through it, which adds overhead.

Because AWS DMS uses the minimum _id of each segment as its boundary and paginates from it, changing the minimum _id in the collection during segment boundary computation might lead to duplicate row errors or data loss. Make sure that the lowest _id in the collection remains constant.

For a full list of limitations, see Segmenting MongoDB collections and migrating in parallel and Segmenting Amazon DocumentDB collections and migrating in parallel.

Using range segmentation with AWS DMS

With range segmentation, you specify the number of segments and their boundaries in the table mapping rule of the replication task. Range segmentation gives you more flexibility than auto segmentation in how segments are created. If document sizes in the collection aren’t uniformly distributed, or if the dataset is skewed, range segmentation lets you define segments that carry different amounts of data. Range segmentation also supports partitions based on multiple fields, which helps when the values of two fields in combination follow a pattern that is easier to segment. For MongoDB and Amazon DocumentDB source endpoints, the following field types are supported as partition fields:

ObjectId

String

Integer (INT32 and INT64)

Double

The following JSON block is an example parallel load rule in the table mappings when creating a replication task to enable range segmentation:
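As a hypothetical sketch of such a rule, the following Python function builds a table-settings rule with type ranges, a columns list of partition fields, and a boundaries list with one value per column. It assumes that N boundaries produce N + 1 segments, with the last segment covering everything above the final boundary, and that boundaries are listed in ascending order; verify both against the AWS DMS documentation. The database, collection, and boundary values are illustrative.

```python
import json
from typing import Any, Dict, Sequence, Union

Scalar = Union[str, int, float]  # ObjectId (as hex string), String, Integer, Double


def range_table_settings(
    database: str,
    collection: str,
    columns: Sequence[str],
    boundaries: Sequence[Sequence[Scalar]],
    rule_id: str = "2",
) -> Dict[str, Any]:
    """Build a table-settings rule that splits a collection on explicit ranges."""
    for row in boundaries:
        if len(row) != len(columns):
            raise ValueError("each boundary needs one value per partition column")
    rows = [tuple(row) for row in boundaries]
    # Assumption: boundaries must be strictly ascending (compared column by column).
    if any(not a < b for a, b in zip(rows, rows[1:])):
        raise ValueError("boundaries must be in ascending order")
    return {
        "rule-type": "table-settings",
        "rule-id": rule_id,
        "rule-name": rule_id,
        "object-locator": {"schema-name": database, "table-name": collection},
        "parallel-load": {
            "type": "ranges",
            "columns": list(columns),
            "boundaries": [list(row) for row in boundaries],
        },
    }


if __name__ == "__main__":
    # Two hypothetical boundaries on _id, which under the N + 1 assumption give three segments.
    rule = range_table_settings(
        "HR", "SALES", ["_id"],
        [["610d86b7cbdda740c67de329"], ["610d86b7cbdda740c67de330"]],
    )
    print(json.dumps(rule, indent=2))
```

Because you choose each boundary, you can place them closer together where documents are large or dense and farther apart where they are sparse; for a multi-field partition, pass several column names and one value per column in every boundary.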
