This post is targeted at technology and IT delivery leaders who are at the crossroads of application and database modernization and are looking for options and advice on how to get this complex database technology transformation right.

A database, the technology for storing and managing data, is an essential element of almost every application in every industry. As technology has advanced, the volume and variety of data being produced have grown immensely. This has compelled database technology to evolve rapidly to ingest and process this growing data. AWS provides a wide range of database technology options to address these needs. Modernizing a legacy database can go smoothly if approached correctly.

In this post, we discuss the modernization pathways, AWS services and tools available, delivery options, and considerations to get it right.

The case for modernization

Almost any application collects, stores, retrieves, and manages data in some sort of database. Traditionally, this is most often a relational database management system (RDBMS) on a dedicated server. However, we’ve seen many customers face a few key issues in traditional legacy setups, such as the following:

High license and hardware cost

License compliance constraints

New sources and types of data (such as streaming or unstructured data)

Scalability, performance, and global expansion needs

Application modernization to gain cloud-native agility and speed of innovation

Depending on the modernization path outlined in this post, the business and technology gains of modernization include the following:

Break free from commercial licensing and use open-source compatible databases with global scale capabilities

Remove the undifferentiated heavy lifting of self-managing database servers and move to managed database offerings

Unlock the value of data, and make it accessible across application areas and organizations such as analytics, data lakes, business intelligence (BI), machine learning (ML), and more

Enable decoupled architectures (microservices, event-driven), allowing faster development and deployment, and higher speed to market

Use highly scalable purpose-built databases that are appropriate for non-relational and streaming data

Database modernization patterns

Once you decide on database modernization, you may wonder: where do I start?

The first step is to understand which modernization approach is right for you. In this section, we list the database modernization pathways that are available. The choice of modernization pathway depends on the complexity of use cases, technical maturity of internal organizations, desired speed, and commercial considerations. It’s also a fine balance between priority outcomes and the effort involved.

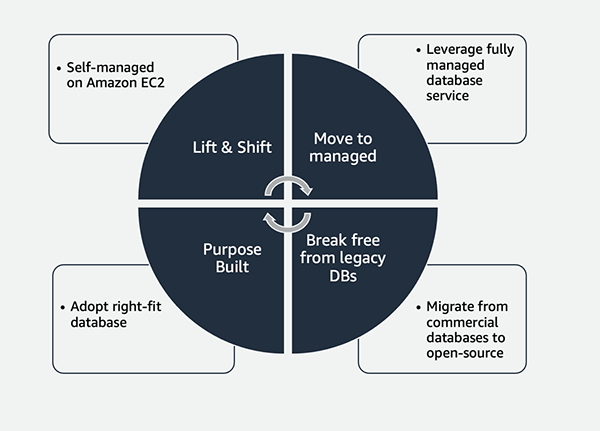

There are four common patterns for database modernization:

Lift and shift – Move a database server to the AWS Cloud with as few changes as possible:

You can host a database on Amazon Elastic Compute Cloud (Amazon EC2), with a plan for migration to Amazon Aurora or Amazon Relational Database Service (Amazon RDS) later on.

The database runs exactly as it did on premises with no impact (except the endpoint change) for the application that uses it.

This can speed up data center exits, but yields fewer gains because it still leaves you with the licensing from the database vendor.

You also still need to do a lot of heavy lifting on database installation, maintenance, patching, backup, and so on.

This approach also leaves you with the technical constraints of legacy databases discussed earlier.

Move to managed (homogeneous migration) – Move to the same DB engine, but a managed DB instance:

You don’t have to make changes to the DB schema, data types, and table structure. For example, on-premises PostgreSQL to Aurora, or self-hosted SQL Server or Oracle on Amazon EC2 to Amazon RDS for SQL Server or Amazon RDS for Oracle, respectively.

You can use the automated backups, patching, failover, and recovery.

You can achieve gains in productivity through reduced effort in managing the database, better price, and flexibility with minimal to no changes to the upstream applications.

Break free from legacy databases (heterogeneous migration) – Migrate from a commercial engine (Microsoft SQL Server or Oracle) to Aurora:

Breaking free from legacy databases allows you to move away from complex vendor licensing and switch to a pay-as-you-go model.

This also establishes a modern data platform, upon which customers can develop a modern data strategy.

You get enterprise-grade performance, high scalability, and cost efficiency with Amazon Aurora PostgreSQL-Compatible Edition or Amazon Aurora MySQL-Compatible Edition.

You have the opportunity to modernize monolith applications to scalable and event-driven microservices and serverless architectures with Amazon Aurora Serverless.

You can go global with Amazon Aurora Global Database, spanning multiple AWS Regions for low-latency read access and fast recovery from Region-wide outages.

Purpose built (heterogeneous migration) – Change the database engine and data model from relational to non-relational, optimized for the use case:

You can modernize from a relational database to a specialty database, such as key-value NoSQL Amazon DynamoDB, document-oriented Amazon DocumentDB (with MongoDB compatibility), serverless graph database Amazon Neptune, and more.

This is the most complex method, but also the most transformational, and it enables new architectures and new levels of scale and performance.

The following figure illustrates these database modernization patterns.

Compare the complexity and value proposition for these options in the following table.

| Migration Path | Complexity of Migration | Cost Benefit | Agility |
| --- | --- | --- | --- |
| Lift and shift | Low | Low | Low |
| Move to managed | Low | Medium | Medium |
| Break free from legacy databases | Medium | High | High |
| Purpose built | Highest | Highest | Highest |

You can also consider the modernization pathways from the perspective of innovation velocity (how quickly you can make changes and release new features) and total cost of ownership (TCO). The following graph displays the relationship between these four different modernization options.

A consideration for self-managed databases

Although the lift and shift option with a self-managed database is quick and has the lowest complexity, self-managing your databases includes the following drawbacks:

Efforts for software installation, configuration, patching, and backups are required. AWS provides tools that make it straightforward, but much is still left to be done by the customers themselves.

Scaling and high availability are constrained: in most cases only vertical scaling of the virtual machine is possible, and implementing a highly available read replica configuration and database promotion on failover is entirely the customer’s responsibility.

You are still left to deal with licensing terms and are subject to audits.

The self-management of databases is time consuming, complex, and expensive. It’s estimated that IT teams spend 70% of their time on tasks that don’t deliver differentiated business value like hardware and software installation, upgrades, patching, backup, recovery, and security planning. Only a small subset of their time is spent on high business value activities.

Lift and shift offers some improvements here, but greater value is derived from the other options explored in this post.

The following figure illustrates how fully managed databases take most of the undifferentiated heavy lifting and allow you to focus on what’s important for your applications—data schema and queries. This is why many customers have moved from self-managed databases to managed Amazon RDS and Aurora.

Customer story

Visma embraced Amazon RDS for SQL Server to gain eight times the deployment speed. To support their customers, Visma had historically run Microsoft Windows .NET-based applications in their on-premises data center. Instead of a traditional lift and shift or rehosting migration, they built Amazon Machine Images (AMIs) for automatic scalability, used Amazon RDS as the database, and used AWS CloudFormation templates to quickly—and automatically—provision resources. The choice of the “move to managed” pattern allowed them to complete the migration in several months, gain eight-fold speed in deployments, reduce cost per customer by 50% and improve the customer service quality.

Break free from legacy databases to open-source

As discussed earlier, commercial databases are expensive, cause vendor lock-in, have punitive licensing terms, and dictate how to use the licenses. Scaling commercial databases in the cloud can be expensive, and you may feel left out from using all the benefits of the cloud. Scalability and agility are two important factors of adopting the AWS Cloud, and commercial database engines constrain you on both.

The solution for relational data use cases is to use Aurora, which provides enterprise-level performance at a tenth of the cost of a commercial database. Aurora is compatible with open-source PostgreSQL and MySQL. Aurora also provides the following:

A serverless option that scales automatically for variable and unpredictable workloads and removes the high cost of over-provisioning and manual scaling.

Aurora Global Database, which allows you to expand in multiple Regions and serve a worldwide user base with low latency.

Many other features for high performance and scalability, high availability and durability, security, developer productivity, and more. Refer to Amazon Aurora Features to learn more.

Customer story

Amdocs migrated the database for its flagship mission-critical application RevenueOne from an on-premises commercial database to Aurora to gain cost-effective scalability. Amdocs products were originally built on a license-based relational database, which was expensive, didn’t scale easily, and required experienced people to operate. By moving to Aurora, AWS assumed many of the responsibilities for infrastructure and database, from everyday updates and maintenance tasks to high availability (HA) and disaster recovery (DR) management.

“Moving from an on-premises license-based database to Amazon Aurora changed the development game by making services always available on demand,” says Zeev Likwornik, Cloud Technologies Lead at Amdocs.

Beyond relational databases

A relational database isn’t a perfect solution for every case. As discussed earlier, new types of sources and data formats mean that the traditional relational data structures become suboptimal and actually constrain and complicate the solution. For example, storing JSON-formatted semi-structured documents in an RDBMS can create a lot of technical challenges related to storing and accessing the data as well as performance implications for querying of data attributes.
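To make the JSON example concrete, here is a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for a relational engine and a plain dictionary as a stand-in for a document or key-value store. The order document, table names, and function names are all hypothetical and chosen only for illustration.

```python
import json
import sqlite3

# Hypothetical semi-structured document; every name here is illustrative.
order = {
    "order_id": "o-1001",
    "customer": {"name": "Ana", "tier": "gold"},
    "items": [
        {"sku": "A1", "qty": 2},
        {"sku": "B7", "qty": 1, "gift_wrap": True},  # optional attribute
    ],
}

# Relational approach: the document is split ("shredded") across two tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id TEXT PRIMARY KEY, customer_name TEXT, customer_tier TEXT);
    CREATE TABLE order_items (order_id TEXT, sku TEXT, qty INTEGER, gift_wrap INTEGER);
""")
conn.execute(
    "INSERT INTO orders VALUES (?, ?, ?)",
    (order["order_id"], order["customer"]["name"], order["customer"]["tier"]),
)
conn.executemany(
    "INSERT INTO order_items VALUES (?, ?, ?, ?)",
    [
        (order["order_id"], i["sku"], i["qty"], int(i.get("gift_wrap", False)))
        for i in order["items"]
    ],
)


def load_order_relational(order_id):
    """Rebuild the document with two queries and manual reassembly."""
    name, tier = conn.execute(
        "SELECT customer_name, customer_tier FROM orders WHERE order_id = ?",
        (order_id,),
    ).fetchone()
    rows = conn.execute(
        "SELECT sku, qty, gift_wrap FROM order_items WHERE order_id = ? ORDER BY rowid",
        (order_id,),
    ).fetchall()
    items = []
    for sku, qty, gift_wrap in rows:
        item = {"sku": sku, "qty": qty}
        if gift_wrap:  # the optional attribute needs special handling
            item["gift_wrap"] = True
        items.append(item)
    return {"order_id": order_id, "customer": {"name": name, "tier": tier}, "items": items}


# Document-style approach: the whole document is stored and read by its key.
document_store = {order["order_id"]: json.dumps(order)}


def load_order_document(order_id):
    """Read the document back in a single lookup."""
    return json.loads(document_store[order_id])
```

Both functions return the same document, but the relational path needs a schema decision for every attribute (including optional ones such as `gift_wrap`) and reassembly code on every read. That overhead is the kind of friction a purpose-built database is meant to remove.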

AWS provides a broad set of fully managed, purpose-built databases that cater to business needs and help you solve your business and technical challenges and get additional benefits. Some of the benefits of AWS purpose-built databases are as follows:

Improved performance and scalability

Faster innovation

Time and cost savings

Automating undifferentiated heavy lifting tasks with managed services

The following figure illustrates the various AWS database services available. Refer to AWS Cloud Databases to learn more.

Customer story

The Australian Bureau of Statistics successfully ran the 2021 Census on a DynamoDB database to achieve high scalability. The Census Digital Service scaled to serve forms to over 2.5 million people in a 24-hour period on Census Day. At peak time, over 142 forms were submitted per second. This was achieved with the use of DynamoDB with streaming and queuing to deliver the data to ABS’s processing environment.

AWS services to modernize your database

AWS offers a range of services to help you modernize your database, such as the following:

Amazon Aurora – An open-source compatible relational database built for the cloud with performance and availability of commercial-grade databases at a tenth of the cost.

Babelfish for Aurora PostgreSQL – Babelfish enables PostgreSQL to understand the commands from applications written for SQL Server and therefore accelerates the migration of such applications from commercial SQL Server to Aurora PostgreSQL-Compatible with minimal code changes.

Amazon RDS – A managed relational database service that makes it simple to set up, operate, and scale databases in the cloud. Choose from popular engines like MySQL, MariaDB, PostgreSQL, Oracle, and SQL Server. Note that there is an option to deploy on premises with Amazon RDS on AWS Outposts.

AWS Cloud databases – Purpose-built databases, including key-value, in-memory, document-oriented, wide column, graph, time series, and ledger-managed databases.

AWS Database Migration Service – AWS DMS helps you migrate databases to AWS quickly and securely. The source database remains fully operational during the migration, minimizing downtime to applications that rely on the database. AWS DMS can migrate your data to and from the most widely used commercial and open-source databases, from on premises or the cloud.

AWS DMS Fleet Advisor – DMS Fleet Advisor is a fully managed capability of AWS DMS. To accelerate migrations, DMS Fleet Advisor automatically inventories and assesses your on-premises database and analytics server fleet and identifies potential migration paths.

AWS Schema Conversion Tool – AWS SCT converts your commercial database and data warehouse schemas to open-source engines or AWS-native services, such as Aurora and Amazon Redshift.

Complexities of database modernization

Although the benefits of moving off a commercial on-premises database should be clear by now, database modernization is not always trivial. As discussed earlier, different modernization pathways have different complexities. What defines these complexities?

A database is not a service in a vacuum; it always exists in a complex environment with other systems, and the closest one is the software application that uses the database as a data store. Any application can be described in layers of software code based on the responsibilities, or simply speaking, the code that does the following:

Presentation layer – The user interface (UI), including desktop forms or webpages. This layer is unlikely to require modifications with database modernization.

Application logic layer – This is where the data is being manipulated, such as hotel reservations or accounting calculations.

Data access layer – This is where most changes should ideally be encapsulated from a database modernization impact perspective, if the application is structured well.

Database – This layer contains the schema and the data. If this layer contains application logic (such as stored procedures or functions), then heterogeneous database migrations become more complex. Along with the schema, the volume of data is a significant factor in determining the complexity of the migration.
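The role of the data access layer can be sketched in code. The following is a minimal illustration, not a prescribed design: `ReservationStore`, `RelationalStore`, `KeyValueStore`, and `confirm_reservation` are hypothetical names, `sqlite3` stands in for a relational engine, and a dictionary stands in for a key-value database.

```python
import sqlite3
from typing import Optional, Protocol


class ReservationStore(Protocol):
    """Hypothetical data access interface; the application depends only on this."""

    def save(self, reservation_id: str, guest: str) -> None: ...

    def find_guest(self, reservation_id: str) -> Optional[str]: ...


class RelationalStore:
    """Stand-in for the legacy relational database (sqlite3, in memory)."""

    def __init__(self) -> None:
        self._conn = sqlite3.connect(":memory:")
        self._conn.execute(
            "CREATE TABLE reservations (id TEXT PRIMARY KEY, guest TEXT)"
        )

    def save(self, reservation_id: str, guest: str) -> None:
        self._conn.execute(
            "INSERT OR REPLACE INTO reservations VALUES (?, ?)",
            (reservation_id, guest),
        )

    def find_guest(self, reservation_id: str) -> Optional[str]:
        row = self._conn.execute(
            "SELECT guest FROM reservations WHERE id = ?", (reservation_id,)
        ).fetchone()
        return row[0] if row else None


class KeyValueStore:
    """Stand-in for a key-value target database (a plain dictionary)."""

    def __init__(self) -> None:
        self._items: dict = {}

    def save(self, reservation_id: str, guest: str) -> None:
        self._items[reservation_id] = {"guest": guest}

    def find_guest(self, reservation_id: str) -> Optional[str]:
        item = self._items.get(reservation_id)
        return item["guest"] if item else None


def confirm_reservation(store: ReservationStore, reservation_id: str, guest: str) -> str:
    """Application logic: unchanged regardless of which store is passed in."""
    store.save(reservation_id, guest)
    return f"Reservation {reservation_id} confirmed for {store.find_guest(reservation_id)}"
```

When an application is structured this way, swapping the database engine means writing a new store class while `confirm_reservation` stays untouched. When SQL is scattered through the application logic instead, the same migration touches far more code.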

The following figure illustrates the typical arrangement of these layers.

The complexity levels can be defined as follows:

Lowest – No code changes, except the endpoint changes to point from the old to the new database. Data migration is straightforward, and cutover to the new database can be done with some outage.

Low – Little or no code changes. The data structure can be easily mapped with the AWS SCT or handled with Babelfish (for SQL Server to PostgreSQL migration). Data migration is also straightforward.

High – Code changes are required, mostly to data access logic, but may spread to the application logic if the application architecture is not well designed. Data structure changes may be significant or the volume may require multi-stage migration.

Highest – Significant code changes are required, including the application logic, to use the capabilities of the purpose-built databases. Data structure changes are significant and may require an extract, transform, and load (ETL) process. Application cutover must be done with minimal or no outages.
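As a rough aid for an initial assessment, the four levels above can be expressed as a small classification function. This is one possible reading of the definitions rather than an official scoring model: the `Assessment` fields, the category strings, and the rule that significant schema changes or multi-stage data migration raise the level to at least High are assumptions made for this sketch.

```python
from dataclasses import dataclass
from enum import IntEnum


class Complexity(IntEnum):
    LOWEST = 1
    LOW = 2
    HIGH = 3
    HIGHEST = 4


@dataclass
class Assessment:
    # One of: "endpoint_only", "little", "data_access", "application_logic"
    code_changes: str
    significant_schema_changes: bool = False
    multi_stage_data_migration: bool = False


def classify(assessment: Assessment) -> Complexity:
    """Map an assessment to a complexity level (simplified, assumed rules)."""
    by_code_changes = {
        "endpoint_only": Complexity.LOWEST,
        "little": Complexity.LOW,
        "data_access": Complexity.HIGH,
        "application_logic": Complexity.HIGHEST,
    }
    level = by_code_changes[assessment.code_changes]
    # Assumption: heavy data work alone is enough to reach at least High.
    if assessment.significant_schema_changes or assessment.multi_stage_data_migration:
        level = max(level, Complexity.HIGH)
    return level
```

For example, an application that needs little code change but whose data volume forces a multi-stage migration would be classified as `Complexity.HIGH` under these rules.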

Common difficulties

When planned and implemented well, database modernization can be smooth and efficient. However, there are factors that may make the process difficult. The following are some common factors:

Executive sponsorship – Database modernization is not properly validated by the business and is not prioritized because of resource constraints and competing priorities. There is no clear business case, or executive sponsorship for the initiative is lacking. As a result, the team involved is not focused on this work, is distracted by other priorities, or is unable to get the required dependencies aligned. This leads to a very slow or completely stalled modernization initiative.

Mitigation – Ensure executive sponsorship through a data strategy and a business case that includes an assessment of the total cost of ownership and an optimization and licensing assessment. Contact your dedicated account manager to learn more about these:

AWS offers a specialized program re:iMagine Data for senior executives and IT leadership who want to create a database migration and modernization strategy by utilizing AWS’s experience working with thousands of customers.

AWS and APN Partners offer the AWS Optimization and Licensing Assessment (AWS OLA), which contributes to a database migration and licensing strategy that includes Amazon RDS for Oracle and Amazon RDS for SQL Server. The results of an AWS OLA help you explore available deployment options to Amazon RDS based on your unique database licensing entitlements.

Scope and planning – Once the project has started, its cost and complexity turn out to be significantly larger than anticipated (from 50% over to several times over). This results from not properly assessing the complexity in the initial stage, or from choosing a goal that is too ambitious for the given application, team, and modernization pathway.

Mitigation – Involve a competent and experienced AWS Partner to help and plan a proof of concept phase to reduce technology risks. Amazon Database Migration Accelerator (Amazon DMA) can help you create a migration plan and guide you or your migration partner if you face challenges in the migration journey.

Go-live – Once the technical work is done, there is no solid launch plan, including a cutover without an outage (or with a minimal one). Although this is partly a planning issue, where this aspect wasn’t properly considered, the complexity is often caused by the data volume, structure, or cutover requirements.

Mitigation – Develop a go-live plan that includes data migration, cutover, and rollback scenarios.

Do it yourself or get help?

To proactively mitigate the common reasons for failure, it’s essential to ask the following questions:

Does my team have the skills and experience to accomplish database modernization successfully by themselves?

Do they have the required level of knowledge of AWS services?

Do they have experience in data migration?

Do they have the capacity (time) and priority to work on this project?

Unless you answer a firm “Yes!” to these questions, you may be better off seeking help from people who have such experience and knowledge and can be engaged to focus on this work.

There are a few options available to you. These are not mutually exclusive, and almost any combination of them is possible and has been practiced:

AWS services – Both Amazon Database Migration Accelerator (Amazon DMA) and AWS Professional Services can work with you or an AWS Partner of your choice to accelerate your database modernization.

Amazon DMA offers complimentary Databases & Analytics migration advisory services to create migration strategies and implementation plans, develop migration solutions, and accelerate database migrations and modernizations. You can collaborate with Amazon DMA or nominate an AWS Partner of your choice to deliver last-mile engineering.

AWS ProServe has the experts to do the complex modernization for your critical workloads, including database migration and application refactoring.

AWS Partners – AWS has a huge ecosystem of designated Partners that you can use to deliver database modernization. AWS Partners can help you accelerate your journey to the AWS Cloud. You can find the right accredited Partner for your needs. If you are engaging Partners to migrate from on-premises or commercial cloud databases, you may be eligible for Migration Acceleration Funding from AWS to offset your costs. Contact your AWS Partner or account manager to learn more.

Getting it right

Whether you are working with an AWS Partner or have your team on it, common pitfalls can be avoided, once understood. In this section, we consider a few key points that the modernization process involves, as outlined in the following figure.

People and skills

People are the most important element of any technology transformation. In this regard, the following has to be ensured for a successful database modernization:

People involved in the planning and delivery of the database modernization have the skills and experience required in the scope of technologies involved, as well as the application context

People allocated to this work have their full focus on it—for example, they don’t have partial allocation competing with other priorities

Planning and process

Proper planning is another essential element of success. Make sure you do the following:

Make a plan for modernization that includes all the required phases, namely: assessment and discovery, proof of concept, development and testing, and data migration and launch

Make a list of necessary and desirable items, and prioritize the necessary ones

Review the estimates and assumptions on the proof of concept completion

Establish a launch plan that includes data migration, code deployment, and configuration changes for both success and rollback pathways

Have action plans with the responsible teams clearly assigned and timings defined

Assessment and discovery

This stage aims to confirm that the following elements have been defined and validated:

The business case for the modernization stacks up and delivers a return on investment (ROI), ideally in under 12 months

The executive leadership sponsors the business case and drives the change

Both business and technical aspects are considered: business drivers, technical drivers, dependencies and impact estimate, estimate on changes required (first cut), and target costs estimate

The scope of the project is assessed carefully and understood, especially from the dependencies and impacts to the applications that use the database

The key technical risks are identified and assumptions are highlighted
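One simple way to sanity-check the 12-month target in the business case is a payback calculation, reading the target as the time needed for savings to cover the one-off project cost. The sketch below is illustrative only: the function name is hypothetical, the figures in the usage note are invented, and a real business case would include more inputs (for example, parallel-run costs and licensing true-ups).

```python
import math
from typing import Optional


def payback_months(
    one_off_cost: float,
    monthly_cost_before: float,
    monthly_cost_after: float,
) -> Optional[int]:
    """Return the number of whole months until savings cover the one-off cost.

    Returns None when the target state is not cheaper to run, because the
    project then never pays back on running costs alone.
    """
    monthly_saving = monthly_cost_before - monthly_cost_after
    if monthly_saving <= 0:
        return None
    return math.ceil(one_off_cost / monthly_saving)
```

With hypothetical figures of 240,000 in one-off migration cost and running costs falling from 60,000 to 35,000 per month, `payback_months(240_000, 60_000, 35_000)` returns `10`, which would meet a 12-month target.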

Proof of concept

A proof of concept (PoC) stage aims to address the risks and assumptions identified via prototyping or small-scale experiments. A good PoC should remove or clarify the top identified risks and unknowns:

Technical high-risk items are addressed: confirmed feasibility or alternative options discovered

High-impact assumptions are confirmed

The technical assumptions of the launch plan are confirmed

Cost and effort estimates are validated and adjusted as needed

If you conduct this work with an AWS Partner, additional PoC funding may be available to you. Contact your AWS Partner or account manager to learn more.

Development

This is the phase where the technical solution confirmed via the PoC is implemented:

Database scripts are prepared for the target database, including data definition and mapping, for example with the AWS SCT.

Application changes (if required) are implemented.

Automated testing for the changes is implemented. This is an essential part of the development process and deployment strategy.

Migrate and launch

The launch phase includes more than just running a deployment job. It consists of the following elements planned and performed:

The target environment is set up.

The data migration and ongoing synchronization for the transition period are considered and planned. This includes:

The deployment and migration plan is understood.

Technical and business validation post-deployment is planned.

The cutover for the workload is planned with minimal downtime.

The rollback plan is defined for a no-go decision.

Databases are in sync with an option to roll back should the deployment validation fail.

Post-deployment data cleanup and decommissioning is planned.
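The technical validation step in the list above can be partly automated. The following is a minimal sketch of one such check, comparing row counts and a content checksum per table between the source and target databases before cutover. It uses two `sqlite3` connections as stand-ins. The function names are hypothetical, and in a heterogeneous migration the row representation would need normalizing first, because data types may differ between engines.

```python
import hashlib
import sqlite3
from typing import Dict, List, Tuple


def table_fingerprint(conn: sqlite3.Connection, table: str, key_column: str) -> Tuple[int, str]:
    """Return (row_count, checksum) for a table, reading rows in key order."""
    # Table and column names are interpolated into SQL: pass trusted values only.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}")
    digest = hashlib.sha256()
    count = 0
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def find_mismatched_tables(
    source: sqlite3.Connection,
    target: sqlite3.Connection,
    tables: Dict[str, str],
) -> List[str]:
    """Compare fingerprints; `tables` maps each table name to its key column."""
    return [
        table
        for table, key in tables.items()
        if table_fingerprint(source, table, key) != table_fingerprint(target, table, key)
    ]
```

An empty result supports a go decision for the tables checked. Any table name in the result is a reason to pause the cutover or invoke the rollback plan. For very large tables, a full scan like this may be too slow, and sampling or per-partition checksums would be a more practical variant.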

Customer story

To stay competitive, Thomas Publishing strives to launch new products frequently and upgrade their Oracle E-Business Suite (EBS) applications. After deciding to move to AWS, Thomas was introduced to Apps Associates, an AWS Premier Consulting Partner that provides global business and IT services. Although they initially migrated to self-managed Oracle on EC2 instances, they later modernized the key content management and publishing applications to Aurora.

Since moving to AWS, Thomas Publishing has significantly reduced its costs. “After moving to AWS, we were able to shut down our largest data center, eliminating hundreds of thousands of dollars in associated real estate, facility operations, and power and cooling costs,” says Hans Wald, Chief Technology Officer at Thomas Publishing.

The company also gained the agility to bring new products to market faster. “Using AWS, our developers can spin up resources for a new website in one day, instead of the weeks it used to take. As a result, we can publish new product information for our customers faster,” says Wald.

Additional resources

Refer to the following additional resources for help with your database modernization project:

Migration playbooks containing specific technical details on feature compatibility, configuration, security, and other aspects of transitioning from one database to another:

Oracle to Aurora PostgreSQL Migration Playbook

Oracle to Aurora MySQL Migration Playbook

SQL Server to Aurora PostgreSQL Migration Playbook

SQL Server to Aurora MySQL Migration Playbook

AWS re:Invent 2022 videos:

Amazon Relational Database re:Invent 2022 recap

AWS re:Invent 2022 – Thomson Reuters & BMC Software database modernization vision with DMA

AWS re:Invent 2022 – Modernizing a megascale database (PRT302)

AWS re:Invent 2022 – What’s new with database migrations (DAT218)

AWS Prescriptive Guidance:

Migration strategy for relational databases

Prioritization guide for refactoring Microsoft SQL Server and Oracle databases on AWS

Modernizing your application by migrating from an RDBMS to Amazon DynamoDB

Choosing a migration tool for rehosting databases

Conclusion

As demonstrated in this post, moving off commercial databases and onto managed instances can save a lot in costs as well as enable modern application architectures and higher speed to market, among other benefits. The transition of a database may be a complex task, but when done right, it can deliver those benefits. To do it right, make sure that you engage people who know what they are doing, that the dependencies and impacts are identified early, that the assumptions are confirmed and risks clarified through a PoC, and that you have a solid plan for data migration and application launch.

Comment on this post if you have questions on the topics covered, refer to the resources listed above, or reach out to your dedicated account manager or AWS Partner.

About the authors

Andrew Grischenko is an AWS Solutions Architect in Partner Sales working with AWS customers and partners and helping them on cloud adoption, application and database modernization, and SaaS journeys.

Sandeep Rajain is a Database specialist Partner Solutions Architect. He works with partners and customers to envisage and build data strategy and provides technical assistance to accelerate their data modernization.
