There are many scenarios in which you might need to migrate your Amazon DynamoDB tables from one AWS account to another AWS account, such as when you need to consolidate all your AWS services into centralized accounts. Consolidating DynamoDB tables into a single account can be time-consuming and complex if you have a lot of data or a large number of tables.

In this post, we provide step-by-step instructions to migrate your DynamoDB tables from one AWS account to another by using AWS Data Pipeline. With Data Pipeline, you can easily create a cross-account migration process that’s fault tolerant, repeatable, and highly available. You don’t have to worry about ensuring resource availability, managing intertask dependencies, retrying transient failures or timeouts in individual tasks, or creating a failure notification system.

Overview of the solution

There are multiple ways to export DynamoDB table data to Amazon S3; for example, DynamoDB supports exporting table data to Amazon S3 natively. In this solution, we use Data Pipeline to export the DynamoDB table data to an S3 bucket in the destination account.

Data Pipeline is a web service you can use to reliably process and move data between different AWS accounts at specified intervals. With Data Pipeline, you can regularly access your data in your DynamoDB tables from your source AWS account, transform and process the data at scale, and efficiently transfer the results to another DynamoDB table in your destination AWS account.

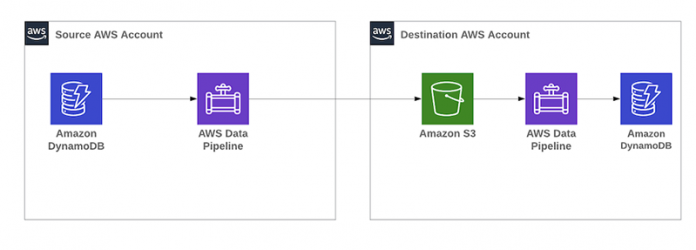

The following diagram shows the high-level architecture of this solution which uses the AWS Data Pipeline to migrate Amazon DynamoDB table from source account to destination account.

To implement this solution, complete the following steps:

Create a DynamoDB table in your source account.

Create an Amazon Simple Storage Service (Amazon S3) bucket in the destination account.

Attach an AWS Identity and Access Management (IAM) policy to the Data Pipeline default roles in the source account.

Create an S3 bucket policy in the destination account.

Create and activate a pipeline in the source account.

Create a DynamoDB table in the destination account.

Import the DynamoDB export in the destination account.

After you finish testing this solution, follow the steps in the Cleanup section to avoid incurring additional charges.

Prerequisites

To follow this solution walkthrough, you must have the following resources:

An AWS source account

An AWS destination account

If you have never used AWS Data Pipeline before, you need to create the DataPipelineDefaultRole and DataPipelineDefaultResourceRole roles. For details, see Getting started with AWS Data Pipeline.

Create a DynamoDB table in your source account

First, create a DynamoDB table in your source account that comprises customer names and cities:

On the DynamoDB console, choose Create table.

For Table name, enter customer.

For Partition key, enter name and leave the type as String.

Choose Add sort key, enter city, and leave the type as String.

Leave the remaining options at their defaults.

Choose Create table. Table creation may take some time.

After the table is created, choose the name of the table, and on the Items tab, choose Create item to create a few items.
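If you prefer to script this step, the same table definition can be expressed as a CreateTable request. The following Python sketch builds that request as a plain dictionary; the key schema mirrors the console steps above, while the provisioned capacity of 5 RCUs and 5 WCUs is an assumption for illustration, not a value from this post.

```python
import json


def customer_table_spec(table_name: str = "customer") -> dict:
    """Return a CreateTable request for the customer table.

    Key schema: partition key "name" and sort key "city", both String ("S"),
    matching the console steps. The capacity values are assumptions.
    """
    return {
        "TableName": table_name,
        "AttributeDefinitions": [
            {"AttributeName": "name", "AttributeType": "S"},
            {"AttributeName": "city", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "name", "KeyType": "HASH"},   # partition key
            {"AttributeName": "city", "KeyType": "RANGE"},  # sort key
        ],
        # Assumed provisioned capacity for a small test table; adjust as needed.
        "BillingMode": "PROVISIONED",
        "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
    }


if __name__ == "__main__":
    print(json.dumps(customer_table_spec(), indent=2))
```

With the AWS SDK for Python (Boto3) installed and credentials configured, you could pass the dictionary as keyword arguments, for example `boto3.client("dynamodb").create_table(**customer_table_spec())`. The destination table needs the same key schema, so the same request applies there.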

The following screenshot shows what the DynamoDB table in your source account should look like with items.

Create an S3 bucket in the destination account

Now, create an S3 bucket in your destination account:

On the S3 console, choose Create bucket.

Enter a bucket name that follows the S3 bucket naming rules. In this post, we use a bucket named mydynamodbexportforblog.

Leave the other options at their defaults.

Choose Create bucket.

Attach an IAM policy to the Data Pipeline default roles in the source account

In this step, you create an IAM policy and attach it to the Data Pipeline default roles in the source account:

On the IAM console, choose Policies in the navigation pane.

Choose Create policy.

On the JSON tab, enter the following IAM policy:

Your policy should look like the following screenshot, replacing mydynamodbexportforblog with the name of the S3 bucket you created in your destination account.

Choose Next: Tags.

You can add tag names as needed.

Choose Next: Review.

For Name, enter dynamodbexport.

Choose Create policy.

In the list of policies, select the policy you just created.

Choose Policy actions and choose Attach.

Select DataPipelineDefaultRole and DataPipelineDefaultResourceRole.

Choose Attach policy.
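The IAM policy entered on the JSON tab in this procedure appears only as a screenshot in the original post. As a rough sketch of what such a policy might contain, the following Python function generates an identity-based policy that lets the source account's Data Pipeline roles list the destination bucket and read and write its objects. The exact action list is our assumption, not the policy from the post; review it against your own requirements before using it.

```python
import json


def export_role_policy(bucket_name: str) -> dict:
    """Identity-based policy for the Data Pipeline default roles in the source
    account, scoped to the export bucket in the destination account.

    The action lists are assumptions, not the policy from the original post.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Sid": "ExportBucketAccess",
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetBucketLocation",
                    "s3:ListBucketMultipartUploads",
                ],
                "Resource": bucket_arn,
            },
            {
                # Object-level actions apply to keys inside the bucket.
                # s3:PutObjectAcl is included because the export sets a canned ACL
                # on the objects it writes; s3:DeleteObject is assumed to be needed
                # for cleaning up temporary job output.
                "Sid": "ExportObjectAccess",
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                    "s3:DeleteObject",
                    "s3:AbortMultipartUpload",
                ],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(export_role_policy("mydynamodbexportforblog"), indent=2))
```

Splitting bucket-level and object-level actions into two statements keeps each action paired with the resource type it applies to.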

Create an S3 bucket policy in the destination account

Now, create an S3 bucket policy in the destination account:

On the S3 console, select the bucket you created.

Choose the Permissions tab.

Under Bucket policy, choose Edit.

Enter the following bucket policy (provide the AWS account ID of the source account).

Your policy should look like the following screenshot, replacing mydynamodbexportforblog with the name of your S3 bucket.

Choose Save changes.
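Like the IAM policy, the bucket policy is shown only as a screenshot. The following sketch assumes the policy names the two Data Pipeline default roles in the source account as principals; granting the whole source account (`arn:aws:iam::<account-id>:root`) would be a broader alternative. The action list is again an assumption that mirrors the identity-based policy, and 111122223333 is a placeholder account ID.

```python
import json

# Default role names used in this post; both must already exist in the source account.
DEFAULT_ROLES = ("DataPipelineDefaultRole", "DataPipelineDefaultResourceRole")


def destination_bucket_policy(bucket_name: str, source_account_id: str) -> dict:
    """Bucket policy for the destination export bucket that grants the source
    account's Data Pipeline default roles access (assumed action list)."""
    if len(source_account_id) != 12 or not source_account_id.isdigit():
        raise ValueError("an AWS account ID is a 12-digit string")
    principals = [
        f"arn:aws:iam::{source_account_id}:role/{role}" for role in DEFAULT_ROLES
    ]
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level access for the source roles.
                "Sid": "SourceRolesBucketAccess",
                "Effect": "Allow",
                "Principal": {"AWS": principals},
                "Action": [
                    "s3:ListBucket",
                    "s3:GetBucketLocation",
                    "s3:ListBucketMultipartUploads",
                ],
                "Resource": bucket_arn,
            },
            {
                # Object-level access, including setting the canned ACL.
                "Sid": "SourceRolesObjectAccess",
                "Effect": "Allow",
                "Principal": {"AWS": principals},
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                    "s3:DeleteObject",
                    "s3:AbortMultipartUpload",
                ],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    # 111122223333 is a placeholder; use your source account ID.
    print(json.dumps(destination_bucket_policy("mydynamodbexportforblog", "111122223333"), indent=2))
```

S3 rejects a bucket policy whose role principals don't exist, so create the default roles in the source account before saving a policy like this one.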

Create and activate a pipeline in the source account

Data Pipeline launches an Amazon EMR cluster to perform the actual export. Amazon EMR reads the data from DynamoDB and writes it to an export file in an Amazon S3 bucket.

Complete the following steps to create a pipeline using the Export DynamoDB table to S3 template:

On the Data Pipeline console, choose Get started now.

For Name, enter dynamodb-export-to-other-account.

For Source, select Build using a template.

Choose Export DynamoDB table to S3.

In the Parameters section, for Source DynamoDB table name, enter customer.

For Output S3 folder, enter s3://<<BUCKET NAME>>/ as the destination account S3 path.

In the Schedule section, leave settings at their defaults, or choose scheduling options as needed.

Create an S3 bucket in the source account for the Data Pipeline logs. In this post, the bucket is named my-dynamodb-migration.

Under Pipeline Configuration, for S3 location for logs, enter s3://<<BUCKET NAME>>/ (use the bucket name from the previous step).

Under Security/Access, choose Default for IAM roles.

Choose Edit in Architect.

In the Activities section, for Type, choose EmrActivity and expand the Step box to see the details.

In the Step box, add the BucketOwnerFullControl or AuthenticatedRead canned access control list (ACL).

A canned ACL is applied to each object that the Amazon EMR Apache Hadoop job writes to the S3 bucket in the destination account, and it controls who can access those objects. The AuthenticatedRead canned ACL specifies that the owner is granted Permission.FullControl and the GroupGrantee.AuthenticatedUsers group grantee is granted Permission.Read access. The BucketOwnerFullControl canned ACL specifies that the owner of the bucket is granted Permission.FullControl. The owner of the bucket is not necessarily the same as the owner of the object.

In this example, we use BucketOwnerFullControl.

Be sure to use the following format: -Dfs.s3.canned.acl=BucketOwnerFullControl.

The statement should be placed as shown in the following example.

In the Resources section, at the end of the EmrClusterForBackup section, choose Terminate After. Enter 2 weeks, or another duration after which the EMR cluster should be terminated.

The following screenshot shows the pipeline in the source account.

Choose Save.

Choose Activate to activate the pipeline.
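The Step edit in Architect is a small string transformation. The following sketch shows one way to apply it, assuming the Step value is a comma-separated list of the form `<jar>,<main class>,<arguments...>` and that the `-Dfs.s3.canned.acl` option belongs directly after the main class, where Hadoop's generic option parser expects `-D` options. The function name and sample step strings are hypothetical; check the result against the Step value in your own pipeline.

```python
ALLOWED_ACLS = {"BucketOwnerFullControl", "AuthenticatedRead"}
ACL_PREFIX = "-Dfs.s3.canned.acl="


def add_canned_acl(step: str, acl: str = "BucketOwnerFullControl") -> str:
    """Insert -Dfs.s3.canned.acl=<acl> into a comma-separated EmrActivity step.

    Assumes the layout <jar>,<main class>,<args...> and places the Hadoop -D
    option right after the main class, before the application arguments.
    """
    if acl not in ALLOWED_ACLS:
        raise ValueError(f"unsupported canned ACL: {acl}")
    parts = step.split(",")
    if len(parts) < 2:
        raise ValueError("expected at least <jar>,<main class>")
    # Remove any existing canned-ACL option so repeated calls don't duplicate it.
    parts = [p for p in parts if not p.startswith(ACL_PREFIX)]
    parts.insert(2, ACL_PREFIX + acl)
    return ",".join(parts)


if __name__ == "__main__":
    print(add_canned_acl("tools.jar,com.example.Main,out,table"))
```

Stripping an existing option before inserting makes the function safe to run twice, and it also lets you switch between the two canned ACLs discussed above.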

The following screenshot shows the successful activation of the pipeline in the source account, which exports the DynamoDB table's data to the destination S3 bucket.

Now you can sign in to the destination account and verify that the DynamoDB exported data is in the S3 bucket.

Create a DynamoDB table in the destination account

In this step, create a new DynamoDB table in the destination account that is composed of names and cities:

On the DynamoDB console, choose Create table.

For Table name, enter customer.

For Partition key, enter name and leave the type as String.

Choose Add sort key, enter city, and leave the type as String.

Leave the remaining options at their defaults.

Choose Create table.

At this point, the table doesn’t contain any items. Load the items from the source account by using the Import DynamoDB backup data from S3 template.

Import the DynamoDB export in the destination account

Now you’re ready to import the DynamoDB export in the destination account:

Attach an IAM policy to the Data Pipeline default roles in the destination account, following the same steps you used earlier to attach the policy to the roles in the source account.

On the Data Pipeline console, choose Get started now.

For Name, enter import-into-DynamoDB-table.

For Source, select Build using a template.

Choose Import DynamoDB backup data from S3.

In the Parameters section, for Input S3 folder, enter the location where the DynamoDB export is stored from the source account in the format s3://<<BUCKET_NAME>>/<<folder_name>>/.

For Target DynamoDB table name, enter customer (the destination table name).

In the Schedule section, leave the settings at their defaults, or modify as needed.

Under Pipeline configuration, for S3 location for logs, enter the S3 bucket that stores the pipeline logs, in the format s3://<<BUCKET_NAME>>/.

Under Security/Access, choose Default for IAM roles.

Activate the pipeline to import the backup to the destination table.

Verify that the items in the destination DynamoDB table match the items in the source table.
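How you validate the tables is up to you. For a small table like the customer example, one approach is to scan both tables and compare the items by primary key. The following sketch performs only the comparison step on plain Python dictionaries; fetching the items (for example, with a paginated Scan in each account) is left out, and the function name is hypothetical.

```python
from typing import Dict, Iterable, List, Sequence, Tuple

Item = Dict[str, object]
Key = Tuple[object, ...]


def diff_by_key(
    source_items: Iterable[Item],
    dest_items: Iterable[Item],
    key_attrs: Sequence[str] = ("name", "city"),
) -> Tuple[List[Key], List[Key], List[Key]]:
    """Compare two item collections by primary key (name, city by default).

    Returns sorted lists of keys that are missing from the destination,
    extra in the destination, and present in both but with different items.
    """
    src = {tuple(item[a] for a in key_attrs): item for item in source_items}
    dst = {tuple(item[a] for a in key_attrs): item for item in dest_items}
    missing = sorted(src.keys() - dst.keys())
    extra = sorted(dst.keys() - src.keys())
    changed = sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k])
    return missing, extra, changed
```

Three empty lists mean every source item arrived in the destination table unchanged.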

You have successfully migrated an existing DynamoDB table from one AWS account to another by using Data Pipeline.

Key considerations

The time required to migrate tables can vary significantly depending on network performance, the DynamoDB table’s provisioned throughput, the amount of data stored in the table, and so on.

During the export phase, Data Pipeline performs a parallel scan on the DynamoDB table and writes the output to S3. The speed of the export depends on the provisioned read capacity units (RCUs) on the table and on the proportion of those RCUs that you allow the export to use. To speed up the export, allow it to use more provisioned RCUs.
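To get a feel for how RCUs bound the export, the following back-of-the-envelope sketch estimates a lower limit on export time from the table size alone. It assumes the scan is throttled only by the RCUs it is allowed to consume (`read_ratio` is a hypothetical name for that fraction), that one RCU supplies 4 KB per second of strongly consistent reads or 8 KB per second of eventually consistent reads, and it ignores EMR cluster startup, network, and S3 overhead.

```python
def estimate_export_seconds(
    table_bytes: int,
    provisioned_rcu: float,
    read_ratio: float,
    strongly_consistent: bool = False,
) -> float:
    """Rough lower bound on export time, limited only by read capacity.

    One RCU = one strongly consistent 4 KB read per second, or two eventually
    consistent 4 KB reads per second (8 KB). Overheads are ignored.
    """
    if provisioned_rcu <= 0 or read_ratio <= 0:
        raise ValueError("capacity and ratio must be positive")
    bytes_per_rcu = 4096 if strongly_consistent else 8192
    bytes_per_second = provisioned_rcu * read_ratio * bytes_per_rcu
    return table_bytes / bytes_per_second
```

For example, a 10 GiB table read with eventually consistent reads at half of 1,000 provisioned RCUs gives `estimate_export_seconds(10 * 1024**3, 1000, 0.5)`, about 2,621 seconds (roughly 44 minutes) before any cluster overhead. Doubling the RCUs available to the export halves the estimate.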

During the import phase, Data Pipeline reads data from S3 in parallel and writes it to DynamoDB. Again, the speed of the import depends on the provisioned write capacity units (WCUs) on the table and on the proportion of those WCUs that you allow the import to use. To speed up the import, allow it to use more provisioned WCUs.
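The same kind of estimate applies to the import. One WCU covers one write per second for an item up to 1 KB, and larger items consume one WCU per started kilobyte. The sketch below assumes every item has the same average size and that the import is limited only by the WCUs it may use (`write_ratio` is again a hypothetical name).

```python
import math


def estimate_import_seconds(
    item_count: int,
    avg_item_bytes: int,
    provisioned_wcu: float,
    write_ratio: float,
) -> float:
    """Rough lower bound on import time, limited only by write capacity.

    Each write consumes ceil(item size / 1 KB) WCUs (minimum 1); all items
    are assumed to have the average size.
    """
    if provisioned_wcu <= 0 or write_ratio <= 0:
        raise ValueError("capacity and ratio must be positive")
    wcu_per_item = max(1, math.ceil(avg_item_bytes / 1024))
    return item_count * wcu_per_item / (provisioned_wcu * write_ratio)
```

For example, 1,000,000 items of 1.5 KB each need 2 WCUs per item, so at half of 1,000 provisioned WCUs the estimate is 2,000,000 / 500 = 4,000 seconds.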

For large exports and imports, you can reduce your throughput costs by using provisioned capacity (rather than on-demand capacity) and enabling auto scaling during the process, so that RCUs and WCUs scale up automatically as needed.

Cleanup

After you test this solution, remember to delete all the resources you created, such as the pipelines, S3 buckets, IAM policies, and DynamoDB tables in both the source and destination accounts. For instructions, see the following:

Deleting your Data Pipeline

Deleting your S3 bucket

Delete your DynamoDB table

Deleting IAM roles

Conclusion

In this post, we showed you how to migrate a DynamoDB table from one AWS account to another by using Data Pipeline. With Data Pipeline, you specify a DynamoDB table in your source AWS account, a schedule, and the processing activities required for your data movement to the DynamoDB table in your destination account. Data Pipeline handles running and monitoring your processing activities on a highly reliable, fault-tolerant infrastructure. For more information about importing and exporting DynamoDB by using Data Pipeline, see the Data Pipeline Developer Guide.

About the authors

Raghavarao Sodabathina is an enterprise solutions architect at AWS, focusing on data analytics, AI/ML, and serverless platforms. He engages with customers to create innovative solutions that address customer business issues and accelerate the adoption of AWS services. In his spare time, Raghavarao enjoys spending time with his family, reading books, and watching movies.

Changbin Gong is a senior solutions architect at AWS, focusing on cloud native, AI/ML, data analytics, and edge computing. He engages with customers to create innovative solutions that address customer business issues and accelerate the adoption of AWS services. In his spare time, Changbin enjoys reading, running, and traveling.
