Amazon’s Timehub team builds and supports time systems that pay employees accurately and simplify workforce management for every country where Amazon does business. Timehub helps Amazon deliver on its Leadership Principle to Strive to be Earth’s Best Employer by enabling next-generation workforce management. It helps prevent pay defects through automation of error correction and delivering a smooth employee experience.

MyTime is Amazon’s primary Timehub system, supporting employees across more than 25 countries and over 40 business lines. To cover Amazon’s breadth and depth of operations globally, MyTime is set up to handle thousands of pay policy groups, accrual policies, time-off requests types, and pay codes. Typically, in a year, MyTime has to process hundreds of millions of pay extracts, time-off requests, employee schedule transactions, punch data, and pay code records. These transactions happen through automated mechanisms, as well as manual adjustment processes. Employees can capture their working hours, review their timecard, and review their time-off balances using MyTime. Managers have real-time visibility of their employees’ shift hours, overtime trends, and leave balances, which facilitates resource planning exercises.

This is the first post in a three-part series. In Part 1, we show you how we built a low-latency replication solution using AWS Database Migration Service (AWS DMS) and Amazon Aurora PostgreSQL-Compatible Edition as our target database. This solution addresses the Timehub team’s need to have a sub-minute latency, high-performant, single source of truth (SSOT) data store for Amazon’s time and attendance data sourced from the MyTime database. In the next two posts of this series, we show how the team designed resiliency (Part 2) and recovery from failure (Part 3) within the solution framework.

Problem statement

MyTime is Amazon’s implementation of a third-party product using Oracle 19c as its database. MyTime processes millions of transactions daily, from across the globe. The Timehub team’s vision was to set up a replication service that would move the data from the MyTime Oracle environment to an AWS-based data store seamlessly with latency of less than 1 minute. A solution architecture was needed that could address the sub-minute replication requirement and resolve two major business pain points with the existing solution.

The first pain point was the high response time for existing read APIs on the source database. The previous solution was built as batches of bulk read APIs from the MyTime Oracle database. The data processing latency from Oracle to the Timehub team’s analytical data lake system was high (40 minutes per batch run at 4-hour intervals). The main reason was attributed to the SQL within the APIs scanning through datasets (ranging up to 20 TB) to fetch the predicated data requested by applications. Apart from slowing down API responses, the solution was not serving the business need to run near-real-time analytics on the data to drive business decisions.

The prior solution also lacked a good mechanism to capture incremental change data. Downstream systems had to rely on custom data pull logic embedded within traditional extract, transform, and load (ETL) processes. This required reading and processing data for each table before loading them to the respective target data stores. The queried dataset was then processed to capture changed data through the custom logic. To support the custom logic, a snapshot of data had to be taken every 5–30 minutes. The custom logic then did a comparison of the last two snapshots to come up with the changed data. This design approach had the following inherent drawbacks:

Propagation delay between source and target – The custom solution that relied on querying data suffered from latency limitations, partly due to the nature of the solution, and mostly due to the scale of data velocity and data volume (redo growth of 190 Mbps per day and total data size of 20 TB).

Loss of data events – The custom change data capture (CDC) process would take anywhere between 3–15 minutes for the snapshot comparison. As a result, the snapshots would ignore the changes between the windows when data is extracted, leading to potential data loss scenarios.

Development effort for new dataset – Because the snapshot comparison logic to detect changes would vary across different datasets based on the unique columns and grain of data stored, additional development and testing efforts were required to onboard new datasets to the solution.

Load on source – The custom CDC process incurred additional resource demand on the Oracle source, which led to performance degradation for MyTime users at peak operation times, impacting their productivity.

Approach to the solution

To come up with a solution approach to the aforementioned problems of data propagation delay, possible data loss events, and development efforts, we evaluated AWS DMS, an AWS managed service for ongoing data replication. The primary objectives of the evaluation were to test the functionality, gather metrics on data propagation times, and measure any additional resource demand on the source database in a benchmarking environment. It required us to measure and simulate the production-like traffic in the test environment.

There were more than 3,000 tables in the source Oracle database supporting the MyTime application. Out of those, we identified a set of 300 tables that were regularly updated. The tables’ data volume in the production environment was close to 20 TB. To measure the likely traffic in production, we shortlisted 154 tables to replicate from the source database on an ongoing basis. To estimate the production workload requirements, we performed the following benchmarking analysis:

Captured data for two cycles of a 2-week period each for redo log growth on the production Oracle instance

Monitored the number of transactions processed for the same period of time

Simulated similar traffic in the benchmarking environment for multiple cycles through customized means and observed source database metrics with or without supplemental logging

Experimented with different replication instance classes until we right-sized for the desired latency (p95 30 seconds) with peak load

Based on the benchmarking tests, we were able to conclude the following:

Supplemental logging had no significant impact on the source database resource utilization

AWS DMS was able to sustain the transaction volume, fulfilling our latency requirements (p95 30 seconds)

Onboarding a task required just configuration changes

The following table depicts the metrics that we collected as part of our production workload analysis. They were key inputs towards deciding on the solution configuration. The tables show percentile distribution of redo log growth, which helped us simulate production-like traffic in lower environments during testing. As observed from the metrics, the peak load is higher than the load we receive 95% of the time.

Metric Name

p90

p95

p99

peak

Redo Growth (in MBPS)

50.9

57.4

72.5

190.2

Record Count (TPS)

3675

5170

9594

20018

Solution overview

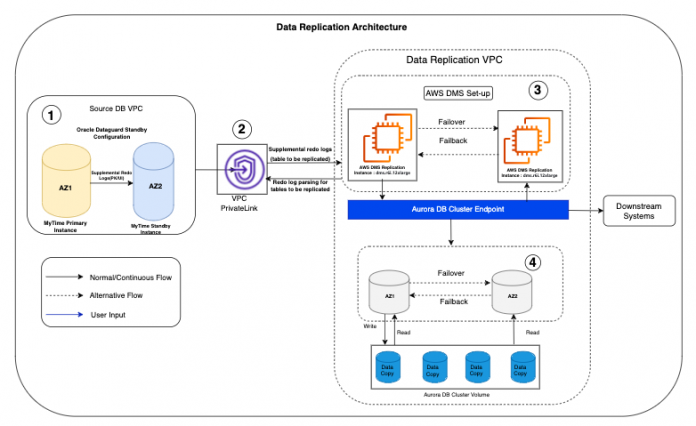

The following diagram illustrates the solution architecture.

The components in the architecture are as follows:

The source Oracle database, along with its Active Data Guard physical standby database, are hosted within a VPC (Source DB VPC in the preceding diagram). The storage is configured with Oracle Automatic Storage Management (Oracle ASM). AWS DMS and the target data store, Aurora PostgreSQL cluster, and endpoint are in another dedicated VPC within private subnets (Data Replication VPC in the diagram).

Because the source Oracle database is hosted in a different AWS account and VPC, we created an AWS PrivateLink VPC endpoint to the database endpoint service. We exposed both the source database and Oracle ASM service through the VPC endpoint in the source database AWS account.

AWS DMS is set up with an Oracle standby database as source and Aurora PostgreSQL cluster endpoint as target, all within the same Region. AWS DMS has two instances across Availability Zones within the same Region.

The Aurora PostgreSQL cluster has three instances: a primary instance along with two read replicas in different Availability Zones. By default, the Aurora database cluster endpoint connects to the writer (primary) instance of the Aurora database. Data is then automatically replicated across the reader instances within the Availability Zone.

In the following sections, we focus on the key considerations of the source and AWS DMS configuration for the replication.

Source (Oracle) configuration

The primary Oracle database is configured on Oracle ASM (version 19c). Data Guard is configured with Redo Transport Services for automated transfers of redo data and automatically applies redo to a standby, read-only database instance. For ongoing CDC through AWS DMS, minimal supplemental logging is enabled on the Oracle source database. We enabled supplemental logging on each table. We tested CPU utilization and throughput with and without supplemental logging enabled, and found that there is no noticeable difference in the performance metrics when supplemental logging is enabled. The source database was set to be in ARCHIVELOG ON mode for AWS DMS CDC tasks to run.

Additionally, we need database credentials as well as ASM user credentials to be able to connect to the database to read data and archived and redo logs, respectively.

AWS DMS configuration

In AWS DMS, there are two methods for reading the redo logs when doing CDC processing with Oracle as a source: Oracle LogMiner and AWS DMS Binary Reader. LogMiner is an Oracle API to read the online redo logs and archived redo log files. Binary Reader is an AWS DMS method that reads and parses the raw redo log files. For replications with a high volume of changes, LogMiner will have I/O and CPU implications on the server hosting the Oracle source database. Binary Reader has less I/O or CPU impact because logs are mined directly rather than running database queries. Because we didn’t want any performance impact on the source database, we decided to use an Active Data Guard physical standby database as the source database in Binary Reader mode, and this is the only mode supported for physical standby databases.

In our testing for replications with a high volume of changes, CDC performance was proven to be much better when using Binary Reader compared to Oracle LogMiner.

Instance selection

The following table summarizes our considerations when selecting a replication instance.

Parameter

Configuration

Determinant

Instance Class

dms.r6i.12xlarge

CPU and memory required

CPU

48

Number of tasks and parallel threads required, which in turn is dependent on the number of tables and volume

Memory

384 GB

Redo log growth and data volume for full loads

Storage

2 TB

Redo log growth and data volume for full loads (spillovers from memory)

Multi-AZ

Yes

Tolerance for downtime

Task design

For MyTime tables, the replication process was carried out in two phases: full load and ongoing replication (CDC).

Full load task setup

Dedicated tasks are created for the first-time, full load of data. Because full load can load multiple tables in parallel, to take advantage of multiple CPUs, we set parallel processes at 16 threads per task in the production implementation. For larger tables (with row count greater than 1 billion), the loading was done in parallel through multiple tasks. We made sure that the records targeted by each task don’t overlap. It was achieved by applying the filters in tasks so that they are mutually exclusive and evenly partition the data.

CDC task setup and handling referential integrity of data

Each task has its independent thread, and each task processes changed records independently to the target. We took this behavior into consideration while segregating tables into different CDC tasks. Putting related tables in different tasks for CDC may violate referential integrity. CDC tasks should be started as soon as possible after full load is completed successfully and validated. There is an option to set a timestamp from which the CDC task should start. You can set this timestamp after considering some safe buffer time. For example, if the full load task started at 13:00 UTC (it can be verified from the Amazon CloudWatch logs for AWS DMS), you can set the corresponding CDC task to start at 12:30 UTC. There is a possibility of data overlap, however, because we have primary keys defined on tables, the overlapping records are logged into the error tables.

Tasks with full load plus CDC compared to separate tasks for full load and CDC

One reason why we had to keep full load tasks separate from CDC tasks is because, for filters to work in CDC mode, supplemental logging had to be enabled on the filtered columns on top of the primary keys for those big tables. An important point to consider is the log retention time on the secondary Oracle database (in our case, it was 6 hours), which implies that we can’t set the CDC start time to a time older than 6 hours. AWS DMS reads the entire database redo log file (currently set to 16 GB in size) in memory as it’s generated, sorts the transactions by System Change Number (SCN), and applies them to the target in the same order as they were applied on the source.

Merging of an ongoing replication task into the existing ongoing replication task

We started building ongoing replication tasks for 50 tables initially; later, we had to ramp up to onboard 150 tables in four phases. The process we followed is depicted in the following flowchart. We started setting up full load and ongoing replication tasks for onboarding new tables to the replication framework. Essentially, the flow chart explains the end-to-end workflow to create the full load task, transition it to an ongoing replication task, and then merge it with the main ongoing replication task in order to maintain referential data integrity in the target relational database.

We use CloudWatch logs to track whether the task is caught up to the current redo log or not. If it’s caught up, we stop the task and merge it into the main ongoing replication task. Because we have primary and unique constraints in all tables, the overlapping records would go into exception tables, but by pulling the start time back by 30 minutes, we made sure there were no missing records.

Monitoring

For monitoring the AWS DMS task’s progress and replication instance’s utilization, we focused on the following CloudWatch metrics:

CDC latency source – The gap, in seconds, between the last event captured from the source endpoint and the current system timestamp of the AWS DMS instance. Unit: seconds.

CDC incoming changes – The total number of change events at a point in time that are waiting to be applied to the target. Unit: count.

CDC latency target – The number of rows accumulating in memory and waiting to be committed to the target. Unit: seconds.

CDC changes disk source – The number of rows accumulating on disk and waiting to be committed from the source. Unit: count.

CDC changes disk target – The number of rows accumulating on disk and waiting to be committed to the target. Unit: count.

CDC changes memory source – The number of rows accumulating in memory and waiting to be committed from the source. Unit: count.

CDC changes memory target – The number of rows accumulating in memory and waiting to be committed to the target. Unit: count.

AvailableMemory – An estimate of how much memory is available for starting new applications, without swapping.

CPUAllocated – The percentage of CPU maximally allocated for the task (0 means no limit). Units: percent.

CPUUtilization – The percentage of allocated virtual CPU (vCPU) currently in use on the instance. Units: percent.

DiskQueueDepth – The number of outstanding read/write requests (I/O) waiting to access the disk. Units: count.

FreeStorageSpace – The amount of available storage space. Units: bytes.

FreeMemory – The amount of physical memory available for use by applications, page cache, and for the kernel’s own data structures. Units: bytes.

FreeableMemory – The amount of available random-access memory. Units: bytes.

We dive deep into using CloudWatch metrics for AWS DMS and other custom measures to achieve operational excellence in the next post in this series.

Conclusion

In this post, we discussed how a solution built with AWS managed services (a combination of AWS DMS and Amazon Aurora PostgreSQL) helped Amazon Timehub overcome data freshness and CDC challenges with their existing custom ETL solution, resulting in sub-minute data propagation latency between source and target (from 4 hours previously). The solution helped reduce resource demands on the source database instance and de-risked data loss scenarios in the data transfer process. Additionally, it reduced the development time by 85% (from 1 week to 1 day for a new table addition for replication), and reduced the cost by 35% by moving from a custom ETL solution. Downstream operational overhead was reduced compared to a traditional ETL solution because the complexity of identifying changes (insertions, modifications, and deletions) is now taken care of by AWS DMS inherently.

In the next parts of this series, we discuss and dive deep into how Amazon Timehub built resilience into the solution and a data validation framework for speedy recovery from disasters or failures.

About the Authors

Ujjawal Kumar is a Data Engineer with Amazon’s Timehub team. He has over 12 years of experience as a data professional working in domains such as finance, treasury, sales, HCM, and timekeeping. He is passionate about learning all things data and implementing end-to-end data pipelines for data analytics and reporting. He is based out of Dallas, Texas.

Amit Aman is Data Engineering Manager with the Amazon’s Timehub team. He is passionate about building data solutions that help drive business outcomes. He has over 17 years of experience in building data solutions for large enterprises across retail, airlines, and people experience. He is based out of Dallas, Texas.

Saikat Gomes is part of the Customer Solutions team in Amazon Web Services. He is passionate about helping enterprises succeed and realize benefits from cloud adoption. He is a strategic advisor to his customers for large-scale cloud transformations involving people, process, and technology. Prior to joining AWS, he held multiple consulting leadership positions and led large-scale transformation programs in the retail industry for over 20 years. He is based out of Los Angeles, California.

Manmohan Singh is a Sr. Solutions Architect with AWS, focusing on building resilient, highly available, ultra-low-latency, cost-effective solutions, and is innovating with Amazon in database, data analytics, AI/ML, generative AI, and observability. He has over 25 years of IT experience in working with retail and finance verticals. He is based out of San Francisco, California.

Read MoreAWS Database Blog