This is a guest post by Niv Gelbermann Shkolnik , Software Engineer at Skai co-authored with Eran Balan, Senior Solutions Architect at AWS.

Skai is the leading omnichannel platform that helps performance marketers plan, activate, and measure programs across digital media for informed decisions, high efficiency and optimal return.

In this post, we discuss how Skai used AWS Database Migration Service (AWS DMS) to perform a real-time migration to one of its critical databases as part of a microservice modernization project. AWS DMS is a managed migration service that helps customers move databases to AWS. It can also be used to perform migrations between two existing data sources in AWS while the source database remains fully operational.

Challenge

Skai, like many companies, faces scaling challenges as its business grows. One approach to mitigate those challenges is to deprecate legacy services and create new microservices that will scale more easily.

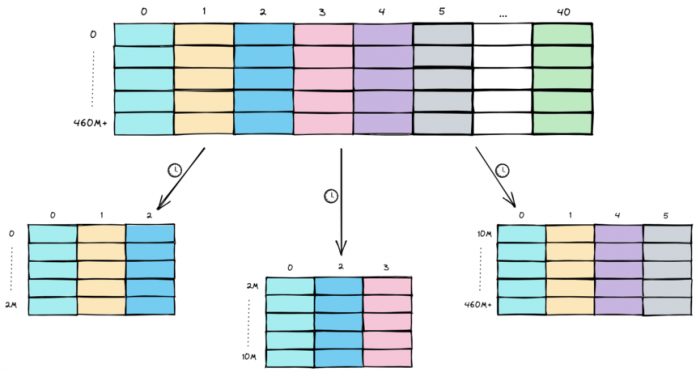

Skai decided to use this approach to deprecate a central monolithic service that could no longer carry the load it was handling; it was using a constantly growing MySQL table with more than 460 million rows and 40 columns. As part of the system redesign, the new service would have to handle this table’s data in a more scalable way. For that, we wanted to migrate the table under the following requirements:

Break the source table into several small target tables based on a single-column filter (equivalent to a single SQL “WHERE” clause) applied to the source table.

The target tables’ expected sizes at the time varied between a few million records to 450 million records.

Migrate only a few columns per target table, usually 3–4 columns.

Keep migrating continuous changes from the source database over a period of weeks or months until the migration to the new service is complete.

To make the requirements clear, let’s visualize the migration from the bloated source table to the target sub-tables:

The new data model allowed us to create a more efficient microservice in terms of processing latency and throughput as well as storage requirements.

We were looking for a tool that would handle this data migration reliably, with minimal effort from our side.

Solution

We chose AWS DMS to perform the migration for two main features:

First, the service supports several migration modes, one of which is full-load migration followed up with change data capture (in this post, we will abbreviate this to FL+CDC). The service can perform an initial transfer of all the data from the source database to the target, then continue syncing ongoing changes for an indefinite period based on binlogs. We expected the system refactoring would take a few months, so a solution that would continue the live replication during this period was a must-have.

Second, the service allows the configuration of selection rules and transformation rules, dictating what part of the data is transferred and how it is mutated during the transfer (if at all).

For each target table, we used selection rules to migrate only a specific subset of the source table rows and transformation rules to migrate a small subset of columns.

In preparation for the migration, we followed the Access CloudWatch Logs for an AWS DMS task guide for setting up user access to AWS DMS tasks logs.

In total, these were the main components required to configure prior to the migration:

Source database: a read replica of the deprecated microservice’s database.

Target database: the database of the new and refactored microservice.

AWS DMS replication instance: a data transmission and processing handler.

AWS DMS task: the metadata that defines the migration.

Source database

Because the source database handled live traffic from the soon-to-be-retired service, we were looking for a way to minimize the impact on the production environment. For this reason, we created a read replica of the deprecated database to serve as the AWS DMS source database, so any workload AWS DMS adds to its source database would only impact the read replica (this would later turn out to be more complicated than we predicted—more details to follow).

We made the modifications suggested in the DMS documentation to the primary instance of our source RDS cluster with the following additions:

Create a user called “dms,” with privileges for executing the migration:

Increase the binlog’s retention time:

The X parameter determines the number of hours the binlog data is retained. In case of migration issues, you might need to resync the changes to the target database. If the retention period isn’t long enough, you might need to start the migration process from scratch. We chose the X as 120 hours (5 days) because we estimated it would be enough time for us to investigate and fix any issues during the migration.

Target database

The Amazon RDS target database would be the database used by the new microservice. Live traffic would be routed to this service once we decided on the cutover from the deprecated service.

We made the modifications suggested in the DMS documentation to the database.

Additionally, we reviewed the target database hardware settings to verify that:

It has enough storage space for the estimated total data it should receive during the migration.

Its instance type is roughly equivalent to the source database instance type, which is recommended at least for the duration of the migration.

Replication instance

The replication instance is the Amazon Elastic Compute (Amazon EC2) instance that performs the selection, transformation, formatting, and transmission of the migrated data.

Since the source database was heavy on write operations, which translates to a heavy workload on the replication instance, we decided to use the same instance type for the replication instance as the source DB instance (in our case, db.m5.4xlarge, which we also applied to the target database for the duration of the migration).

It’s also important to provision enough disk space for caching and swapping. We provisioned about three times the expected size of the target database. You can read more on how to size your replication instance at the AWS DMS User Guide.

AWS DMS task

The migration task defines how the migration should work. We created several migration tasks, one per target table, and the main settings in our case were:

Migration type: Migrate existing data and replicate ongoing changes (FL+CDC).

Do nothing for target table preparation (you can also set it to drop or truncate the target table).

Do not stop after the full load completes.

Enabled CloudWatch logs.

Commit rate of 50K (max value, instead of the default 10K) to speed up the full load process.

In addition to these properties, a migration task is defined with a selection rule and zero or more transformation rules. Our selection rule specified the source table on the read replica, as well as a filter on one of its columns, to only migrate a subset of rows from the source data.

We used several types of transformation rules:

As mentioned above, the source had 40 columns, while the target tables had 3–4 columns only. To support this, we created a remove-column transformation on all the unnecessary columns.

To support new column names in the target tables, we created rename transformation rules on some of the migrated columns.

Challenges along the way

With configurations finished, we started the migration tasks. The full-load transfer ran and completed without issues, and the migration moved to the change data capture (CDC) stage. At this point, we stopped seeing data updates on the target database.

Initial investigation raised a read replica issue that started between the setup of the replica and the start of the migration process: the replica accumulated several days’ worth of replication lag. Further investigation yielded that the replication is, by default, single-threaded. This, combined with the outdated design of the deprecated microservice (e.g., frequent unoptimized queries and full-table scans), created a significant load on the replication process, causing a system lock.

To resolve the issue, we switched to multi-threaded replication by changing some settings:

Update the following parameters on the read replica’s parameter group:

slave_parallel_type = LOGICAL_CLOCK

slave_parallel_workers = 4 (and later even 16, or as many as the vCPUs of the database’s instance type)

slave_preserve_commit_order = 1

tx_isolation = READ-COMMITTED

Enable automatic backups via the Amazon RDS GUI. This setting is separate from the binlog retention hours setting mentioned previously and is required when using multi-threaded replication with slave_preserve_commit_order = 1.

With the new settings applied, all that was left for the CDC migration to continue was to restart the read replica, and wait for the replication to catch up with the lag.

Other learnings

When slave_preserve_commit_order is set to 1, data changes are guaranteed to be made on the read replica in the same order they were made on its source database. This can cause threads to wait for each other, slowing down the replication.

In our use case, the columns we wanted to preserve in the migration for each target table were identifier columns only, meaning they never changed on the source database. No illegal state could be reached by migrating changes in the wrong order. Consequently, we could change this setting back to 0, speeding up the replication even more until successful completion.

To summarize, we carried out individual migrations for each target table and the longest full load was completed within a few hours. Once all migrations were completed, we had the flexibility to discontinue the use of the old microservice and its associated database and perform the desired cutover which took a few minutes to complete.

Conclusion

In this post, we explored the way AWS DMS allowed Skai to transition to a new data model and modernized architecture. It helped us deprecate a monolithic service and in doing so, reduce storage costs, as well as upgrade central business logic designs, which in itself allows for faster development cycles in these areas.

At all points during the migration, AWS Support was readily available to counsel us about any challenge and reduce friction in unfamiliar territory, helping us streamline the migration processes.

We encourage you to look into your data models to determine if they should be modernized. Try out AWS Database Migration Service for a smooth, managed, and supported transition.

About the Authors

Niv Gelbermann is a Fullstack Software Engineer, based in Israel. He works at Skai’s omnichannel platform for marketers and campaign managers.

Eran Balan is an AWS Senior Solutions Architect based in Israel. He works with digital native customers to provide them with architectural guidance for building scalable architecture in AWS environments.

Read MoreAWS Database Blog