This is a guest post co-written with Hemant Singh and Mohit Bansal from PayU.

PayU is one of India’s leading digital financial services providers, and offers advanced solutions to meet the digital payment requirements of the market.

PayU provides payment gateway solutions to online businesses through its award-winning technology and has empowered over 500,000 businesses, including the country’s leading enterprises, e-commerce giants, and SMBs, to process millions of transactions daily. We enable businesses to collect digital payments across 150+ online payment methods such as credit cards, debit cards, net banking, EMIs, pay-later, QR, UPI, wallets, and more. We are also the preferred partner in the affordability ecosystem, offering maximum coverage of issuers and easy-to-implement integrations across card-based EMIs, pay-later options, and new-age cardless EMIs.

Data is at the center of decision-making and customer experience at PayU. We use insights from data analytics and machine learning (ML) to drive multiple business flows. For example, we use ML to power the recommendation engine on PayU Checkout pages, fraud detection, and more.

A feature store is a centralized repository that manages ML features for training and inference. During training, it stores versioned feature data, which makes training reproducible and lets you monitor feature distributions. At inference time, it serves feature vectors consistently, providing parity between the training and serving data environments. In this post, we outline how we at PayU use Amazon Keyspaces (for Apache Cassandra) as the feature store for real-time, low-latency inference in the payment flow.

Amazon Keyspaces is a scalable, highly available, and managed Apache Cassandra-compatible database service. With Amazon Keyspaces, you can run your Apache Cassandra workloads on AWS using the same Cassandra application code and developer tools that you use today. You don’t have to provision, patch, or manage servers, and you don’t have to install, maintain, or operate software. Amazon Keyspaces is serverless, so you pay for only the resources you use, and the service can automatically scale tables up and down in response to application traffic. You can build applications that serve thousands of requests per second with virtually unlimited throughput and storage.

Machine learning at PayU

We use multiple ML models to drive value across our applications. One example is the recommendation engine on PayU Checkout pages, which uses ML models to rank payment modes. The ranking is based on recent success-rate data for each payment mode. A good ranking helps customers choose the right payment mode, which improves the payment success rate and reduces bounced or dropped transactions.
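As a rough illustration of success-rate-based ranking, the following Python sketch orders payment modes by a smoothed success rate. It is not PayU's model: the `ModeStats` type, the `rank_payment_modes` function, the Laplace-style smoothing prior, and the sample counts are all hypothetical. Smoothing keeps a mode with only a handful of recent attempts from outranking a mode with a long, reliable history.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModeStats:
    """Recent outcome counts for one payment mode (hypothetical schema)."""
    mode: str
    attempts: int
    successes: int


def smoothed_success_rate(stats: ModeStats, prior_successes: float = 1.0,
                          prior_failures: float = 1.0) -> float:
    # Laplace-style smoothing: modes with few attempts are pulled toward 0.5.
    return (stats.successes + prior_successes) / (
        stats.attempts + prior_successes + prior_failures)


def rank_payment_modes(all_stats: List[ModeStats]) -> List[str]:
    # Highest smoothed success rate first; ties broken alphabetically.
    ordered = sorted(all_stats,
                     key=lambda s: (-smoothed_success_rate(s), s.mode))
    return [s.mode for s in ordered]


recent = [
    ModeStats("Cards", attempts=1000, successes=880),
    ModeStats("Wallet", attempts=10, successes=9),
    ModeStats("UPI", attempts=1000, successes=950),
]
print(rank_payment_modes(recent))  # ['UPI', 'Cards', 'Wallet']
```

With raw rates, Wallet (9 of 10, 90%) would rank above Cards (88%) on the strength of only ten attempts.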

The inference that produces the ranking is in the critical path of the payment flow, so low-latency inference is paramount. The ML model needs to read data from a low-latency feature store during inference, and the feature store must sustain high throughput while keeping latency consistently low. The feature store holds data about entities such as merchants, customers, and payment clients. During inference, the API reads from the feature store using the entity's primary key: merchant ID, customer ID, or payment client ID. There are multiple such use cases where ML models need a low-latency feature store during inference.
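The access pattern is a single-key lookup per entity. The sketch below models it in memory: each entity type gets its own table keyed by its ID, and the feature vector is always assembled in one fixed feature order so that serving matches training. In Amazon Keyspaces, each such lookup would map to a single-partition query such as `SELECT ... FROM merchant_features WHERE merchant_id = ?`. The class, table, and feature names are hypothetical.

```python
from typing import Dict, List, Mapping


class InMemoryFeatureStore:
    """Toy stand-in for a key-value feature store (hypothetical API)."""

    def __init__(self) -> None:
        # entity_type -> entity_id -> feature_name -> value
        self._tables: Dict[str, Dict[str, Dict[str, float]]] = {}

    def put(self, entity_type: str, entity_id: str,
            features: Mapping[str, float]) -> None:
        self._tables.setdefault(entity_type, {})[entity_id] = dict(features)

    def feature_vector(self, entity_type: str, entity_id: str,
                       feature_names: List[str],
                       default: float = 0.0) -> List[float]:
        # One primary-key lookup; missing rows or features fall back to a default.
        row = self._tables.get(entity_type, {}).get(entity_id, {})
        return [row.get(name, default) for name in feature_names]


# Feature order fixed once and shared by training and serving code.
MERCHANT_FEATURES = ["success_rate_7d", "txn_count_7d", "refund_rate_30d"]

store = InMemoryFeatureStore()
store.put("merchant", "m-123", {"success_rate_7d": 0.93, "txn_count_7d": 5400.0})
print(store.feature_vector("merchant", "m-123", MERCHANT_FEATURES))
# [0.93, 5400.0, 0.0]
```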

Amazon Redshift is the single source of data for feature stores at PayU. Amazon Redshift is a fast, fully managed cloud data warehouse that makes it straightforward and cost-effective to analyze all your data, and you can use data in your Redshift cluster to train ML models. However, Amazon Redshift is optimized for online analytical processing (OLAP), whereas our inference feature store needs a low-latency, high-throughput NoSQL OLTP database. Therefore, a periodic process writes data from Amazon Redshift to the inference feature store. The refresh interval depends on the use case.

In one of our use cases, we have 500 million records in two tables with over 30 features, and the feature store data is refreshed every 15 days. In another use case, we have about 50 million records in 10 tables with over 50 features, and the data is refreshed every week. The business flow needs to perform ML inference at 100–200 TPS, and feature store reads must complete in single-digit milliseconds.

Solution overview

We were looking for a fully managed NoSQL database service because we didn’t want to spend engineering effort on undifferentiated work like provisioning, managing, or patching the database. We also wanted to take advantage of our engineers’ proficiency with the Cassandra API.

We decided to use Amazon Keyspaces because it’s a fully managed, serverless database service compatible with Cassandra.

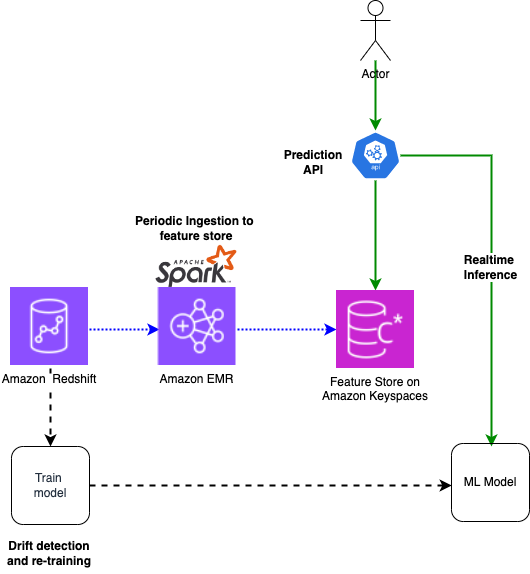

The following diagram shows the high-level architecture of the solution.

Data is ingested into Amazon Redshift periodically from multiple sources, such as change data capture (CDC) from OLTP sources and clickstreams. The Redshift data is used to train ML models. For the inference flow, periodic Apache Spark batch jobs running on Amazon EMR ingest data into a feature store on Amazon Keyspaces. In the business flow, the application calls the Prediction API, which queries the feature store by primary key and uses the returned features for inference.

In the following sections, we discuss the implementation in more detail.

Cross-account access

PayU has a multi-account setup and a centralized data platform. Multiple applications produce data to and consume data from the data platform, which we built using custom data pipelines, data lakes on Amazon Simple Storage Service (Amazon S3), and Amazon Redshift. Resources for the data platform are hosted in a centralized account (the data account), and the applications are hosted in multiple separate accounts (the application accounts). Because the data platform hosts data for the whole organization, access to that data is closely governed for compliance reasons. The inference is part of the application microservices, so hosting the feature store in the data account would have meant opening cross-account access from the application accounts to the data account. To maintain a tighter permission boundary, we decided to host the feature store in the application account instead.

Therefore, the feature store is co-located in the application account. The data platform team is responsible for hosting ingestion pipelines in the data account and ingesting data into the feature store. This meant that the ingestion pipelines needed to read data from Amazon Redshift and write it across accounts to Amazon Keyspaces: the Spark job had to run in the data account and perform a cross-account write to Amazon Keyspaces while keeping the data flow on a private network.

The following diagram shows how the data and applications are hosted in separate accounts.

The architecture contains the following key components:

Spark jobs run on Amazon EMR in private subnets of the data account. Spark jobs use Amazon Virtual Private Cloud (Amazon VPC) endpoints to access AWS services such as Amazon S3 and Amazon Redshift.

The Amazon Keyspaces database is hosted in the application account.

The Spark job running on Amazon EMR needs cross-account access to the Amazon Keyspaces database. The job reads data from Amazon Redshift or Amazon S3, transforms the data, and writes it to the Amazon Keyspaces database.

The Spark job needs to access the Amazon Keyspaces database using a private endpoint.

The following diagram shows the solution architecture for cross-account access.

Implement the solution

In this section, we show how you can implement a similar solution. Complete the following high-level steps:

Create an AWS Identity and Access Management (IAM) role (Amazon Keyspaces role) in the application account that has permission to access Amazon Keyspaces in that account, plus the ec2:DescribeNetworkInterfaces and ec2:DescribeVpcEndpoints permissions required when connecting through the Amazon Keyspaces VPC endpoint. For more information, refer to Using Amazon Keyspaces with interface VPC endpoints.
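A minimal sketch of the Amazon Keyspaces role's permissions policy, built as a Python dictionary so it can be serialized with `json.dumps` and attached with your usual tooling. The Region, account ID, keyspace, and table names are placeholders. The `system*` statement is an assumption based on client drivers needing to read Amazon Keyspaces system tables; the two `ec2:Describe*` actions are the ones this step names for the VPC endpoint. Scope the `cassandra:` actions to what your job actually needs.

```python
import json
from typing import Any, Dict


def keyspaces_role_policy(region: str, account_id: str,
                          keyspace: str, table: str) -> Dict[str, Any]:
    """Permissions for the Amazon Keyspaces role in the application account."""
    arn_prefix = f"arn:aws:cassandra:{region}:{account_id}:/keyspace"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read and write the feature table.
                "Sid": "FeatureTableAccess",
                "Effect": "Allow",
                "Action": ["cassandra:Select", "cassandra:Modify"],
                "Resource": [f"{arn_prefix}/{keyspace}/table/{table}"],
            },
            {   # Drivers query system tables (for example, system.peers).
                "Sid": "SystemTableRead",
                "Effect": "Allow",
                "Action": ["cassandra:Select"],
                "Resource": [f"{arn_prefix}/system*"],
            },
            {   # Used so system.peers can list the VPC endpoint's IP addresses.
                "Sid": "VpcEndpointDiscovery",
                "Effect": "Allow",
                "Action": ["ec2:DescribeNetworkInterfaces",
                           "ec2:DescribeVpcEndpoints"],
                "Resource": "*",
            },
        ],
    }


# Placeholder values for illustration only.
policy = keyspaces_role_policy("ap-south-1", "111122223333",
                               "feature_store", "merchant_features")
print(json.dumps(policy, indent=2))
```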

Note down the role ARN. You use this in the Cassandra application configuration file (named cassandra-application.conf) in the data account.

Create an Amazon Keyspaces VPC endpoint in the application account. The VPC endpoint should be in a private subnet.

Note the Regional DNS name of the Amazon Keyspaces VPC endpoint. You use this DNS name in cassandra-application.conf in the data account.

Create an IAM role for the Amazon EMR EC2 instance profile (Spark role) in the data account. The role should have permission to assume the cross-account Amazon Keyspaces role you created.

Note the EMR instance profile role ARN. You use this to update the trust policy of the Amazon Keyspaces role.

Create an AWS Security Token Service (AWS STS) VPC endpoint in the data account so that the Spark job can call AWS STS to assume the Amazon Keyspaces role over the private network. The VPC endpoint should be in the private subnet where you create the EMR cluster.

Update the trust policy of the Amazon Keyspaces role to allow the EMR instance profile role to assume the Amazon Keyspaces role.
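The two halves of the cross-account handshake from the preceding steps can be sketched as two small policy documents: a permissions policy on the Spark role that allows `sts:AssumeRole` on the Amazon Keyspaces role, and a trust policy on the Amazon Keyspaces role that names the Spark role as a trusted principal. Both account IDs and role names below are placeholders. The trust policy takes the ARN of the IAM role behind the EMR instance profile, not the instance profile ARN itself.

```python
import json
from typing import Any, Dict


def spark_role_assume_policy(keyspaces_role_arn: str) -> Dict[str, Any]:
    """Attached to the Spark (EMR instance profile) role in the data account."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            "Resource": keyspaces_role_arn,
        }],
    }


def keyspaces_role_trust_policy(spark_role_arn: str) -> Dict[str, Any]:
    """Trust policy of the Amazon Keyspaces role in the application account."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": spark_role_arn},
            "Action": "sts:AssumeRole",
        }],
    }


# Hypothetical ARNs: application account 111122223333, data account 444455556666.
KEYSPACES_ROLE = "arn:aws:iam::111122223333:role/keyspaces-feature-store-writer"
SPARK_ROLE = "arn:aws:iam::444455556666:role/emr-spark-instance-role"

print(json.dumps(spark_role_assume_policy(KEYSPACES_ROLE), indent=2))
print(json.dumps(keyspaces_role_trust_policy(SPARK_ROLE), indent=2))
```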

Create a cross-account VPC peering connection between the VPC that hosts the Amazon Keyspaces VPC endpoint and the Amazon EMR VPC. This makes sure Amazon EMR accesses Amazon Keyspaces over a private network. You can also implement the network connectivity using AWS Transit Gateway; we implemented the solution using a VPC peering connection.

Update the route tables of the EMR subnets to allow cross-account connection to the private subnet of the Amazon Keyspaces VPC endpoint over the peering connection.

Similarly, update the route tables of the private subnet of the Amazon Keyspaces VPC endpoint to allow connectivity with the EMR subnet.
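To check the routing from the previous two steps, it helps to remember that a VPC route table picks the most specific (longest-prefix) route that contains the destination address. The sketch below applies that rule with Python's standard `ipaddress` module. The CIDR blocks and the peering connection ID are hypothetical; VPC peering also requires that the two VPCs' CIDR blocks do not overlap.

```python
import ipaddress
from typing import Mapping, Optional


def next_hop(routes: Mapping[str, str], destination_ip: str) -> Optional[str]:
    """Return the target of the longest-prefix route containing destination_ip."""
    dest = ipaddress.ip_address(destination_ip)
    best = None
    for cidr, target in routes.items():
        network = ipaddress.ip_network(cidr)
        if dest in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, target)
    return best[1] if best else None


# Hypothetical route table for the EMR private subnets in the data account
# (EMR VPC 10.0.0.0/16, Amazon Keyspaces endpoint VPC 10.1.0.0/16).
emr_subnet_routes = {
    "10.0.0.0/16": "local",
    "10.1.0.0/16": "pcx-0example",
}

print(next_hop(emr_subnet_routes, "10.1.2.15"))  # pcx-0example
print(next_hop(emr_subnet_routes, "10.0.8.4"))   # local
print(next_hop(emr_subnet_routes, "10.2.0.1"))   # None
```

The Amazon Keyspaces side needs the mirror-image route (10.0.0.0/16 to the same peering connection) so that responses can return to the EMR nodes.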

Create a Cassandra configuration file (cassandra-application.conf) with the following properties:

basic.contact-points: The Regional DNS name of the Amazon Keyspaces VPC endpoint in the application account.

auth-provider: Set to software.aws.mcs.auth.SigV4AuthProvider.

aws-region: The AWS Region where the Amazon Keyspaces table is hosted.

aws-role-arn: The ARN of the Amazon Keyspaces role.

The following is the sample cassandra-application.conf file:
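A minimal sketch of such a file, rendered from Python so that the values listed above are the only inputs. The endpoint DNS name, Region, role ARN, truststore path, and password are placeholders. The remaining settings (port 9142, the load-balancing datacenter set to the Region, LOCAL_QUORUM consistency, and the SSL engine factory with hostname validation turned off for the VPC endpoint DNS name) are assumptions based on the usual Java driver 4.x setup for Amazon Keyspaces.

```python
def render_cassandra_conf(endpoint_dns: str, region: str, role_arn: str,
                          truststore_path: str, truststore_password: str) -> str:
    """Render cassandra-application.conf (HOCON) for the Java driver 4.x."""
    # Doubled braces are literal HOCON braces inside the f-string.
    return f"""datastax-java-driver {{
  basic.contact-points = ["{endpoint_dns}:9142"]
  basic.load-balancing-policy {{
    class = DefaultLoadBalancingPolicy
    local-datacenter = "{region}"
  }}
  basic.request.consistency = LOCAL_QUORUM
  advanced.auth-provider {{
    class = software.aws.mcs.auth.SigV4AuthProvider
    aws-region = "{region}"
    aws-role-arn = "{role_arn}"
  }}
  advanced.ssl-engine-factory {{
    class = DefaultSslEngineFactory
    truststore-path = "{truststore_path}"
    truststore-password = "{truststore_password}"
    hostname-validation = false
  }}
}}
"""


# Placeholder values; the truststore path matches the bootstrap copy location.
conf = render_cassandra_conf(
    endpoint_dns="vpce-0example.cassandra.ap-south-1.vpce.amazonaws.com",
    region="ap-south-1",
    role_arn="arn:aws:iam::111122223333:role/keyspaces-feature-store-writer",
    truststore_path="/home/hadoop/cassandra_truststore.jks",
    truststore_password="CHANGE_ME",
)
print(conf)
```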

Create an Amazon Keyspaces truststore file. For instructions, see Using a Cassandra Java client driver to access Amazon Keyspaces programmatically.

Upload cassandra-application.conf and the Amazon Keyspaces truststore file to an S3 bucket in the data account.

Create an EMR cluster in a private subnet of the data account with bootstrap actions to copy cassandra-application.conf and the Amazon Keyspaces truststore file to the /home/hadoop directory of the EMR nodes.

Build the aws-sigv4-auth-cassandra-java-driver-plugin JAR file from the source code in the aws-sigv4-auth-cassandra-java-driver-plugin repository and upload it to the S3 bucket in the data account.

The approach has been tested on Amazon EMR version 6.10.0, which includes Spark 3.3.1. For this version of Spark, download spark-cassandra-connector-assembly_2.12-3.3.0.jar and upload it to the S3 bucket in the data account.

Run the Spark job to read data from Amazon Redshift or Amazon S3 and write it to Amazon Keyspaces. The following code is a sample run:
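A minimal sketch of assembling such a run, written as a Python helper that builds the `spark-submit` argument list (for example, to pass to `subprocess.run` on the primary node or as the arguments of an EMR step). The bucket name, JAR object keys, and job script name are hypothetical. It assumes the bootstrap action has already copied cassandra-application.conf to /home/hadoop on every node, and points the Spark Cassandra Connector at it through `spark.cassandra.connection.config.profile.path`.

```python
from typing import List

SCC_JAR = "spark-cassandra-connector-assembly_2.12-3.3.0.jar"


def spark_submit_args(
        bucket: str,
        job_script: str,
        sigv4_jar: str = "aws-sigv4-auth-cassandra-java-driver-plugin.jar",
        conf_path: str = "/home/hadoop/cassandra-application.conf") -> List[str]:
    """Build a spark-submit command for the Redshift/S3-to-Keyspaces job."""
    jars = [f"s3://{bucket}/jars/{SCC_JAR}", f"s3://{bucket}/jars/{sigv4_jar}"]
    return [
        "spark-submit",
        "--deploy-mode", "cluster",
        # Connector and SigV4 plugin JARs uploaded to the data account bucket.
        "--jars", ",".join(jars),
        # Java driver profile with contact point, SigV4 auth, and role ARN.
        "--conf", f"spark.cassandra.connection.config.profile.path={conf_path}",
        f"s3://{bucket}/jobs/{job_script}",
    ]


args = spark_submit_args("example-data-account-bucket", "load_features.py")
print(" ".join(args))
```

Inside the job script, writes would typically go through the connector's `org.apache.spark.sql.cassandra` DataFrame source with `keyspace` and `table` options.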

Choosing the right capacity mode

The table was initially created with on-demand capacity mode. On-demand capacity mode is a flexible billing option capable of serving thousands of requests per second without capacity planning. This option offers pay-per-request pricing for read and write requests so you pay only for what you use.

We observed that the batch ingestion process was taking a long time, and increasing the capacity of the Spark job didn't help. This was due to the peak traffic and scaling properties of Amazon Keyspaces. In on-demand capacity mode, if you need more than double your previous peak on a table, Amazon Keyspaces automatically allocates more capacity as your traffic volume increases. This helps make sure your table has enough throughput capacity to process the additional requests. However, you might observe insufficient throughput capacity errors if you exceed double your previous peak within 30 minutes. The service recommends that you space your traffic growth over at least 30 minutes before doubling the throughput.
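To see why adding Spark capacity did not help, the following sketch applies a simplified model of the guidance above: traffic up to double the previous peak is served right away, and each further doubling needs roughly 30 minutes at the new level first. This is an approximation for reasoning about ramp-up time, not the service's exact scaling algorithm, and the sample peak and target rates are hypothetical.

```python
def minutes_to_reach(previous_peak: float, target: float,
                     window_minutes: int = 30) -> int:
    """Approximate ramp-up time on an on-demand table (simplified model)."""
    if previous_peak <= 0:
        raise ValueError("previous_peak must be positive")
    allowed = 2 * previous_peak  # up to 2x the previous peak is served at once
    minutes = 0
    while allowed < target:
        # Sustain the current level for one window; it becomes the new peak.
        allowed *= 2
        minutes += window_minutes
    return minutes


# Hypothetical: table previously peaked at 1,000 writes/s; batch job wants 64,000.
print(minutes_to_reach(1_000, 64_000))  # 150
```

Under this model the ramp alone takes about two and a half hours, longer than a two-hour batch window, no matter how many Spark executors are added.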

The batch ingestion job needed high capacity for the duration of the job. Batch jobs have a predictable schedule and the capacity required for jobs could be computed from the size of ingested data and table. The AWS team advised us to use provisioned capacity mode during the batch ingestion with high capacity and switch back to on-demand when the batch ingestion was over. After we chose provisioned capacity mode with high enough capacity, the batch job finished within the SLA of two hours.
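The capacity estimate itself is simple arithmetic. In provisioned mode, one write capacity unit (WCU) covers one write per second of a row up to 1 KB with LOCAL_QUORUM consistency, and larger rows consume one WCU per started 1 KB. The sketch below, assuming 1 KB = 1,024 bytes and an evenly spread write rate, estimates the WCUs needed to load a given number of rows within a time window. The 1 KB row size is an assumption; the 500 million rows and the two-hour window come from the use case and SLA described in this post. Because the write phase is shorter than the whole job and some headroom helps, treat the result as a lower bound.

```python
def required_wcu(rows: int, row_size_bytes: int, window_seconds: int) -> int:
    """Minimum write capacity units to write `rows` rows within the window."""
    wcu_per_row = (row_size_bytes + 1023) // 1024  # one WCU per started 1 KB
    total_units = rows * wcu_per_row
    # Integer ceiling division: spread all writes evenly over the window.
    return (total_units + window_seconds - 1) // window_seconds


# 500 million rows of up to 1 KB each, written within a two-hour window.
print(required_wcu(500_000_000, 1_024, 2 * 60 * 60))  # 69445
```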

This process works because the batch ingestion is performed periodically; the period ranges from 1 week to 1 month.

Conclusion

We onboarded multiple ML use cases requiring real-time inference on this architecture, which uses Amazon Keyspaces as a feature store. The service provides flexibility in choosing the capacity mode and switching between on-demand and provisioned capacity mode using an API call. We have automation in place to manage the switch: a script calculates the desired provisioned capacity based on the size of the table and makes the switch before running the batch job.
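A minimal sketch of that switch, assuming the AWS SDK for Python (Boto3) Amazon Keyspaces client and its `update_table` and `get_table` operations. The helper names, keyspace, table, and capacity values are hypothetical, the capacity numbers would come from your own sizing calculation, and the client is passed in so the flow can be exercised without AWS access. The `finally` block returns the table to on-demand mode even if the batch job fails.

```python
import time
from typing import Any, Callable


def to_provisioned(client: Any, keyspace: str, table: str,
                   read_units: int, write_units: int) -> None:
    """Switch the table to provisioned capacity with the given units."""
    client.update_table(
        keyspaceName=keyspace,
        tableName=table,
        capacitySpecification={
            "throughputMode": "PROVISIONED",
            "readCapacityUnits": read_units,
            "writeCapacityUnits": write_units,
        },
    )


def to_on_demand(client: Any, keyspace: str, table: str) -> None:
    """Switch the table back to on-demand (pay-per-request) capacity."""
    client.update_table(
        keyspaceName=keyspace,
        tableName=table,
        capacitySpecification={"throughputMode": "PAY_PER_REQUEST"},
    )


def wait_until_active(client: Any, keyspace: str, table: str,
                      poll_seconds: float = 10.0,
                      sleep: Callable[[float], None] = time.sleep) -> None:
    """Poll get_table until the table reports ACTIVE again."""
    while client.get_table(keyspaceName=keyspace,
                           tableName=table)["status"] != "ACTIVE":
        sleep(poll_seconds)


def run_with_provisioned_capacity(client: Any, keyspace: str, table: str,
                                  read_units: int, write_units: int,
                                  batch_job: Callable[[], None]) -> None:
    """Raise capacity for a batch load, then always return to on-demand."""
    to_provisioned(client, keyspace, table, read_units, write_units)
    wait_until_active(client, keyspace, table)
    try:
        batch_job()
    finally:
        to_on_demand(client, keyspace, table)
        wait_until_active(client, keyspace, table)


# Hypothetical usage with Boto3 (requires AWS credentials):
# import boto3
# client = boto3.client("keyspaces")
# run_with_provisioned_capacity(client, "feature_store", "merchant_features",
#                               read_units=100, write_units=70_000,
#                               batch_job=submit_spark_job)  # your own function
```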

During the inference process, the capacity scales smoothly. Amazon Keyspaces is serverless, so we don’t need additional engineers to manage the infrastructure. Data engineers manage the infrastructure, enabling a leaner team. This flexibility lets our business add new use cases without significant overhead.

For more information about Amazon Keyspaces, including developer tools and sample code, refer to Amazon Keyspaces (for Apache Cassandra) resources.

About the authors

Hemant Singh is a Senior Data Engineering Manager at PayU in Bangalore, India, with over 12 years of experience in the field. Specializing in architecting and managing scalable data platforms, Hemant excels in building robust data pipelines, optimizing data workflows, and ensuring audit readiness. With a strong foundation in leveraging data for strategic insights and operational efficiency, he is dedicated to advancing data engineering best practices in the fintech industry. Hemant holds a Bachelor’s degree in Computer Science from VTU, Belgaum.

Mohit Bansal is a Data Engineer at PayU India, where he has been contributing for the past three years. He is a skilled professional with extensive experience in architecting and developing machine learning solutions and designing real-time ETL systems. Mohit excels in solving complex big data challenges and enhancing machine learning capabilities through insightful data analysis. He holds a Master’s degree in Technology with a specialization in Data from IIIT Bangalore.

Akshaya Rawat is a Solutions Architect at AWS. He works from New Delhi, India, with large startup customers in India to architect and build resilient, scalable systems in the cloud. He has 20 years of experience in multiple engineering roles.
