The Intel Olympic Technology Group (OTG), a division within Intel focused on bringing cutting-edge technology to Olympic athletes, collaborated with AWS Machine Learning Professional Services (MLPS) to build a smart coaching software as a service (SaaS) application using computer vision (CV)-based pose estimation models. Pose estimation is a class of machine learning (ML) models that use CV techniques to estimate the locations of body key points such as joints. These key points are used as inputs to calculate biomechanical attributes (BMA) that are relevant to athletes, such as velocity, acceleration, and posture.
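
To make the link from key points to biomechanical attributes concrete, the following is a minimal sketch (not Intel OTG's implementation) that estimates the velocity and acceleration of a single key point with finite differences and computes a joint angle as a simple posture measure. The function names, the 30 FPS sampling rate, and the constant-speed hip trajectory are illustrative assumptions; converting image coordinates into real-world units such as meters would additionally require camera calibration.

```python
import numpy as np


def kinematics(positions: np.ndarray, fps: float) -> tuple[np.ndarray, np.ndarray]:
    """Estimate the velocity and acceleration of one key point over time.

    positions: (T, D) array with the key point's coordinates in each of T
    frames (D = 2 for 2D pose, 3 for 3D pose).
    """
    dt = 1.0 / fps
    # np.gradient uses central differences inside the sequence and
    # one-sided differences at the first and last frames.
    velocity = np.gradient(positions, dt, axis=0)
    acceleration = np.gradient(velocity, dt, axis=0)
    return velocity, acceleration


def joint_angle(a: np.ndarray, b: np.ndarray, c: np.ndarray) -> float:
    """Angle in degrees at joint b between segments b->a and b->c (e.g. hip-knee-ankle)."""
    u, v = a - b, c - b
    cos_theta = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))


# Hypothetical hip trajectory: moving at a constant 9 units/s along x, sampled at 30 FPS.
fps = 30.0
t = np.arange(10) / fps
hip = np.stack([9.0 * t, np.ones_like(t)], axis=1)
velocity, acceleration = kinematics(hip, fps)
speed = np.linalg.norm(velocity, axis=1)  # per-frame speed

# Hypothetical posture check: angle formed by three key points.
knee = joint_angle(np.array([0.0, 1.0]), np.array([0.0, 0.0]), np.array([1.0, 0.0]))
```

Finite differences amplify frame-to-frame jitter in the estimated key points, so in practice the trajectories would typically be smoothed before differentiating.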

The Intel OTG team wants to go to market with this capability for the smart coaching use case. The BMA metrics generated through pose estimation could augment the training and advice that coaches give athletes and help track athlete progression. Today, capturing this data accurately without computer vision requires specialized IoT sensors attached to the athletes’ bodies while they perform athletic movements. These sensors are hard to find outside specialized performance coaching centers, cumbersome for the athlete during activity, and prohibitively expensive: between cameras, motion capture suits and sensors, and software licenses, setting up a motion sensor lab can cost over $100,000. By comparison, this solution provides pose estimation at a fraction of the cost and can analyze video samples captured with a standard mobile phone. The ability to work from straightforward, lightweight capture options and standard video is a significant value add.

In Part 1 of this two-part post, we discuss the design requirements and how Intel OTG built the solution on AWS with the help of MLPS. Part 2 will dive deeper into each stage of the architecture.

A multi-purpose video processing platform

The Intel OTG team is currently focused on track and field movements such as sprinting as their primary use case, while testing with other movements during the 2021 Tokyo Olympics. They wanted to set up a multi-purpose platform that a diverse set of end users can use to ingest and analyze videos.

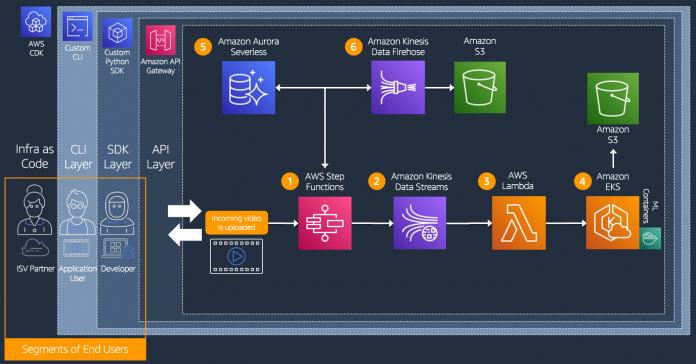

MLPS collaborated with Intel OTG to create scalable processing pipelines, which run ML CV model inference against athlete videos, using AWS infrastructure and services to offer these capabilities to three segments of users. These users have distinct requirements of the platform and interact with the platform in distinct ways:

Developer – Interacts with a Python SDK layer to support application development that incorporates capabilities such as submitting and processing jobs and modifying inference processing compute cluster settings. These capabilities can be embedded into larger applications or user interfaces.

Application user – Submits videos and simply wants to interact with an application interface. This can be a command line interface (CLI) with predefined commands. Alternatively, it can be a frontend such as a coaching dashboard that is connected to an inference processing compute cluster via an API layer.

Independent software vendor (ISV) partner – Interacts with infrastructure as code (IaC) that packages up the solution to be deployed in their own environments, such as an AWS account. They want the ability for customization and control over the underlying infrastructure.

Meeting technical design requirements

After the MLPS team understood the business requirements for delivering different capabilities to different user segments, they collaborated with Intel OTG to create a comprehensive view of the technical design requirements that could achieve this outcome. The following diagram illustrates the solution architecture.

The following are the high-level design tenets that informed the final architecture:

API, CLI, and SDK layers to match types of access required by different user segments.

Configurable IaC, such as via AWS CloudFormation templates, so that the final architecture can be customized and deployed independently for different ISV users into different environments.

Maintainable architecture built from microservices, with infrastructure overhead minimized through serverless and managed AWS services.

Ability to tune and minimize latency for pose estimation processing jobs by maximizing parallelization and resource utilization.

Fine-grained control of the latency and throughput offered to different tiers of end users.

Portable runtime and inference environment within and outside of AWS.

Flexible and evolvable data model.
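
The latency and throughput tenets interact through parallelism: a job split into more batches finishes sooner only if enough of those batches can run at once. The following back-of-the-envelope sketch assumes a hypothetical per-tier cap on concurrent batches and a fixed processing time per batch; the tier names, batch size, and timings are made up for illustration and are not figures from this engagement.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """Hypothetical service tier: how many frame batches may run at once."""
    name: str
    max_concurrent_batches: int


def estimate_wall_time(num_frames: int, batch_size: int,
                       seconds_per_batch: float, tier: Tier) -> float:
    """Rough wall-clock estimate for one job, assuming every batch takes the
    same time and batches run in waves of at most max_concurrent_batches."""
    num_batches = math.ceil(num_frames / batch_size)
    waves = math.ceil(num_batches / tier.max_concurrent_batches)
    return waves * seconds_per_batch


standard = Tier("standard", max_concurrent_batches=4)
premium = Tier("premium", max_concurrent_batches=16)

# A 10-second clip sampled at 30 FPS gives 300 frames, split into batches of 10.
for tier in (standard, premium):
    print(tier.name, estimate_wall_time(300, 10, 2.0, tier))
```

Under these assumptions the job has 30 batches, so the standard tier needs 8 waves (16 seconds) while the premium tier needs 2 waves (4 seconds). Capping concurrency per tier is one way to expose the kind of fine-grained latency and throughput control described above.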

Ultimately, the MLPS team met these requirements with the high-level process flow shown in the preceding figure. An API layer enabled by Amazon API Gateway was used as a bridge between the interaction layers (CLI, SDK) and the processing architecture backend. They used the AWS Cloud Development Kit (AWS CDK) for rapid code development and deployment, leaving a simple framework for future deployments of these resources. The flow includes the following steps:

After an incoming video is uploaded, AWS Step Functions serves as an entry point into the workflow orchestration, and controls submission of processing jobs via a series of AWS Lambda functions that call serverless AWS microservices.

Video frames are batched and submitted to Amazon Kinesis Data Streams, which parallelizes job processing through sharding.

Another layer of parallelization and throughput control is provided by individual consumer Lambda functions, which are invoked to process a single batch of video frames.

Inference with the ML models runs on an Amazon Elastic Kubernetes Service (Amazon EKS) cluster, which provides the flexibility for a portable runtime and inference environment within or outside of AWS. A sequence of Kubernetes containers encapsulates the ML inference models and pipelines. An Amazon Aurora Serverless database provides a flexible data model that keeps track of users and submitted jobs. Because this database tracks user groups separately, each tier of end users can be mapped to its own level of throughput and latency.

Logs from services such as Lambda are captured with Amazon Kinesis Data Firehose and persisted to Amazon Simple Storage Service (Amazon S3) buckets. For example, every frame batch processed by the submitter Lambda function is logged to Amazon S3 with its timestamp, the name of the action, and the Lambda function’s response JSON.
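
The batching and sharding steps can be sketched as follows. This is a hypothetical illustration, not the project's code: it splits a video's frames into fixed-size batches, gives each batch its own partition key so that batches spread across shards, and builds one newline-delimited JSON log line of the kind described for Firehose. The routing function models Kinesis Data Streams, which hashes each partition key with MD5 into a 128-bit integer and routes the record to the shard whose hash key range contains it; the even split of that range across shards is an assumption. The job ID, batch size, shard count, and Lambda response are placeholders.

```python
import hashlib
import json
import time


def make_batches(frame_ids: list[int], batch_size: int) -> list[list[int]]:
    """Split a video's frame ids into fixed-size batches (the last may be shorter)."""
    return [frame_ids[i:i + batch_size] for i in range(0, len(frame_ids), batch_size)]


def shard_for(partition_key: str, num_shards: int) -> int:
    """Model of Kinesis routing: MD5 of the partition key as a 128-bit integer,
    mapped onto num_shards equally sized hash key ranges (assumed even split)."""
    h = int.from_bytes(hashlib.md5(partition_key.encode("utf-8")).digest(), "big")
    return h * num_shards // 2**128


def log_line(action: str, response: dict) -> str:
    """One newline-delimited JSON log record: timestamp, action, Lambda response."""
    return json.dumps({"timestamp": time.time(), "action": action, "response": response}) + "\n"


job_id = "job-123"  # hypothetical job identifier
batches = make_batches(list(range(300)), batch_size=10)

# One record per batch; a distinct partition key per batch spreads the load.
records = [
    {"PartitionKey": f"{job_id}-{i}",
     "Data": json.dumps({"job_id": job_id, "frames": batch}).encode("utf-8")}
    for i, batch in enumerate(batches)
]
shards = [shard_for(r["PartitionKey"], num_shards=4) for r in records]
line = log_line("submit_batch", {"StatusCode": 200})  # hypothetical response
print(len(records), sorted(set(shards)))
```

Each entry in `records` uses the `PartitionKey` and `Data` fields of the Kinesis `PutRecords` API, so with boto3 the list could be passed to `kinesis_client.put_records(StreamName=stream_name, Records=records)`. Reusing one partition key for every batch would instead route the whole job to a single shard and remove this layer of parallelism.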

Adding innovative computer vision capabilities

The benefit of this application is to provide athletes and coaches with important biomechanical attributes about their athletic movements to assist in training and performance improvement. One of the most important considerations for the Intel OTG team is reducing the friction in providing this feedback. This means requiring only common inputs, such as 2D video footage taken with a cell phone camera, without the need for special equipment. This feature enables the input and feedback to take place in the field or on a track, with quick processing turnaround time.

This problem is especially challenging for 3D pose estimation, which typically requires expensive, specialized equipment and sensors attached to the athlete during their movements. Pose estimation models built on computer vision now make it possible to overcome these requirements. The AWS Machine Learning Solutions Lab, a prototyping team specializing in developing the science to solve tough customer problems, previously engaged with the Intel OTG team to lay the ML foundation for 3D pose estimation. They tested a few iterations of ML pipelines that incorporated both 2D and 3D pose estimation based on processing 2D video. The pipeline consisted of three general stages:

Frame extraction – Individual frames of the video were sampled and extracted at a consistent frames per second (FPS) rate and stored.

Person detection – They used the popular object detection model YOLOv5 pretrained on the Pascal VOC dataset and fine-tuned on their own custom dataset to produce bounding boxes for the athlete in each video frame.

Pose estimation – Both 2D and 3D pose estimation models were tested on the bounding box image outputs from the previous step. For 2D pose estimation, they used a custom version of HRNet. For 3D pose estimation, they used the 3DMPPE algorithm, a state-of-the-art, camera distance-aware top-down method for multi-person 3D pose estimation from a single RGB frame (based on the paper by Moon et al.). It works in two stages: a RootNet that estimates the camera-centered coordinates of a person’s root in a cropped image, and a PoseNet that predicts the root-relative 3D coordinates of the person’s joints from the cropped image.
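
Two of these stages involve simple geometry that can be sketched directly. The following hypothetical helpers, written under our own assumptions rather than taken from the Intel OTG pipeline, pick frame indices that resample a video to a consistent target FPS, and combine RootNet- and PoseNet-style outputs into camera-centered 3D coordinates. The back-projection follows our reading of the 3DMPPE approach: each joint's pixel coordinates in the full frame and its depth relative to the root are combined with the root's absolute depth through a pinhole camera model with focal lengths `fx`, `fy` and principal point `cx`, `cy`, which must be known or approximated for the phone camera. All numeric values are illustrative.

```python
import math

import numpy as np


def sample_frame_indices(num_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Pick frame indices that resample a video to a consistent target FPS."""
    if target_fps >= source_fps:
        return list(range(num_frames))  # cannot upsample; keep every frame
    step = source_fps / target_fps  # source frames per kept frame
    num_out = math.floor(num_frames * target_fps / source_fps)
    return [min(math.floor(i * step), num_frames - 1) for i in range(num_out)]


def to_camera_coords(joints_uv: np.ndarray, rel_depth: np.ndarray, root_depth: float,
                     fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Back-project joints into camera-centered 3D coordinates (pinhole model).

    joints_uv:  (J, 2) pixel coordinates of each joint, already mapped from the
                person crop back to the full frame.
    rel_depth:  (J,) depth of each joint relative to the root joint.
    root_depth: absolute depth of the root joint, as a RootNet-style model estimates.
    """
    z = root_depth + rel_depth  # absolute depth of every joint
    x = (joints_uv[:, 0] - cx) * z / fx
    y = (joints_uv[:, 1] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


# A 2-second clip recorded at 60 FPS, resampled to 30 FPS.
kept = sample_frame_indices(num_frames=120, source_fps=60.0, target_fps=30.0)

# Two joints: one at the principal point, one 1000 px to its right (depths in mm).
joints_3d = to_camera_coords(
    joints_uv=np.array([[640.0, 360.0], [1640.0, 360.0]]),
    rel_depth=np.array([0.0, 100.0]),
    root_depth=5000.0,
    fx=1000.0, fy=1000.0, cx=640.0, cy=360.0,
)
```

Separating the absolute root depth from the root-relative joint offsets is what lets a top-down method place several people at different distances from the same camera.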

These pipelines were tested on sample videos, collected by Intel OTG, of sprinting movements by Ashton Eaton, a decathlete and two-time Olympic gold medalist from the United States. The following examples show different video angles along with the model outputs used to evaluate pose estimation.

Final outcomes

As a result of these design choices, Nelson Leung, Lead AI/ML Architect of Intel OTG, remarked, “AWS Professional Services has been an amazing partner in designing and developing a scalable and secure architecture for Intel’s 3DAT technology. The team listened, understood the customer needs, and successfully delivered to our key objectives. We developed a productized platform and further optimized the E2E latency, achieving an additional 25% improvement. This platform will enable the sports performance technology group to scale and engage in new business initiatives.”

At the end of the engagement, Jonathan Lee, Director of Sports Performance Technology of Intel OTG, remarked, “The AWS Professional Services team was instrumental in helping us turn our 3D athlete tracking pipeline into a production-ready SDK. We can now confidently engage customers knowing that the SDK is ready to scale to any number of end users.”

The following is the detailed solution architecture. In Part 2 of this post, we will explore in more detail each piece of the architecture and the AWS services used.

Conclusion

The application is now live and ready to be tested with athletes and coaches alike. Intel OTG is excited to make innovative pose estimation technology using computer vision accessible for a variety of users, from developers to athletes to software vendor partners.

From the ideation and discovery stage with ML Solutions Lab to the hardening and deployment stage with AWS ML ProServe, the AWS team is passionate about helping customers like Intel OTG accelerate their ML journey. We watched the 2021 Tokyo Olympics closely this summer, envisioning all the progress that ML can unlock in sports.

Get started today! Explore your use case with the services mentioned in this post and many others on the AWS Management Console.

About the Authors

Han Man is a Senior Data Scientist with AWS Professional Services based in San Diego, CA. He has a PhD in engineering from Northwestern University and has several years of experience as a management consultant advising clients in manufacturing, financial services, and energy. Today he is passionately working with customers from a variety of industries to develop and implement machine learning & AI solutions on AWS. He enjoys following the NBA and playing basketball in his spare time.

Iman Kamyabi is an ML Engineer with AWS Professional Services. He has worked with a wide range of AWS customers to champion best practices in setting up repeatable and reliable ML pipelines.

Jonathan Lee is the Director of Sports Performance Technology, Olympic Technology Group at Intel. He studied the application of machine learning to health as an undergrad at UCLA and during his graduate work at University of Oxford. His career has focused on algorithm and sensor development for health and human performance. He now leads the 3D Athlete Tracking project at Intel.

Nelson Leung is the Platform Architect in the Sports Performance CoE at Intel, where he defines end-to-end architecture for cutting-edge products that enhance athlete performance. He also leads the implementation, deployment and productization of these machine learning solutions at scale to different Intel partners.

Troy Squillaci is a DevSecOps engineer at Intel, where he delivers professional software solutions to customers through DevOps best practices. He enjoys integrating AI solutions into scalable platforms in a variety of domains.

Paul Min is an Associate Solutions Architect Intern at Amazon Web Services (AWS), where he helps customers across different industry verticals advance their mission and accelerate their cloud adoption. Previously at Intel, he worked as a Software Engineering Intern to help develop the 3D Athlete Tracking Cloud SDK. Outside of work, Paul enjoys playing golf and can be heard singing.

AWS Machine Learning Blog