In this post, we present a case study of an e-commerce site that had relational database management system (RDBMS) performance problems, and show how Amazon DynamoDB contributed to the solution. ZOZO holds large-scale sale events that require engineers to monitor the service and respond in real time to keep it running without problems. DynamoDB helped ZOZO reduce their engineering overhead by 85.8 percent. We also share some DynamoDB best practices.

This post focuses on the experience of ZOZOTOWN, one of Japan’s leading e-commerce sites, with DynamoDB. Shiori Hanzawa is one of the engineers responsible for developing and operating ZOZOTOWN’s e-commerce portal. In this post, she and I explain why ZOZOTOWN decided to migrate to DynamoDB.

Overview of ZOZOTOWN

ZOZOTOWN is one of Japan’s largest fashion shopping sites, with more than 1,500 shops and 8,400 brands. It lists more than 830,000 items and averages 2,900 or more new arrivals every day (as of the end of December 2021). ZOZO, Inc., has developed various ZOZOTOWN services, including the specialty malls ZOZOCOSME and ZOZOSHOES; ZOZOUSED, which handles branded used clothing; ZOZOVILLA, which handles luxury and designer brands; and the fashion coordination app WEAR. In addition, ZOZO has developed and uses measurement technologies such as ZOZOSUIT 2, ZOZOMAT, and ZOZOGLASS.

Why ZOZOTOWN adopted DynamoDB

ZOZOTOWN adopted a microservices architecture, including for its cart submission function, to make scaling simpler as the business grows. The main job of this function is to allocate product inventory and register the items in the cart item database table. Some events, such as popular product launches, cause a surge in requests and concentrate access on specific inventory data. As a result, the original database design became overloaded, causing frequent errors. The operational cost of this problem was significant, and on-site engineers were exhausted from troubleshooting during these events.

To solve their database throughput challenges, ZOZO replaced their existing database with DynamoDB, which delivers consistent performance at any scale with no scheduled downtime for maintenance windows or version updates.

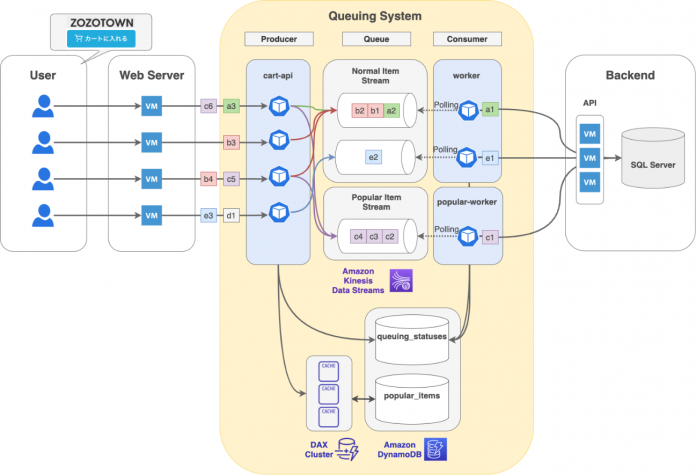

ZOZO began by replacing their inventory and cart tables, which ran on premises on Microsoft SQL Server, with DynamoDB. Figure 1 illustrates the updated architecture, which the following paragraphs describe.

We plan to carry out the replacement in several phases. As a first step, we added a queue to the cart submission process and switched to a configuration in which we can control throughput, to reduce the load on the on-premises database. Before the replacement, the web server called a stored procedure in the on-premises database, and the stored procedure handled inventory allocation and cart registration. In the new design, requests first pass through a cart microservice that queues them in Amazon Kinesis Data Streams before the stored procedure is called. The microservice also uses DynamoDB to identify popular products and to track the queuing state of each request.

The process flow is:

1. When a user adds an item to the cart, the web server calls the request registration API of the cart submission microservice.
2. The request registration API:
   - Queries the DynamoDB popular products table.
   - Registers the initial status in the DynamoDB status management table.
   - Sends the status management table partition key and the request information to Kinesis Data Streams. Because popular and standard products use separate streams, each request is sent to the stream for its product type.
   - Returns the partition key of the status management table.
3. The worker:
   - Retrieves records containing request information from Kinesis Data Streams.
   - Changes the status of the item in the status management table to requested.
   - Calls the backend stored procedure execution API.
4. The stored procedure execution API reserves inventory, registers the items in the cart, and returns the results.
5. The worker stores these results in the DynamoDB status management table.
6. The web server calls the status acquisition API and returns the request result to the user.
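
The registration half of this flow can be sketched in a few lines. The following is a minimal in-memory model under stated assumptions: the names `CartRequestRegistry`, the stream labels `popular` and `standard`, and the use of a UUID as the status table partition key are all hypothetical, and in the real system each step would be a DynamoDB or Kinesis Data Streams call. It shows why the status is written before the record is queued: any worker that reads the record can find its status item.

```python
import uuid
from typing import Dict, List, Set

QUEUING = "is_queuing"


class CartRequestRegistry:
    """In-memory sketch of the request registration API (all names hypothetical).

    popular_products stands in for the DynamoDB popular products table,
    status_table for the DynamoDB status management table, and
    streams for the two Kinesis data streams.
    """

    def __init__(self, popular_products: Set[str]):
        self.popular_products = popular_products
        self.status_table: Dict[str, dict] = {}
        self.streams: Dict[str, List[dict]] = {"popular": [], "standard": []}

    def register(self, user_id: str, product_id: str, quantity: int) -> str:
        # 1. Check whether the product is currently flagged as popular
        stream = "popular" if product_id in self.popular_products else "standard"
        # 2. Register the initial status under a fresh partition key
        request_id = str(uuid.uuid4())
        self.status_table[request_id] = {"status": QUEUING}
        # 3. Queue the partition key plus request details on the matching stream
        self.streams[stream].append(
            {"request_id": request_id, "user_id": user_id,
             "product_id": product_id, "quantity": quantity}
        )
        # 4. Return the key so the web server can poll for the result later
        return request_id

    def get_status(self, request_id: str) -> dict:
        # Stand-in for the status acquisition API
        return self.status_table[request_id]
```

For example, `CartRequestRegistry({"sneaker-123"}).register("u1", "sneaker-123", 1)` places the request on the `popular` stream and leaves its status as `is_queuing` until a worker picks it up.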

For more information about this migration, refer to this ZOZOTOWN blog post (Japanese).

DynamoDB best practices

One decision we made early on was whether to use DynamoDB on-demand or provisioned capacity mode. The capacity mode controls how DynamoDB read and write throughput is charged and how capacity is managed.

On-demand mode is a flexible billing option capable of scaling to virtually unlimited throughput without capacity planning. DynamoDB on-demand offers pay-per-request pricing for read and write requests so you pay only for what you use.

Provisioned capacity lets you specify the read and write throughput your application requires. You can also use auto scaling with provisioned capacity to automatically adjust your table’s throughput capacity based on traffic changes. This helps you keep your DynamoDB use at or below a defined request rate so that costs are predictable.
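
To make the auto scaling option concrete, here is a sketch that builds the request parameters for the Application Auto Scaling `register_scalable_target` and `put_scaling_policy` calls in boto3, using target tracking on write capacity utilization. The table name, capacity bounds, and 70 percent target are hypothetical values, and the table is assumed to already use provisioned capacity.

```python
from typing import Any, Dict, Tuple


def build_write_autoscaling_params(
    table_name: str, min_wcu: int, max_wcu: int, target_utilization: float = 70.0
) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (scalable target, scaling policy) kwargs for application-autoscaling.

    All values are illustrative; the table must be in provisioned mode.
    """
    if min_wcu > max_wcu:
        raise ValueError("min_wcu must not exceed max_wcu")
    resource_id = f"table/{table_name}"
    dimension = "dynamodb:table:WriteCapacityUnits"
    target = {
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_wcu,
        "MaxCapacity": max_wcu,
    }
    policy = {
        "PolicyName": f"{table_name}-write-target-tracking",
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Keep consumed/provisioned write capacity near this percentage
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBWriteCapacityUtilization"
            },
        },
    }
    return target, policy
```

With boto3 installed and credentials configured, you would pass these dictionaries as keyword arguments: `client = boto3.client("application-autoscaling")`, then `client.register_scalable_target(**target)` followed by `client.put_scaling_policy(**policy)`. Read capacity needs its own pair using the `dynamodb:table:ReadCapacityUnits` dimension and the `DynamoDBReadCapacityUtilization` metric.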

We found that on-demand is a good option if any of the following are true:

- You create new tables with unknown workloads.
- You have unpredictable application traffic.
- You prefer the ease of paying for only what you use.

Provisioned capacity is a good option if any of the following are true:

- You have predictable application traffic.
- You run applications whose traffic is consistent or ramps gradually.
- You can forecast capacity requirements to control costs.

We find it’s a good practice to start with on-demand capacity for a period of time, and then try provisioned capacity for the same period of time to determine which option is best for your workload.
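
One way to compare the two trial periods is a back-of-the-envelope cost model. The following sketch uses placeholder prices (not current AWS pricing) and deliberately ignores reads, items larger than 1 KB, auto scaling, reserved capacity, and the free tier. It only illustrates why spiky traffic tends to favor on-demand while steady traffic tends to favor provisioned capacity.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class HourSample:
    write_units: int  # write request units consumed in the hour (one per write of up to 1 KB)
    peak_wps: float   # busiest writes-per-second observed in the hour


def on_demand_cost(samples: List[HourSample], price_per_write_unit: float) -> float:
    # On-demand bills every request unit actually consumed
    return sum(s.write_units for s in samples) * price_per_write_unit


def provisioned_cost(
    samples: List[HourSample], price_per_wcu_hour: float, headroom: float = 1.0
) -> float:
    # Fixed provisioning (no auto scaling) must cover the busiest second of the
    # period, and that capacity is paid for every hour whether or not it is used
    wcu = math.ceil(max(s.peak_wps for s in samples) * headroom)
    return wcu * len(samples) * price_per_wcu_hour


# Hypothetical placeholder prices, NOT current AWS pricing
PRICE_PER_WRITE_UNIT = 1e-6
PRICE_PER_WCU_HOUR = 1e-3

# A steady day: 10 writes/second around the clock
steady_day = [HourSample(write_units=10 * 3600, peak_wps=10) for _ in range(24)]
# A spiky day: 1 write/second, except one launch hour at 500 writes/second
spiky_day = [HourSample(3600, 1) for _ in range(23)] + [HourSample(500 * 3600, 500)]
```

For the steady day, the provisioned estimate is lower because the capacity is almost fully used. For the spiky day, provisioning for a single peak hour makes provisioned capacity far more expensive than paying per request.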

Because the rate of add-to-cart requests varies greatly depending on the event and the time of day, it was difficult for us to forecast capacity. As a result, we chose on-demand capacity for the cart submission function. On-demand capacity’s flexible billing and automatic scaling remove the need for us to plan for capacity. After we migrated to DynamoDB, several popular products went on sale, and with on-demand capacity we were able to support these high-load events without any engineering overhead.
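
On-demand mode is selected per table. As an illustration, the sketch below builds the parameters for a boto3 DynamoDB client `create_table` call with `BillingMode="PAY_PER_REQUEST"`; the table name and the `request_id` key attribute are hypothetical, not ZOZO’s actual schema.

```python
from typing import Any, Dict


def build_on_demand_table_params(table_name: str, key_attr: str = "request_id") -> Dict[str, Any]:
    """kwargs for a low-level DynamoDB create_table call (names are hypothetical)."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": key_attr, "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": key_attr, "KeyType": "HASH"}],
        # On-demand: billed per request, so no ProvisionedThroughput block is given
        "BillingMode": "PAY_PER_REQUEST",
    }
```

`boto3.client("dynamodb").create_table(**build_on_demand_table_params("cart-status"))` would create such a table; an existing provisioned table can be switched with `update_table(TableName="cart-status", BillingMode="PAY_PER_REQUEST")`.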

We also benefited from using a cache to reduce the read load from popular items, which lowered costs and improved performance. Amazon DynamoDB Accelerator (DAX) is a fully managed, highly available, in-memory cache for DynamoDB. We implemented DAX for read-intensive workloads, especially those with high request rates for specific items. When our application retrieves these items from DAX, it consumes fewer of our read capacity units (RCUs), which lowers our costs. DAX uses time-to-live (TTL) eviction policies, so when a prescribed TTL expires, the data is automatically purged from the cache. Because DAX is a write-through cache, our clients update the DynamoDB table and the cache in a single operation. The DAX client supports the same operations as DynamoDB, so it can be adopted by swapping the DynamoDB client endpoint for the DAX endpoint. This eliminates the need for complicated cache management.
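
The write-through and TTL behavior described above can be modeled in a few lines. This is a conceptual sketch only, assuming a plain dictionary in place of the table; it is not how DAX is implemented, and in practice you would use the DAX client rather than writing your own cache. The `table_reads` counter stands in for consumed read capacity, and the class name is hypothetical.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class WriteThroughTTLCache:
    """Conceptual model of an item cache in front of a table (not DAX itself)."""

    def __init__(self, table: Dict[str, dict], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.table = table
        self.ttl = ttl_seconds
        self.clock = clock
        self._cache: Dict[str, Tuple[float, dict]] = {}  # key -> (expires_at, item)
        self.table_reads = 0  # stands in for read capacity consumed on the table

    def get(self, key: str) -> Optional[dict]:
        now = self.clock()
        entry = self._cache.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no table read
        # Miss or expired entry: read from the table and repopulate the cache
        self.table_reads += 1
        item = self.table.get(key)
        if item is not None:
            self._cache[key] = (now + self.ttl, item)
        else:
            self._cache.pop(key, None)
        return item

    def put(self, key: str, item: dict) -> None:
        # Write-through: update the table, then the cache, in one call
        self.table[key] = item
        self._cache[key] = (self.clock() + self.ttl, item)
```

With a 300-second TTL, a hot item read 100 times in a row costs one table read; after the TTL expires the next read goes to the table again, and a `put` refreshes the cached copy without an extra read.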

Techniques used in ZOZOTOWN’s cart replacement

In this section, we share some of the techniques ZOZOTOWN currently uses.

Controlling queues with conditional writes

A worker might receive the same record from Kinesis Data Streams multiple times. Without any safeguard, a single request might therefore be processed more than once, adding more items to the cart than the user requested, as shown in Figure 2.

To solve this problem, we use conditional writes. The process flow is:

1. Check the item in the status management table.
2. Branch depending on the status:
   - If the status is is_queuing (queuing state), proceed to the next step.
   - If the status is is_requested (requested), skip the remaining steps.
3. Conditionally update the status in the status management table to is_requested.
4. Call the stored procedure execution API.
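
A minimal sketch of this worker logic follows, assuming an in-memory stand-in for the status management table (`StatusTable`, `ConditionalCheckFailed`, and `process_record` are hypothetical names). In DynamoDB, the condition check and the write of a conditional `UpdateItem` happen atomically on the server; here the single-threaded dictionary update plays that role.

```python
from typing import Callable, Dict

QUEUING = "is_queuing"
REQUESTED = "is_requested"


class ConditionalCheckFailed(Exception):
    """Stand-in for DynamoDB's ConditionalCheckFailedException."""


class StatusTable:
    """In-memory stand-in for the status management table (hypothetical)."""

    def __init__(self) -> None:
        self.items: Dict[str, dict] = {}

    def get_item(self, key: str) -> dict:
        # Return a copy, as a real read would
        return dict(self.items.get(key, {}))

    def update_status_if(self, key: str, expected: str, new: str) -> None:
        # Check and write together, like a conditional UpdateItem
        item = self.items.get(key)
        if item is None or item.get("status") != expected:
            raise ConditionalCheckFailed(key)
        item["status"] = new


def process_record(table: StatusTable, request_id: str,
                   call_stored_procedure: Callable[[str], None]) -> bool:
    """Return True only if this call triggered the stored procedure."""
    # Steps 1-2: read the status and skip requests that are already requested
    if table.get_item(request_id).get("status") != QUEUING:
        return False
    # Step 3: the conditional update guards against another worker racing us
    try:
        table.update_status_if(request_id, QUEUING, REQUESTED)
    except ConditionalCheckFailed:
        return False
    # Step 4: only the worker whose update succeeded calls the backend
    call_stored_procedure(request_id)
    return True
```

Feeding the same record twice (`["a1", "a1"]`) calls the stored procedure once: the second delivery sees `is_requested` and is skipped.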

Figure 3 shows an example in which a worker retrieves a request, a1, that has already been processed, along with two new requests, a2 and a3.

The processing flow to make sure that requests match the user’s operations is:

The a1 record is processed. The worker issues a GetItem using a1 as the partition key, and the returned status is is_requested, so the worker skips subsequent processing.

The process moves to a2. Because the result of GetItem is not is_requested, the worker performs a conditional UpdateItem. The update succeeds if the condition is satisfied; otherwise, DynamoDB returns a ConditionalCheckFailedException.

Note that an overall timeout value is set for cart submission requests. If a timeout occurs for any reason, a timed_out_at value is added to the status management table and an error is returned to the user. Because it’s not possible to continue processing a request that has already stopped with an error, we use the UpdateItem operation and include a condition expression where timed_out_at must not exist.

We also include the condition that the status is is_queuing, because with the Kinesis Client Library, multiple workers might process records from the same shard.

The update for a2 fails because the item already has a timed_out_at attribute. Therefore, subsequent processing is not performed, and the worker moves on to a3.

For a3, the worker performs a conditional UpdateItem, just as for a2. This time the update succeeds because the item has no timed_out_at. Only when the update succeeds does the worker go on to call the stored procedure execution API.
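
Both guards can be expressed in a single `ConditionExpression`. The sketch below builds parameters for a low-level boto3 DynamoDB client `update_item` call and adds a small local function that mirrors the condition so the Figure 3 walkthrough can be replayed. The table name and the attribute names `request_id`, `status`, and `timed_out_at` are assumptions based on the text; because `status` is a DynamoDB reserved word, it is aliased through `ExpressionAttributeNames`.

```python
from typing import Any, Dict

QUEUING = "is_queuing"
REQUESTED = "is_requested"


def build_mark_requested_params(table_name: str, request_id: str) -> Dict[str, Any]:
    """kwargs for a low-level boto3 update_item call (attribute names hypothetical)."""
    return {
        "TableName": table_name,
        "Key": {"request_id": {"S": request_id}},
        "UpdateExpression": "SET #st = :requested",
        # Guard 1: still queuing (another worker may share the shard)
        # Guard 2: the overall cart timeout has not already fired
        "ConditionExpression": "#st = :queuing AND attribute_not_exists(timed_out_at)",
        # STATUS is a DynamoDB reserved word, so it must be aliased
        "ExpressionAttributeNames": {"#st": "status"},
        "ExpressionAttributeValues": {
            ":queuing": {"S": QUEUING},
            ":requested": {"S": REQUESTED},
        },
    }


def condition_holds(item: Dict[str, Any]) -> bool:
    """Local mirror of the ConditionExpression, for reasoning about Figure 3."""
    return item.get("status") == QUEUING and "timed_out_at" not in item
```

When the condition fails, the boto3 client raises `client.exceptions.ConditionalCheckFailedException`, which the worker can catch and treat as a signal to skip the record. Applied to Figure 3, `condition_holds` is false for a1 (already is_requested) and for a2 (has timed_out_at), and true only for a3.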

Detecting hot keys and bot access

When data access in DynamoDB is imbalanced, it can create a hot partition that receives a much higher volume of read and write traffic than other partitions. In extreme cases, requests can be throttled. ZOZO built enhanced monitoring for hot key detection using Amazon CloudWatch Contributor Insights for DynamoDB. For an additional charge, Contributor Insights provides graphs of the most accessed and most throttled items in a DynamoDB table or global secondary index (GSI). During load testing, we focused on traffic to the most accessed items, which helped us verify that our table design distributed the load efficiently. We also built a GSI with the access source as its partition key to help us identify whether a request came from a general user or a bot. Figure 4 shows the most accessed partition keys per minute.
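
The monitoring setup described above involves two configuration steps. As a sketch, assuming a hypothetical table `cart-status` and access-source attribute `access_source`, the following builds parameters for a boto3 `update_table` call that adds the GSI and for `update_contributor_insights` calls that enable Contributor Insights on the table and on the index. The index name and projection type are illustrative choices, not ZOZO’s configuration.

```python
from typing import Any, Dict, Optional


def build_access_source_gsi_params(
    table_name: str, index_name: str = "access-source-index", attr: str = "access_source"
) -> Dict[str, Any]:
    """kwargs for update_table that adds a GSI keyed on the access source."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": attr, "AttributeType": "S"}],
        "GlobalSecondaryIndexUpdates": [{
            "Create": {
                "IndexName": index_name,
                "KeySchema": [{"AttributeName": attr, "KeyType": "HASH"}],
                # Keys are enough to see which sources are most active
                "Projection": {"ProjectionType": "KEYS_ONLY"},
                # No ProvisionedThroughput: assumes the table uses on-demand mode
            }
        }],
    }


def build_contributor_insights_params(
    table_name: str, index_name: Optional[str] = None
) -> Dict[str, Any]:
    """kwargs for update_contributor_insights on a table or one of its GSIs."""
    params: Dict[str, Any] = {"TableName": table_name, "ContributorInsightsAction": "ENABLE"}
    if index_name is not None:
        # Assumes the index has finished creating before this call is made
        params["IndexName"] = index_name
    return params
```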

The User dot at the bottom of the graph in Figure 4 indicates that a few items were added to the cart within one minute, which is normal behavior. However, the hundreds of consecutive requests per minute labeled Bot in the graph probably come from one or more bots. ZOZO can use this information to identify bots and respond appropriately.
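
Contributor Insights produces these per-minute rankings for you. To show the underlying idea, here is an offline sketch that counts requests per access source per minute from a hypothetical list of `(timestamp, source)` events and flags sources at or above a threshold. The 100-requests-per-minute threshold is an arbitrary assumption, not a value ZOZO used.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple

Event = Tuple[float, str]  # (epoch seconds, access source key)


def counts_per_minute(events: List[Event]) -> Dict[int, Counter]:
    # Bucket each request into its minute and count per access source
    per_minute: Dict[int, Counter] = defaultdict(Counter)
    for ts, source in events:
        per_minute[int(ts // 60)][source] += 1
    return dict(per_minute)


def top_sources(events: List[Event], n: int = 3) -> Dict[int, List[Tuple[str, int]]]:
    # Most active sources per minute, similar in spirit to a Contributor Insights graph
    return {minute: c.most_common(n) for minute, c in counts_per_minute(events).items()}


def suspected_bots(events: List[Event], threshold_per_minute: int = 100) -> Set[str]:
    # A source is flagged if any single minute reaches the (hypothetical) threshold
    flagged: Set[str] = set()
    for counts in counts_per_minute(events).values():
        flagged.update(src for src, k in counts.items() if k >= threshold_per_minute)
    return flagged
```

A user adding three items in a minute stays well below the threshold, while a source sending 300 requests in the same minute is flagged, matching the User and Bot patterns in Figure 4.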

Conclusion

The DynamoDB replacement project for the high-load cart submission function was a huge success. Errors during frequent events and latency during access spikes have both been reduced, improving performance.

For more information about this project, see ZOZOTOWN Cart payment function replacement phase 1 (Japanese).

About the authors

Shiori Hanzawa is a Manager of Cart Payment Infrastructure Block, Cart Payment Department, ZOZO, Inc. She joined Start Today (now ZOZO) in January 2013. Currently, she is driving a project to replace the cart payment function of the fashion shopping site ZOZOTOWN. She loves hardcore punk and enjoys having a few drinks.

Takashi Narita is a Senior NoSQL Specialist Solutions Architect. He provides architecture reviews and technical assistance to many AWS customers using DynamoDB.

AWS Database Blog