Do you know how to rightsize a workload in Kubernetes? If you’re not 100% sure, we have some great news for you! Today, we are launching a fully embedded, out-of-the-box experience to help you with that complex task. When you run your applications on Google Kubernetes Engine (GKE), you now get an end-to-end workflow that helps you discover optimization opportunities, understand workload-specific resource request suggestions and, most importantly, act on those recommendations, all in a matter of seconds.

This workload optimization workflow helps rightsize applications by looking at Kubernetes resource requests and limits, which are often one of the largest sources of resource waste. Correctly configuring your resource requests can be the difference between an idle cluster and a cluster that has been downscaled in response to actual resource usage.

If you’re new to GKE, you can save time and money by following the rightsizer’s recommended resource request settings. If you’re already running workloads on GKE, you can also use it to quickly assess optimization opportunities for your existing deployments.

Then, to optimize your workloads even more, combine these new workload rightsizing capabilities with GKE Autopilot, which is priced based on Pod resource requests. With GKE Autopilot, any optimizations you make to your Pod resource requests (assuming they are over the minimum) are directly reflected on your bill.

We’re also introducing a new metric for Cloud Monitoring that provides resource request suggestions for each individual eligible workload, based on its actual usage over time.

Seamless workload rightsizing with GKE

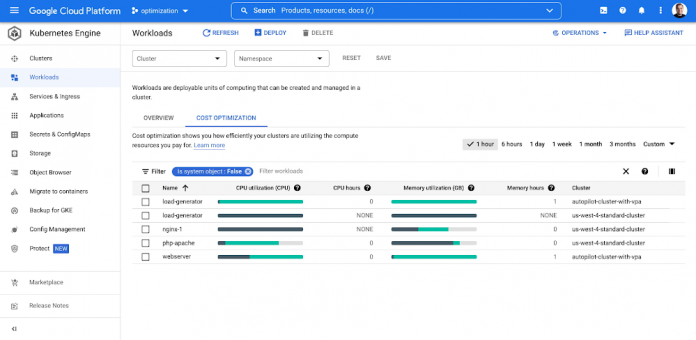

When you run a workload on GKE, you can use cost optimization insights to discover your cluster and workload rightsizing opportunities right in the console.

Here, you can see your workload’s actual usage and get signals for potentially undersized workloads that are at risk of either reliability or performance impact because they have low resource requests.

However, taking the next step and correctly rightsizing those applications has always been a challenge — especially at scale. Not anymore with GKE’s new workload rightsizing capability.

Start by picking the workload you want to optimize. Usually, the best candidates are the ones where there’s a considerable divergence between resource requests and limits on one side and actual usage on the other. In the cost optimization tab of the GKE workloads console, just look for the workloads with a lot of bright green.
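If you prefer to reason about candidates outside the console, the selection idea above can be sketched in a few lines of Python. This is a minimal illustration, not how the GKE console computes its view: the `WorkloadUsage` type, the `unused_fraction` helper, and the sample numbers are all hypothetical, and only CPU is considered.

```python
from dataclasses import dataclass


@dataclass
class WorkloadUsage:
    """Hypothetical record: totals for one workload over the same time window."""
    name: str
    cpu_requested_cores: float  # sum of CPU requests across replicas
    cpu_used_cores: float       # observed CPU usage


def unused_fraction(w: WorkloadUsage) -> float:
    """Share of the requested CPU that was not used (0.0 when nothing is requested)."""
    if w.cpu_requested_cores <= 0:
        return 0.0
    gap = w.cpu_requested_cores - w.cpu_used_cores
    return max(0.0, gap / w.cpu_requested_cores)


def rank_candidates(workloads):
    """Order workloads so the largest request/usage divergence comes first."""
    return sorted(workloads, key=unused_fraction, reverse=True)


# Made-up sample data, for illustration only.
workloads = [
    WorkloadUsage("frontend", cpu_requested_cores=4.0, cpu_used_cores=3.5),
    WorkloadUsage("batch-worker", cpu_requested_cores=8.0, cpu_used_cores=1.0),
    WorkloadUsage("api", cpu_requested_cores=2.0, cpu_used_cores=1.0),
]
ranked = [w.name for w in rank_candidates(workloads)]
print(ranked)
```

Workloads at the top of the list are the ones where requests sit furthest above actual usage, which is the same signal the bright green in the console points at.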

Once you pick a workload, go to workload details and choose “Actions” => “Scale” => “Edit resource requests” to get more step-by-step optimization guidance.

The guidance you receive relies heavily on new “Recommended per replica request cores” and “Recommended per replica request bytes” metrics (the same metrics that are available in Cloud Monitoring), which are both based on actual workload usage. You can access this view for every eligible GKE deployment, with no configuration on your part.

Once you confirm the values that are best for your deployment, you can edit the resource requests and limits directly in the GKE console, and they will be directly applied to your workloads.

Note: Suggestions are based on the observed usage patterns of your workloads and might not always be the best fit for your application. Every workload has its own corner cases and specific needs, so we advise thoroughly reviewing the suggested values before applying them to your specific workload.

Note: Due to limited visibility into the way Java workloads use memory, we do not support memory recommendations for JVM-based workloads.

Optionally, if you’d rather set the resource requests and limits from outside the GKE console, you can generate a YAML file with the recommended settings that you can use to configure your deployments.
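To give a feel for what such a file contains, here is a minimal sketch that turns a per-replica recommendation into a Deployment patch. It is not the file the GKE console generates: the `build_requests_patch` helper, the container name `web`, and the sample values are hypothetical. Only the `resources.requests` field layout comes from the standard Kubernetes Deployment spec. The patch is printed as JSON, which Kubernetes accepts alongside YAML, and it sets requests only.

```python
import json

MIB = 1024 * 1024


def build_requests_patch(container_name: str, cpu_cores: float, memory_bytes: int) -> dict:
    """Build a Deployment patch that sets CPU and memory requests for one container."""
    cpu_millicores = int(round(cpu_cores * 1000))
    # Round memory up to a whole MiB so the request never drops below the suggestion.
    memory_mib = -(-memory_bytes // MIB)
    return {
        "spec": {
            "template": {
                "spec": {
                    "containers": [
                        {
                            "name": container_name,
                            "resources": {
                                "requests": {
                                    "cpu": f"{cpu_millicores}m",
                                    "memory": f"{memory_mib}Mi",
                                }
                            },
                        }
                    ]
                }
            }
        }
    }


# Hypothetical recommendation: 0.25 cores and 300,000,000 bytes per replica.
patch = build_requests_patch("web", cpu_cores=0.25, memory_bytes=300_000_000)
print(json.dumps(patch, indent=2))
```

Saved to a file, a patch of this shape could be applied with `kubectl patch deployment <name> --patch-file <file>`, assuming the container name matches the one in your Deployment.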

Note: Workloads with horizontal pod autoscaling enabled will not receive suggested values on the same metric for which horizontal pod autoscaling is configured. For instance, if your workload has HPA configured for CPU, only memory suggestions will be displayed.
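The rule in the note above can be expressed as a small filter. This is only a sketch of the behavior as described here, with a hypothetical `suggestions_to_display` helper and made-up values. It is not the console's implementation.

```python
def suggestions_to_display(suggestions: dict, hpa_metrics: set) -> dict:
    """Drop suggestions for any resource that horizontal pod autoscaling already scales on."""
    return {
        resource: value
        for resource, value in suggestions.items()
        if resource not in hpa_metrics
    }


# Hypothetical suggestions for a workload whose HPA is configured for CPU.
suggestions = {"cpu": "250m", "memory": "287Mi"}
shown = suggestions_to_display(suggestions, hpa_metrics={"cpu"})
print(shown)  # {'memory': '287Mi'}
```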

For more information about specific workload eligibility and compatibility with other scaling mechanisms such as horizontal pod autoscaling, check out the feature documentation.

Next-level efficiency with GKE Autopilot and workload rightsizing

We’ve talked extensively about GKE Autopilot as one of GKE’s key cost optimization mechanisms. GKE Autopilot provides a fully managed infrastructure offering that eliminates the need for node pool and VM-level optimization, thus removing the bin-packing optimization challenges related to operating VMs, as well as unnecessary resource waste and day-two operations efforts.

In GKE Autopilot, you pay for the resources you request. Combined with workload rightsizing, which primarily targets resource request optimization, you can now easily address two out of three main issues that lead to optimization gaps: app rightsizing and bin-packing. By running eligible workloads on GKE Autopilot and improving their resource requests, you should start to see a direct, positive impact on your bill right away!
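To make the link between requests and the bill concrete, here is a rough sketch of the arithmetic. It assumes a simplified model in which the billable amount is the request, floored at a minimum (as noted earlier, reductions only show up on the bill while requests stay over the minimum). The `MINIMUM_CPU` value and the request figures are hypothetical placeholders, not actual Autopilot minimums or prices.

```python
MINIMUM_CPU = 0.25  # hypothetical per-Pod minimum in vCPU; check the Autopilot docs for real values


def billable_amount(requested: float, minimum: float = MINIMUM_CPU) -> float:
    """Requests below the minimum are still billed at the minimum in this simplified model."""
    return max(requested, minimum)


def relative_saving(before: float, after: float, minimum: float = MINIMUM_CPU) -> float:
    """Fraction of the request-based cost removed by lowering a request."""
    billed_before = billable_amount(before, minimum)
    billed_after = billable_amount(after, minimum)
    return (billed_before - billed_after) / billed_before


# Lowering a 2.0 vCPU request to 0.5 vCPU removes 75% of the CPU portion of the cost.
print(relative_saving(before=2.0, after=0.5))  # 0.75

# Going below the minimum yields no extra saving: 0.1 vCPU is billed as 0.25 vCPU.
print(relative_saving(before=2.0, after=0.1))  # 0.875
```

Because the saving is expressed as a fraction, the sketch does not depend on any particular unit price.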

Rightsizing metrics and more resources for optimizing GKE

To support the new optimization workflow, we also launched two new metrics called “Recommended per replica request cores” and “Recommended per replica request bytes”. Both are available in Cloud Monitoring under “Kubernetes Scale” => “Autoscaler” => “Recommended per replica request”. You can also use these metrics to build your own custom views and rankings, and to export the latest optimization opportunities.
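If you want to pull these metrics programmatically, a first step is a Cloud Monitoring filter expression. The sketch below only builds the filter string and makes no API calls. The metric type strings, the `k8s_scale` resource type, and the label names are assumptions that do not appear in this post, so confirm them against the Cloud Monitoring metrics list before relying on them. The cluster, namespace, and controller names are hypothetical.

```python
# Assumed metric types; verify against the Cloud Monitoring metrics list.
CPU_METRIC = "kubernetes.io/autoscaler/container/cpu/per_replica_recommended_request_cores"
MEMORY_METRIC = "kubernetes.io/autoscaler/container/memory/per_replica_recommended_request_bytes"


def build_filter(metric_type: str, cluster_name: str, namespace: str, controller_name: str) -> str:
    """Build a Monitoring filter for one workload's recommendation time series."""
    clauses = [
        f'metric.type = "{metric_type}"',
        'resource.type = "k8s_scale"',  # assumed monitored resource type
        f'resource.labels.cluster_name = "{cluster_name}"',
        f'resource.labels.namespace_name = "{namespace}"',
        f'resource.labels.controller_name = "{controller_name}"',
    ]
    return " AND ".join(clauses)


# Hypothetical cluster, namespace, and Deployment names.
cpu_filter = build_filter(CPU_METRIC, "prod-cluster", "default", "web")
print(cpu_filter)
```

A filter like this could then be passed to whichever Cloud Monitoring client or API you use to list time series.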

Excited about the new optimization opportunities? Ready for a recap of many other things you could do to run GKE more optimally? Check out our Best Practices for Running Cost Effective Kubernetes Applications, the YouTube series, and the GKE best practices to lessen overprovisioning.
