This is a guest post by Sahil Thakral, Lead Platform Engineer, OLX Autos and Shashank Singh, Lead Platform Engineer, OLX Autos.

In 2019, OLX Autos merged with Frontier Car Group (FCG), a used auto marketplace in Germany. Until then, OLX Autos had been exclusively using AWS to host its cloud infrastructure. However, FCG was running on Google Cloud Platform (GCP). With the merger, OLX Autos became a multi-cloud company, which made operations more complex, costly, and time-consuming. OLX Autos sought to streamline its cloud infrastructure, and in January 2022, the business completed migrating its workloads to AWS.

In this post, we share how we migrated the databases of our car transaction platform to AWS using AWS Database Migration Service (AWS DMS). We also dive into the path we took, the challenges we faced, and the solutions we devised to overcome them. The journey has helped us optimize our platform. We continue to use AWS to make the OLX Autos platform better.

About OLX Autos

OLX Autos—part of the OLX Group—is a global marketplace for buying and selling used vehicles. The business aims to provide a safe, convenient, one-stop solution for both buyers and sellers. OLX Autos is revolutionizing the used vehicles trade by combining online and offline experiences—offering convenience, safety, and peace of mind for buyers and sellers alike. Since its inception, OLX Autos has expanded to 11 countries.

Why migration?

When OLX Autos merged with FCG, OLX Autos’ infrastructure was hosted on AWS, and FCG’s was hosted on GCP. FCG’s deployment on GCP and OLX Autos’ deployment on AWS had different architectures. This led to multiple problems:

Disparities created challenges for engineers. For example, a few services used a GitLab CI/CD flow, and others used an in-house CI/CD flow on top of GitLab. For a new engineer, it was a learning curve and an ongoing challenge to be productive while working with this disparity.

Debugging was hard for engineers. Engineers had to know the entire structure to get to the root cause. This resulted in higher Mean Time to Recover (MTTR).

OLX Autos is built on microservice design principles. There were many flows with inter-service communication where requests went from a service in AWS to a service in GCP, and vice versa. This communication happened over the public internet, thereby adding latency to service calls and degrading the end-user experience.

For transaction systems, there was no similarity across countries. The operation of OLX Autos changes from one country to another, so it needs segregation. Most of the applications were using databases that were shared across countries, which made them harder to maintain. We wanted to segregate databases by country, and the workload migration was the right time to do it.

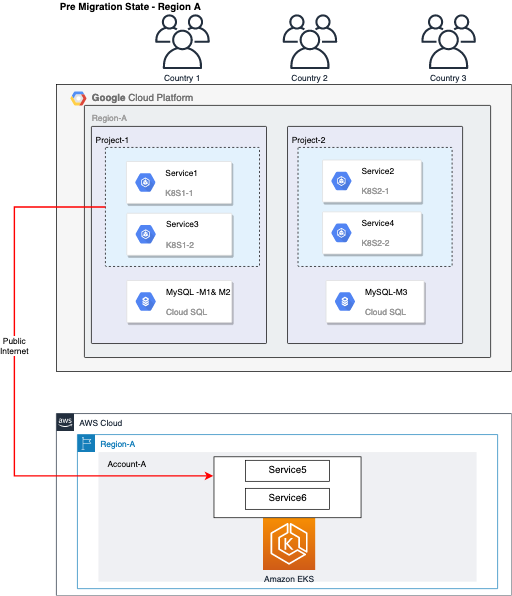

The following diagram illustrates our pre-migration architecture.

For the reasons mentioned above, migration to a single cloud was the ideal way forward. We chose AWS for the following reasons:

Quick support – We were already running our workload on AWS and using AWS Enterprise Support. We were satisfied with our experience with AWS Support. We had multiple channels to communicate with the AWS Support team, which helped during production issues.

Minimal downtime – We were confident in the reliability of AWS services. We experienced minimal downtime during our operations on AWS.

Engineering experience on AWS – Our engineering team had a history of using AWS services, which made it easier for us to operate on AWS.

Low cost – AWS services are cost-effective, and we could use Reserved Instances (RI) and Spot Instances (SI) to help further drive down the cost.

The following diagram illustrates our post-migration architecture.

Relational database migration

We had to migrate applications for nine countries from GCP to AWS. We decided to break the migration into smaller phases to mitigate our risk.

We broke the migration process of nine countries into seven phases. Our deployment was distributed across three Regions. In some cases, more than one country was hosted on a single database in a Region. In each phase, we aimed to migrate one or two countries at a time for the following reasons:

This strategy helped us reduce our risk and our debugging sphere in case of any issues.

Countries were operating in different time zones, so migrating one or two at a time allowed us to have a clear downtime window for migration activity.

It allowed us to start the migration with countries that had a smaller user base and fewer third-party integrations. This helped us learn from these migrations and apply those learnings to our bigger countries.

Data migration is one of the most crucial parts of any migration, and this was also our first major hurdle. We were using Cloud SQL for MySQL and PostgreSQL. The migration target would be Amazon Aurora. The size of a single MySQL database for our largest country was 1.5 TB. The size of PostgreSQL was less than 50 GB. We wanted to do a one-time migration of data followed by live replication of changes. Once live replication was in progress, we would perform the application cutover.

First hurdle: Cloud SQL support

We started by doing a proof of concept on AWS DMS. We looked at a few open-source products as well. However, getting support and expertise on them was challenging, so we did not consider those products.

We wanted to use AWS DMS for the following reasons:

AWS DMS has been a reliable tool that we previously used to perform a large-scale migration from on-premises to AWS.

AWS DMS continuously replicates the changes from source database to target database during migration while keeping the source database operational.

A migration task can be set up within a few minutes on the AWS DMS console. These tasks can also be automated through AWS CloudFormation templates or Terraform, which saves a lot of time and effort when migrating a large number of databases.

AWS DMS is a self-healing service and automatically restarts if an interruption occurs. AWS DMS provides an option to set up Multi-AZ replication for disaster recovery.

However, AWS DMS didn’t support Cloud SQL for MySQL as the migration source when Allow only SSL connections=True was set on the source Cloud SQL databases. This configuration required the Cloud SQL client to use a client-side certificate to connect (for more information, refer to Connect to your Cloud SQL instance using SSL).

AWS DMS task configuration didn’t support providing client-side certificates. All of our Cloud SQL databases were configured with Allow only SSL connections=True for security and compliance. Hence, we had to implement a custom solution. The solution we used was a combination of AWS DMS and a Cloud SQL proxy client.

AWS DMS with a Cloud SQL proxy

We created an Amazon Elastic Compute Cloud (Amazon EC2) instance and deployed a Cloud SQL proxy client on the instance. This would connect to Cloud SQL for MySQL using the client-side certificate, and the AWS DMS source endpoint would point to this EC2 instance instead of Cloud SQL.
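As a rough illustration of that setup, the following Python sketch builds the parameters for the AWS DMS CreateEndpoint API so that the source endpoint targets the proxy host. It is not our actual configuration: the endpoint identifier, IP address, port, and credentials are hypothetical, and it assumes that the proxy handles the certificate-based connection to Cloud SQL so the hop from AWS DMS to the proxy needs no SSL settings.

```python
def build_proxy_source_endpoint(proxy_host, username, password, port=3306):
    """Build keyword arguments for the AWS DMS CreateEndpoint API.

    The endpoint targets the EC2 host running the Cloud SQL proxy client,
    not the Cloud SQL instance itself.
    """
    return {
        "EndpointIdentifier": "cloudsql-mysql-via-proxy",  # hypothetical name
        "EndpointType": "source",
        "EngineName": "mysql",
        "ServerName": proxy_host,  # private address of the proxy EC2 instance
        "Port": port,              # port the proxy listens on (assumed 3306)
        "Username": username,
        "Password": password,
        # Assumption: the proxy presents the client certificate to Cloud SQL,
        # so the DMS-to-proxy connection is configured without SSL.
        "SslMode": "none",
    }


def create_endpoint(params):
    """Create the endpoint with boto3 (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3
    return boto3.client("dms").create_endpoint(**params)


# Hypothetical values for illustration only.
params = build_proxy_source_endpoint("10.0.1.25", "dms_user", "example-password")
print(params["ServerName"], params["Port"])
```

Calling create_endpoint(params) would register the endpoint. In practice, the password would come from a secrets store rather than source code.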

The following diagram shows the overall migration strategy.

We chose the r5.2xlarge EC2 instance type for the proxy server. We created an AWS DMS task to migrate existing data and replicate ongoing changes for each database. The schema was created using AWS Schema Conversion Tool (AWS SCT). The size of the replication instance was dms.r5.xlarge.

Smooth sailing for smaller instances

We used the DMS task type of “Migrate existing data and replicate ongoing changes” (Full load plus CDC) to migrate the data. We would start the migration task two days in advance of the cutover time. We took a downtime window during which we verified data correctness and did a clean cutover to the new database. We took an extended downtime window so that, in case of any mismatch, we could migrate the data again using a DMS task of type “Migrate existing data” (Full load).

The following points illustrate the overall approach:

Start data migration with a DMS task of type “Full load plus CDC” two days before the cutover window.

At the start of the downtime window, stop traffic at the source (GCP).

Wait for 15 minutes for the asynchronous transactions to get settled.

Run a data sanity check to confirm that all data has been migrated successfully.

If there is a data mismatch, start the AWS DMS tasks of type “Full load” and migrate the data again in the downtime window.

Set up the application at the target (AWS) in parallel.

When all the AWS DMS jobs are complete, perform the data sanity check again.

Switch traffic to the target (AWS).
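The ordering of these steps can be sketched as a small Python function. This is an illustrative outline rather than the tooling we ran: every hook (stop_source_traffic, data_matches, run_full_load, switch_traffic) is a hypothetical callable supplied by the caller, and raising an error when the data still mismatches after a full load is an assumption about how a rollback would be triggered.

```python
import time


def run_cutover(stop_source_traffic, data_matches, run_full_load,
                switch_traffic, settle_seconds=15 * 60, sleep=time.sleep):
    """Run the downtime-window steps in order and return a log of actions.

    Every callable is a hypothetical hook supplied by the caller.
    """
    log = []
    stop_source_traffic()
    log.append("stopped source traffic")
    sleep(settle_seconds)  # let asynchronous transactions settle
    log.append("waited for settle period")
    if not data_matches():
        run_full_load()  # migrate the data again inside the window
        log.append("re-ran full load")
        if not data_matches():
            # Assumption: a second mismatch means rolling back.
            raise RuntimeError("data mismatch after full load; roll back")
    log.append("data verified")
    switch_traffic()
    log.append("switched traffic to target")
    return log


# Dry run with stub hooks: the first sanity check fails, the second passes.
results = iter([False, True])
log = run_cutover(
    stop_source_traffic=lambda: None,
    data_matches=lambda: next(results),
    run_full_load=lambda: None,
    switch_traffic=lambda: None,
    sleep=lambda seconds: None,  # skip the real 15-minute wait in the dry run
)
print(log)
```

Passing the hooks in as arguments keeps the sequence testable without touching real traffic or databases.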

This solution worked smoothly and allowed us to migrate two of our smaller countries without any issue. In less than two hours, we performed the entire migration, and the MySQL migration was completed in less than one hour. The size of the largest MySQL database for these countries was around 150 GB.

Second hurdle: Large databases

A successful migration of two countries gave us confidence, but we still had to migrate larger ones, where the data size was about 10 times larger (about 1.5 TB). The stakes were higher for larger countries because these were sources of larger revenue. Any mistake in the migration could result in a loss of business. This was the real test of our solution.

We targeted one of our largest countries, Indonesia. The size of the database instance for this market was about 1.5 TB, with 22 databases hosted on the instance. We scheduled a four-hour downtime window during off-business hours and worked to ensure that we didn’t exceed it. Exceeding the window for this country would have meant loss of business.

On the day of migration, we encountered a couple of issues. Upon investigation, we decided to roll back the migration and to re-examine our approach and architecture.

Retrospective

After this setback, we did a retrospective of our approach. From the failed attempt, we learned that migrating 1.5 TB in under four hours – over the internet and without multiple parallel tasks – would not be feasible. We went back to the basics and assessed the entire migration strategy. We learned the following out of the exercise:

Analyze the functionality and find out ways to reduce the volume of data to be migrated in the downtime window.

Do migration test-runs for the data size to be migrated. This can help tune the size of replication instances and set expectations around resource consumption like max CPU or memory.

Expect failure and have an efficient rollback strategy.
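To put the four-hour constraint in perspective, the following back-of-the-envelope calculation estimates the sustained throughput needed to move 1.5 TB in that window. It assumes decimal terabytes and leaves no allowance for validation, retries, or cutover steps, so the real requirement would be higher.

```python
def required_throughput(total_bytes, window_seconds):
    """Return the sustained rate (bytes per second) needed to finish in time."""
    return total_bytes / window_seconds


TB = 10 ** 12            # decimal terabyte (assumption)
size_bytes = 1.5 * TB
window = 4 * 60 * 60     # four hours in seconds

rate = required_throughput(size_bytes, window)
print(f"{rate / 10**6:.1f} MB/s sustained")
print(f"{rate * 8 / 10**6:.0f} Mbit/s sustained")
```

That works out to roughly 104 MB/s, or about 833 Mbit/s, held constantly for four hours through a single pipeline, which is why reducing the data volume and parallelizing the transfer mattered.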

AWS DMS for large database migration

We reached out to AWS Support to get insights into the resets of AWS DMS task progress that we had observed. The Enterprise Support team explained that a connection interruption would cause a recoverable error, prompting AWS DMS to restart the task. This meant that using a Cloud SQL proxy wasn’t the root of the problem. This gave us confidence to work around AWS DMS and use it effectively.

We applied our learning from retrospection; the result was the following approach:

We created a read replica of Cloud SQL for the MySQL database in GCP. We would use the replica as the source instead of the primary. This would save resources of the primary and enable us to start AWS DMS tasks like migrating existing data and replicating ongoing changes during business hours well before the downtime window.

We didn’t have to migrate all the data to make our systems work. For example, we could migrate historical data not used by applications at a later point.

In our earlier approach, we were migrating all our data using the AWS DMS task to migrate existing data and replicate ongoing changes. Now we categorized our tables in two sets:

The first set contained very large tables with a lot of historical data. We did not have to migrate historical data during this migration. These tables could be migrated during the downtime window using a “Full load” task with a column filter to exclude historical data. These tasks were started in the migration window.

The second set contained tables that were relatively small. For these tables, we continued to use a DMS task of type “Full load plus CDC”, which we started two days before the migration window. We stopped that replication after the migration was complete.
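A column filter of the kind used for the first set of tables can be expressed in the AWS DMS table-mapping JSON. The following Python sketch generates such a mapping. The schema, table, column, and cutoff date are hypothetical, and the choice of a "greater than or equal to" condition on a timestamp column is an assumption about how historical rows might be excluded.

```python
import json


def recent_rows_mapping(schema, table, column, cutoff):
    """Build a DMS table mapping selecting rows where column >= cutoff."""
    return {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "1",
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
                "filters": [
                    {
                        "filter-type": "source",
                        "column-name": column,
                        "filter-conditions": [
                            {"filter-operator": "gte", "value": cutoff}
                        ],
                    }
                ],
            }
        ]
    }


# Hypothetical schema, table, column, and cutoff date.
mapping = recent_rows_mapping("transactions", "inspections", "created_at", "2021-01-01")
table_mappings_json = json.dumps(mapping, indent=2)
print(table_mappings_json)
```

The resulting JSON string is what a replication task with migration type full-load would receive as its table mappings. The rows left out by the filter can be moved later with a separate task.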

We parallelized migration by creating multiple AWS DMS tasks to migrate data in parallel. We had dedicated AWS DMS tasks for some large tables that were 70–80 GB in size. We created 30 DMS tasks for migration that executed in parallel.

We created custom scripts to measure the progress of the migration. The script counted the number of rows in the source and target databases. The script would trigger once migration of a database was completed.
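A progress check along these lines can be sketched in Python against any DB-API 2.0 connection. This is a simplified stand-in for our scripts, not the scripts themselves: the table name is hypothetical, and two in-memory SQLite databases play the roles of the Cloud SQL source and the Aurora target so the example runs on its own.

```python
import sqlite3


def row_counts(conn, tables):
    """Return {table: row count} using any DB-API 2.0 connection."""
    counts = {}
    cur = conn.cursor()
    for table in tables:
        # Table names come from a trusted, hard-coded list in this sketch.
        cur.execute(f"SELECT COUNT(*) FROM {table}")
        counts[table] = cur.fetchone()[0]
    return counts


def progress(source_counts, target_counts):
    """Return {table: fraction of source rows present on the target}."""
    return {
        table: (target_counts.get(table, 0) / total if total else 1.0)
        for table, total in source_counts.items()
    }


# Demo with two in-memory SQLite databases standing in for source and target.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn, rows in ((source, 4), (target, 3)):
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY)")
    conn.executemany("INSERT INTO orders (id) VALUES (?)", [(i,) for i in range(rows)])

report = progress(row_counts(source, ["orders"]), row_counts(target, ["orders"]))
print(report)
```

For tables migrated with a filter, the source count would need the same filter condition, otherwise the reported fraction never reaches 1.0.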

The following diagram shows the final migration strategy.

This approach worked well for us. It allowed us to migrate our data in close to three hours, which was well under the downtime window. We didn’t encounter any problems in the migration. We relied on our scripts to measure the progress of data migration, which showed a linear increase in data count in the target table during the migration.

Post-migration checks confirmed that the data in the target database was clean. We applied the following checks:

Confirm that the row counts in the source and destination databases match

Confirm that the data types, collation, and other properties of the tables in the source and destination databases match

We automated these steps using custom scripts.
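A metadata check of this kind can be reduced to comparing two dictionaries of column properties. The following Python sketch is a hypothetical illustration, not our actual script. It assumes the column metadata has already been read from each side (for example, from information_schema.columns) into a mapping of (table, column) to (data type, collation), and the sample tables and collations are made up.

```python
def diff_columns(source_cols, target_cols):
    """Compare column metadata and return a list of human-readable mismatches.

    Each argument maps (table, column) to a (data_type, collation) tuple.
    """
    problems = []
    for key in sorted(source_cols.keys() | target_cols.keys()):
        src, tgt = source_cols.get(key), target_cols.get(key)
        if src is None:
            problems.append(f"{key[0]}.{key[1]}: only on target")
        elif tgt is None:
            problems.append(f"{key[0]}.{key[1]}: missing on target")
        elif src != tgt:
            problems.append(f"{key[0]}.{key[1]}: {src} != {tgt}")
    return problems


# Made-up metadata: the collation of orders.status differs between the sides.
source_cols = {
    ("orders", "id"): ("bigint", None),
    ("orders", "status"): ("varchar", "utf8mb4_general_ci"),
}
target_cols = {
    ("orders", "id"): ("bigint", None),
    ("orders", "status"): ("varchar", "utf8mb4_0900_ai_ci"),
}
mismatches = diff_columns(source_cols, target_cols)
print(mismatches)
```

An empty list means the compared properties agree. Further properties, such as nullability or defaults, could be added to the tuples in the same way.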

We followed this migration template to migrate the remaining countries successfully. This was one of our largest initiatives, which opened the gates for the rest of the migration.

Conclusion

OLX Autos’ migration to AWS was a major milestone for the team. AWS DMS again proved to be a reliable tool. We had used it for a large-scale migration from on-premises to AWS in the past, and have now used it to migrate from another cloud provider to AWS. AWS DMS is reliable and offers ease of use and monitoring. However, to migrate large databases, you may need to break the migration into multiple DMS tasks that can run in parallel.

Our experience validates the database proxy-based approach for large-scale migration. You can apply this approach to use AWS DMS for migration of MySQL databases from other cloud services to AWS. Using a proxy gives you the flexibility to provide connection parameters such as a client-side certificate.

We took away the following key learnings:

Test the approach for the largest possible volume with real data.

Separate migration of live data from historical data. This enables you to reduce the volume of data that needs to be migrated live or within a downtime window.

Clean up the source databases to remove unwanted or temporary data and unused indexes. This will reduce the migration time.

Use parallel tasks to make the migration faster. Creating dedicated AWS DMS tasks for large tables is a good approach to follow.

Use a MySQL replica as a source for the migration. This helps you run the migration tasks even during business hours without impacting the end-user experience.

Build an independent mechanism to measure the progress of migration tasks.

Build scripts to validate metadata like count of rows, data types, collation and other properties of tables.
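The learning about parallel tasks can be made concrete with a small planning helper. The following Python sketch gives each large table its own task and spreads the remaining tables across a fixed number of shared tasks using a greedy balance by size. The 70 GB threshold echoes the table sizes mentioned earlier, but the table names, sizes, task count, and the greedy strategy itself are assumptions for illustration, not the method we used.

```python
def plan_tasks(table_sizes_gb, dedicated_threshold_gb=70, shared_task_count=3):
    """Assign tables to tasks: big tables alone, the rest balanced by size."""
    dedicated = [[t] for t, size in table_sizes_gb.items()
                 if size >= dedicated_threshold_gb]
    small = sorted(
        ((t, s) for t, s in table_sizes_gb.items() if s < dedicated_threshold_gb),
        key=lambda item: item[1], reverse=True,
    )
    shared = [[] for _ in range(shared_task_count)]
    loads = [0.0] * shared_task_count
    for table, size in small:
        i = loads.index(min(loads))  # greedy: place on the lightest task
        shared[i].append(table)
        loads[i] += size
    return dedicated + [group for group in shared if group]


# Hypothetical table sizes in GB.
sizes = {"events": 80, "audit_log": 75, "orders": 20, "users": 10,
         "payments": 12, "leads": 5}
tasks = plan_tasks(sizes, shared_task_count=2)
print(tasks)
```

Each inner list would become the table selection of one AWS DMS task. Test runs with real data remain the way to confirm that the replication instance can sustain the chosen number of parallel tasks.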

A successful migration has made our infrastructure more stable and reliable. Being on AWS enables us to scale quickly, thereby improving our time to market.

About the authors

Sahil Thakral is a Lead Platform Engineer at OLX Autos based in Delhi, India. In his more than 7 years of industry experience, Sahil has dealt with every aspect of software engineering, from frontend to backend to cloud infrastructure. In his current role, he is responsible for designing, developing, architecting, and implementing scalable, resilient, distributed, and fault-tolerant platforms.

Shashank Singh is a Lead Platform Engineer at OLX Autos. He is an IT veteran with expertise in designing, architecting, and implementing scalable and resilient cloud-native applications. His passion for solving the complex challenges people encounter in their cloud journey has helped him build knowledge across all leading cloud providers.

Akshaya Rawat is a Solutions Architect at AWS. He works from New Delhi, India. He works for large startup customers of India to architect and build resilient, scalable systems in the cloud. He has 20 years of experience in multiple engineering roles.
