Today we hear from Freestar, whose ad monetization platform helps digital media publishers and app developers maximize ad revenue with scalable, efficient targeting opportunities. Founded in 2015 and reaching over 70% of U.S. internet users monthly, the company is now among the top 15 in U.S. internet reach. Vijaymohanan Pudukkudi, Vice President of Engineering – Architecture at Freestar, guides the company’s Ad Tech platform architecture. In this blog post, Vijay discusses Freestar’s decision to adopt a multi-regional cluster configuration for its Memorystore deployment and to use Envoy proxy to facilitate replication.

As Freestar has continued to grow, we support a global customer base that demands the lowest possible latencies, both from a customer experience and revenue standpoint. Specifically, we aim for ad-configuration delivery with a client-side latency under 80 ms at the 90th percentile, with server-side round trip time for requests under 20 ms at the 95th percentile.

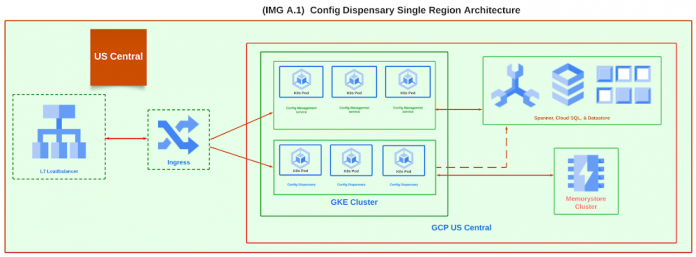

Since Freestar expanded globally, we’ve faced challenges with latency. Originally, we served ad configurations solely from Google Cloud’s US-Central region. This resulted in high latency for customers accessing the configuration from outside the US. One option was Google Cloud’s Content Delivery Network (CDN), but we deemed it unsuitable because of the dynamic nature of our configurations. To meet our ambitious goals, we had to evolve our single-region architecture (see IMG A.1) into a multi-regional one.

The problem

As our global footprint grew, our risk analysis pinpointed a significant technical concern related to our critical service delivery infrastructure. Prior to transitioning to a multi-regional design, Freestar’s infrastructure was concentrated in the US-central region, spread across multiple zones. This left us exposed to regional disruptions in Google Kubernetes Engine, which could have caused complete service downtime affecting all our customers.

We also rely on Google Cloud’s Memorystore for rapid configuration lookups. When we transitioned to a multi-regional setup, services in other regions had to read configurations from the Memorystore cluster in the US-central region, which introduced additional network overhead. For requests served from regions outside US-Central, we observed an additional latency of 100 to 240 ms at the 95th percentile, which would have increased total service latency from our benchmark of 80 ms to approximately 300 ms. Although this performance might be satisfactory in many scenarios, it didn’t meet the requirements of our use case. You can view the related data on inter-regional and inter-continental network latency on Google Cloud in the publicly available Looker Studio Report. To address the issue, we decided to distribute the Memorystore cluster regionally.

The challenges

Currently, Memorystore does not support out-of-the-box replication across multiple regions within Google Cloud. One solution we considered was building application-level code to actively call individual regional API endpoints and trigger refreshes of Memorystore keys. However, this approach would have introduced multiple points of failure and led to inconsistent configurations across regions. That inconsistency would in turn have required a separate process to keep customer Ad configurations consistent across all regions and refreshed as needed. This option would also have required a significant amount of development effort. Thus, we sought a different approach.

The solution

Ultimately, based on this solution blog, we chose to go with Envoy proxy to facilitate replication. This strategy was essential in enabling high-throughput applications and multi-regional access patterns on the Memorystore infrastructure. Motivated by this approach, we devised a solution to replicate and keep configurations consistent across multi-regional Memorystore clusters.

First, we configured the Envoy proxy to propagate all the write operations to every regional cluster. This procedure is triggered whenever an event occurs in the primary Memorystore cluster in the US-central region. The Envoy proxy is configured as a sidecar container, running alongside the microservice that initiates the Memorystore write operations.

This approach required minimal developer time because the only change necessary in the application code was updating the Memorystore configuration to use the Envoy proxy’s IP address and port. In this case, we used localhost, because the Envoy proxy and the application run within the same GKE Pod. Going forward, this solution will also let us add regional clusters without requiring any application code updates.
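To make the sidecar arrangement concrete, here is a minimal sketch of a GKE Deployment in which the application and Envoy share a Pod. It is an illustration under assumptions, not Freestar's actual manifest: the names `config-dispensary` and `envoy`, both image paths, and the `REDIS_HOST` / `REDIS_PORT` environment variables are hypothetical placeholders. Only the use of localhost and port 6379 comes from the setup described in this post.

```yaml
# Hypothetical Deployment sketch: all names, images, and env vars are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: config-dispensary
spec:
  replicas: 1
  selector:
    matchLabels:
      app: config-dispensary
  template:
    metadata:
      labels:
        app: config-dispensary
    spec:
      containers:
        # Application container: talks to Memorystore only through the sidecar.
        - name: app
          image: example.com/config-dispensary:latest   # placeholder image
          env:
            - name: REDIS_HOST        # assumed variable name
              value: "127.0.0.1"      # Envoy listens on localhost in the same Pod
            - name: REDIS_PORT        # assumed variable name
              value: "6379"
        # Envoy sidecar: receives Redis commands and forwards or mirrors them.
        - name: envoy
          image: example.com/envoy-redis-proxy:latest   # placeholder image
          ports:
            - containerPort: 6379
```

Because containers in a Pod share a network namespace, the application reaches the sidecar over the loopback interface, so adding a region should only require a change to the Envoy configuration.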

Envoy proxy sidecar configuration

Remote cluster configuration

This cluster configuration (see IMG A.2) defines the IP address and port of the remote regional Memorystore instance. Envoy uses it to reach the actual Memorystore cluster from the virtual configuration.
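For readers without access to the image, a remote cluster entry in envoy.yaml might look roughly like the sketch below. The cluster name `europe_west1`, the IP address, and the timeout are placeholders assumed for illustration and are not Freestar's actual values.

```yaml
# Hypothetical remote cluster entry: name, address, and timeout are placeholders.
static_resources:
  clusters:
    - name: europe_west1              # assumed name for one remote regional cluster
      connect_timeout: 3s
      type: STATIC                    # endpoint is a fixed IP address
      load_assignment:
        cluster_name: europe_west1
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: 10.0.0.5     # placeholder Memorystore IP
                      port_value: 6379      # default Redis port
```

One such entry would be needed for each regional Memorystore instance that should receive writes.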

Local proxy cluster configuration

The following configuration (IMG A.3) is the us-central virtual (proxy) configuration within the pod where the sidecar runs, which lets it propagate, or mirror, the write commands.
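One plausible shape for such a local proxy cluster is a cluster whose endpoint is a loopback address served by a second Envoy listener in the same sidecar, which in turn forwards to the remote region. The sketch below assumes that layout. The name `europe_west1_proxy` and port 6380 are hypothetical, and the actual configuration in the image may differ.

```yaml
# Hypothetical local proxy cluster: name and port are placeholders.
static_resources:
  clusters:
    - name: europe_west1_proxy        # assumed name for the in-pod proxy cluster
      connect_timeout: 3s
      type: STATIC
      load_assignment:
        cluster_name: europe_west1_proxy
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: 127.0.0.1    # loopback: stays inside the Pod
                      port_value: 6380      # assumed port of a second local listener
```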

Envoy proxy listener configuration

The Envoy listener (IMG A.4) handles commands issued by the application, in this instance the Config Dispensary. The application connects to Memorystore through localhost on port 6379. Envoy then applies the ‘us_central1’ remote configuration and directs the command to the actual cluster.

Looking closely at the configuration, you’ll notice a block that mirrors all commands to another local proxy cluster. An accompanying exclusion setting prevents read operations from being mirrored, so only write commands are propagated to the other regions.
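A listener with this behavior can be sketched with Envoy's Redis proxy network filter, whose `request_mirror_policy` supports an `exclude_read_commands` flag. In the fragment below, the cluster name `us_central1` follows the text above, while the listener name, `stat_prefix`, timeout, and the mirror target `europe_west1_proxy` are assumptions made for illustration.

```yaml
# Hypothetical listener sketch: names other than us_central1 are placeholders.
static_resources:
  listeners:
    - name: redis_listener
      address:
        socket_address:
          address: 127.0.0.1          # the application connects via localhost
          port_value: 6379
      filter_chains:
        - filters:
            - name: envoy.filters.network.redis_proxy
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.network.redis_proxy.v3.RedisProxy
                stat_prefix: redis_primary
                settings:
                  op_timeout: 5s
                prefix_routes:
                  catch_all_route:
                    cluster: us_central1            # primary regional cluster
                    request_mirror_policy:
                      - cluster: europe_west1_proxy # assumed mirror target
                        exclude_read_commands: true # mirror writes only
```

Additional regions would be added as further entries under `request_mirror_policy`. Envoy does not wait for or return responses from mirrored requests, so the primary write path is not slowed by the remote regions.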

The image below (IMG A.5) shows the actual configuration settings. It demonstrates how the application from the us-central region (primary location) interacts with Memorystore through Envoy proxy for write operations.

The architecture diagram (IMG A.6) below shows how the regional Memorystore cluster and the microservices communicate. Except in ‘us-central’, microservices function without a proxy. In ‘us-central’, the proxy oversees read/write activities within the region and distributes writes throughout other regions.

The fully configured envoy.yaml and Dockerfile configuration for the Envoy Proxy are available on GitHub.

The architecture diagram (IMG A.7) below provides a comprehensive view of our microservice infrastructure. We leverage services such as Cloud Spanner, Cloud SQL, and Datastore to store the primary copy of our ad configuration in the US-central region. Any changes to this primary configuration instigate a Memorystore refresh in the US-central region. Subsequently, these updates propagate to other regional Memorystore clusters with the assistance of the Envoy proxy.

What comes after ‘Go’: Post-deployment analysis

By switching from a single-region Redis cluster to a multi-regional Memorystore Redis setup with Envoy proxy replication, we’ve significantly reduced latency, matching the performance of the previous single-region deployment.

Write replication takes around 120 ms at the 95th percentile to synchronize data across regions, but because our system is read-heavy, this delay is inconsequential.

Since implementing this setup two months ago, we’ve had no replication or Envoy proxy issues. The application uses the US-Central region as its main region and uses Envoy for all Memorystore operations. Memorystore handles about 40K requests per second at peak hours with 5 ms latency at the 95th percentile. At peak periods, our Ad Config Service handles more than 15K API requests per second. With a consistent 80 ms latency on the client side (web browser) at the 90th percentile, we’ve reached our targets following the expansion of our critical services across multiple regions.

Collaborating with the Google Cloud team, we’ve turned complex challenges into a robust solution, and the Google Cloud team’s meticulous validation of our system made us confident that it complies with industry standards. We now have a reliable solution for replicating Memorystore data globally, and consider the collaboration with Google Cloud a great success.
