Most organizations need to monitor activity on databases that contain sensitive information to support security auditing and compliance. Some security operations teams want to monitor all activity, such as reads, writes, and logons, whereas others want to restrict monitoring to activities that change data or data structures. In this post, we show you how to filter, process, and store the activities generated by Amazon Aurora database activity streams that are relevant to specific business use cases.

Database activity streams provide a near-real-time stream of activity in your database, help with monitoring and compliance, and are a free feature of Aurora.

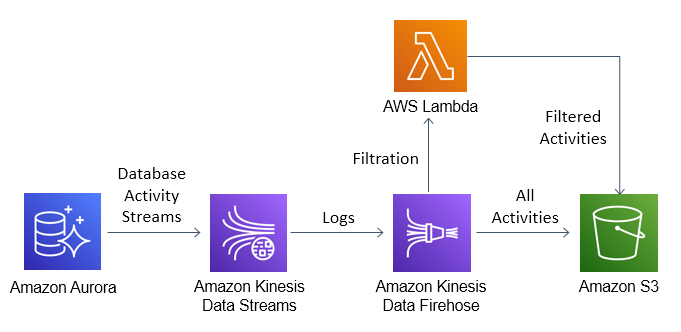

A best practice is to process and store activity (audit) information outside of the database and provide access to this information on a need-to-know basis. Database activity streams capture database activity from Aurora and stream it to an Amazon Kinesis data stream, which is created on behalf of your Aurora DB cluster. Multiple AWS services, such as Amazon Kinesis Data Firehose and AWS Lambda, can consume the activity stream from Kinesis Data Streams.

Solution overview

Applications can consume an activity stream for auditing, compliance and monitoring. However, as of this writing, database activity streams don’t segregate activity by type. Some organizations may want to audit or monitor specific activities like Data Definition Language (DDL) and Data Manipulation Language (DML) requests but want to exclude Data Query Language (DQL) requests. We show how a Kinesis Data Firehose delivery stream captures the activity data from a Kinesis data stream and invokes a Lambda function to segregate the activity type and store it in different folders in an Amazon Simple Storage Service (Amazon S3) bucket.

Database activity streams work similarly with Amazon Aurora PostgreSQL-Compatible Edition and Amazon Aurora MySQL-Compatible Edition, with some differences: Aurora MySQL doesn’t support synchronous audit event publishing, and there are minor differences in the information published per event, although the JSON structure and format are the same.

The following diagram illustrates the architecture of our solution.

Prerequisites

To use this solution, you need an AWS account with privileges to create resources like an Aurora DB cluster, Kinesis data stream, Firehose delivery stream, and Lambda function. For this post, we assume the Aurora cluster already exists. For more information about creating the Aurora cluster, see Getting started with Amazon Aurora. For more information about database activity streams, see Requirements for database activity streams.

When you enable a database activity stream, you create a Kinesis data stream, Firehose delivery stream, and other resources, which all incur additional costs. Review the pricing pages for these services to understand the associated costs before you provision resources. If you’re testing the solution, delete the resources you created when your tests are over.

Create a database activity stream

To set up your database activity stream, complete the following steps:

On the Amazon RDS console, choose Databases in the navigation pane.

Select your Aurora cluster.

On the Actions menu, choose Start database activity stream.

For Master key, choose RDS-S3-Export.

You use this key to encrypt the activity stream data.

For Database activity stream mode, select Asynchronous.

For Apply immediately, select Apply immediately.

Choose Continue.

This initiates the creation of the activity stream and the associated Kinesis data stream. After the activity stream is configured, activity data is encrypted using the RDS-S3-Export key. This activity data is streamed through the Kinesis data stream.
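If you prefer to script this step, the console actions above can be sketched with the Boto3 `start_activity_stream` call. This is a minimal sketch, not the method used in this post: the function names, the cluster ARN, and the key identifier are placeholders that we made up for illustration, and the code assumes your credentials are allowed to start an activity stream.

```python
def build_start_request(cluster_arn: str, kms_key_id: str) -> dict:
    """Build the keyword arguments for the RDS StartActivityStream API call."""
    return {
        "ResourceArn": cluster_arn,   # ARN of the existing Aurora DB cluster
        "Mode": "async",              # matches the Asynchronous choice in the console
        "KmsKeyId": kms_key_id,       # key used to encrypt the activity stream data
        "ApplyImmediately": True,     # matches the Apply immediately choice
    }


def start_activity_stream(cluster_arn: str, kms_key_id: str) -> dict:
    """Start the activity stream; requires AWS credentials and network access."""
    import boto3  # imported lazily so the builder above can be used without AWS access

    rds = boto3.client("rds")
    return rds.start_activity_stream(**build_start_request(cluster_arn, kms_key_id))
```

The response from `start_activity_stream` includes the name of the Kinesis data stream that Aurora creates for the cluster, which is useful in the later steps.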

Sample activity stream records

The following code is a sample of activity stream records. For details about the structure of Aurora PostgreSQL and Aurora MySQL activity stream records, see Monitoring database activity streams.
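As a rough guide to the shape of a raw record, the following sketch builds a placeholder envelope and splits it into its two encrypted parts. The field names (`type`, `version`, `databaseActivityEvents`, `key`) reflect our understanding of the documented format, so check them against Monitoring database activity streams. All values are invented placeholders, not real stream output, and `split_envelope` is a hypothetical helper name.

```python
import base64
import json

# Placeholder envelope: every value here is invented for illustration only.
envelope_json = json.dumps({
    "type": "DatabaseActivityMonitoringRecords",
    "version": "1.1",
    "databaseActivityEvents": base64.b64encode(b"<encrypted payload>").decode(),
    "key": base64.b64encode(b"<encrypted data key>").decode(),
})


def split_envelope(raw: str) -> tuple:
    """Return (encrypted_events, encrypted_data_key) as raw bytes."""
    envelope = json.loads(raw)
    return (
        base64.b64decode(envelope["databaseActivityEvents"]),
        base64.b64decode(envelope["key"]),
    )


encrypted_events, encrypted_key = split_envelope(envelope_json)
```

Both parts are still encrypted at this point. The data key must be decrypted with AWS KMS before the events can be read.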

Lambda function

The Lambda function is invoked by Kinesis Data Firehose. We configure the Lambda event source after we create the function. Aurora activity data is Base64 encoded and encrypted using an AWS Key Management Service (AWS KMS) key. For this post, we decrypt the data and store it in an appropriate folder in the S3 bucket based on activity type. However, we recommend encrypting the activity data before storing it in the folder, or enabling encryption at the bucket level. We also discard heartbeat events and store other events in specific folders like CONNECT, DISCONNECT, ERROR, and AUTH FAILURE. Pay attention to comments within the Lambda code because they highlight activities like decryption, filtering, and putting data in a specific folder. For instructions on creating a Lambda function, see the AWS Lambda Developer Guide.
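The filtering logic described above can be sketched as a Kinesis Data Firehose transformation handler. This is a simplified illustration under stated assumptions, not the function from AWS Samples. The `decrypt_stub` placeholder stands in for the whole KMS and `aws_encryption_sdk` decryption step. The keyword sets, the reliance on a `command` field, and the choice to keep only DDL and DML events are our assumptions. The sketch also returns the kept events to Firehose instead of writing them to S3 folders itself.

```python
import base64
import json

# Assumed keyword sets; adjust them to the commands your engine reports.
DDL = {"CREATE", "ALTER", "DROP", "TRUNCATE"}
DML = {"INSERT", "UPDATE", "DELETE"}
DQL = {"SELECT"}


def classify(event: dict) -> str:
    """Map one activity event to a category; HEARTBEAT marks events to discard."""
    if event.get("type") == "heartbeat":
        return "HEARTBEAT"
    words = (event.get("command") or "").upper().split()
    verb = words[0] if words else ""
    if verb in DDL:
        return "DDL"
    if verb in DML:
        return "DML"
    if verb in DQL:
        return "DQL"
    return "OTHER"


def decrypt_stub(payload: bytes) -> bytes:
    """Placeholder: real code would decrypt with AWS KMS and aws_encryption_sdk."""
    return payload


def handler(event, context, decrypt=decrypt_stub):
    """Keep DDL and DML events, drop everything else, and answer Firehose."""
    output = []
    for record in event["records"]:
        activity = json.loads(decrypt(base64.b64decode(record["data"])))
        kept = [e for e in activity.get("databaseActivityEventList", [])
                if classify(e) in {"DDL", "DML"}]
        if kept:
            # Newline-delimited JSON keeps one event per line in the S3 object.
            body = "".join(json.dumps(e) + "\n" for e in kept).encode()
            output.append({"recordId": record["recordId"], "result": "Ok",
                           "data": base64.b64encode(body).decode()})
        else:
            output.append({"recordId": record["recordId"], "result": "Dropped",
                           "data": record["data"]})
    return {"records": output}
```

Firehose expects one response entry per incoming `recordId`, so dropped records are reported with the `Dropped` result rather than omitted.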

The following is the sample Lambda function code in Python 3.8 using Boto3. This code depends on the aws_encryption_sdk library, which must be packaged with the Lambda code. aws_encryption_sdk in turn depends on the cryptography library, which is OS dependent. Because Lambda runs on Linux, include the Linux build of the cryptography library in the Lambda package.

When choosing an existing or new Lambda execution role, make sure the role has access to the S3 bucket, Kinesis Data Firehose, and AWS KMS. It’s always a best practice to follow the principle of least privilege.

The following Lambda code can be found at AWS Samples on GitHub:

Deploy the function

You can use the console to deploy the Lambda function or use the following AWS Serverless Application Model (AWS SAM) template to deploy it. The entire package is available in AWS Samples on GitHub.

Sample parsed records

The following is a sample record parsed by the preceding Lambda function for a CREATE INDEX event:

Validate the data stream

After you create the activity stream, you can go to the Kinesis Data Streams console to see the stream details.
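The same check can be scripted. The following sketch reads the stream name from the cluster description and then asks Kinesis for the stream status. The helper names and the cluster identifier you pass in are placeholders of ours, and the second function needs AWS credentials to run.

```python
from typing import Optional


def stream_name_from_cluster(cluster: dict) -> Optional[str]:
    """Return the Kinesis stream name if the activity stream is started."""
    if cluster.get("ActivityStreamStatus") != "started":
        return None
    return cluster.get("ActivityStreamKinesisStreamName")


def check_activity_stream(cluster_identifier: str) -> Optional[str]:
    """Return the Kinesis stream status, or None if no activity stream is running."""
    import boto3  # imported lazily so the helper above works without AWS access

    rds = boto3.client("rds")
    clusters = rds.describe_db_clusters(DBClusterIdentifier=cluster_identifier)
    name = stream_name_from_cluster(clusters["DBClusters"][0])
    if name is None:
        return None
    kinesis = boto3.client("kinesis")
    summary = kinesis.describe_stream_summary(StreamName=name)
    return summary["StreamDescriptionSummary"]["StreamStatus"]
```

A status of `ACTIVE` indicates that the data stream is ready to be used as a Firehose source.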

Create a Firehose delivery stream

To create your delivery stream, complete the following steps:

On the Kinesis Data Firehose console, choose Create delivery stream.

For Delivery stream name, enter a name.

For Choose a source, select Kinesis Data Stream.

For Kinesis data stream, choose the data stream you created when you created your database activity stream.

Choose Next.

For Transform source records with AWS Lambda, select Enable.

For Lambda function, choose the function you created.

Choose Next.

For Destination, select Amazon S3.

For S3 bucket, choose your S3 bucket.
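The console steps above map roughly onto a single Boto3 `create_delivery_stream` call. The following is a sketch under assumptions: all ARNs are placeholders, the function names are ours, and for brevity the same IAM role is used for reading the Kinesis data stream and writing to Amazon S3, which you may want to split in practice.

```python
def build_delivery_stream_request(name: str, kinesis_stream_arn: str,
                                  lambda_arn: str, bucket_arn: str,
                                  role_arn: str) -> dict:
    """Build the keyword arguments for the Firehose CreateDeliveryStream call."""
    return {
        "DeliveryStreamName": name,
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": kinesis_stream_arn,  # stream created by Aurora
            "RoleARN": role_arn,
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": bucket_arn,
            # Equivalent of enabling "Transform source records with AWS Lambda".
            "ProcessingConfiguration": {
                "Enabled": True,
                "Processors": [{
                    "Type": "Lambda",
                    "Parameters": [{"ParameterName": "LambdaArn",
                                    "ParameterValue": lambda_arn}],
                }],
            },
        },
    }


def create_delivery_stream(**kwargs) -> dict:
    """Create the delivery stream; requires AWS credentials and network access."""
    import boto3  # imported lazily so the builder above can be used without AWS access

    firehose = boto3.client("firehose")
    return firehose.create_delivery_stream(**build_delivery_stream_request(**kwargs))
```

Keeping the request builder separate from the API call makes it easy to review the configuration before anything is created.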

S3 bucket folder structure

Our Lambda function creates the following folder structure based on the event type. You can customize this in the Lambda code before writing the object to Amazon S3.
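One way to derive a folder from the event type is sketched below. The layout (category, then date, then record ID) and the `object_key` helper are hypothetical choices for illustration and may differ from the structure that the sample function produces.

```python
from datetime import datetime, timezone


def object_key(category: str, when: datetime, record_id: str) -> str:
    """Build an S3 object key such as DDL/2021/03/04/<record_id>.json."""
    # Normalize names like "AUTH FAILURE" into a single folder-friendly token.
    folder = category.strip().upper().replace(" ", "_")
    return f"{folder}/{when:%Y/%m/%d}/{record_id}.json"


key = object_key("auth failure", datetime(2021, 3, 4, tzinfo=timezone.utc), "abc123")
```

Partitioning by date under each category keeps listings small and makes it simpler to apply different retention or access rules per activity type.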

Clean up

When you’re done testing the solution, make sure you delete the resources you created to avoid ongoing charges. If you deployed the solution through AWS CloudFormation or AWS SAM, refer to the CloudFormation documentation to delete the resources when you no longer need them.

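If you created the resources manually on the AWS console, complete the following steps:

On the Amazon RDS console, stop the database activity stream from the Actions menu of your cluster (see the Actions menu step in Create a database activity stream).

On the Kinesis console, delete the Firehose delivery stream and the Kinesis data stream.

On the Lambda console, delete the Lambda function. Keeping the function doesn’t incur any cost, but deleting it avoids leaving unused resources behind.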

Delete the S3 bucket used in this solution.

Conclusion

In this post, we demonstrated the process of capturing a database activity stream and filtering records based on business requirements and specific use cases. With this approach, you can customize the Lambda function to capture events that are important for your use case and discard whatever is irrelevant. You can also perform additional activities like sending notifications on specific events.

For any questions or suggestions about this post, leave a comment.

About the Authors

Gaurav Sharma is a Solutions Architect at AWS. He works with digital-native business customers, providing architectural guidance on various AWS services. He brings more than 24 years’ experience in database development, database administration, and solution architecture.

Vivek Kumar is a Solutions Architect at AWS based out of New York. He works with customers to provide technical assistance and architectural guidance on various AWS services. He brings more than 23 years of experience in software engineering and architecture roles for various large-scale enterprises.

Nitin Aggarwal is a Solutions Architect at AWS. He helps digital native customers architect data analytics solutions and provides technical guidance on various AWS services. He brings more than 16 years of experience in software engineering and architecture roles for various large-scale enterprises.
