Successful organizations learn from the past, are able to predict the future, and understand the shape of their present. Enterprise Data Integration is the magic that binds all these views together.

What is Enterprise Data Integration?

Enterprise Data Integration is the process of combining data from various sources and unifying it into a consistent, usable view. In the past, this usually meant loading everything into a centralized warehouse of some sort. Today that unification can also happen in smaller but connected data marts that together make up an enterprise's data architecture. The topography of this space can be just as complex and varied as the data that flows through the systems.

This fluidity is due, in part, to the fast-changing nature of modern data structures and to the cheap, easy storage options available to the modern data engineer. As a result, data integration can be hard to pin down with a single definition. It is often easier to understand it as a dynamic process of responding to organizational needs with data, moving and transforming data as new needs arise.

Why Data Integration is Important for Enterprises

The need enterprises have for data integration should be obvious to anyone operating in the modern world. As the volume and complexity of data increase, so does our need to understand it.

The term "data integration" is necessarily broad because it has to cover so much. The depth and breadth of the data involved can be great, and the data itself can be extremely varied. Data integration services that position themselves as modern solutions must be flexible enough to accommodate these complexities.

More important than flexibility is speed. Data integration platforms must meet the demands of end users who increasingly expect clean, consumable data in real time. If you find out that it will rain after you've already left your house without an umbrella, that information doesn't do you much good. Timing is everything.

It's worth noting that, traditionally, the purpose of data integration was to unify data into one centralized location, and that may still be the goal for many. Typical destinations include data warehouses, data lakes, and data lakehouses, which combine the two. These destinations can be hosted anywhere, from on-premises to hybrid cloud to cloud-native environments.

A Holistic Approach to Enterprise Data Integration and Strategy

A discussion of data integration cannot be wholly separated from a discussion of data strategy. It isn't enough to have the power to connect to resources within your organization; you have to understand how to use the data once you gather it. The first step in that process is a successful data strategy.

Given the importance and complexity of modern data systems, a successful data strategy plans for change. Taken together, these plans are known as DataOps: the processes and systems that, combined, enable better collaboration and shorter development cycles. With DataOps in place, data engineers are empowered to create new data products quickly.

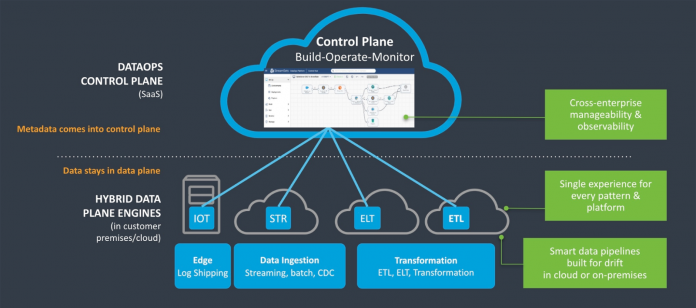

The data plane has to handle almost any degree of complexity in data and data sources. Separate from it is the control plane, where DataOps resides and where the chaos of data integration is brought under control.

Key Features of DataOps

Reusability – Constantly shifting requirements are a given in the world of data integration. A successful DataOps strategy uses the power of reusable code and structures to pivot quickly when things change. New integrations can rest on the foundation created by previously successful pipelines, which can dramatically speed up development.

Monitoring – Monitoring can ensure that the data flowing through a data integration is as expected, on time, and complete. In a world where outages can be disastrous, successful monitoring is key. But it's not just about accuracy; monitoring can also increase the speed at which errors are identified and corrected.

Version control – Borrowed from the world of software development, version control, and by extension metadata management, allows developers to keep a working copy of every change to their data integration. It is more than a simple bookmarking tool: version control allows rollbacks when something goes wrong, which helps avoid costly rewrites.

Collaboration – Maintaining a centralized plane to share metadata, version control, monitoring, and data pipelines within a team is essential to the speed of delivery. Imagine how fast teams can work when they understand at a glance what has been done and what still needs to be done – and have the tools to do it.
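To make the reusability idea above concrete, here is a minimal Python sketch. It assumes a pipeline is simply an ordered series of functions, each of which transforms a record (a flat dict). The names `cleanup_fragment`, `build_pipeline`, `crm_pipeline`, and `erp_pipeline` are hypothetical illustrations, not StreamSets APIs. The point it shows is that pipelines hold a reference to a shared set of stages rather than a copy, so a single edit to that shared set reaches every pipeline built on it.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Stage = Callable[[Record], Record]


def strip_strings(record: Record) -> Record:
    # Trim surrounding whitespace from every string value.
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def lowercase_keys(record: Record) -> Record:
    # Normalize field names so later stages see consistent keys.
    return {k.lower(): v for k, v in record.items()}


def drop_empty(record: Record) -> Record:
    # Remove fields whose value is None or an empty string.
    return {k: v for k, v in record.items() if v not in (None, "")}


def add_source_tag(record: Record) -> Record:
    # Hypothetical pipeline-specific stage that labels the record's origin.
    return {**record, "source": "crm"}


# Hypothetical shared, reusable group of stages (a "fragment").
cleanup_fragment: List[Stage] = [strip_strings, lowercase_keys]


def build_pipeline(*parts: List[Stage]) -> Callable[[Record], Record]:
    # The pipeline keeps references to its parts, not copies, and reads
    # them at run time, so editing a shared part changes every pipeline using it.
    def run(record: Record) -> Record:
        for part in parts:
            for stage in part:
                record = stage(record)
        return record

    return run


crm_pipeline = build_pipeline(cleanup_fragment, [add_source_tag])
erp_pipeline = build_pipeline(cleanup_fragment)
```

Appending `drop_empty` to `cleanup_fragment` changes the behavior of both `crm_pipeline` and `erp_pipeline` without touching either pipeline's definition. That is the "edit once, update everywhere" property, reduced to its simplest form.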

Enabling DataOps

Modernizing the tools used for data integration means selecting tools that power up your data engineers. Enterprise data platforms like StreamSets can facilitate important DataOps best practices. StreamSets enables DataOps through StreamSets Control Hub, the control plane from which data integration pipelines can be designed, deployed, and managed.

Here are some ways Control Hub does that:

Reusability – StreamSets supports several reusable pipeline design patterns, including fragments. Fragments are sets of connected stages that can be used over and over again in multiple pipelines. Edit a fragment once, and all the pipelines that use that fragment are updated too.

Monitoring – Control Hub provides real-time monitoring of data integrations, covering engine (compute) health, error information, logs, and alerts such as data drift alerts, which capture changes in the structure of data flowing through a pipeline.

Version control – Control Hub maintains the version history for each pipeline and fragment, so StreamSets makes it easy to compare two versions of the same pipeline or even switch between commits.

Collaboration – Keeping global operational control in one place means that teams can understand the health and progress of their data integrations at a glance.
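The data drift alerts mentioned under Monitoring come down to comparing the structure of incoming records with what a pipeline expects. The Python sketch below illustrates that general idea under simple assumptions: a record is a flat dict, its "schema" is just its field names mapped to Python type names, and the baseline is captured from a known-good record. It is not how Control Hub implements drift detection, and `infer_schema` and `detect_drift` are hypothetical names.

```python
from typing import Dict, List, Mapping


def infer_schema(record: Mapping[str, object]) -> Dict[str, str]:
    # Map each field name to the Python type name of its value.
    return {field: type(value).__name__ for field, value in record.items()}


def detect_drift(baseline: Mapping[str, str], record: Mapping[str, object]) -> List[str]:
    """Return drift messages for one record; an empty list means no drift."""
    observed = infer_schema(record)
    alerts: List[str] = []
    # Fields the baseline expects but the record lacks.
    for field in sorted(baseline.keys() - observed.keys()):
        alerts.append(f"missing field: {field}")
    # Fields the record has that the baseline has never seen.
    for field in sorted(observed.keys() - baseline.keys()):
        alerts.append(f"new field: {field}")
    # Fields present in both whose value type has changed.
    for field in sorted(baseline.keys() & observed.keys()):
        if baseline[field] != observed[field]:
            alerts.append(f"type change: {field} {baseline[field]} -> {observed[field]}")
    return alerts


baseline = infer_schema({"id": 1, "email": "a@b.com", "amount": 9.5})
```

For example, `detect_drift(baseline, {"id": "3", "amount": 4.0, "phone": "555"})` reports the missing `email` field, the new `phone` field, and the change of `id` from `int` to `str`. A real platform would track richer type information and nested structures, but the comparison against a known baseline is the core of the idea.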

From Enterprise Data Integration to Data Engineering

Modern data integration platforms have to be flexible, powerful, and fast to make the most of an enterprise's data. With features like reusable pipeline design patterns, fragment creation and reuse, and data drift management, StreamSets helps enterprises achieve these goals. StreamSets can empower data engineers to build successful, repeatable, and scalable data solutions.

The post Enterprise Data Integration: What it is, Why it Matters, and How to Approach It appeared first on StreamSets.
