Our planet faces a global extinction crisis. A UN report estimates that more than a million species are at risk of extinction. The most common causes include habitat loss, poaching, and invasive species. Wildlife conservation foundations, research scientists, volunteers, and anti-poaching rangers have been working tirelessly to address this crisis. Accurate, regular information about endangered animals in the wild improves conservationists’ ability to study and protect them. Wildlife scientists and field staff place cameras equipped with infrared triggers, called camera traps, at the most effective locations in forests to capture images of wildlife. These images are then reviewed manually, which is very time-consuming.

In this post, we demonstrate a solution that uses Amazon Rekognition Custom Labels with motion sensor camera traps to automate the recognition and study of endangered species. Rekognition Custom Labels is a fully managed computer vision service that allows developers to build custom models to classify and identify objects in images that are specific and unique to their use case. We detail how to recognize endangered animal species from images collected from camera traps, draw insights about their population count, and detect humans around them. This information helps conservationists make proactive decisions to protect these species.

Solution overview

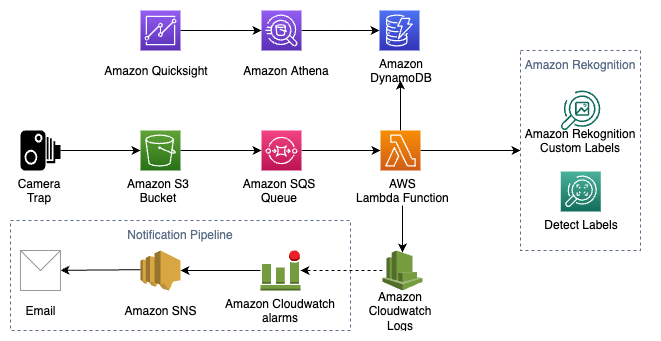

The following diagram illustrates the architecture of the solution.

This solution uses the following AI services, serverless technologies, and managed services to implement a scalable and cost-effective architecture:

Amazon Athena – A serverless interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL

Amazon CloudWatch – A monitoring and observability service that collects monitoring and operational data in the form of logs, metrics, and events

Amazon DynamoDB – A key-value and document database that delivers single-digit millisecond performance at any scale

AWS Lambda – A serverless compute service that lets you run code in response to triggers such as changes in data, shifts in system state, or user actions

Amazon QuickSight – A serverless, machine learning (ML)-powered business intelligence service that provides insights, interactive dashboards, and rich analytics

Amazon Rekognition – Uses ML to identify objects, people, text, scenes, and activities in images and videos, as well as detect any inappropriate content

Amazon Rekognition Custom Labels – Uses AutoML to help train custom models to identify the objects and scenes in images that are specific to your business needs

Amazon Simple Queue Service (Amazon SQS) – A fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications

Amazon Simple Storage Service (Amazon S3) – Serves as an object store for documents and allows for central management with fine-tuned access controls

The high-level steps in this solution are as follows:

Train and build a custom model using Rekognition Custom Labels to recognize endangered species in the area. For this post, we train on images of rhinoceros.

Images that are captured through the motion sensor camera traps are uploaded to an S3 bucket, which publishes an event for every uploaded image.

A Lambda function is triggered for every event published, which retrieves the image from the S3 bucket and passes it to the custom model to detect the endangered animal.

The Lambda function uses the Amazon Rekognition API to identify the animals in the image.

If the image has any endangered species of rhinoceros, the function updates the DynamoDB database with the count of the animal, date of image captured, and other useful metadata that can be extracted from the image EXIF header.

QuickSight is used to visualize the animal count and location data collected in the DynamoDB database to understand the variance of the animal population over time. By looking at the dashboards regularly, conservation groups can identify patterns and isolate probable causes like diseases, climate, or poaching that could be causing this variance and proactively take steps to address the issue.

Prerequisites

A good training set is required to build an effective model using Rekognition Custom Labels. We have used the images from AWS Marketplace (Animals & Wildlife Data Set from Shutterstock) and Kaggle to build the model.

Implement the solution

Our workflow includes the following steps:

Train a custom model to classify the endangered species (rhino in our example) using the AutoML capability of Rekognition Custom Labels.

You can also perform these steps from the Rekognition Custom Labels console. For instructions, refer to Creating a project, Creating training and test datasets, and Training an Amazon Rekognition Custom Labels model.

In this example, we use the dataset from Kaggle. The following table summarizes the dataset contents.

| Label | Training Set | Test Set |
| --- | --- | --- |
| Lion | 625 | 156 |
| Rhino | 608 | 152 |
| African_Elephant | 368 | 92 |

Upload the pictures captured from the camera traps to a designated S3 bucket.

Define the event notifications in the Properties section of the S3 bucket to send a notification to a defined SQS queue when an object is added to the bucket.

The upload action triggers an event that is queued in Amazon SQS using the Amazon S3 event notification.

Add the appropriate permissions via the access policy of the SQS queue to allow the S3 bucket to send the notification to the queue.

Configure a Lambda trigger for the SQS queue so the Lambda function is invoked when a new message is received.

Modify the access policy to allow the Lambda function to access the SQS queue.

The Lambda function should now have the right permissions to access the SQS queue.

Set up the environment variables so they can be accessed in the code.
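The S3-to-SQS wiring described in the steps above can also be expressed in code. The following is a minimal Python sketch, assuming boto3-style `s3` and `sqs` clients are passed in; the function names, the statement ID, and the choice to notify on all `s3:ObjectCreated:*` events are illustrative assumptions rather than the configuration used in this post.

```python
import json


def build_queue_policy(queue_arn: str, bucket_arn: str, account_id: str) -> str:
    """Return an SQS access policy that lets one S3 bucket send messages."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowS3ToSendMessages",  # hypothetical statement ID
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                # Restrict the grant to the one bucket in the one account.
                "Condition": {
                    "ArnLike": {"aws:SourceArn": bucket_arn},
                    "StringEquals": {"aws:SourceAccount": account_id},
                },
            }
        ],
    }
    return json.dumps(policy)


def build_notification_config(queue_arn: str) -> dict:
    """Notification configuration that queues an event for every new object."""
    return {
        "QueueConfigurations": [
            {"QueueArn": queue_arn, "Events": ["s3:ObjectCreated:*"]}
        ]
    }


def wire_bucket_to_queue(s3, sqs, bucket, queue_url, queue_arn, account_id):
    """Apply the queue policy first, then the bucket notification."""
    sqs.set_queue_attributes(
        QueueUrl=queue_url,
        Attributes={
            "Policy": build_queue_policy(
                queue_arn, f"arn:aws:s3:::{bucket}", account_id
            )
        },
    )
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_config(queue_arn),
    )
```

The policy is applied before the notification because Amazon S3 validates that it can deliver to the destination queue when the notification is saved.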

Lambda function code

The Lambda function performs the following tasks on receiving a notification from the SQS queue:

Make an API call to Amazon Rekognition to detect labels from the custom model that identify the endangered species:
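A minimal Python sketch of this step, assuming a boto3-style Rekognition client: it unwraps the S3 event carried in each SQS message and calls `detect_custom_labels` on the uploaded image. The `MODEL_ARN` environment variable name, the 80% confidence threshold, and the helper names are assumptions for illustration, and the client is injectable so the logic can be exercised without AWS access.

```python
import json
import os
from urllib.parse import unquote_plus


def images_from_sqs_event(event: dict):
    """Yield (bucket, key) for every S3 object referenced in an SQS event."""
    for record in event.get("Records", []):
        body = json.loads(record["body"])
        # S3 sends a test message without "Records" when the
        # notification is first configured; it is skipped here.
        for s3_record in body.get("Records", []):
            bucket = s3_record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded in S3 event notifications.
            key = unquote_plus(s3_record["s3"]["object"]["key"])
            yield bucket, key


def detect_species(rekognition, model_arn, bucket, key, min_confidence=80.0):
    """Return (label, confidence) pairs reported by the custom model."""
    response = rekognition.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    return [(l["Name"], l["Confidence"]) for l in response["CustomLabels"]]


def lambda_handler(event, context, rekognition=None):
    if rekognition is None:
        import boto3  # imported lazily so the sketch loads without boto3

        rekognition = boto3.client("rekognition")
    model_arn = os.environ["MODEL_ARN"]  # hypothetical variable name
    results = {}
    for bucket, key in images_from_sqs_event(event):
        results[key] = detect_species(rekognition, model_arn, bucket, key)
    return results
```

Note that the trained model version must be running before `detect_custom_labels` can return results.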

Fetch the EXIF tags from the image to get the date when the picture was taken and other relevant EXIF data. The function uses the Node.js packages (package – version) exif-reader – ^1.0.3 and sharp – ^0.30.7 for this.
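Whichever library reads the tags, EXIF stores capture timestamps such as `DateTimeOriginal` as `YYYY:MM:DD HH:MM:SS` strings, which are easier to sort and query once normalized. A minimal Python sketch of that normalization, assuming the raw string value is available (the function name is hypothetical, and some readers may already return parsed date objects):

```python
from datetime import datetime
from typing import Optional


def parse_exif_datetime(value: Optional[str]) -> Optional[str]:
    """Convert an EXIF timestamp string to ISO 8601, or None if unusable."""
    if not value:
        return None
    try:
        parsed = datetime.strptime(value.strip(), "%Y:%m:%d %H:%M:%S")
    except ValueError:
        # Cameras with an unset clock can write placeholder values
        # such as "0000:00:00 00:00:00".
        return None
    return parsed.isoformat()
```

EXIF timestamps carry no time zone in this field, so the result is a local camera time.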

The solution outlined here is asynchronous; the images are captured by the camera traps and then at a later time uploaded to an S3 bucket for processing. If the camera trap images are uploaded more frequently, you can extend the solution to detect humans in the monitored area and send notifications to concerned activists to indicate possible poaching in the vicinity of these endangered animals. This is implemented through the Lambda function that calls the Amazon Rekognition API to detect labels for the presence of a human. If a human is detected, an error message is logged to CloudWatch Logs. A filtered metric on the error log triggers a CloudWatch alarm that sends an email to the conservation activists, who can then take further action.
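The alerting path described above (error log, metric filter, alarm, email) could be configured along these lines. This is a sketch under stated assumptions: the Lambda function is assumed to log a marker string `HUMAN_DETECTED`, and the filter, metric, namespace, and alarm names are all hypothetical. The alarm action is assumed to be an Amazon SNS topic with an email subscription, which is how a CloudWatch alarm typically delivers email.

```python
def build_metric_filter(log_group: str, namespace: str = "WildlifeMonitoring") -> dict:
    """Keyword arguments for the CloudWatch Logs put_metric_filter call."""
    return {
        "logGroupName": log_group,
        "filterName": "HumanDetected",
        # Quoted term: matches log events containing this exact string.
        "filterPattern": '"HUMAN_DETECTED"',
        "metricTransformations": [
            {
                "metricName": "HumanDetectedCount",
                "metricNamespace": namespace,
                "metricValue": "1",  # each matching log line counts once
            }
        ],
    }


def build_alarm(topic_arn: str, namespace: str = "WildlifeMonitoring") -> dict:
    """Keyword arguments for the CloudWatch put_metric_alarm call."""
    return {
        "AlarmName": "HumanNearEndangeredSpecies",
        "Namespace": namespace,
        "MetricName": "HumanDetectedCount",
        "Statistic": "Sum",
        "Period": 300,  # seconds
        "EvaluationPeriods": 1,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [topic_arn],
    }


# With boto3 clients, the two dictionaries would be applied as:
#   logs.put_metric_filter(**build_metric_filter(log_group))
#   cloudwatch.put_metric_alarm(**build_alarm(topic_arn))
```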

Expand the solution with the following code:
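As a minimal Python sketch of the human-detection step, assuming a boto3-style Rekognition client: the function calls `detect_labels` on the image and logs an error when a person is found. The label names checked, the confidence threshold, and the `HUMAN_DETECTED` log marker are assumptions chosen for illustration.

```python
import logging

logger = logging.getLogger(__name__)

# General-purpose labels assumed to indicate a person in the frame.
HUMAN_LABELS = {"Person", "Human"}


def detect_human(rekognition, bucket: str, key: str, min_confidence: float = 80.0) -> bool:
    """Return True and log an error if a human is detected in the image."""
    response = rekognition.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=20,
        MinConfidence=min_confidence,
    )
    found = any(label["Name"] in HUMAN_LABELS for label in response["Labels"])
    if found:
        # In Lambda, error-level log lines end up in CloudWatch Logs,
        # where a metric filter can match the marker string.
        logger.error("HUMAN_DETECTED bucket=%s key=%s", bucket, key)
    return found
```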

If any endangered species is detected, the Lambda function updates DynamoDB with the count, date and other optional metadata that is obtained from the image EXIF tags:
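A minimal Python sketch of this write, assuming a boto3 DynamoDB `Table` resource is passed in and that one item is stored per image. The attribute names (`image_key`, `species`, `animal_count`, `captured_at`) are hypothetical, since the table schema is not specified here.

```python
from typing import Optional


def record_sighting(
    table,
    image_key: str,
    species: str,
    count: int,
    captured_at: Optional[str] = None,
    metadata: Optional[dict] = None,
) -> dict:
    """Store one sighting and return the item that was written."""
    item = {
        "image_key": image_key,  # assumed partition key
        "species": species,
        "animal_count": int(count),
    }
    if captured_at:
        item["captured_at"] = captured_at
    # Optional EXIF metadata: skip missing values and never overwrite
    # the core attributes above.
    for name, value in (metadata or {}).items():
        if value is not None and name not in item:
            item[name] = value
    table.put_item(Item=item)
    return item
```

Numeric metadata should be passed as `int` or `Decimal`, because the boto3 resource interface rejects Python `float` values.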

Query and visualize the data

You can now use Athena and QuickSight to visualize the data.

Set the DynamoDB table as the data source for Athena.

Add the data source details.

The next important step is to define a Lambda function that connects to the data source.

Choose Create Lambda function.

Enter names for AthenaCatalogName and SpillBucket; the rest can be default settings.

Deploy the connector function.
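Once the connector is deployed, the DynamoDB table can be queried with standard SQL from Athena. The following Python sketch builds a daily count query and submits it with a boto3-style Athena client. The column names (`captured_at`, `species`, `animal_count`), the `default` database name, and the function names are assumptions; adjust them to the actual table and connector configuration.

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z0-9_\-]+$")


def daily_count_query(table: str, database: str = "default") -> str:
    """Build a SQL query that totals sightings per day and species."""
    for identifier in (table, database):
        # Reject anything that is not a plain identifier before
        # interpolating it into the SQL text.
        if not _IDENTIFIER.match(identifier):
            raise ValueError(f"unexpected identifier: {identifier!r}")
    return (
        "SELECT substr(captured_at, 1, 10) AS capture_date, "
        "species, SUM(animal_count) AS animals "
        f'FROM "{database}"."{table}" '
        "GROUP BY 1, 2 ORDER BY 1"
    )


def run_daily_count(athena, catalog: str, table: str, output_location: str,
                    database: str = "default") -> str:
    """Start the query and return its execution ID."""
    response = athena.start_query_execution(
        QueryString=daily_count_query(table, database),
        QueryExecutionContext={"Catalog": catalog, "Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]
```

The `substr` call assumes capture dates are stored as ISO 8601 strings, so the first ten characters are the calendar date.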

After all the images are processed, you can use QuickSight to visualize the data for the population variance over time from Athena.

On the Athena console, choose a data source and enter the details.

Choose Create Lambda function to provide a connector to DynamoDB.

On the QuickSight dashboard, choose New Analysis and New Dataset.

Choose Athena as the data source.

Enter the catalog, database, and table to connect to and choose Select.

Complete dataset creation.

The following chart shows the number of endangered species captured on a given day.

GPS data is stored in the EXIF tags of a captured image. Due to the sensitivity of the location of these endangered animals, our dataset didn’t have the GPS location. However, we created a geospatial chart using simulated data to show how you can visualize locations when GPS data is available.
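When GPS tags are available, EXIF records latitude and longitude as degrees, minutes, and seconds plus a hemisphere reference (N, S, E, or W), whereas mapping tools generally expect signed decimal degrees. A minimal Python sketch of the conversion, with a hypothetical function name:

```python
def dms_to_decimal(degrees: float, minutes: float, seconds: float, ref: str) -> float:
    """Convert EXIF-style degrees/minutes/seconds to signed decimal degrees."""
    value = degrees + minutes / 60 + seconds / 3600
    # Southern latitudes and western longitudes are negative.
    return -value if ref.upper() in ("S", "W") else value
```

For example, 1° 30′ 0″ S converts to -1.5, and 36° 15′ 0″ E converts to 36.25.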

Clean up

To avoid incurring unexpected costs, be sure to turn off the AWS services you used as part of this demonstration—the S3 buckets, DynamoDB table, QuickSight, Athena, and the trained Rekognition Custom Labels model. You should delete these resources directly via their respective service consoles if you no longer need them. Refer to Deleting an Amazon Rekognition Custom Labels model for more information about deleting the model.
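For the trained model specifically, the cleanup can be scripted. This is a minimal Python sketch, assuming a boto3-style Rekognition client and hypothetical function names; it stops the running model version, which ends inference charges, and deletes it in a separate step.

```python
def stop_model(rekognition, model_arn: str) -> str:
    """Stop a running Rekognition Custom Labels model version."""
    response = rekognition.stop_project_version(ProjectVersionArn=model_arn)
    return response.get("Status", "")


def delete_model(rekognition, model_arn: str) -> str:
    """Delete a model version; call only after it has finished stopping."""
    response = rekognition.delete_project_version(ProjectVersionArn=model_arn)
    return response.get("Status", "")
```

Stopping is asynchronous, so check that the model version has reached the stopped state before calling `delete_model`.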

Conclusion

In this post, we presented an automated system that identifies endangered species, records their population count, and provides insights about variance in population over time. You can also extend the solution to alert the authorities when humans (possible poachers) are in the vicinity of these endangered species. With the AI/ML capabilities of Amazon Rekognition, we can support the efforts of conservation groups to protect endangered species and their ecosystems.

For more information about Rekognition Custom Labels, refer to Getting started with Amazon Rekognition Custom Labels and Moderating content. If you’re new to Rekognition Custom Labels, you can use our Free Tier, which lasts 3 months and includes 10 free training hours per month and 4 free inference hours per month. The Amazon Rekognition Free Tier includes processing 5,000 images per month for 12 months.

About the Authors

Jyothi Goudar is a Partner Solutions Architect Manager at AWS. She works closely with global system integrator partners to enable and support customers moving their workloads to AWS.

Jay Rao is a Principal Solutions Architect at AWS. He enjoys providing technical and strategic guidance to customers and helping them design and implement solutions on AWS.

Read more: AWS Machine Learning Blog