If you’re an architect tasked with designing a solution with complex data requirements, you may decide to adopt a multimodel approach, combining multiple data models, possibly spanning multiple database technologies. For each model, you choose the best technology for the purpose at hand. AWS offers 15+ purpose-built engines to support diverse data models, including relational, key-value, document, in-memory, graph, time series, wide column, and ledger databases. Using purpose-built data models can deliver the best results for your application, but it requires you to understand how to combine models.

In this post, we discuss what multimodel is, its pros and cons, and why it can be the best choice. We discuss the challenges of building a multimodel solution. We demonstrate, using a movie example, how to tackle one of the main challenges: coming up with an overall data model that brings together multiple smaller models with different representations but overlapping data. We show how to use a knowledge graph built on Amazon Neptune to guide the effort.

We use “multimodel” interchangeably with polyglot persistence, a pattern in which a solution uses different data services for different kinds of data. Even so, many organizations prefer to use a single technology or model, such as relational. Multimodel comes into play for solutions in which one model does not suffice. If you’re building a customer relationship solution, you might build the customer record on a relational database but use a graph database to navigate customer relationships. Graph databases are purpose-built for querying relationships, which makes them a better tool than relational databases for that part of the problem, and the result is likely to be cleaner and faster to implement. You could push forward with relational alone, but you might end up with an awkward schema and slow queries that later need to be refactored. Multimodel in this case means adopting relational plus graph.

The trade-off is complexity. You have to maintain two different sorts of databases. You need two skillsets. Your solution needs to tie the two models together. Weighing these trade-offs, choose multimodel only if the benefits outweigh the added complexity.

There are vendors that bundle multiple models—graph and document-based, for example—in a single database instance, a one-stop shop that reduces multimodel complexity. But the number of database services available is large and growing. It’s becoming less feasible for an old-school multimodel database to offer the models you need. More likely, your architecture chooses the services it needs from the database market at large and builds integrations to make them work together.

Bringing them together raises two challenges.

How to make the models run together. For example, how can I write to several models together? How can I query across multiple models?

How to design the overall model, or model of models.

In this post we focus on the second challenge. For examples of how to approach the first challenge, refer to Build a knowledge graph on Amazon Neptune with AI-powered video analysis using Media2Cloud and AWS Bookstore Demo App on GitHub.

Use case overview

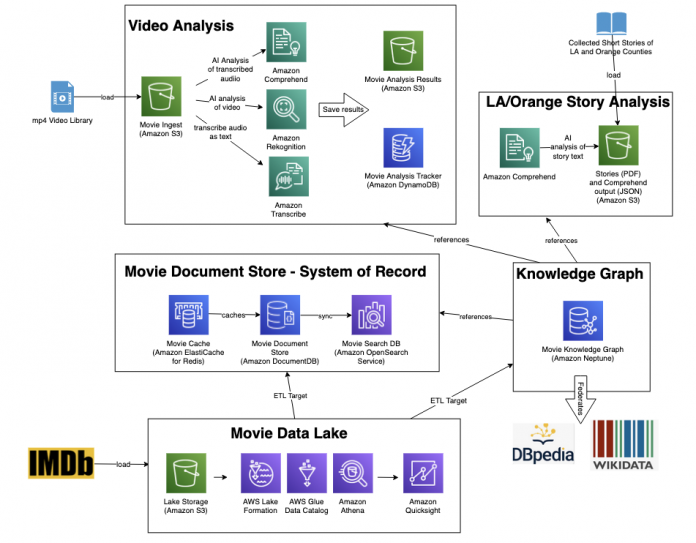

The following diagram depicts the movie solution that we want to build.

We break it down as follows:

Movie Document Store – System of Record: Our primary repository of movies and their contributors. We maintain them as JSON documents in an Amazon DocumentDB (with MongoDB compatibility) cluster called Movie Document Store. For fast lookups, we front-end the document store with a cache, Movie Cache, built using Amazon ElastiCache for Redis. Additionally, we sync the document store’s data to a search index on Amazon OpenSearch Service called Movie Search DB, enabling elaborate fuzzy and neural search of movie data.

Movie Data Lake: We store movie data in Amazon Simple Storage Service (Amazon S3) buckets. Using the AWS Glue Data Catalog, we define a table structure of this data, and we use Amazon Athena to query that data using SQL. We use AWS Lake Formation to enforce permissions on the tables and columns. Amazon QuickSight is a business intelligence (BI) service that can visualize and report on that data. The main source of the lake data is the Internet Movie Database (IMDB). We load it into the lake and use an extract, transform, and load (ETL) job built on AWS Glue to move that data into Movie Document Store.

Knowledge Graph: In a Neptune cluster, we build a knowledge graph of movies that draws connections between the different data sources in order to understand the domain as a whole. We load IMDB data from the lake via ETL as graph resources. We tie these resources to documents in the document store. We also maintain Uniform Resource Identifiers (URIs) of movies and contributors from Wikidata and DBPedia, enabling us to run federated movie queries between Neptune and those public graphs. Additionally, we link Neptune graph resources to video analysis and story analysis results.

Video Analysis: We have the MP4 media files for some of the movies. In the video analysis component, we run AI services such as Amazon Comprehend, Amazon Rekognition, and Amazon Transcribe to detect the people, things, emotions, sentiments, words, and key phrases that occur in the video. We maintain a summary of the results in Amazon DynamoDB tables. We store detailed results in an S3 bucket. The knowledge graph links movies to the analysis results for that movie. It links contributors to people observed in the video.

LA/Orange Story Analysis: We have PDF files of stories written by authors in LA and Orange counties. Because authors from that area often mention movies in their stories, we use Amazon Comprehend to analyze the stories, checking for mentions of specific movies or contributors. We store the results in an S3 bucket. In the knowledge graph, we link stories to the movies and contributors mentioned in them.

This is not an accidental architecture, but rather a sensible design to meet requirements. The architect might start with the document-oriented model – a good choice given that movie entities have a complex structure of nested attributes. But the document model isn’t ideal for navigating movie relationships. Hence the addition of a graph model. Adding a data lake is necessary given the requirement for BI, plus the need to centrally stage IMDB data and move it via ETL to the document store and the graph.

The preceding figure isn’t a model. It’s a high-level depiction of a solution that calls out the need for several types of databases, but it doesn’t indicate what the data looks like or how it will be spread across the databases. In the discussion that follows, we build and explore that model.

Solution overview

Our solution uses the ideas of data mesh and knowledge graph. A data mesh is a self-serve marketplace of data products. Traditionally, much of a company’s data is held in silos; in a data mesh, it is offered as well-understood products to interested consumers. A knowledge graph is a database that brings together business objects from multiple sources and draws relationships among them so that we can better understand them.

To design the multimodel, we recommend dividing the solution into a set of data products. Document each product and describe how it relates to other products. Imagine that you are publishing these products to a data mesh marketplace. In fact, imagine that the movie applications you intend to build as part of your movie solution are data mesh consumers that find the products in the marketplace. Even if you don’t actually adopt a data mesh, the mental model of documenting data products helps break down the multimodeling effort. Design as if you had a mesh.

The knowledge graph plays two roles in our design. First, it’s one of the models—with its own data products—as we discussed in the previous section. Second, it helps the mesh by maintaining in graph form all the data product definitions and their relationships. We can then use graph queries to ask the knowledge graph questions about how data products are related. A mesh marketplace could use this capability to recommend related data products to the consumer browsing a specific product.

The following figure shows how we bring these ideas together to model the movie solution.

The solution contains the following components:

As the data architect, we use a data modeling tool to design our data products. We use the Unified Modeling Language (UML), a visual modeling language offering a standard way to draw a data model using a class diagram. There are many UML visual editors available. We use MagicDraw, a third-party commercial modeling tool.

UML gives us not only a helpful diagram but also a model in machine-readable form, based on XML, from which we can generate code. We pass the UML models through a transformer to convert the UML to formats that the mesh and our knowledge graph understand.

We don’t use an actual data mesh in this post, so the path from the transformer to the mesh is drawn as a dashed line. With a mesh in place, the transformer would use the mesh’s producer interface to offer our data products.

We load the data products into the knowledge graph in a Neptune database. Neptune supports two graph representations: property graph and Resource Description Framework (RDF). We use RDF. The transformer converts the data products to that form. We load that RDF data into the Neptune database.

We can then ask the knowledge graph product relationship questions from the mesh itself, from our applications, or from a power tool such as a Neptune Workbench notebook. We use a notebook to query and to drive the overall workflow.

Let’s now step through the solution.

Provision resources

To run the steps of this post in your AWS account, you need a Neptune database and a Neptune notebook. Follow the setup instructions. As discussed in the setup, you will incur usage cost for the services deployed. When you complete the setup, open the Neptune_Multimodel.ipynb notebook in your browser to follow along with the discussion.

If you prefer to read along to learn the concept, you may skip the setup.

Design UML models

We use UML class diagrams to model data products and their implementation. We draw each product and implementation as a box, which designates a class. We draw relationships between them using dashed arrows, known as usage relationships. We add further detail to the diagram using text within angle brackets. These annotations, called stereotypes, allow us to express detail specific to multimodeling, which helps us map the UML model to the mesh and the knowledge graph.

There are many UML editors on the market. We use MagicDraw 2021x, which supports profiles as well as the ability to export models in XML Metadata Interchange (XMI) form. If you have MagicDraw, you can open the models and inspect them. If not, we have prebuilt the models for you.

MMProfile

MMProfile defines stereotypes used in the other models. Each box in the next diagram is a stereotype. In UML, a collection of stereotypes is called a profile. We are not defining data products here, but rather creating a set of reusable stereotypes to use in the models that follow. The DataProduct stereotype can be applied to a class to indicate that the class represents a data product. The joins stereotype can be applied to a usage relationship drawn between two classes to indicate that one class joins to the other.
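Because the stereotypes travel with the exported XMI, a transformer can recover them by parsing the XML. The following minimal sketch uses Python’s standard `xml.etree.ElementTree` on a hypothetical, heavily simplified XMI fragment. The namespace URIs and layout are assumptions modeled on the common way UML tools serialize a stereotype application (a profile-namespaced element whose `base_Class` attribute points at the class’s `xmi:id`), not a copy of MagicDraw’s actual output.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified XMI. Real MagicDraw exports are far larger, and
# the MMProfile namespace URI below is a placeholder.
XMI_SAMPLE = """<xmi:XMI xmlns:xmi="http://www.omg.org/spec/XMI/20131001"
    xmlns:uml="http://www.omg.org/spec/UML/20131001"
    xmlns:MMProfile="http://example.com/MMProfile">
  <uml:Model xmi:id="m1" name="StoryAnalysis">
    <packagedElement xmi:type="uml:Class" xmi:id="c1" name="StoryAnalysis"/>
    <packagedElement xmi:type="uml:Class" xmi:id="c2" name="LAOrangeStoryBucket"/>
  </uml:Model>
  <MMProfile:DataProduct xmi:id="s1" base_Class="c1"/>
  <MMProfile:DataProductImpl xmi:id="s2" base_Class="c2"/>
</xmi:XMI>"""

XMI_NS = "http://www.omg.org/spec/XMI/20131001"
PROFILE_NS = "http://example.com/MMProfile"


def stereotypes_by_class(xmi_text):
    """Map each class name to the MMProfile stereotypes applied to it."""
    root = ET.fromstring(xmi_text)
    # Index classes by xmi:id so stereotype applications can point back to them.
    names = {}
    for el in root.iter("packagedElement"):
        if el.get(f"{{{XMI_NS}}}type") == "uml:Class":
            names[el.get(f"{{{XMI_NS}}}id")] = el.get("name")
    result = {}
    for el in root:
        # Stereotype applications are top-level elements in the profile namespace.
        if el.tag.startswith(f"{{{PROFILE_NS}}}"):
            stereotype = el.tag.split("}", 1)[1]
            cls = names.get(el.get("base_Class"))
            if cls is not None:
                result.setdefault(cls, []).append(stereotype)
    return result


print(stereotypes_by_class(XMI_SAMPLE))
```

Stereotype tags (such as `sourceDataSet` on `<<hasSource>>`) would typically appear as extra attributes on the same stereotype element, so the same loop can be extended to collect them.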

StoryAnalysis

The following diagram shows the story analysis model.

Story analysis is the simplest of the models, defining two classes:

StoryAnalysis is a data product, indicated by the <<DataProduct>> stereotype. It also has a <<hasSource>> stereotype with tags sourceDataSet and integrationType, indicating its source is an on-premises PDF library that is uploaded to an Amazon S3 bucket. It has two attributes defined: StoryTitle (whose stereotype indicates that it is the product key) and AnalysisStuff. That might seem brief and informal, but the goal of this model is not to define the full schema but rather to highlight the main details of the product so that we can relate it to other products.

LAOrangeStoryBucket is a data product implementation, indicated by the <<DataProductImpl>> stereotype. It is the implementation of StoryAnalysis, as the usage arrow stereotyped <<hasImpl>> indicates. The <<awsService>> stereotype has the tag service with the value S3. Its attributes show that the implementation holds the Amazon Comprehend results for the story PDFs in an S3 bucket.

VideoAnalysis

The video analysis model, shown in the next figure, has one data product (VideoAnalysis) and four implementation classes, two of which are implemented using DynamoDB tables (IngestItem and AnalysisItem) and two using Amazon S3 buckets (VideoIngestBucket and VideoAnalysisBucket). The model shows several <<joins>> relationships. For example, VideoAnalysisBucket joins to AnalysisItem on the S3AnalysisLocation attribute. Additionally, VideoAnalysis has the complex attribute celebs, whose definition is broken into its own class CelebAppearance.
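A <<joins>> relationship says that records in two implementations can be matched on a shared attribute. As a rough illustration of what the VideoAnalysisBucket-to-AnalysisItem join means, the following sketch performs an in-memory hash join on S3AnalysisLocation. Apart from that attribute name, every field and value is hypothetical, and real data would come from DynamoDB and Amazon S3 rather than Python lists.

```python
# Hypothetical records: only the join attribute S3AnalysisLocation comes from
# the model; all other field names and values are made up for illustration.
analysis_items = [  # stands in for the AnalysisItem DynamoDB table
    {"AnalysisId": "a-1", "S3AnalysisLocation": "s3://video-analysis/movie-1/"},
    {"AnalysisId": "a-2", "S3AnalysisLocation": "s3://video-analysis/movie-2/"},
]
bucket_objects = [  # stands in for objects in VideoAnalysisBucket
    {"Key": "celebs.json", "S3AnalysisLocation": "s3://video-analysis/movie-1/"},
    {"Key": "labels.json", "S3AnalysisLocation": "s3://video-analysis/movie-1/"},
    {"Key": "labels.json", "S3AnalysisLocation": "s3://video-analysis/movie-3/"},
]


def join_on(left, right, key):
    """Inner-join two lists of dicts on a shared attribute (hash join)."""
    # Build phase: index the right side by the join key.
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    # Probe phase: emit one merged row per matching pair.
    joined = []
    for row in left:
        for match in index.get(row[key], []):
            joined.append({**match, **row})
    return joined


for row in join_on(bucket_objects, analysis_items, "S3AnalysisLocation"):
    print(row)
```

The third bucket object has no matching AnalysisItem, so the inner join drops it.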

MovieDoc

The movie document store is shown in the next figure. There are three data products—MovieDocument, RoleDocument, and ContributorDocument—and there are <<joins>> relationships between them. Conceptually, a role represents a contributor appearing in a movie; therefore, a role joins a movie and a contributor. MovieDocument has attributes broken into their own classes (Ratings, TextAboutMovies), typical of a document-based model. Several attributes (such as MovieID) have data type IMDBID, indicating they bear the IMDB identifier. Some attributes have an enumerated value (for example, SeriesType draws a value from SeriesTypeEnum). There are seven implementation classes: three based on DocumentDB (MovieDocImpl, RoleDocImpl, ContribDocImpl), three based on OpenSearch Service (MovieSearchDocument, RoleSearchDocument, ContributorSearchDocument), and one based on ElastiCache (MovieElasticacheRedisCache). The <<hasSource>> usage relationship pointing to DataLakePackage indicates that this model is sourced from data from the data lake.

DataLake

The data lake model in the next figure has three data products: MovieLakeTable, RoleLakeTable, and ContributorLakeTable. No additional details are specified because they are similar to the document store products. Each has an AWS Glue Data Catalog table implementation: MovieTable, RoleTable, and ContribTable, respectively. The data that these AWS Glue tables catalog resides in an S3 bucket, whose implementation is defined by the class LakeStore. The whole model shares the same IMDB source; notice that <<hasSource>> is defined at the package level.

KnowledgeGraph

The knowledge graph model is shown in the next figure. There are five data products: MovieResource, ContributorResource, StoryResource, VideoAnalysisResource, and CelebResource. Each is implemented in Neptune as an RDF graph (NeptuneImpl). Given the highly connected nature of a graph, it’s not surprising these products have relationships to products from the other models. For example, StoryResource joins, and has as its source, the story analysis model. MovieResource and ContributorResource join the document store model and are sourced from the lake model. VideoAnalysisResource and CelebResource join, and are sourced from, the video analysis model. Additionally, we indicate that MovieResource and ContributorResource are <<similarTo>> StoryResource because stories frequently mention movies.

It’s important to note that this is a product view of the knowledge graph. It’s meant to depict the products of the knowledge graph, not how those products are modeled in the graph itself. We define the graph model later in the post.

Convert UML to RDF

Open the Neptune_Multimodel.ipynb notebook and complete the following steps:

Run the initial cell to set up a reference to the S3 bucket we’ll use to stage RDF data.

Run the cell under Obtain UML files to get a copy of the UML models in the notebook instance.

Run the cell under Extract data products/impl from UML files. This runs a block of Python code that parses each of the UML models and extracts details about data products, implementation classes, and stereotypes. It uses the etree library to parse and query UML’s XML representation, known as XMI. It saves the results in a Python dictionary.

Run the cell under Combine UML output.

This organizes the UML data we extracted as a clean set of products and implementations, making it easier to convert them to RDF. It saves the results in a Python dictionary.

Run the cell under Look at Movie DocStore products.

This shows the portion of the dictionary created in the previous cell for products in the document store model. It’s a good way to check that the data we extracted from UML matches what we see in the UML model.

Finally, let’s convert the UML model collected in this dictionary to RDF using the RDFLib Python library. Run the cells under Create RDF.

This creates a file called mmgen.ttl in the mmgen folder on the notebook instance. This file defines the data products for our movie model as RDF.

mmgen.ttl is an OWL ontology. An ontology is a graph data model that allows us to define classes of resources. It is more elaborate than UML, enabling us to define classes using logical statements and to perform automated reasoning to infer new facts. We use ontologies lightly in this example, just enough to introspect data products and their relationships using familiar OWL conventions. (To learn more about ontologies in Neptune, we recommend Model-Driven Graphs Using OWL in Amazon Neptune.)

We use three ontologies in this example. One is a base ontology, mm.ttl, that defines the following common classes and annotations, as RDF, that can be used to define any data product:

OWL class DataProduct. Each data product in our model is a subclass of this class.

OWL class DataProductImpl. Each data product implementation in our model is a subclass of this class.

Annotation properties for stereotypes of a product or implementation. For example, it has annotations for joins, hasSource, and hasImpl.

Classes that express annotations more complex than a simple relationship. For example, it defines the Source class as the range of the hasSource annotation property.

mmgen.ttl, our second ontology, is generated, and it builds on the base ontology. Let’s examine the generated MovieResource product to understand this relationship better. First, notice its definition as an OWL class that is a subclass of the base DataProduct class.

It uses simple annotation properties to express federation and its relationship to implementation.

The hasSource annotation is complex. We create an instance of the Source class to express the details of its source relationship.

joins and similarTo are also complex annotations. We define instances of Ref and Similarity classes to express those relationships.

Our third ontology, movkg.ttl, is an elaboration of the knowledge graph products. The generated ontology defines the products, but we want to define further the properties and relationships of these products. We don’t specify this in the UML model, because UML generally isn’t a suitable modeling language for ontology. It’s suitable for the limited purpose of defining products, but we prefer more control over how our knowledge graph products represent actual movie resources. In movkg.ttl, we define the OWL data type and object properties and associate them with resource products as domain and range. The following is an excerpt:

Load the RDF files to Neptune

In the same notebook, run the cell under Copy generated RDF to S3 to copy the generated RDF mmgen.ttl to the S3 staging bucket.

Run the six cells under Upload three ontologies in the order presented. Each pair of cells loads one ontology using Neptune’s bulk loader (through the %load magic) and then, when the load completes, checks its status with the %load_status magic. The load status should show no errors.
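Outside the notebook, the %load magic corresponds to Neptune’s bulk loader HTTP API. The following standard-library sketch builds the request body for loading one Turtle file and shows how a load could be started and polled. The endpoint, bucket, role ARN, and Region are placeholders, and the helper functions are hypothetical. The sketch also assumes that IAM database authentication is disabled (otherwise requests must be SigV4-signed) and that the code has network access to the cluster, for example from inside its VPC.

```python
import json
import urllib.request


def build_load_request(endpoint, s3_uri, role_arn, region):
    """Return the loader URL and JSON body for bulk-loading one Turtle file."""
    url = f"https://{endpoint}:8182/loader"
    body = {
        "source": s3_uri,        # S3 object or prefix holding the RDF
        "format": "turtle",      # mm.ttl, mmgen.ttl, and movkg.ttl are Turtle
        "iamRoleArn": role_arn,  # role Neptune assumes to read the bucket
        "region": region,
        "failOnError": "TRUE",
    }
    return url, body


def start_load(endpoint, s3_uri, role_arn, region):
    """Submit the load job and return its loadId."""
    url, body = build_load_request(endpoint, s3_uri, role_arn, region)
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["payload"]["loadId"]


def load_status(endpoint, load_id):
    """Return the overall status string, such as LOAD_COMPLETED or LOAD_FAILED."""
    with urllib.request.urlopen(f"https://{endpoint}:8182/loader/{load_id}") as resp:
        return json.load(resp)["payload"]["overallStatus"]["status"]
```

A caller would invoke `start_load` once per ontology and poll `load_status` until it leaves the in-progress state, loading the base ontology before the ones that build on it.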

Ask product relationship questions

Query the knowledge graph with a SPARQL query by running the cell under Get list of data products to see products in our model. The basic element of RDF is the triple (subject-predicate-object). In the following query, we check for a product (an unknown to be assigned to the variable ?product) that is a subclass of the base DataProduct class.

The result shows a list of matching products.

Run the cell under Describe a product to view details of the movie resource. SPARQL has a special DESCRIBE query that brings back all facts about a given resource. Here we describe the MovieResource data product.

The Graph tab visualizes the result. In the following figure, the movie resource is highlighted. Try following the edges and viewing the neighboring nodes.

Run the cell under the heading Which products use OpenSearch and ElastiCache? We check for a product that is a subclass of DataProduct. We then follow the hasImpl relationship to find an implementation, and for that implementation we check if its awsService property is OpenSearch or Elasticache. It needs to have at least one implementation of each. The results show the three document store products.

Run the cell under Story and IMDB? to ask whether the story product is related to the IMDB dataset. The query follows a dynamic path through the relationships of our ontology, starting with StoryAnalysis and ending with IMDBID. It may seem surprising that it finds a path, but recall that the stories we consider often mention movies, which have IMDB identifiers.

Finally, try running cells in the section Movie Example. Here we demonstrate the movkg ontology in action, populating movie instances that align with the ontology. We observe the relationships of movies with stories and videos. We also see federation in action. In the query under Pull in DBPedia, observe that the result has DBPedia facts about an instance of the MovieResource data product having MovieID of tt0081505 (an IMDB identifier). Recall that the MovieResource product has a federation relationship to DBPedia. The query is run on the Neptune database; Neptune uses SPARQL query federation to access DBPedia.
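A federated query of this kind uses the SPARQL 1.1 SERVICE clause: Neptune evaluates the local pattern, then sends the inner pattern to the remote endpoint. The following standard-library sketch builds such a query and posts it to a Neptune SPARQL endpoint. The property names `movkg:movieID` and `movkg:dbpediaURI`, the namespace, and the endpoint are hypothetical; the sketch also assumes IAM authentication is disabled and that the cluster has outbound network access to DBpedia’s public endpoint.

```python
import re
import urllib.parse
import urllib.request


def federated_movie_query(movie_id):
    """Build a query joining local movie data with DBpedia facts via SERVICE."""
    # Only accept IMDB title IDs, so the value is safe to splice into SPARQL.
    if not re.fullmatch(r"tt\d+", movie_id):
        raise ValueError(f"not an IMDB title identifier: {movie_id}")
    return f"""PREFIX movkg: <http://example.com/movkg#>
SELECT ?dbp ?p ?o WHERE {{
  ?movie movkg:movieID "{movie_id}" ;
         movkg:dbpediaURI ?dbp .
  SERVICE <https://dbpedia.org/sparql> {{ ?dbp ?p ?o . }}
}}
LIMIT 25"""


def run_on_neptune(endpoint, query):
    """POST the query to Neptune's SPARQL endpoint and return the raw JSON text."""
    data = urllib.parse.urlencode({"query": query}).encode("utf-8")
    req = urllib.request.Request(
        f"https://{endpoint}:8182/sparql",
        data=data,
        headers={"Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode("utf-8")


print(federated_movie_query("tt0081505"))
```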

There are several other queries in the notebook. Give them a try!

Clean up

If you’re done with the solution and wish to avoid future charges, delete the CloudFormation stack.

Conclusion

In this post, we designed the overall model for a multimodel solution that encompasses several database services. Multimodeling can be complicated, depending on the number of models included. We used an approach combining a data mesh and knowledge graph to simplify the task by dividing the solution into a set of data products. We used UML to draw each model and its relationships to other models. We then converted the UML diagrams to formats suitable for publication to a data mesh marketplace or ingestion into an RDF knowledge graph. Adopting a data mesh is optional in this approach; it’s the mental exercise of documenting data products that we emphasize. The knowledge graph plays two roles: as a repository of data product relationships that we can query for product recommendations, and as a set of data products in its own right.

If you are interested in exploring this topic further, check out Automate discovery of data relationships using ML and Amazon Neptune graph technology.

About the Author

Mike Havey is a Senior Solutions Architect for AWS with over 25 years of experience building enterprise applications. Mike is the author of two books and numerous articles. His Amazon author page is https://www.amazon.com/Michael-Havey/e/B001IO9JBI.
