In this post, we deep dive into AWS Database Migration Service (AWS DMS) Time Travel, a feature that was introduced with DMS version 3.4.6 to make troubleshooting simple and secure. With Time Travel, you can now troubleshoot data discrepancies between source and target by retrieving and logging the SQL statements. We’ll discuss the steps to enable the Time Travel feature and how it can help you identify issues through an example.

Before Time Travel, debugging AWS DMS tasks and reproducing issues such as data discrepancies between the source and target required you to enable detailed debugging and rerun the task. This may have led you to spend additional time debugging the verbose logs. You may have also risked filling up your replication instance storage.

Time Travel logs the SQL statements in an encrypted Amazon Simple Storage Service (Amazon S3) bucket. It records the activity of the tables in scope, which is defined in the AWS DMS task table mapping. Time Travel is available in all Regions where AWS DMS supports migrations from PostgreSQL to either PostgreSQL or MySQL.

Solution overview

We walk you through setting up Time Travel through the AWS Command Line Interface (AWS CLI) as well as AWS CloudFormation. Use the AWS CLI if you already have a source and target database set up, have your own tools to run queries, and are looking for a hands-on experience setting up Time Travel task settings. Use the AWS CloudFormation instructions if you’re looking for an end-to-end automated setup of all the resources. You still get hands-on experience running queries and observing the Time Travel logs. Choose the best option that suits your interest.

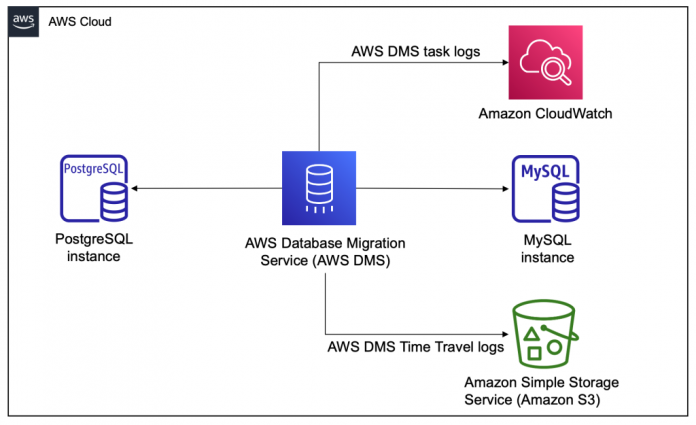

The following diagram illustrates the architecture.

The AWS DMS task migrates data from the PostgreSQL instance to MySQL instance. AWS DMS stores the task logs in the replication instance, which you can view using Amazon CloudWatch. Additionally, Time Travel offloads the SQL statements and relevant logs to Amazon S3 to avoid filling up your replication instance storage.

Prerequisites

To deploy this solution, the following is required:

An AWS account with sufficient privileges to launch the resources necessary for this solution

The AWS CLI set up and ready to run commands to interact with your AWS resources

Time Travel settings explained

Your AWS DMS replication task has a TTSettings section that provides information required to enable Time Travel:

Let’s look closer at the properties:

EnableTT – If this option is set to true, Time Travel logging is turned on for the task. The default value is false.

EncryptionMode – This is the type of server-side encryption being used on your S3 bucket to store your data and logs. You can specify either SSE_S3 (the default) or SSE_KMS. For this post, we use an AWS Key Management Service (AWS KMS) key, so we choose SSE_KMS.

ServerSideEncryptionKmsKeyId – If you specify SSE_KMS for EncryptionMode, provide the ID for your customer managed KMS key. Make sure that the key that you use has an attached policy that turns on AWS Identity and Access Management (IAM) user permissions and allows use of the key. Only your own customer managed symmetric KMS key is supported with the SSE_KMS option. For this post, we use the KMS key we created earlier.

ServiceAccessRoleArn – This is the Amazon Resource Name (ARN) used by the service to access the IAM role. Set the role name to dms-tt-s3-access-role. This is a required setting that allows AWS DMS to write and read objects from an S3 bucket. You will create this role in Step 4 of “Set up Time Travel using the AWS CLI”, or it will be automatically created if you choose to use CloudFormation to set up Time Travel.

BucketName – This is the name of the S3 bucket to store Time Travel logs. Make sure to create this S3 bucket before turning on Time Travel logs. You will create this role in Step 1 of “Set up Time Travel using the AWS CLI”, or it will be automatically created if you choose to use CloudFormation to set up Time Travel.

BucketFolder – This optional parameter sets a folder name in the S3 bucket. If you specify this parameter, AWS DMS creates the Time Travel logs in the path /BucketName/<BucketFolder>/task resourcename/YYYY/MM/DD/hh. If you don’t specify this parameter, AWS DMS creates the default path as /BucketName/dms-time-travel-logs/taskARN/YYYY/MM/DD/hh. For this post, we create a folder called myttfolder.

EnableDeletingFromS3OnTaskDelete – When this option is set to true, AWS DMS deletes the Time Travel logs from Amazon S3 when you delete the task. The default value is false.

EnableRawData – When this option is set to true, the data manipulation language (DML) raw data for Time Travel logs appears under the raw_data column of the Time Travel logs. For more details, see Using the Time Travel logs. The default value is false. When you set this to false, only the type of DML is captured.

OperationsToLog – This specifies the type of DML operations to log in Time Travel logs. You can specify one of the following: INSERT, UPDATE, DELETE, COMMIT, ROLLBACK, or ALL. The default is ALL. For this post, we set it to INSERT to investigate new records that are missing on the target.

MaxRecordSize – This specifies the maximum size of Time Travel log records that are logged for each row. Use this parameter to control the growth of Time Travel logs for particularly busy tables. The default is 64 KB.

To minimize the storage usage of your replication instance, AWS DMS offloads Time Travel logs to Amazon S3. Logs get pushed to Amazon S3 when any of the following conditions are met:

If the current size of logs on the DMS Replication instance for the task exceeds 1 GB, AWS DMS uploads the logs to Amazon S3 within 5 minutes. Therefore, AWS DMS can make up to 12 calls an hour to Amazon S3 and AWS KMS for each running task.

AWS DMS uploads the logs to Amazon S3 every hour, regardless of the size of the logs.

When a task is stopped, AWS DMS immediately uploads the Time Travel logs to Amazon S3.

The following screenshot shows a sample Time Travel log created in the S3 bucket.

Time Travel log describes events that occurred in the source. An event is a database operation that is captured by DMS. This includes INSERT, UPDATE or DELETE operations and metadata associated with the event such as raw SQL statements.

The Time Travel log file contains the following fields.

log_timestamp – The timestamp when this record was added to time travel log.

component – The DMS component from which this DMS Time Travel record originated. For example, SOURCE_CAPTURE/TARGET_APPLY.

dms_source_code_location – The DMS source code file which logged this record. This may be needed by the AWS DMS team for troubleshooting.

transaction_id – The transaction id captured from the WAL logs on the source PostgreSQL engine.

event_id – The internal DMS id associated with the event as captured from the source.

event_timestamp – This is the timestamp of the event as captured from the source.

lsn/scn – The Log Sequence Number as captured from the source. Please refer to pg_lsn Type for more information.

record_type – Describes the type of action captured from the source. For example, BEGIN, COMMIT, or DML. If record_type = DML then the event_type field is populated.

event_type – The type of event captured from the source. For example, INSERT/UPDATE/DELETE/COMMIT.

schema_name – The name of the schema defined in the DMS table mapping rule.

table_name – The name of the table as defined in the DMS table mapping rule.

statement – The SQL statement executed on the target as part of TARGET_APPLY component.

action – The DMS activity being logged. For example, migrate/apply.

raw_data – The data as captured from source or applied to target.

Set up Time Travel using the AWS CLI

To set up Time Travel using the AWS CLI, complete the following steps (make sure you replace the relevant parameters with your own values):

Create an S3 bucket where the Time Travel logs are stored. The following command creates the S3 bucket bucket-<<Your-Account-ID>>-us-east-1-dms-time-travel in the us-east-1 Region:

Create a KMS key to encrypt the logs:

Create an IAM policy. Make note of the policy ARN to use in a later step.

Use the following code in your policy.json file. In the Resource section, use the S3 bucket and KMS key you created earlier.

Create an IAM role dms-tt-s3-access-role with the appropriate trust policy to allow AWS DMS to assume the role:

Use the following in your trustpolicy.json file:

Attach the policy to the IAM role:

Create an AWS DMS source endpoint:

Create an AWS DMS target endpoint:

Create an AWS DMS replication instance:

Create an AWS DMS task with the source endpoint and target endpoint you created:

Modify the AWS DMS task to enable Time Travel. Save the Time Travel setting JSON from earlier in this post as task-settings-tt-enabled.json and run the following command. Note that the task should be in the stopped state prior to modifying the AWS DMS task.

Set up Time Travel using AWS CloudFormation

To set up Time Travel using AWS CloudFormation, complete the following steps:

Use the following command to clone the GitHub repository that contains the CloudFormation template.

Note that this template creates dms-vpc-role. If you have used AWS DMS in your account before, this would have already been created for you. If this is the case, choose –parameters ParameterKey=DoesDMSVPCRoleExist,ParameterValue=Y as an additional argument to the following command. The template also creates the role dms-cloudwatch-logs-role. If this role already exists, choose –parameters ParameterKey=DoesDMSCloudWatchLogRoleExist,ParameterValue=Y as an argument.

It may take up to 15 minutes for CloudFormation to deploy the resources.

Open AWS Cloud9 to run your tests. Note that AWS Cloud9 inherits the credentials that you used to deploy the CloudFormation template.

On the AWS Cloud9 console, choose Your environments.

Choose the environment DMSTimeTravelCloud9, then choose Open IDE.

Clone the repo into your Cloud9 environment, then navigate to the directory where the scripts are located and run the initial setup:

Verify connectivity to the source and target databases using the following commands:

Investigate duplicate or missing records due to case mismatch

Let’s troubleshoot a scenario where you encounter a row count mismatch between the source and target databases. PostgreSQL handles the VARCHAR data type differently than MySQL, which can result in duplicate key error in the target database and missing records.

Use the following DDL to create the product table in the source database. The combination of product_id and product_code identifies each record uniquely through the primary key constraint.

Navigate to the AWS DMS console, select the replicate-products task, and on the Actions menu, choose Restart/Resume.

Table preparation mode DROP_AND_CREATE lets AWS DMS create the table in the target. The following table is created on the target (MySQL):

In your source database, insert two records using the following DML. They’re identical except for the product_code column, which has the first character in uppercase and lowercase between the two records.

Under Overview details of the DMS task, choose View CloudWatch logs. Observe that the logs show errors and warnings similar to the following:

Time Travel captures the DML statements applied to the source, which provides you more information regarding this error. Navigate to the S3 bucket bucket-<<Your-Account-ID>>-us-east-1-dms-time-travel. Choose folder path myttfolder/<task ResourceName>/<YYYY>/<MM>/<DD>/<hh>. Choose the reptimetravel… log file. Observe the queries that ran on the source similar to the following.

Both records were inserted successfully on the source because the data type VARCHAR in PostgreSQL is case-sensitive, whereas in target (MySQL) it failed with duplicate error as the data type is case-insensitive when you use the default collation.

In this scenario, the product code has been entered twice in the source database, and the resolution to this issue is to remove the duplicate record, identifying and rectifying similar issues in the product table.

Clean up

To avoid incurring recurring charges, clean up your resources.

If you set up Time Travel using AWS CloudFormation, complete the following steps:

On the Amazon S3 console, empty the bucket you created (bucket-<<Your-Account-ID>>-<<AWS-Region>>-dms-time-travel).

Navigate to the DMS task, choose “replicate-products”, and under Actions, choose Stop.

Navigate to the Amazon RDS console, choose aws-dms-time-travel-stack-sourcerdsdbinstance, then choose Modify. Ensure that Enable deletion protection is unchecked, then choose Continue. In the next screen, choose to Apply immediately, followed by Modify DB instance. Repeat the steps for aws-dms-time-travel-stack-targetrdsdbinstance.

Run the following command to delete the CloudFormation stack:

If you set up Time Travel using the AWS CLI, run the following commands:

Delete the S3 bucket:

Delete the KMS key:

Detach the IAM policy timetraveldms from IAM role dms-tt-s3-access-role:

Delete the IAM role dms-tt-s3-access-role:

Delete the IAM policy timetraveldms:

Delete the AWS DMS task:

Delete the AWS DMS source endpoint:

Delete the AWS DMS target endpoint:

Delete the AWS DMS replication instance:

Conclusion

In this post, you learned how to set up AWS DMS Time Travel and how it can help debug your tasks. Time Travel captures the transactions and DML statements and uploads them to an S3 bucket. Time Travel also reduces the storage burden on your replication instances by offloading the logs to an S3 bucket. Try this feature and let us know how it helped your use case in the comments section.

About the authors

Kishore Dhamodaran is a Senior Solutions Architect with Amazon Web Services. Kishore helps customers with their cloud enterprise strategy and migration journey, leveraging his years of industry and cloud experience.

Suchindranath Hegde is a Data Migration Specialist Solutions Architect at Amazon Web Services. He works with our customers to provide guidance and technical assistance on data migration into the AWS Cloud using Database Migration Service (DMS).

Prabodh Pawar is a Senior Database Engineer with the Database Migration Service team at AWS. He builds services and tools that help to automate database migrations. Prabodh is passionate about working with customers and helping to streamline their migration journeys.

Read MoreAWS Database Blog