Real-time stream processing can be a game-changer for organizations. Customers across industries have incredible stories of how it transformed patient monitoring in healthcare, fraud detection in financial services, and personalized marketing in retail, and how it improved network reliability in telecommunications, among others.

However, stream processing can be quite complex. People who build stream processing pipelines need to consider multiple factors and the delicate balance between completeness, latency, and cost. Further, managing distributed infrastructure adds to the complexity of operating critical streaming pipelines at scale. Ensuring fault tolerance, guaranteeing processing accuracy, and finding the right balance between cost and latency when sizing and scaling the cluster can be challenging.

Dataflow Streaming Engine reduces this complexity and helps you operate streaming pipelines at scale by taking on some of the harder infrastructure problems. Responsive and efficient autoscaling is a critical part of Dataflow Streaming Engine and a big reason why customers choose Dataflow. We’re always working to improve autoscaling and have recently implemented a set of new capabilities for more intelligent autotuning of autoscaler behavior. Let’s take a closer look at some of them.

Asymmetric autoscaling

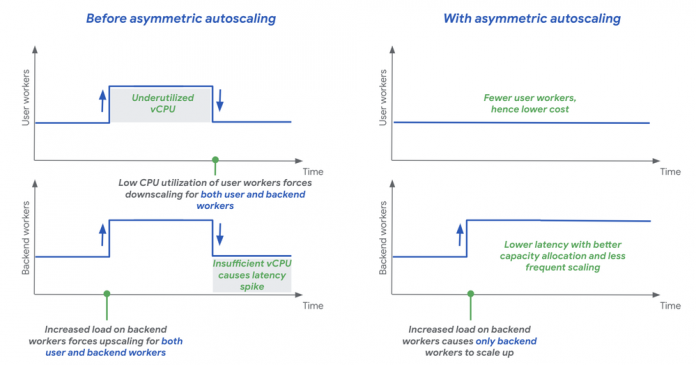

Dataflow has two pools of workers: user and backend. User workers execute user code, while backend workers handle data shuffling, deduplication, and checkpointing.

Previously, Dataflow scaled both pools proportionately to keep the ratio of user workers to backend workers at a level that is optimal for an average job. However, no streaming job is average. For example, a shuffle-heavy job requires the backend to upscale, but not necessarily the user workers.

Asymmetric autoscaling allows scaling the two worker pools independently to meet the requirements of a given streaming job without wasting resources. The following graph illustrates how a shuffle-heavy job benefits from asymmetric autoscaling:

- Fewer user workers are needed, saving costs
- Less frequent latency spikes with more stable autoscaling decisions

Asymmetric autoscaling is GA and automatically enabled for Dataflow Streaming Engine jobs.
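
To make the difference concrete, here is a minimal sketch that compares proportional scaling with independent scaling for a shuffle-heavy job. It is a toy model, not Dataflow's actual autoscaler: the `Demand` type, both functions, the fixed `ratio`, and the worker counts are all hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Demand:
    """Hypothetical number of workers each pool needs to keep up with the job."""
    user: int
    backend: int


def proportional_scale(demand: Demand, ratio: float) -> Tuple[int, int]:
    """Scale both pools together, holding user/backend workers at `ratio`."""
    # The backend pool must cover its own demand and the backend size
    # implied by the user demand at the fixed ratio.
    backend = max(demand.backend, math.ceil(demand.user / ratio))
    # The user pool is then forced to follow the backend pool.
    user = math.ceil(backend * ratio)
    return user, backend


def asymmetric_scale(demand: Demand) -> Tuple[int, int]:
    """Scale each pool to its own demand, independently of the other."""
    return demand.user, demand.backend


# A shuffle-heavy job: little user code, lots of backend work.
shuffle_heavy = Demand(user=4, backend=10)
print(proportional_scale(shuffle_heavy, ratio=2.0))  # (20, 10)
print(asymmetric_scale(shuffle_heavy))               # (4, 10)
```

In this toy example, holding the ratio fixed forces 20 user workers even though the job only needs 4, while independent scaling provisions exactly what each pool requires.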

BigQuery autosharding

BigQuery is one of the most common destinations for the output of Dataflow streaming pipelines. The Beam SDK includes a built-in transform, known as the BigQuery I/O connector, that can write data to BigQuery tables. Historically, Dataflow has relied on users to manually configure numFileShards or numStreamingKeys to parallelize BigQuery writes. This is painful because it is hard to get the configuration right, and keeping it right as traffic changes takes ongoing manual effort. Too low a number of shards limits throughput. Too high a number adds overhead and risks exceeding BigQuery quotas and limits. With autosharding, Streaming Engine dynamically adjusts the number of shards for BQ writes so that throughput keeps up with the input rate.

Previously, autosharding was only available for BQ Streaming Inserts. With this launch, BigQuery Storage Write API also gets an autosharding option. It uses the streaming job’s throughput and backlog to determine the optimal number of shards per table, reducing resource waste. This feature is now GA.
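
The idea of sizing shards from throughput and backlog can be sketched roughly as follows. This is not the algorithm Streaming Engine uses, which this post does not describe. The function `target_shards`, its parameters, and the numbers (per-shard rate, drain window, shard ceiling) are assumptions made up for illustration.

```python
import math


def target_shards(input_rate: float, backlog: float, per_shard_rate: float,
                  drain_seconds: float = 60.0, max_shards: int = 100) -> int:
    """Pick a shard count that keeps up with input and drains the backlog.

    Hypothetical units: rates in elements/second, backlog in elements.
    """
    if per_shard_rate <= 0:
        raise ValueError("per_shard_rate must be positive")
    # Throughput needed to match the input and clear the backlog
    # within the drain window.
    required_rate = input_rate + backlog / drain_seconds
    shards = math.ceil(required_rate / per_shard_rate)
    # Too few shards limits throughput; too many adds overhead and
    # risks hitting quotas, so clamp to [1, max_shards].
    return max(1, min(shards, max_shards))


print(target_shards(input_rate=5000, backlog=0, per_shard_rate=1000))       # 5
print(target_shards(input_rate=5000, backlog=120000, per_shard_rate=1000))  # 7
```

The clamp mirrors the trade-off described above: the lower bound protects throughput and the upper bound stands in for quota and overhead limits.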

In-flight job options update

Sometimes you may want to fine-tune the autoscaling you get out of the box even further. For example, you may want to:

- Save costs during latency spikes by setting a smaller minimum/maximum number of workers
- Handle anticipated input load spike with low latency by setting a larger minimum number of workers
- Keep latency low during traffic churns by defining a narrower range of min/max number of workers

Previously, Dataflow users could update autoscaling parameters for long-running streaming jobs only by doing a job update. However, this update operation requires stopping and restarting the job, which incurs minutes of downtime and doesn’t work well for pipelines with strict latency guarantees. In-flight Job Updates allow Streaming Engine users to adjust the min/max number of workers at runtime, without restarting the job. This feature is now GA. Check out this blog to learn more about it.
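
As a rough illustration, the helper below assembles a `gcloud` invocation for an in-flight update of the worker range. The helper `update_options_command` is hypothetical, and so are the job ID and region in the example. The subcommand and flag names reflect our understanding of the `gcloud dataflow jobs update-options` command, so treat them as an assumption and confirm them with `gcloud dataflow jobs update-options --help` before relying on them.

```python
from typing import List


def update_options_command(job_id: str, region: str,
                           min_workers: int, max_workers: int) -> List[str]:
    """Build (but do not run) a gcloud command that updates the worker range."""
    if min_workers < 1:
        raise ValueError("min_workers must be at least 1")
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    return [
        "gcloud", "dataflow", "jobs", "update-options",
        f"--region={region}",
        f"--min-num-workers={min_workers}",
        f"--max-num-workers={max_workers}",
        job_id,
    ]


# Hypothetical job ID and region, used only to show the shape of the command.
cmd = update_options_command("my-job-id", "us-central1", min_workers=5, max_workers=20)
print(" ".join(cmd))
```

To execute the command, you could pass the returned list to `subprocess.run(cmd, check=True)` on a machine where the gcloud CLI is installed and authenticated.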

Key-based upscaling cap

You may want to cap upscaling at some point to balance throughput with incremental cost. One way to do it is by using a CPU utilization cap, i.e., upscale until worker CPU utilization drops to a certain level. The theory is that user workers with low utilization should be able to handle more load. But this assumption is not true when the bottleneck isn’t user-worker CPU, but some other resource (e.g., I/O throughput). In some of these cases, upscaling can help increase throughput, even though the CPU utilization is relatively low.

Key-based upscaling cap is a new approach in Dataflow Streaming Engine that replaces the previous cap derived from CPU utilization. Event keys are the basic unit of parallelism in streaming. When there are more keys available to process in parallel, having more user workers can increase throughput, but only up to the limit of available keys. The key-based upscaling cap prevents upscaling beyond the number of available keys, because any extra workers would sit idle and would not increase throughput. This approach offers two customer benefits:

- Upscaling achieves higher throughput for I/O-bound pipelines when there are more keys to be processed in parallel.
- It saves cost when adding more user workers doesn’t help improve throughput.

Key-based upscaling cap is GA and automatically enabled for Dataflow Streaming Engine jobs.
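
The core of the idea fits in a few lines. The sketch below is a simplification that assumes each key is processed by at most one worker at a time. The function `capped_upscale_target` and its parameters are hypothetical and do not represent Dataflow's internal logic.

```python
def capped_upscale_target(desired_workers: int, available_keys: int,
                          max_workers: int) -> int:
    """Limit an upscale target by the number of keys available to process."""
    # Workers beyond the number of available keys would sit idle,
    # so the key count acts as a ceiling alongside the configured maximum.
    return max(1, min(desired_workers, available_keys, max_workers))


# I/O-bound job with plenty of keys: the upscale goes through in full.
print(capped_upscale_target(desired_workers=8, available_keys=200, max_workers=100))  # 8
# Only 12 keys to work on: scaling past 12 workers would not add throughput.
print(capped_upscale_target(desired_workers=50, available_keys=12, max_workers=100))  # 12
```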

Downscale dampening

Customers need to achieve low latency for their streaming jobs at low cost. Traditionally, to save cost, the autoscaler tracked job state and upscaled when latency spiked or downscaled when CPU utilization dropped.

When input traffic is spiky, autoscaling based on static parameters often leads to a pattern we call ‘yo-yoing’. It happens when a too-aggressive downscale causes an immediate subsequent upscale. This leads to higher job latency and sometimes additional costs.

With downscale dampening, the autoscaler tracks the autoscaling history. When it detects yo-yoing, it applies downscale dampening to reduce latency at similar or lower job cost. This sample test job is an illustrative example. Downscale dampening is GA and automatically enabled for Dataflow Streaming Engine jobs.
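
One way to picture yo-yo detection and dampening is the toy sketch below. It is an assumption-laden illustration, not the autoscaler's real logic: the functions `count_yoyos` and `dampened_downscale`, the look-ahead `window`, the `yoyo_threshold`, and the dampening `factor` are all hypothetical.

```python
from typing import List


def count_yoyos(history: List[int], window: int = 2) -> int:
    """Count downscales followed by an upscale within `window` decisions.

    `history` holds the worker count after each autoscaling decision.
    """
    yoyos = 0
    for i in range(1, len(history)):
        if history[i] >= history[i - 1]:
            continue  # This decision was not a downscale.
        last = min(i + window, len(history) - 1)
        # Look for an upscale in the decisions right after the downscale.
        if any(history[j] > history[j - 1] for j in range(i + 1, last + 1)):
            yoyos += 1
    return yoyos


def dampened_downscale(current: int, proposed: int, history: List[int],
                       yoyo_threshold: int = 2, factor: float = 0.5) -> int:
    """Shrink the size of a proposed downscale when history shows yo-yoing."""
    if proposed >= current or count_yoyos(history) < yoyo_threshold:
        return proposed  # Not a downscale, or no yo-yo pattern detected.
    step = current - proposed
    return current - int(step * factor)


spiky = [10, 4, 10, 5, 10]
print(count_yoyos(spiky))                # 2
print(dampened_downscale(10, 4, spiky))  # 7
```

With a spiky history, the proposed drop from 10 to 4 workers is softened to 7, which leaves headroom for the next spike. With a stable history, the proposed target is returned unchanged.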

Fast worker handover

When the autoscaler changes the number of workers, the new workers need to load the pipeline state. One way to do this is to run an initial scan from the persistence store. This initial scan can take minutes, which results in a drop in pipeline throughput and an increase in tail latency. Instead, Dataflow Streaming Engine jobs that have fast worker handover enabled transfer in-memory state directly from “old” to “new” workers, avoiding the initial persistence store scan. This reduces the handover time from minutes to seconds. As a result, you can see significant improvements in both throughput and latency when autoscaling events happen. Fast worker handover is already rolling out transparently for Dataflow Streaming Engine users, so once you enable Streaming Engine, you don’t need to enable this feature separately.

Thank you for reading this far! We are thrilled to bring these capabilities to our Streaming Engine users and see different ways you use Dataflow to transform your businesses. Get started with Dataflow right from your console. Stay tuned for future updates and learn more by contacting the Google Cloud Sales team.
