Migrating an Amazon Aurora PostgreSQL-Compatible Edition or Amazon Relational Database Service (Amazon RDS) for PostgreSQL database from one AWS account to another is a common use case in scenarios when you acquire, sell, or merge operations, or if you’re reorganizing your AWS accounts and organizational structure. In this post, we discuss the steps and best practices to migrate an Amazon Aurora PostgreSQL-Compatible Edition and Amazon RDS for PostgreSQL from one AWS account to another with reduced downtime using AWS Database Migration Service (AWS DMS). You can also apply the concepts covered in this post for other use cases such as cross-VPC replication, cross-subnet replication, and converting unencrypted to encrypted database instances.

This migration approach involves two phases: the initial data load and ongoing replication (change data capture). The majority of the migration steps mentioned in this post are applicable for both Amazon Aurora PostgreSQL-Compatible Edition and Amazon RDS for PostgreSQL. For simplicity, we focus on the migration steps for Amazon Aurora PostgreSQL-Compatible Edition, but we will call out the differences for Amazon RDS for PostgreSQL in a later section of this post.

Phase 1: Initial data load

There are multiple options to perform an initial data load, such as the following:

Snapshot restore – Restoring from a DB cluster snapshot for Amazon Aurora PostgreSQL-Compatible Edition or restoring from a DB snapshot for Amazon RDS for PostgreSQL

Database cloning – Aurora cloning for Amazon Aurora PostgreSQL-Compatible Edition

Logical restore – Using a native or third-party database migration utility such as pg_dump and pg_restore

Managed service – Using a managed service such as AWS DMS

In this post, we use Aurora cloning to perform the initial load for Amazon Aurora PostgreSQL-Compatible Edition and RDS DB snapshot restore for Amazon RDS for PostgreSQL. Aurora cloning and RDS DB snapshot restore are fast and cost-effective options compared to other approaches.

Phase 2: Change data capture

AWS DMS is a fully managed service that supports change data capture (CDC) using PostgreSQL logical replication. AWS DMS uses logical replication slots to retain write-ahead logs (WALs) for replication until the logs are decoded. AWS DMS supports test_decoding and pglogical plugins for logical decoding.

Unlike pglogical, which can do selective decoding based on the replication scope of an AWS DMS task, the test_decoding plugin decodes all schema table updates. In comparison to test_decoding, the pglogical plugin consumes less network bandwidth, CPU, and I/O during the replication for a use case that just requires replication for selective tables. Because all of the tables must be replicated, we use test_decoding in this post.

You can only replicate specific data definition language (DDL) statements, such CREATE, ALTER, and DROP, with an AWS DMS CDC-enabled task. For unsupported DDLs and workarounds, see Limitations on using a PostgreSQL database as a DMS source. AWS DMS also allows the replication of large objects (LOBs) and validates data replicated for tables with a primary key or unique key.

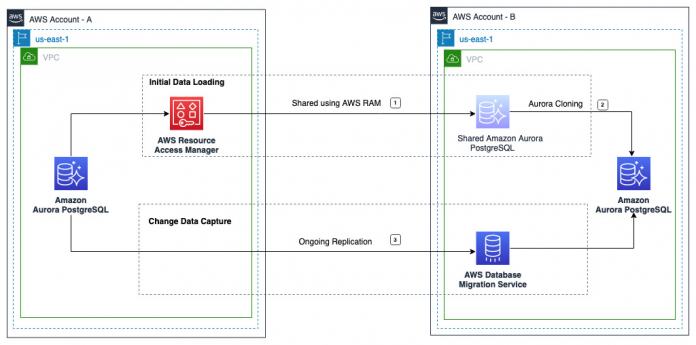

Solution overview

In this post, we use Aurora cloning for the initial data load and an AWS DMS CDC-only task for ongoing replication. The following diagram illustrates this architecture.

To implement this solution, we complete the following steps:

Configure logical replication.

Perform the initial data load and capture the log sequence number (LSN).

Configure ongoing replication using AWS DMS.

Monitor replication.

Validate the data.

Perform cutover.

For this demonstration, the source AWS account is 6144xxxxxxxx and the target account is 2634xxxxxxxx. Both AWS accounts are part of same AWS Organization.

The source Aurora PostgreSQL DB cluster is named aurora-pgsql-source-01 and the target is aurora-pgsql-target-01. Both DB clusters are running on PostgreSQL 13.7 engine version and use an AWS Key Management Service (AWS KMS) customer managed key (CMK) for encryption.

For ongoing replication, we use an AWS DMS replication instance of version 3.4.7 in the target AWS account. In this post, we also use dms_sample as a sample schema and the default PostgreSQL database postgres for migration.

Prerequisites

Make sure you meet the following prerequisites:

The encrypted Aurora PostgreSQL DB cluster must not use the default Amazon RDS AWS KMS key (aws/rds) to support cross-account Aurora cloning and RDS DB snapshot sharing.

Your source and target AWS accounts must have connectivity using either VPC peering or AWS Transit Gateway. For more information, see Create a VPC peering connection.

You should use the AWS primary user account for the PostgreSQL DB instance as the user account for the PostgreSQL source endpoint for AWS DMS. If you don’t use the primary user account for the DB instance, see Prerequisites for using an AWS-managed PostgreSQL database as a DMS source.

The VPC security group associated with the source and target Aurora PostgreSQL DB cluster should allow inbound connections from the AWS DMS replication instance. The security group associated with the replication instance should also allow all outbound connections. For more information, see Setting up a network for a replication instance.

Amazon Aurora PostgreSQL-Compatible Edition must be version 3.4 with PostgreSQL 11.9 compatibility (or higher) to have AWS DMS CDC support. Make sure to apply any minor version updates and patches to your Aurora PostgreSQL DB cluster before configuring replication. The aurora_volume_logical_start_lsn() function that’s used in this post is built into the highest minor version of each major Amazon Aurora PostgreSQL-Compatible Edition release.

Amazon RDS for PostgreSQL must be on the available versions of PostgreSQL 11.x or higher to have AWS DMS CDC support.

You should have the latest version of the AWS Command Line Interface (AWS CLI) installed and configured on your host such as Amazon Elastic Compute Cloud (Amazon EC2), AWS Cloud9, or a similar instance.

Limitations

Note the following limitations:

You can’t create a clone in a different AWS Region from the source Aurora DB cluster. For more information on additional limitations, see Limitations of cross-account cloning.

If your tables use sequences, then the sequences will not be advanced on the target even though data is being copied from the source during ongoing replication with AWS DMS. During cutover, you need to update the NEXTVAL of the sequences in the target database after stopping replication from the source database.

AWS DMS supports CDC for PostgreSQL tables with primary keys. If a table doesn’t have a primary key, the write-ahead logs don’t include a prior image of the database row, and AWS DMS ignores DELETE and UPDATE record operations for that table during ongoing replication. You can use the table-level parameter replica identity as a workaround. For more information, see Additional configuration settings when using a PostgreSQL database as a DMS source.

For more information about the limitations of having a PostgreSQL database as source and target using AWS DMS, see Limitations on using a PostgreSQL database as a DMS source and the Using PostgreSQL as a target for AWS Database Migration Service.

Prepare the source database for replication

Before you start the initial data load, you must enable logical replication and create a replication slot for the source database. For more details, see Enabling CDC with an AWS-managed PostgreSQL DB instance with AWS DMS.

Enable logical replication

By default, logical replication is turned off in AWS-managed PostgreSQL DB instances. You need to set rds.logical_replication to 1 in the custom parameter group associated with source Aurora PostgreSQL DB cluster. This static parameter requires the reboot of the writer instance of the Aurora PostgreSQL DB cluster to take effect. For more information, see Setting up logical replication for your Aurora PostgreSQL DB cluster.

You can verify the status of logical replication and the replication slot using the following command:

Create a PostgreSQL replication slot

Create a PostgreSQL replication slot using the test_decoding plugin for each database that requires ongoing replication. This allows you to retain the WAL logs needed for ongoing replication using AWS DMS. When you create a replication slot, we recommend that you commit or cancel long-running transactions. Use following command to check for transactions:

In this post, you create the replication slot for example, replication_slot with the test_decoding plugin using the following command:

We recommend creating the replication slot during a low traffic window because the source database generates WAL logs for all changes. Up until we start utilizing generated WAL logs, WAL logs take up storage space. The volume of transactions in the source database determines how much storage is needed. We recommend having enough storage in the source database instance and monitoring the disk usage with Amazon CloudWatch. You can also create an alarm.

Perform the initial data load and capture the LSN

In this section, you perform the initial data load in the target database and capture the LSN of the WAL records to configure ongoing replication. Performing the initial data load for Amazon Aurora PostgreSQL-Compatible Edition consists of several steps.

Allow the target AWS account to clone the DB cluster

For Aurora PostgreSQL DB cluster cloning in the target AWS account, you must grant permission to the target AWS account. Use AWS Resource Access Manager (AWS RAM) to set the sharing permissions to allow the target AWS account to clone the Aurora PostgreSQL DB cluster that is owned by source AWS account. For more information, see Share resources owned by you. In this post, we use the create-resource-share AWS CLI command to grant permission to clone the DB cluster in the target AWS account:

Open the AWS RAM console in the target AWS account, and in the navigation pane, choose Shared with me and Resource shares to accept the invitation. For more details, see Accepting invitations to share clusters owned by other AWS accounts.

You also share the AWS KMS key that is used to encrypt the source DB cluster with the target AWS account by adding the target account to the KMS key policy. For details on updating a key policy, see Key policies in AWS KMS. For an example of creating a key policy, see Allowing access to an AWS KMS key.

Create the target Aurora PostgreSQL DB cluster using an Aurora clone

Create an Aurora clone of the source DB cluster in the target AWS account. For more information, see Cloning an Aurora cluster that is owned by another AWS account. The source DB cluster remains available for all the transactions during the cloning process with no impact to performance.

To create the target Aurora PostgreSQL DB cluster, complete the following steps:

On the Amazon RDS console, choose Databases in the navigation pane.

Select your DB cluster (shared from the source account) and on the Actions menu, choose Create clone.

Provide a unique name (for example, aurora-pgsql-target-01). Most of the remaining settings are defaults from the source instance.

After you make the required changes for the target instance, choose Create clone.

While the target instance is initiating, the Status column of the writer node displays Creating. When the instance is ready, the status changes to Available.

Capture the LSN

When your Aurora PostgreSQL DB cluster is in Available state, connect to your new target Aurora PostgreSQL DB cluster to obtain the LSN at which the target cluster is consistent:

Save the output to use for ongoing replication at a later step.

Drop the replication slot from the target Aurora PostgreSQL cluster

The target Aurora PostgreSQL cluster doesn’t need the replication slots. Drop the replication slots, and turn off logical replication by setting rds.logical_replication to 0 in the target DB cluster. In this post, you have one replication slot called replication_slot. You can use the following commands to list and drop the replication slot:

Configure ongoing replication

In this step, you prepare the source and target PostgreSQL databases for ongoing replication and configure ongoing replication using AWS DMS.

Create a schema for AWS DMS control tables in the source and target database

Control tables provide information about an AWS DMS task. In this post, you create a schema called dms_control_schema in both the source and target databases. While creating the AWS DMS migration task, you specify this schema in the AWS DMS task setting ControlSchema. You don’t need to replicate the control tables in the target database. Use the following code to create a schema in both the source and the target databases:

Disable all the triggers in the target PostgreSQL database schema

Disable the triggers of the schema that you intend to replicate in the target database. Alternatively, you can set the database parameter session_replication_role to replica at global level in a custom parameter group or at session level using an AWS DMS extra connection attribute in the target endpoint.

In this post, you generate DDL statements to disable and enable the triggers of the dms_sample schema using the following sample script. This script is an example. Before running, you should test and change it according to your requirements.

This script only generates DDL statements for triggers that are set to Enabled:

To generate the DDL statements to disable the triggers of sample schema dms_sample, call the function with triggerState set to true as follows:

Before disabling the triggers, call the function again with triggerState set to false to generate the DDL statements to enable the triggers of the sample schema dms_sample. You must save the output because you use it to enable the triggers during the cutover stage.

Now, disable the triggers using the generated DDL statements.

Create an AWS DMS replication instance

Create an AWS DMS replication instance called dms-repl-instance-pgsql-01 in the target AWS account. For more information on replication instance sizing, see Choosing the right AWS DMS replication instance for your migration.

Create an AWS DMS source and target endpoints

Create an AWS DMS source endpoint called source-ep in the target AWS account for the source database. You can use extra connection attributes to configure the source PostgreSQL database while creating the source endpoint. For more information, see Endpoint settings when using PostgreSQL as a DMS source. In this post, we use the following endpoint settings for the AWS DMS source endpoint source-ep:

Create an AWS DMS target endpoint called target-ep for the target PostgreSQL database.

Create an AWS DMS migration task

Create an AWS DMS database migration task in the target AWS account to specify the schema for ongoing replication. The task details depend on your migration project. For more information, see Working with AWS DMS tasks. Note the following key steps while creating the AWS DMS database migration task in this post:

For Task identifier, enter migration-task-cdc-01.

For Replication instance, choose dms-repl-instance-pgsql-01.

For Source database endpoint, choose source-ep.

For Target database endpoint, choose target-ep.

For Migration type, choose Replicate data changes only (CDC).

For CDC start mode for source transactions, select Enable custom CDC start mode.

For Custom CDC start point for source transactions, choose Specify a log sequence number and specify the LSN for System change number. We provide detailed guidance in the next section on how to acquire the LSN.

For Custom CDC stop mode for source transactions, choose Disable custom CDC stop mode.

For Target table preparation mode, leave as default.

For Include LOB columns in replication, choose Limited LOB mode.

For Turn on validation, choose Enable.

In the Advanced task settings section, under Control table settings, specify dms_control_schema for Create control table in target using schema.

The AWS DMS control tables such as awsdms_apply_exceptions and awsdms_validation_failures_v1 will reside in schema dms_control_schema of the target database. If you don’t enter any information for this option, then AWS DMS uses the Public schema of the database for control tables.

For Table mappings, specify the source database schema under Selection rules. In this post, we migrate all tables from the dms_sample schema.

Custom CDC start point for source transactions

Connect to the source database to check if the LSN you captured earlier is still available in the replication slot. If you find the specified LSN in the replication slot, use the captured LSN as the system change number for the CDC start point.

If you don’t find the details of the captured LSN in the replication slot, use pg_logical_slot_peek_changes() to check the replication slot and use the very first LSN that is greater than the LSN you captured earlier. We do not recommend using pg_logical_slot_get_changes() to view changes in a replication slot because this will consume the changes and cause the AWS DMS operation to fail.

In this post, we have captured the LSN 1/87F75990. Use the following query in the source database for verification:

We don’t have the captured LSN in the replication slot. Use the following query in the source database to find the LSN greater than the captured LSN:

In this post, we don’t have the captured LSN 1/87F75990 in the source database replication slot and 1/87F75B28 is the first available in the replication slot, which is greater than 1/87F75990. Therefore, we use 1/87F75B28 as the system change number for the CDC start point.

Monitor replication

You can monitor your AWS DMS task using CloudWatch metrics. AWS DMS provides statistics for replication tasks, including incoming and committed changes, and latency between the replication host and both the source and target databases. For a complete list of the available metrics, see Replication task metrics. You can determine the total latency, or replica lag, for a task by combining the CDCLatencySource and CDCLatencyTarget metric values.

You can also use the following command on the source PostgreSQL database to monitor replication lag:

Validate the data

You use AWS DMS data validation to make sure all the pre-existing data in the tables is migrated accurately and it also compares the incremental changes for a CDC-enabled task as they occur. During data validation, AWS DMS compares each row in the source with its corresponding row at the target, verifies the rows contain the same data, and reports any mismatches. See AWS DMS data validation for more details.

You can increase ThreadCount to a higher number so AWS DMS can complete the validation faster. With increased ThreadCount, AWS DMS runs more simultaneous queries, consuming more resources on the source and the target. We recommend monitoring the memory, CPU, and database performance metrics to ensure the source, target, and replication instance have sufficient resources when increasing ThreadCount.

To troubleshoot the tables shown as Mismatched records, Suspended records, Table error, or Error, check the awsdms_validation_failures_v1 table in the target database schema dms_control_schema. For more information, see Troubleshooting. You can also look for any error or warning messages in the migration task log.

Perform cutover

You can plan the cutover of the application to the target Aurora PostgreSQL database when the replication lag is minimal. We recommend planning your cutover during a low traffic window. The following are some of key cutover steps:

Stop your application.

Stop accepting all connections except the one related to AWS DMS on the source Aurora PostgreSQL database by updating the VPC security group associated with the source and removing inbound rules that allow connectivity.

Make sure that there are no change events that are waiting to be applied to the target. Check pg_stat_activity and pg_prepared_xacts from the source PostgreSQL database to ensure no open transactions. Use the CDCIncomingChanges metric to monitor your AWS DMS migration task.

Make sure that CDC latency is 0. You can use CDCLatencySource and CDCLatencyTarget metrics to monitor the replication task.

Make sure that there are no data validation error in AWS DMS.

Stop the AWS DMS database migration task. For more information, see Stop Replication Task.

Update the sequences in the target PostgreSQL database based on the last_value of those sequences in the source PostgreSQL database. In this post, we use the following sample script in the source PostgreSQL database to generate the SQL statements to update the sequences in the target PostgreSQL database. This script is an example. Before running, you should test and change this according to your requirements.

Call the function to generate the SQL statements to update sequences in dms_sample schema:

Now you should review and run the generated SQL statements in the target database to update the sequence.

Enable the triggers in the target PostgreSQL database schema that were previously disabled before starting the AWS DMS CDC-only task. In this post, we use the DDL statements we saved earlier to enable the triggers in the dms_sample schema. We recommend reviewing the SQL statements before running them in the target database.

Update the application configuration or DNS CNAME records with the target Aurora PostgreSQL endpoints.

After the application has successfully connected to the target DB instances, you may decide to temporarily stop or delete the Amazon RDS resources in the primary AWS account. To stop the Aurora cluster, refer to Stopping an Aurora DB cluster and to delete the Aurora cluster, refer to Deleting Aurora DB clusters and DB instances. Aurora allows you to delete the source cluster that has one or more clones associated with it without affecting the clones.

Start your application.

Amazon RDS for PostgreSQL

We can use the migration steps mentioned in this post for Amazon RDS for PostgreSQL as well. In this example, we use an RDS for PostgreSQL DB snapshot for the initial data load and an AWS DMS CDC-only task for ongoing replication. The following diagram illustrates the architecture.

In this section, we only highlight the steps that are different for Amazon RDS for PostgreSQL.

Enable logical replication

For Amazon RDS for PostgreSQL, modify rds.logical_replication to 1 in the custom DB parameter group associated with your DB instance and reboot the DB instance.

Perform the initial data load and capture the LSN

We perform initial data loading into the target RDS for PostgreSQL DB instance by restoring the RDS DB snapshot. Performing the initial data load for Amazon RDS for PostgreSQL consists of the following steps:

Create a DB snapshot of the RDS for PostgreSQL DB instance in the source AWS account.

Share the DB snapshot with the target AWS account.

Copy the DB snapshot, because the shared DB snapshot is encrypted with AWS KMS CMK in this post. This step is optional if the DB snapshot is not encrypted.

Restore the DB instance using the copy of the shared DB snapshot to create the target RDS for PostgreSQL DB instance.

When you restore the snapshot, provide the DB instance identifier rds-pgsql-target-01 and DB instance settings based on your workload. For more information, see Tutorial: Restore an Amazon RDS DB instance from a DB snapshot.

When your RDS for PostgreSQL DB instance is in Available state, obtain the LSN from the instance log of the target RDS for PostgreSQL instance.

In this post, we use following dblogs.sh script to list the database logs for the target and find the value after for the log entry redo done:

You must save this output to find the custom CDC start point for source transactions as mentioned in earlier step for AWS DMS ongoing replication. In this post, the captured LSN from the instance log of the target is 15/600002A8:

In this post, we don’t find the captured LSN in the source database replication slot. Therefore, we use 15/600002E0 for Amazon RDS for PostgreSQL as the system change number for the CDC start point in the AWS DMS migration task for ongoing replication. You should follow the steps mentioned earlier to start the AWS DMS task and perform the cutover.

Clean up

As part of this migration, you have deployed AWS DMS-related resources in your AWS account to replicate data. These resources will incur costs as long as they are in use. Be sure to remove any resources you no longer need.

Conclusion

In this post, we discussed the various steps involved in migrating your Aurora PostgreSQL or RDS for PostgreSQL database from one AWS account to another. We also used AWS DMS for ongoing replication to reduce the downtime. With the complexity involved in database migrations, we highly recommend testing the migration steps in non-production environments prior to making changes in production.

We welcome your feedback. If you have any questions or suggestions, leave them in the comments section.

About the Authors

Alok Srivastava is a Senior Database Consultant at AWS. He works with AWS customers and partners in their journey to the AWS Cloud with a focus on database migration and modernization programs.

Wanchen Zhao is a Senior Database Specialist Solutions Architect at AWS. Wanchen specializes in Amazon RDS and Amazon Aurora, and is a subject matter expert for AWS DMS. Wanchen works with ISV partners to design and implement database migration and modernization strategies and provides assistance to customers for building scalable, secure, performant, and robust database architectures in the AWS Cloud.

Read MoreAWS Database Blog