It’s common in an enterprise for data that logically fits together to be separated into different databases. Some data is better suited to one storage system than another, and it may not be feasible to keep all your data in one data store. But this data often needs to be linked back together to provide a solution for an organizational use case. The challenge is to bring it together from multiple systems.

In this post, we show you how to use SPARQL to query data in Amazon Neptune and Amazon Simple Storage Service (Amazon S3) together.

Knowledge graphs vs. data lakes

Consider a use case where we want to analyze climate data, taken from weather stations from all over the world, over many years. Each weather station has taken billions of readings over time, and some weather stations have existed for over 100 years.

Weather stations are well suited to a knowledge graph. In the graph, stations are resources with essential properties such as location, altitude, and sensor types. We may add further properties, possibly bringing them in from other sources. For example, we may include data about the station’s city, including Köppen climate classification, population, demographics, and economy. We can then run traversal queries on this data, such as “List all weather stations in cities in Europe or Africa with populations less than 1 million.”

Neptune is a graph database service that supports the W3C recommendations of SPARQL and RDF, which makes it straightforward to build applications that work with highly connected datasets.

In contrast, the readings that are taken from sensors at weather stations are well suited to be stored in a compressed data lake, because they are large in number and the model is not highly connected in nature. The exact number of readings is unknown, and growing, so a data lake is suitable due to its ability to store an almost unlimited amount of data, and answer questions like: “List all weather stations with an average yearly temperature reading over 18 degrees Celsius, and an average humidity above 50%.”

Amazon S3 is an object storage service that can store any amount of data. For example, you can store a large number of compressed Apache Parquet files as a data lake.

For our use case, data is distributed across two systems, Neptune and Amazon S3, but we want to ask questions that retrieve data from both systems at the same time. For example, “List all weather stations in cities in Europe or Africa with populations less than 1 million, and an average yearly temperature reading over 18 degrees Celsius, and an average humidity above 50%.”
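To make the shape of that combined question concrete, here is a toy in-memory sketch in Python. The station and reading values are invented purely for illustration (they are not NCEI data), the field names are assumptions, and the average is taken over all readings rather than per year to keep the example short. The point is only that the two halves of the question are joined on the station identifier.

```python
from statistics import mean

# Graph-side facts, the kind of data held in Neptune. Values are made up.
stations = [
    {"id": "S1", "continent": "Europe", "population": 500_000},
    {"id": "S2", "continent": "Africa", "population": 2_000_000},
    {"id": "S3", "continent": "Asia", "population": 300_000},
    {"id": "S4", "continent": "Africa", "population": 800_000},
]

# Lake-side facts, the kind of data held in Amazon S3. Values are made up.
readings = [
    {"station": "S1", "celsius": 20.0, "humidity": 60.0},
    {"station": "S1", "celsius": 22.0, "humidity": 55.0},
    {"station": "S2", "celsius": 30.0, "humidity": 70.0},
    {"station": "S3", "celsius": 25.0, "humidity": 65.0},
    {"station": "S4", "celsius": 15.0, "humidity": 80.0},
    {"station": "S4", "celsius": 17.0, "humidity": 75.0},
]


def matching_stations(stations, readings):
    """Return IDs of stations that satisfy both halves of the question."""
    # Graph-style filter: location and population of the station's city.
    candidates = {
        s["id"]
        for s in stations
        if s["continent"] in {"Europe", "Africa"} and s["population"] < 1_000_000
    }

    # Lake-style aggregation: group the readings by station identifier.
    by_station = {}
    for r in readings:
        by_station.setdefault(r["station"], []).append(r)

    # Join the two sides on the station identifier.
    result = []
    for station_id in sorted(candidates):
        rows = by_station.get(station_id, [])
        if not rows:
            continue
        avg_celsius = mean(r["celsius"] for r in rows)
        avg_humidity = mean(r["humidity"] for r in rows)
        if avg_celsius > 18 and avg_humidity > 50:
            result.append(station_id)
    return result


print(matching_stations(stations, readings))
```

In the architecture described in this post, the filter runs in Neptune and the aggregation runs over the data lake; neither side needs to hold the other's data.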

Solution overview

We set up an architecture that uses a single SPARQL 1.1 Federated Query to query all the linked data, as a single graph, with some data coming from Neptune and other data (in a virtual graph) originating from Parquet files in Amazon S3.

For this post, we used publicly available data from the National Centers for Environmental Information (NCEI). We selected and then separated a subset of the available data, regarding global temperature readings over time.

All the code, data, and scripts we used in this post are available in the Graph Virtualization in Amazon Neptune GitHub repository.

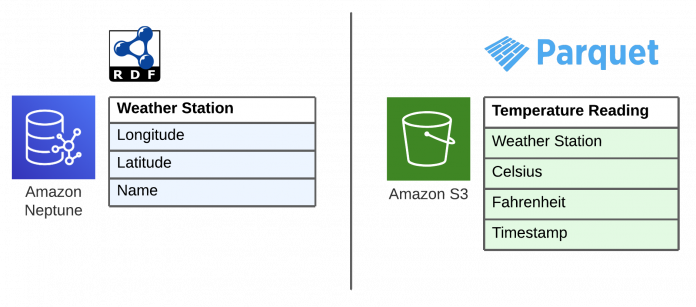

The data is separated and loaded into Amazon Neptune and Amazon S3 as follows:

Neptune – The coordinates of the weather station in longitude and latitude, its name, and unique identifier

Amazon S3 (in Apache Parquet format) – Temperature readings in both Celsius and Fahrenheit, the timestamp of the reading, and the unique identifier of the weather station

The following diagram illustrates the solution architecture.

We use the following services in our solution:

Amazon Athena – Athena is a serverless, interactive analytics service that provides SQL access to data, via AWS Glue, that lives in an S3 data lake.

Amazon Elastic Container Service – Amazon ECS is a fully managed container orchestration service that makes it straightforward for you to deploy, manage, and scale containerized applications.

AWS Glue – AWS Glue is a fully managed extract, transform, and load (ETL) AWS service that has the ability to analyze and categorize data in an S3 data lake.

Amazon Neptune – Neptune is a fast, reliable, and fully managed graph database service purpose-built and optimized for storing billions of relationships and querying graph data with millisecond latency.

Amazon Simple Storage Service – Amazon S3 is an object storage service offering industry-leading scalability, data availability, security, and performance.

Ontop-VKG – Ontop is a virtual knowledge graph system that exposes a SPARQL endpoint that maps to a SQL endpoint.

Additionally, we provide an AWS Cloud9 development environment to build and deploy the Ontop container.

We perform the following steps to link the datasets:

Run an AWS CloudFormation template to create the main stack.

Run scripts in the AWS Cloud9 IDE, created by the main stack, to set up the test data.

Run an AWS Glue crawler, created by the main stack, to crawl the data in Amazon S3, in order to define the table structure needed for Athena.

Using the AWS Cloud9 IDE, build the Ontop container and deploy it to the ECS cluster. Ontop exposes a SPARQL endpoint, which, when mapped to the SQL endpoint from Athena, can access the data in the S3 data lake.

After we create a SPARQL endpoint that can access the S3 data lake, we can use the SPARQL 1.1 Federated Query feature to run a single graph query on Neptune, which returns data from both datasets.

Prerequisites

For this walkthrough, you should have an AWS account with permissions to create resources in Neptune, AWS Glue, Amazon ECS, and related services. No prior knowledge of the tools and technologies in this post is required to follow this guide, but a basic understanding of the following is advised:

Linked data

SPARQL and RDF

Amazon S3

Athena

Amazon ECS

Customers are responsible for the costs of running the solution. For help with estimating costs, visit the AWS Pricing Calculator.

Set up resources with AWS CloudFormation

To provision resources using AWS CloudFormation, download a copy of the CloudFormation template from our GitHub repository. Then complete the following steps:

On the AWS CloudFormation console, choose Create stack.

Choose With new resources (standard).

Select Upload a template file.

Choose Choose file to upload the local copy of the template that you downloaded. The name of the file is ontop_main.yaml.

Choose Next.

Enter a stack name of your choosing. Remember this name to use in later steps.

You may keep the default values in the Parameters section.

Choose Next.

Continue through the remaining sections.

Read and select the check boxes in the Capabilities section.

Choose Create stack.

The stack creates a Neptune cluster, a Neptune notebook instance, an ECS cluster on which to deploy Ontop, an S3 bucket in which to maintain climate readings data, and an AWS Glue Data Catalog database and crawler to expose that data as a data lake. It also creates an AWS Cloud9 IDE, which you use to set up the Ontop container.

Copy climate files in AWS Cloud9

Complete the following steps to set up your data:

On the AWS Cloud9 console, find the environment OntopCloud9IDE.

Choose Open to open the environment, and run the following code:

Substitute <yourstackname> with the name of the CloudFormation stack you created previously. These steps copy the climate data files to the S3 bucket created by the stack. The copy takes a few minutes to complete.

Keep the IDE open as you proceed to the next steps.

Set up and test the data lake

Open a new tab in your browser and complete the following steps to create your data lake:

On the AWS Glue console, choose Crawlers in the navigation pane.

Select the crawler ClimateCrawler and choose Run, then wait for the crawler to complete.

Under Data Catalog in the navigation pane, choose Tables. There should be a table called climate.

On the Athena console, navigate to the query editor.

Under Settings, choose Manage and set the location for query results to the S3 bucket created by AWS CloudFormation.

In the Athena query editor, select the climate table, then run a SQL query to confirm climate readings are available from the lake:
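As a minimal sketch (not necessarily the query used in the repository), a simple row sample is enough to confirm that the crawler produced a usable table. The Python below builds such a statement and, for readers who prefer scripting to the console, shows how it could be submitted through an Athena client. The helper names, the row limit, and the polling interval are assumptions; the database name and results location must come from your own stack.

```python
import time


def build_check_query(table="climate", limit=10):
    """Return a SQL statement that samples a few rows from the table."""
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    return f'SELECT * FROM "{table}" LIMIT {int(limit)}'


def run_athena_query(athena, sql, database, output_location, poll_seconds=1.0):
    """Submit sql through an Athena client and wait for a final state.

    `athena` is expected to behave like boto3.client("athena").
    Returns a (query_execution_id, state) tuple.
    """
    started = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]

    # Poll until Athena reports that the query has finished.
    while True:
        status = athena.get_query_execution(QueryExecutionId=query_id)
        state = status["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            return query_id, state
        time.sleep(poll_seconds)


print(build_check_query())
```

In the query editor, you would paste only the printed SQL statement. From a script, you would pass `boto3.client("athena")` as the first argument to `run_athena_query`, together with the Data Catalog database created by the stack and an S3 location for results.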

Build the Ontop container

Move back into the AWS Cloud9 IDE to build the Ontop container.

Before building, you can examine the files. Ontop runs in a Docker container in Amazon ECS. The Dockerfile describes all the configuration and resources that Ontop needs to run this example.

The following is a summary of the files; visit the Ontop tutorial for more details:

Athena JDBC driver – Ontop needs this driver to know how to connect to the SQL endpoint exposed by Athena.

climate.owl – This is the OWL ontology file that describes the model that we are exposing from Ontop. The file includes the following statements to describe the relationship between a WeatherStation (stored in Neptune) and a TemperatureReading (stored in Amazon S3). To understand more about creating OWL ontologies for other use cases, see our GitHub repo.

climate.obda – This is the mapping file that defines the graph model that will be exposed by Ontop. The file contains the following mapping, where the TemperatureReading RDF statements are defined and mapped to columns from the climate table in the AWS Glue Data Catalog accessible via Athena:

climate.properties – This property file describes all the JDBC connection parameters that Ontop needs to connect to Athena, and other properties for Ontop such as logging configuration.

lenses.json – Ontop lenses define a view over the SQL data, defined at the Ontop container level, giving Ontop additional information about the schema to optimize the SPARQL-SQL mapping. The following uniqueConstraint defines the combination of a station and timestamp, and can be seen as a composite unique key. This helps the Ontop container generate more efficient SQL queries when retrieving temperature readings from the data lake.
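The roles of the mapping and the unique constraint can be pictured with a small Python sketch. This is not Ontop's implementation, nor the contents of climate.obda or lenses.json: the column names (station, ts, celsius), the http://example.org/ IRIs, and the sample rows are all hypothetical. It shows one SQL-style row being turned into RDF-style triples, and why a unique (station, timestamp) pair gives every reading a distinct identity.

```python
from collections import Counter

BASE = "http://example.org/climate/"  # hypothetical namespace


def reading_iri(row):
    """Build a reading IRI from the composite key (station, timestamp)."""
    return f"{BASE}reading/{row['station']}/{row['ts']}"


def row_to_triples(row):
    """Map one table row to (subject, predicate, object) triples."""
    subject = reading_iri(row)
    return [
        (subject, "rdf:type", f"{BASE}TemperatureReading"),
        (subject, f"{BASE}takenAt", f"{BASE}station/{row['station']}"),
        (subject, f"{BASE}timestamp", row["ts"]),
        (subject, f"{BASE}celsius", row["celsius"]),
    ]


def duplicate_keys(rows):
    """Return the (station, timestamp) pairs that occur more than once."""
    counts = Counter((r["station"], r["ts"]) for r in rows)
    return sorted(key for key, n in counts.items() if n > 1)


# Hypothetical rows standing in for the climate table.
rows = [
    {"station": "ST001", "ts": "2020-01-01T00:00:00", "celsius": 3.5},
    {"station": "ST001", "ts": "2020-01-01T01:00:00", "celsius": 3.1},
    {"station": "ST002", "ts": "2020-01-01T00:00:00", "celsius": 11.0},
]

for triple in row_to_triples(rows[0]):
    print(triple)
print("duplicate keys:", duplicate_keys(rows))
```

When the pair is known to be unique, a query engine can assume that one key identifies at most one row, which is the kind of information that lets it avoid redundant joins or DISTINCT operations in the generated SQL.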

Build the container

To build the Ontop container from these files, complete the following steps:

Run the following in the terminal and substitute your stack name:

When this completes, the container image is added to the Amazon Elastic Container Registry (Amazon ECR) repository.

On the Amazon ECR console, open the ontop-lake private repository, and check for the build.

Deploy the Ontop container

Complete the following steps to deploy the container:

In the AWS Cloud9 IDE, run the following in the terminal to deploy a CloudFormation stack called ecs1 that creates a service in the ECS cluster using the Ontop container image:

You can confirm that the ecs1 stack is complete on the AWS CloudFormation console. It may take up to 10 minutes.

When it’s complete, open the Amazon ECS console and navigate to the new cluster called test.

Find the running task and copy its private IP address. This is the IP address on which the Ontop container handles SPARQL requests.
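If you would rather look up the address from a script than from the console, the following Python sketch pulls private IPv4 addresses out of an ECS DescribeTasks response. It assumes the task uses awsvpc networking, so that the address appears in the task's network interface attachment; the function name and the sample response are illustrative only.

```python
def private_ips(describe_tasks_response):
    """Collect private IPv4 addresses from an ECS DescribeTasks response."""
    ips = []
    for task in describe_tasks_response.get("tasks", []):
        for attachment in task.get("attachments", []):
            for detail in attachment.get("details", []):
                # The network interface attachment lists its address
                # under this detail name.
                if detail.get("name") == "privateIPv4Address":
                    ips.append(detail["value"])
    return ips


# Trimmed, made-up response with the same shape as the real one.
sample_response = {
    "tasks": [
        {
            "lastStatus": "RUNNING",
            "attachments": [
                {
                    "type": "ElasticNetworkInterface",
                    "details": [
                        {"name": "subnetId", "value": "subnet-0example"},
                        {"name": "privateIPv4Address", "value": "10.0.1.23"},
                    ],
                }
            ],
        }
    ]
}

print(private_ips(sample_response))
```

With boto3, the response would come from `ecs.list_tasks(cluster="test", desiredStatus="RUNNING")` followed by `ecs.describe_tasks(cluster="test", tasks=task_arns)`, where `task_arns` is the `taskArns` list returned by the first call.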

Use the Neptune Workbench to load the test RDF data

Complete the following steps to load the test RDF data:

On the Neptune console, choose Notebooks in the navigation pane.

Select the notebook instance with a name starting with aws-neptune-notebook-for-gv.

On the Actions menu, choose Open Jupyter.

In Jupyter, open the climate-data-queries.ipynb notebook.

Run the first cell in the notebook to load the test RDF data about weather stations into Neptune.

Query the linked data in Neptune Workbench

In the same notebook, look at the example SPARQL queries. We can now query the data from the Neptune graph and the data lake in a single query.

In the example query in the fourth cell in the notebook, look at the SERVICE keyword, which is followed by the address of the Ontop service running in Amazon ECS.

Note that all the data about the weather station itself comes from Neptune, outside the scope of the SERVICE clause.
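The general shape of such a query can be sketched as follows. This is an illustration, not the query from the notebook: the ex: namespace and the property names are hypothetical, and the port (8080) and path (/sparql) are assumptions about how the Ontop endpoint is exposed. The Python helper only assembles the query text, so that the task's IP address can be dropped into the SERVICE clause.

```python
import ipaddress

EX = "http://example.org/climate/"  # hypothetical namespace


def federated_query(ontop_ip, port=8080, limit=10):
    """Build a SPARQL 1.1 federated query around the Ontop endpoint."""
    # Reject anything that is not a plain IPv4 address (raises ValueError).
    ipaddress.IPv4Address(ontop_ip)
    service = f"http://{ontop_ip}:{int(port)}/sparql"
    lines = [
        f"PREFIX ex: <{EX}>",
        "SELECT ?station ?name ?celsius ?time",
        "WHERE {",
        "  # Station facts are matched in the local Neptune graph.",
        "  ?station a ex:WeatherStation ;",
        "           ex:name ?name .",
        "  # Readings are fetched from the remote Ontop endpoint.",
        f"  SERVICE <{service}> {{",
        "    ?reading ex:takenAt ?station ;",
        "             ex:celsius ?celsius ;",
        "             ex:timestamp ?time .",
        "  }",
        "}",
        f"LIMIT {int(limit)}",
    ]
    return "\n".join(lines)


print(federated_query("10.0.1.23"))
```

The ?station variable appears both outside and inside the SERVICE block, and that shared variable is what joins the graph data to the readings from the data lake.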

For more information about using SPARQL 1.1 Federated Query with Neptune, refer to Benefitting from SPARQL 1.1 Federated Queries with Amazon Neptune.

Clean up

To avoid further costs, delete the resources by following the cleanup instructions in our GitHub repository.

Conclusion

In this post, we showed you how to set up an architecture, using only open source technologies and AWS components, that can use a single SPARQL 1.1 Federated Query to expose linked data from two different sources.

Data is often distributed in different systems within an organization because some data is better suited for one storage system over another, but it still needs to be linked together to answer queries. We showed how you can expose a data lake of virtually unlimited size as a SPARQL endpoint, link it to a graph database in Neptune, and answer a question across the two sources, all in one place.

You can apply this solution to expose any data from Amazon S3 as a SPARQL endpoint, provided it is compatible with an Athena endpoint, as described in the Athena FAQs.

After your data from Amazon S3 is exposed as a SPARQL endpoint, you can also use it on its own by simply calling the HTTP endpoint directly. This way, you can expose your S3 data as a graph for use with any tooling or software that is compatible with SPARQL 1.1 Protocol.
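A direct call could look like the following sketch, which uses only the Python standard library. It prepares a SPARQL 1.1 Protocol request that sends the query in the body of an HTTP POST; the endpoint URL, including the port and the /sparql path, is an assumption about how the Ontop container is exposed, and the query is a generic placeholder.

```python
import urllib.request


def sparql_request(endpoint_url, query):
    """Prepare a SPARQL 1.1 Protocol 'query via POST directly' request."""
    return urllib.request.Request(
        endpoint_url,
        data=query.encode("utf-8"),
        headers={
            # The unencoded query string is sent as the request body.
            "Content-Type": "application/sparql-query",
            # Ask for SELECT results in the standard JSON format.
            "Accept": "application/sparql-results+json",
        },
        method="POST",
    )


# Hypothetical endpoint address; substitute the task's private IP.
request = sparql_request(
    "http://10.0.1.23:8080/sparql",
    "SELECT * WHERE { ?s ?p ?o } LIMIT 5",
)
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` sends it. Because the address in this walkthrough is a private IP, the call has to originate from inside the same VPC, for example from the AWS Cloud9 environment.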

About the Authors

Mike Havey is a Solutions Architect for AWS with over 25 years of experience building enterprise applications. Mike is the author of two books and numerous articles. See his Amazon author page.

Charles Ivie is a Senior Graph Architect with the Amazon Neptune team at AWS. As a highly respected expert within the knowledge graph community, he has been designing, leading, and implementing graph solutions for over 15 years.
