When designing cloud systems, a major consideration is reliability. As infrastructure architects, what do we want to guarantee to our customers, whether they’re internal or end users? How much time can we possibly allow our services to be down? How do we design services for resiliency?

A huge portion of reliability is dependent on availability (also called “uptime” or “uptime availability”). The Google Cloud blog post “Available… or not?” defines availability as whether or not a system is able to fulfill its intended function at a point in time. In other words, how often is a web page able to serve content to visitors? Will I always be served the webpage, regardless of latency, when I visit that link?

Service availability, SLAs, and SLOs

Generally, service availability is calculated as # of successful units / # of total units: for example, uptime / (uptime + downtime), or successful requests / (successful requests + failed requests). The result is reported as a percentage, like 99.99%. For example, “my web page serves content to visitors 99.99% of the time.” Availability acts as both a reporting tool and a probability tool for discussing the likelihood that your system will perform as expected in the future.
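As a minimal sketch of the ratio described above, the request-based form can be computed directly. The function name and the request counts are hypothetical, chosen only for illustration.

```python
def availability(successful: int, total: int) -> float:
    """Return availability as a fraction: successful units / total units."""
    if total <= 0:
        raise ValueError("total must be positive")
    return successful / total


# Hypothetical numbers: 999,900 successful requests out of 1,000,000.
ratio = availability(999_900, 1_000_000)
print(f"{ratio:.2%}")  # reported as a percentage
```

The same function works for the time-based form if you pass uptime and total time in the same unit.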

Within Google Cloud, services and products have their own service-level agreements (SLAs) that describe their target availability. For example, if properly configured, a single Compute Engine instance in one zone will offer a Monthly Uptime Percentage of >= 99.5%.
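To make a percentage like this more tangible, it can help to translate it into a downtime budget. The sketch below assumes a 30-day month for simplicity; the helper name is hypothetical, and the exact accounting in any real SLA is defined by that SLA's own terms.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted in a period at the given uptime percentage."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


# Assumption: a 30-day month (43,200 minutes).
budget = allowed_downtime_minutes(99.5)
print(f"{budget:.1f} minutes, or {budget / 60:.1f} hours")
```

Under that assumption, 99.5% leaves room for roughly 216 minutes (3.6 hours) of downtime per month, while 99.99% leaves only about 4.3 minutes.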

Note, an SLA is different from an SLO (Service-Level Objective). An SLO defines a numerical target for a service’s availability, whereas an SLA defines a promise to the service’s users that an SLO is met over a given time period. For simplicity, we’ll use the term “SLA” for the remainder of the document.

However, it’s rare that customer systems on Google Cloud are made up of a single Compute Engine instance. In reality, applications are much more complicated, with services intertwined and dependent on each other. Can you guarantee your users an SLA of >=99.5% for an application running on a single Compute Engine instance if that instance is also dependent on a Cloud Storage bucket that is only 99.0% available?

What we need to calculate is the combined availability across all the different services that make up an application — the composite availability of an application. We need to analyze how the relationship between services in an application impacts the resulting availability of the application overall. This approach allows us to better design more resilient systems and therefore offer users a better experience.

Depending on the relationship between the services, the composite availability might be higher or lower than an individual service alone. Let’s take a look at some application design examples. While this calculation only speaks to the architectural-level availability (i.e., procedural and operational risks that are specific to customer’s systems are excluded), it provides meaningful “upper-bounds” of availability to help guide design.

Dependent services

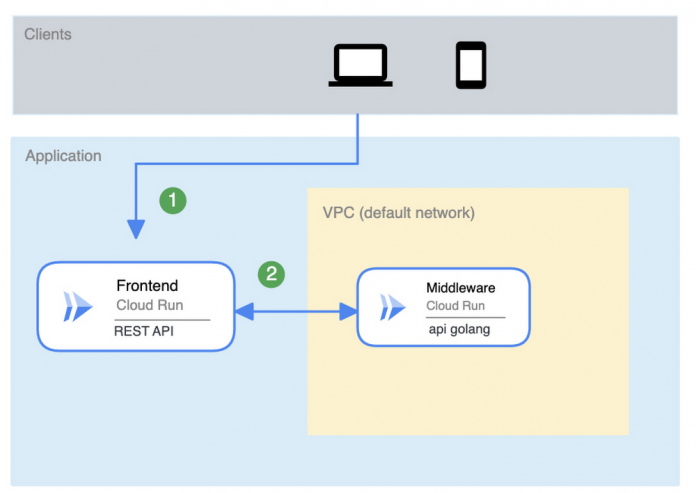

Dependent services are defined as services where one service’s availability is dependent on the other. There are a few common variants of dependent service architecture. The first one to consider is “Serial Services.”

Serial services

Consider an application where successive services are dependent on each other directly:

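If the frontend is directly reliant on the middleware, the system is available only when both services are available, so the likelihood of the entire system becoming unavailable compounds with each service in the chain. The availability of the system becomes the product of the individual services’ availabilities.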

Or formulaically, for any number of dependent serial services:

SLA of the system = (SLA_1) * (SLA_2) * … * (SLA_N)

Here, it is critical to notice that the SLA of the system has gone down from the SLAs of the individual services. That is to say, your architecture choices, namely your architecture’s dependencies, can be more impactful than your provider’s guarantees. Even if you have “3.5” nines for each Cloud Run service, you can only get “3” nines for your system.
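The serial case can be sketched as a simple product. This is an illustration rather than an official calculator: the function name is hypothetical, and the first example assumes “3.5 nines” means two services at 99.95% each. The second example reuses the Compute Engine and Cloud Storage figures from the earlier question, assuming the instance cannot function without the bucket.

```python
from math import prod


def serial_availability(availabilities):
    """Composite availability when every service must be up (fractions in [0, 1])."""
    return prod(availabilities)


# Assumption: frontend and middleware at 99.95% each.
two_services = serial_availability([0.9995, 0.9995])

# Figures from the earlier example: a 99.5% instance depending on a 99.0% bucket.
instance_plus_bucket = serial_availability([0.995, 0.990])

print(f"{two_services:.4%}")
print(f"{instance_plus_bucket:.4%}")
```

Two services at 99.95% come out at roughly 99.90%, and the instance that depends on the bucket lands near 98.5%, below either service's individual figure.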

Parallel services

Another common dependent service architecture is to place your services in parallel, where your app is the composite of all of them. For example, middleware is now dependent on a backend running on Cloud SQL and a cache running on Memorystore. Let’s assume you need both the cache and the backend up and running for the middleware to function.

At first, this design might seem like an improvement over the last one, since the calls to the different services can be made independently. However, the cache or backend failing will carry the same reliability impact as the middleware failing. Though the backend does not rely on the cache, the system does.

As middleware uses each service equally, the overall observable availability of the set of services is the probability that ALL of these services are up at a given time:

Because these systems still depend on each other, we’re stuck at (SLA_1) * (SLA_2) … * (SLA_N) = SLA of the system. And as this list of dependent services gets longer, we see our system’s SLA go down.
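To see how quickly the composite figure erodes as dependencies pile up, the running product can be tabulated. The per-service value of 99.95% is a hypothetical placeholder, not a published SLA for any particular product, and `nines` is an illustrative helper.

```python
from itertools import accumulate
from math import log10
from operator import mul


def nines(availability: float) -> float:
    """Express availability as a count of nines (0.999 is roughly 3)."""
    return -log10(1 - availability)


# Hypothetical: five dependent services, each at 99.95%.
slas = [0.9995] * 5
running = list(accumulate(slas, mul))  # composite after 1, 2, ..., 5 services

for count, composite in enumerate(running, start=1):
    print(f"{count} services: {composite:.4%} ({nines(composite):.2f} nines)")
```

Each added dependency lowers the composite value, regardless of whether the calls are made one after another or side by side.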

Independent services

So… how can we improve the SLA of a system? We need a way to introduce services that can increase the resiliency of an application as a whole. A good example of this is redundancy!

Redundant services

Having independent copies of the service means that as long as one copy is running, your application is running. So what if we duplicated the application — perhaps across multiple regions — and load balanced between them?

Now we have two sets of our application and have introduced a load balancer. The application is down only when the copies in both regions are failing at the same time, so the likelihood of an outage (the “probability of failure”) is the product of each copy’s individual probability of failure.

An improvement on the system! This calculation also maps to our real-life scenarios. In the event of a regional outage, the application is still up and running!
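A rough sketch of the redundancy calculation follows. It assumes the regional copies fail independently of each other and treats the load balancer as one more serial dependency in front of them. All figures and function names are hypothetical.

```python
def redundant_availability(copy_availability: float, copies: int) -> float:
    """Availability of N independent copies where any one copy is enough."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    # The group is down only if every copy is down at the same time.
    return 1 - (1 - copy_availability) ** copies


def behind_load_balancer(lb_availability: float,
                         copy_availability: float,
                         copies: int) -> float:
    """Treat the load balancer as a serial dependency in front of the copies."""
    return lb_availability * redundant_availability(copy_availability, copies)


# Hypothetical: each regional copy at 99.9%, load balancer at 99.99%.
two_regions = redundant_availability(0.999, 2)
with_lb = behind_load_balancer(0.9999, 0.999, 2)

print(f"{two_regions:.4%}")
print(f"{with_lb:.4%}")
```

With these placeholder numbers the redundant pair is far more available than a single copy, and the load balancer in front of it becomes the limiting factor. Correlated failures, such as a bad deploy rolled out to both regions, would break the independence assumption and lower the real figure.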

Real-life considerations

With all this in mind, this raises the question: why can’t we get infinite 9s in our applications then? Theoretically, you could duplicate your application to your heart’s content. But in reality, things are a bit more complicated. There are considerations of capacity and resource budgets, plus the time and effort associated with configuration. And in the end, the bottleneck of reliability often isn’t the infrastructure at all. It’s the network, application logic, and most notably, the real-life implications of people managing complex systems.

Reliability is the responsibility of everyone in engineering: development, product management, operations, SRE, and more. Team members are accountable for knowing their project’s reliability target, risk and error budgets, and for prioritizing and escalating work appropriately.

Ultimately, designing for composite availability is important but is only a piece of the puzzle. It’s important to contextualize your infrastructure with your own customer-defined SLOs, your error budgets, and what operational complexity your teams can take on. Finding a balance is the key for your application’s success and your users’ happiness.

Further reading:

NASA Lessons Learned

Available…or not?

SRE fundamentals: SLAs vs SLOs vs. SLIs

Google Cloud Reliability Architecture Guidance
