Babelfish for Aurora PostgreSQL is a capability for Amazon Aurora PostgreSQL-Compatible Edition that adds an endpoint to PostgreSQL that understands the SQL Server wire protocol Tabular Data Stream (TDS), as well as commonly used T-SQL statements from client applications written for Microsoft SQL Server. Migrating from legacy SQL Server databases can be time-consuming and resource-intensive. When migrating your databases to Amazon Aurora PostgreSQL-Compatible Edition, you can automate the migration of your database schema using the AWS Schema Conversion Tool (AWS SCT) and migration of data using AWS Database Migration Service (AWS DMS), but the majority of effort, time, cost, and risk is in migrating the client application logic. Migrating application logic often requires rewriting a lot of code to use different interfaces, refactoring other code to handle semantic differences, and sometimes redesigning code because of extensive use of proprietary capabilities from the old-guard database providers.

With Babelfish, Aurora PostgreSQL-Compatible Edition understands T-SQL, Microsoft SQL Server’s SQL dialect, and supports the same communications protocol, so your apps that were originally written for SQL Server can work with Aurora with little or no T-SQL code changes. Although Babelfish helps reduce the effort required to modify and move client applications to Aurora, proper analysis is needed to identify the scope of changes.

In this post, we show you how to evaluate the T-SQL queries within the client applications, for assessing the complexity of SQL Server to Babelfish migration.

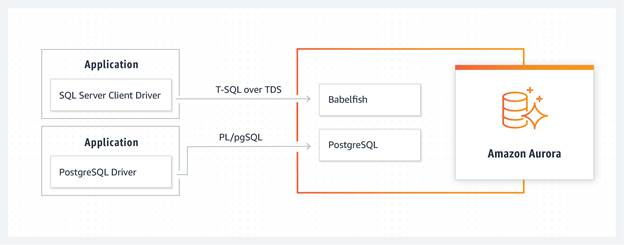

Babelfish architecture overview

With Babelfish, Aurora PostgreSQL-Compatible Edition can support both Postgres PL/pgSQL and T-SQL. A Babelfish instance is bilingual, speaking both protocols and languages with one single cluster. Your client application can connect directly to the TDS endpoint and speak T-SQL. It can also speak to the PostgreSQL endpoint and use PL/pgSQL. Support for T-SQL includes elements such as the SQL dialect, cursors, catalog views, data types, triggers, stored procedures, and functions. This way, you can keep the legacy app mostly as it’s written in T-SQL. If desired, new development can also be done in T-SQL. Over time, you may choose to gradually migrate to PostgreSQL.

The following diagram shows an overview of the Babelfish architecture.

Solution overview

Babelfish Compass is a compatibility assessment tool for Babelfish for PostgreSQL. With Babelfish Compass, you can quickly analyze T-SQL Data Definition Language (DDL), Data Manipulation Language (DML), and SQL code to identify the SQL features that aren’t compatible with Babelfish for PostgreSQL. The reason for analysis is to gather information that helps you decide if you should start a migration project from SQL Server to Babelfish. Babelfish Compass produces an assessment report that lists, in great detail, all of the SQL features found in your SQL/DDL code, and whether or not these are supported by Babelfish.

Note that Babelfish Compass is a standalone tool that doesn’t store any confidential or sensitive information. All information stored is derived from the SQL/DDL scripts that you provide as input. Although Babelfish Compass is part of the Babelfish product, it’s technically separate from Babelfish itself as well as from the Babelfish code, and is located in a separate GitHub repository.

Extract DDL and DML for analysis

Babelfish Compass usage typically starts by creating an assessment report for the analyzed SQL Server scripts. However, you first need to extract the DDL and DML from the SQL Server Database for analysis by Compass.

To extract the DDL from within the SQL Server database, you can use a SQL Server client tool such as Microsoft SQL Server Management Studio (SSMS). Regardless of the tool, we recommend that you export your SQL Server DDL in two phases:

Generate the DDL for the tables without foreign keys, indexes, and constraints.

Generate the DDL for other objects, such as views and stored procedures.

Refer to Using SQL Server Management Studio (SSMS) to migrate to Babelfish for more information about extracting DDL from a SQL Server instance.

Capture T-SQL queries

Apart from server-side DDL, we also need to consider client-side SQL queries during a database migration. By capturing client-side T-SQL queries with SQL Server Profiler, Babelfish Compass can extract the queries from the captured files and perform an assessment on them.

To capture T-SQL statements with SQL Server Profiler, follow these steps:

In SQL Server Profiler, under Trace Properties, use the TSQL_Replay template.

Initiate the tracing in SQL Server Profiler.

Run the client application against the SQL Server database that you are migrating to Babelfish.

When you’re done capturing the client application’s T-SQL, save the captured results (in SQL Server Profiler) by choosing Save As and Trace XML File for Replay.

This creates an XML file containing the captured SQL queries.

Choose Save.

Run Babelfish Compass with the XML file created in previous step as input, and specify the command line option -importfmt MSSQLProfilerXML.

For the details of the various command line options that BabelfishCompass.bat supports, refer to the Babelfish Compass User Guide.

From the command line, navigate to the location of the Babelfish scripts. In our example, the Babelfish Compass version is v2022-12 and the installation path is c:BabelfishCompass.

Run BabelfishCompass.bat with the appropriate parameters. The following code shows the command and its output:

Babelfish Compass extracts the SQL batches and saves them in a file under directory extractedSQL. In this example, the file MyCapture.xml was saved to extractedSQL/MyCapture.xml.extracted.sql.

Because captured SQL often contains several similar statements that only differ in the value of a lookup key, by default Compass deduplicates the captured SQL prior to analysis. Deduplication is performed by masking the values of all string/numeric/hex constants.

To suppress de duplication, specify the command line option -nodedup.

Capture SQL Server Extended Events

In addition to analyzing the output of SQL Server Profiler, Compass also supports processing queries captured through Extended Events.

To capture SQL statements with SQL Server Extended Events, complete the following steps:

Run the client application against the SQL Server database.

Use SQL Server Extended Events to capture SQL queries.

Extract the captured events from the .xel file as .xml files containing <event…>…</event> XML documents.

Note that the .xel files can’t be processed by Compass.

The following code shows one of the ways you can extract the data from an .xel file into XML format. You can save the output of the following query into an .xml file. In this example, we have saved it to ClientQueries.xml:

Run Babelfish Compass with the XML file as input, and specify the command line option – importfmt extendedEventsXML.

For example:

When a report is created, Babelfish Compass automatically performs the following actions:

Open an Explorer window in the directory where the report files are stored

Open the generated assessment report in the default browser

Print the full path name of the report file to stdout

Optionally, you can generate additional cross-reference reports to obtain details about the unsupported features.

You should discuss the results of the Babelfish Compass assessment with the application owner, and interpret the findings in the context of the application to be migrated. In these discussions, it may be possible to descope the migration by identifying outdated or redundant parts of the application that don’t need to be migrated.

Use the assessment results that show the unsupported SQL features in the SQL/DDL/DML code to decide if it’s the right time to start a migration project to Babelfish. If the current version of Babelfish is deemed to be insufficiently compatible with the application in question, we recommended rerunning the analysis when future releases of Babelfish are available, which will provide more functionality.

If proceeding with a migration, modify the SQL/DDL/DML scripts or remove the SQL/DDL/DML statements that are reported as not supported or requiring review. Then invoke the SQL/DDL/DML script against Babelfish (with sqlcmd) to recreate the schema in Babelfish.

Keep in mind that a Babelfish migration involves more than just the server-side SQL/DDL code—for example, it includes interfaces with other systems such as ETL or ELT, SSIS or SSRS, replication tools, and more. These aspects may not be reflected in the server-side view provided by Babelfish Compass.

Conclusion

In this post, we demonstrated how to capture and assess T-SQL code within the client application. We captured the T-SQL from the client application using SQL Server Profiler and passed it to Babelfish Compass to perform the Babelfish compatibility assessment. The assessment generates a detailed report with supported and unsupported SQL Server features on Babelfish. We recommend testing this complete solution in test or development environments prior to production deployment.

Leave any thoughts or questions in the comments section.

About the Authors

Aruna Gangireddy is a Consultant with AWS Professional Services with around 19 years of experience working with different Microsoft technologies. Her specialty is in SQL Server and other database technologies. Aruna has in depth AWS knowledge and expertise in running Microsoft workloads on AWS and enabling customers with homogeneous and heterogeneous migrations between onprem and AWS Cloud.

Jeevan Shetty is a Sr. Database Consultant with the AWS Professional Services Team. He has been supporting and enabling customers to migrate their database from OnPrem data center to AWS cloud and also in migration from commercial database engines to open source database in Amazon.

Read MoreAWS Database Blog