In the rapidly evolving landscape of artificial intelligence and conversational applications, Character.AI stands out as a trailblazer of innovation. Founded in 2021 by former Google AI developers Noam Shazeer and Daniel De Freitas, Character.AI has quickly become a leader in the personalized AI market. The company’s mission is to revolutionize the way we interact with technology by creating AI-powered Characters that offer empathetic and personalized conversations. These Characters range from historical figures to helpers, and even user-generated personas, providing a wide array of interactive experiences for users across its web and mobile web applications. With a significant user base and a valuation soaring to $1 billion, Character.AI’s journey in enhancing user engagement through advanced caching mechanisms is not just a technical endeavor but a testament to its commitment to user satisfaction and innovation.

Overview

At Character.AI, our goal is to deliver an unparalleled user experience, where application responsiveness plays a crucial role in ensuring user engagement. Recognizing the importance of low latency and cost-effectiveness, we embarked on a journey to optimize our caching layer, a critical component of our application’s infrastructure. Our journey led us to adopt Google Cloud’s Memorystore for Redis Cluster, a decision driven by our scaling needs and our quest for efficiency. This blog post aims to share our experiences, challenges, and insights gained throughout this journey, highlighting our transition from initial integration to overcoming scaling hurdles and finally embracing Memorystore for Redis Cluster.

Initial integration

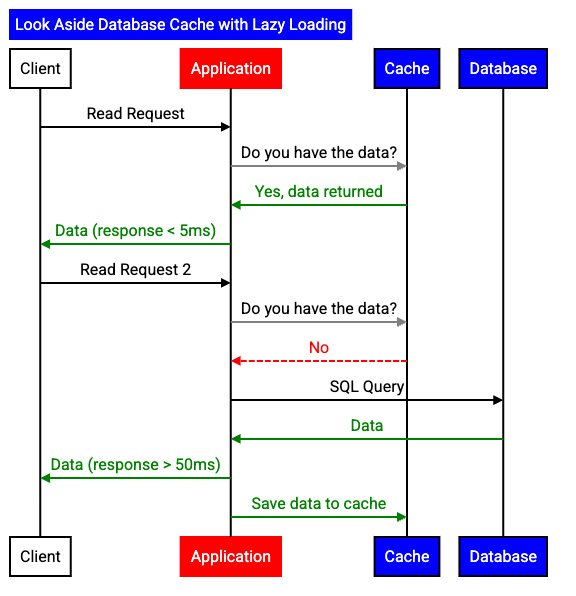

Our initial foray into using Memorystore for caching within our Django application was straightforward, thanks to Django’s versatile caching options. We chose Memorystore for Redis (non-cluster) for its full Redis compatibility, which met our requirements across numerous application pods. The implementation of look-aside lazy caching significantly improved our response times, with Memorystore typically delivering single-digit millisecond responses. This approach, coupled with a high expected cache hit rate, ensured that our application remained responsive and efficient, even as we scaled.

Fig. 1: Lazy loading lookaside caching pattern workflow

Look-aside caching means the application checks the cache first for the requested data. If the data is not in the cache, the application calls another datastore to fetch it. Memorystore generally responds in single-digit milliseconds and the expected cache hit rate is high, so the cost of the extra lookup on a miss pays dividends once the cache warms.

Lazy loading means that data is loaded into the cache on a cache miss instead of pre-loading all possible data ahead of time. The time-to-live (TTL) for each data record is configurable in the application logic, so data expires from the cache after a specified duration. The application lazily loads the data back into the cache the next time it is requested after expiry. This ensures high cache hit rates during active user sessions and fresh data during the next session.
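The look-aside, lazy-loading flow described above can be sketched in a few lines of Python. This is a minimal illustration, not our production code: `TTLCache` is a hypothetical in-memory stand-in for Memorystore, and `get_with_lookaside`, `load_from_db`, and the 300-second default TTL are assumptions made for the example.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Hypothetical in-memory stand-in for a Redis-style cache with per-key TTL."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        entry = self._store.get(key)
        if entry is None:
            return None  # never cached
        expires_at, value = entry
        if self._clock() >= expires_at:
            # TTL elapsed: drop the record so this read counts as a miss.
            del self._store[key]
            return None
        return value

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._store[key] = (self._clock() + ttl_seconds, value)


def get_with_lookaside(
    cache: TTLCache,
    key: str,
    load_from_db: Callable[[str], Any],
    ttl_seconds: float = 300.0,  # illustrative default, not a recommendation
) -> Any:
    """Check the cache first; on a miss, load from the datastore and cache it."""
    value = cache.get(key)
    if value is not None:
        return value  # cache hit: no datastore call
    value = load_from_db(key)  # cache miss: fall back to the primary datastore
    cache.set(key, value, ttl_seconds)  # lazy load with a TTL
    return value
```

In a Django application, `cache.get(key)` and `cache.set(key, value, timeout)` from `django.core.cache` play the roles of the two `TTLCache` methods. Note that this sketch treats `None` as a miss, so `None` results are reloaded on every call.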

Fig. 2: Lazy loading occurs on a cache miss; otherwise, content is served from the cache.

Scaling with a proxy

As our user base grew, we encountered the limitations of a single Memorystore instance. Twemproxy allowed us to create a consistent hash ring to shard out our operations over multiple Memorystore instances.

Fig. 3: Utilizing twemproxy to provide transparent sharding of multiple Memorystore instances

Initially this worked well, but we ran into a few key issues. First, managing, tuning, and monitoring Twemproxy added operational burden for our team and led to several outages as Twemproxy struggled to scale to our needs. Second, and more significant than the performance tuning, the hash ring was not self-healing: when one of the many target Memorystore instances was undergoing maintenance or was overwhelmed, the ring would collapse and our cache hit rate would drop, causing excessive database load. Finally, we experienced acute TCP resource contention in our Kubernetes cluster as we scaled both application and Twemproxy pods.

Scaling in software

Fig. 4: Utilizing application-based sharding patterns with ring hashing

Integrating ring hashing directly into our codebase offered temporary relief but soon revealed its own set of scaling issues.

We concluded that while Twemproxy had many benefits, it was not meeting our reliability needs as we scaled, so we implemented ring hashing in the application layer itself. By this point, we had written additional services as we scaled out our backend systems, and we had to maintain ring implementations in three services: two Python-based services shared a single implementation, and our Golang service had a separate one. Again, this worked better than our previous solutions, but we started running into issues as we scaled further. The per-service ring implementation started running into the 65K maximum connection limit on the indexes, and managing long lists of hosts became burdensome.
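For readers unfamiliar with the technique, here is a minimal sketch of application-layer ring hashing in Python. It is not the implementation we ran in production: the `HashRing` class, the choice of SHA-256 for point placement, the 100 virtual nodes per host, and the host names in the usage note are all assumptions made for illustration.

```python
import bisect
import hashlib
from typing import Dict, Iterable, List


class HashRing:
    """Consistent hash ring that maps keys to cache hosts (illustrative only)."""

    def __init__(self, nodes: Iterable[str], vnodes: int = 100) -> None:
        self._vnodes = vnodes             # virtual points per host, smooths the distribution
        self._points: List[int] = []      # sorted positions on the ring
        self._owner: Dict[int, str] = {}  # ring position -> host
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value: str) -> int:
        digest = hashlib.sha256(value.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add_node(self, node: str) -> None:
        for i in range(self._vnodes):
            point = self._hash(f"{node}#{i}")
            if point in self._owner:
                continue  # already placed (re-added host or a rare collision)
            self._owner[point] = node
            bisect.insort(self._points, point)

    def remove_node(self, node: str) -> None:
        self._points = [p for p in self._points if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def get_node(self, key: str) -> str:
        if not self._points:
            raise LookupError("hash ring is empty")
        # The first point clockwise from the key's position owns the key.
        idx = bisect.bisect_right(self._points, self._hash(key)) % len(self._points)
        return self._owner[self._points[idx]]
```

With a ring such as `HashRing(["cache-a", "cache-b", "cache-c"])`, calling `remove_node("cache-b")` only remaps the keys that `cache-b` owned; keys on the other hosts stay where they were. The sketch also hints at the maintenance cost described above: every service needs the same host list and the same hashing scheme, and every application pod holds connections to every host on the ring.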

Migration to Memorystore for Redis Cluster

The release of Memorystore for Redis Cluster from Google Cloud in 2023 marked a turning point in our scaling journey. This service allowed us to leverage sharding logic closely integrated with the Redis storage layer, simplifying our architecture and eliminating the need for external sharding mechanisms. The transition to Memorystore for Redis Cluster not only enhanced our application’s scalability but also maintained high cache hit rates and predictable loads on our primary database layer as we grew.
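To make "sharding logic closely integrated with the Redis storage layer" more concrete: in the open-source Redis Cluster specification, every key maps to one of 16384 hash slots using CRC16 of the key modulo 16384, and only the text inside the first non-empty `{...}` hash tag is hashed when one is present. The sketch below reproduces that mapping in Python for illustration; the function name `key_hash_slot` is ours, and in practice a cluster-aware Redis client performs this calculation and routes commands to the right shard.

```python
import binascii

HASH_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def key_hash_slot(key: str) -> int:
    """Return the Redis Cluster hash slot for a key, honoring {hash tags}."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        # Only a non-empty tag between the first "{" and the next "}" counts.
        if end != -1 and end > start + 1:
            data = data[start + 1:end]
    # binascii.crc_hqx with an initial value of 0 is the CRC16 (XMODEM) variant.
    return binascii.crc_hqx(data, 0) % HASH_SLOTS
```

Keys that share a hash tag, such as `{user:42}:profile` and `{user:42}:settings`, land in the same slot, which is what allows multi-key operations on them within a cluster.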

Fig. 5: Memorystore highly available sharding architecture

Character.AI’s journey with Memorystore for Redis Cluster exemplifies our commitment to leveraging cutting-edge technology to enhance the user experience. With Memorystore for Redis Cluster, we no longer have to worry about manually maintaining proxies or hash rings. Instead, we can rely on a fully managed solution to deliver predictable low latencies and zero-downtime scalability. Offloading this management overhead to Google Cloud allows us to focus on our core technologies and user experience, so we can deliver transformative AI experiences to our users worldwide. As we continue to grow and evolve, we remain focused on exploring new technologies and strategies to further enhance our application’s performance and reliability, ensuring that Character.AI remains at the forefront of the AI industry.

Get Started

Learn more about Memorystore for Redis Cluster.

Start a free trial today! New Google Cloud customers get $300 in credits.
