Developers often need to perform bulk updates to modify items in their Amazon DynamoDB tables. Reasons for performing bulk updates include:

Adding or updating attributes – backfilling a Time to Live (TTL) value, or populating an attribute to support a new access pattern or global secondary index (GSI). For example, you might enrich existing data with new attributes from another dataset, such as a machine learning model that provides personalization data. Another common use case is an insurance company finding all items with a specific policy type and updating them because of regulatory changes.

Deleting items – proactively locating and deleting items that are no longer required in the table, without using the native TTL functionality. For example, gaming customers need to find and delete stale player statistics.

DynamoDB doesn’t provide native functionality to perform bulk updates. Developers must build and manage their own solution. There are many possible approaches to this, including using Amazon EMR (see Backfilling an Amazon DynamoDB Time to Live (TTL) attribute with Amazon EMR) or AWS Glue.

In this post, we provide a solution that you can use to efficiently perform a bulk update on DynamoDB tables using AWS Step Functions – a serverless orchestration service that lets you integrate with AWS Lambda functions and other AWS services. You can modify, deploy, and test the solution as needed to meet the requirements of your own bulk update.

The estimated upper limit for this solution is a DynamoDB table containing 180 billion items with an average item size of 4 KB. If your table is larger than this, or its average item size is greater than 4 KB, check the limitations section of this post; an Amazon EMR or AWS Glue-based approach may be better suited to you.

AWS Step Functions

This solution uses Step Functions because:

It’s serverless

It enables you to build large-scale parallel workloads to perform tasks, including queuing and restarting after failure

It allows you to modify the speed and associated demand on tables during a bulk update

It’s likely to be familiar to developers already working with AWS Lambda

It allows you to monitor progress via the AWS Management Console, AWS Command Line Interface (AWS CLI), or SDK

Solution overview

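An efficient bulk update should distribute work across DynamoDB table partitions, because each partition has its own throughput limits and concentrating work on a single partition can cause throttling.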

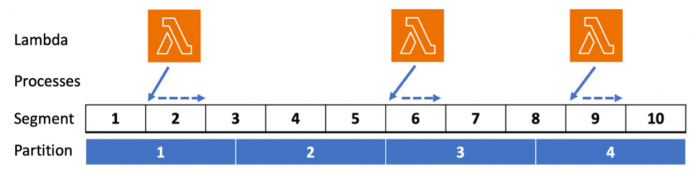

Parallel scan allows you to logically break a table into a specified number of segments. This solution processes these segments in a random order to spread the work across the underlying table partitions. Step Functions assigns segments to concurrent Lambda function invocations, and each invocation iterates over and updates the items in its assigned segment.

The following diagram illustrates three Lambda functions, each processing one randomly chosen segment. There are ten segments covering a DynamoDB table made up of four partitions.
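The per-segment work can be sketched with the DynamoDB Scan API. This is a minimal sketch, assuming `client` is a boto3 DynamoDB client (`boto3.client("dynamodb")`) or any object exposing the same `scan` method; the helper name `scan_segment` is hypothetical and not taken from the solution's source code. Each Scan call returns at most 1 MB of data, so the loop follows `LastEvaluatedKey` until the segment is exhausted.

```python
from typing import Any, Dict, Iterator


def scan_segment(client: Any, table_name: str, segment: int, total_segments: int,
                 consistent_read: bool = True) -> Iterator[Dict[str, Any]]:
    """Hypothetical helper: yield every item in one parallel-scan segment."""
    kwargs: Dict[str, Any] = {
        "TableName": table_name,
        "Segment": segment,                # zero-based segment number for this worker
        "TotalSegments": total_segments,   # must be identical for every worker
        "ConsistentRead": consistent_read,
    }
    while True:
        page = client.scan(**kwargs)
        yield from page.get("Items", [])
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            break  # no more pages in this segment
        kwargs["ExclusiveStartKey"] = last_key  # resume where the previous page ended
```

Because every worker passes the same `TotalSegments` and a different `Segment`, the workers together read the whole table exactly once without coordinating with each other.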

The following diagram illustrates the solution architecture.

The solution contains the following key components:

Step Functions – This serverless orchestration service lets you integrate with Lambda functions and other AWS services to build business-critical applications. Step Functions controls the bulk update process using a Distributed Map state.

Lambda – This compute service lets you run code without provisioning or managing servers. There are two Lambda functions in this solution that are invoked and monitored by Step Functions:

GenerateSegments – This function is invoked one time to generate the desired number of segments and randomly sort them for processing.

ScanAndUpdateTable – This function is invoked many times. Each invocation updates all of the items in an assigned segment.

Amazon Simple Storage Service (Amazon S3) – This object storage service offers industry-leading scalability, data availability, security, and performance. Amazon S3 stores the bulk update job configuration.

DynamoDB – This fully managed, serverless, NoSQL database is designed to run high-performance applications at any scale. The solution references two tables: one for reading items to be processed, and one to store the items after modification. These can be the same table to perform an update in place, or different tables for a migration with update.
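A GenerateSegments-style step could be as simple as shuffling the segment numbers. The sketch below is hypothetical: the function name, the `seed` argument, and the shape of each work item are assumptions for illustration, and the solution's actual function may format its output differently. Segment numbers are zero-based, and the range check mirrors the TotalSegments maximum of 1,000,000 described in the Solution parameters section.

```python
import random
from typing import List, Optional


def generate_segments(total_segments: int, seed: Optional[int] = None) -> List[dict]:
    """Hypothetical GenerateSegments sketch: one work item per segment, shuffled."""
    if not 1 <= total_segments <= 1_000_000:
        raise ValueError("total_segments must be between 1 and 1,000,000")
    segments = list(range(total_segments))  # parallel scan segments are 0-based
    random.Random(seed).shuffle(segments)   # random order spreads concurrent work
    # Assumed work-item shape; each item would be handed to one ScanAndUpdateTable call.
    return [{"segment": s, "total_segments": total_segments} for s in segments]
```

Adjacent segments tend to cover adjacent parts of the key space, so processing them in random order makes it less likely that concurrent invocations all land on the same partition.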

Solution parameters

When you deploy the solution, you have control over a set of parameters:

SourceTable – The name of the DynamoDB table containing items for update. This table must exist in the same account and Region as the deployed solution.

ConsistentRead – Whether the process uses strongly consistent reads. Eventually consistent reads cost half as much as strongly consistent reads. The limitations section contains guidance for this parameter. The default is to use strongly consistent reads.

TotalSegments – The number of logical segments to break the SourceTable into. Increasing this value means more Lambda function invocations are needed to process the table, but each invocation processes fewer items and completes in less time. Every invocation must complete within the maximum Lambda duration (15 minutes at the time of writing). The default is 1,000 and the maximum is 1,000,000. The benchmark and estimations section contains guidance for this parameter.

DestinationTable – The name of the DynamoDB table where updated items are written. This can be the same as SourceTable. This table must exist in the same account and Region as the deployed solution.

ParallelLambdaFunctions – The maximum number of concurrent Lambda function invocations. Increasing this value makes the bulk update progress faster, but places more demand on the tables. The default is 10.

RateLimiter – The maximum number of items each Lambda invocation processes per second, or -1 for no rate limiting, in which case each invocation processes items as fast as possible. The default is no rate limiting. The benchmark and estimations section contains guidance for this parameter.
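One way a per-invocation RateLimiter could work is to space items evenly, one every 1 / RateLimiter seconds. This is a hypothetical sketch and not necessarily how the solution implements the parameter; `rate_limited` is an illustrative name, and `clock` and `sleep` are injectable only so the pacing can be tested without waiting.

```python
import time
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def rate_limited(items: Iterable[T], max_per_second: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> Iterator[T]:
    """Hypothetical limiter: yield items no faster than max_per_second (-1 = unlimited)."""
    if max_per_second == -1:
        yield from items  # no rate limiting: process as fast as possible
        return
    if max_per_second <= 0:
        raise ValueError("max_per_second must be positive or -1")
    interval = 1.0 / max_per_second
    next_slot = clock()
    for item in items:
        now = clock()
        if now < next_slot:
            sleep(next_slot - now)  # wait until this item's time slot opens
        yield item
        # Schedule the following slot; if we are running late, don't try to catch up.
        next_slot = max(next_slot, now) + interval
```

A worker would then iterate `rate_limited(items, 50)` instead of `items` to cap itself at 50 items per second.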

The ScanAndUpdateTable Lambda contains a Python function named process_item that you modify to implement the specifics of your item update. This is detailed in the Modify the ScanAndUpdateTable Lambda section.

Benchmark and estimations

I benchmarked the solution by updating 100 million items in a DynamoDB table.

The benchmark showed that each Lambda invocation, if not rate limited, processes approximately 200 items per second. Let's call this the rate and use it to make some estimates:

| Estimate | Formula |
|---|---|
| Additional table writes per second (WCU) | ParallelLambdaFunctions * rate * average item KB |
| Additional table reads per second (RCU); halve this for eventually consistent reads | (ParallelLambdaFunctions * rate * average item KB) / 4 |
| Total completion time (seconds) | estimated number of items in the table / (ParallelLambdaFunctions * rate) |
| Minimum value for the TotalSegments parameter | estimated number of items in the table / (desired Lambda invocation duration in seconds * rate) |
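The formulas in the table above translate directly into code. This sketch is illustrative: `estimate` and its argument names are hypothetical, the 600-second desired invocation duration is an assumed example value (chosen to stay under the 900-second Lambda maximum), and, like the table, it ignores DynamoDB's per-item rounding up to the next 1 KB (writes) or 4 KB (reads).

```python
import math
from dataclasses import dataclass


@dataclass
class Estimate:
    wcu: float                 # additional write capacity units per second
    rcu: float                 # additional read capacity units per second
    completion_seconds: float
    min_total_segments: int


def estimate(items_in_table: int, avg_item_kb: float, parallel_lambda_functions: int,
             rate: float = 200, consistent_read: bool = True,
             invocation_seconds: float = 600) -> Estimate:
    """Hypothetical helper applying the benchmark formulas."""
    throughput = parallel_lambda_functions * rate  # items per second across all invocations
    wcu = throughput * avg_item_kb
    rcu = throughput * avg_item_kb / 4
    if not consistent_read:
        rcu /= 2  # eventually consistent reads cost half
    return Estimate(
        wcu=wcu,
        rcu=rcu,
        completion_seconds=items_in_table / throughput,
        # Round up so no invocation needs longer than invocation_seconds.
        min_total_segments=math.ceil(items_in_table / (invocation_seconds * rate)),
    )
```

For 100 million 1 KB items with the default 10 parallel functions and strongly consistent reads, these formulas give about 2,000 additional WCU, 500 additional RCU, roughly 50,000 seconds (about 14 hours) to complete, and at least 834 segments for 600-second invocations.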

If the average size of your items is 4 KB or greater, set the RateLimiter parameter to avoid creating hot partitions. A single partition supports at most 1,000 WCU per second, and each write consumes 1 WCU per KB, so estimate your maximum possible rate as 1000 / average item size in KB. Use your chosen rate in the estimations above. You can also set the RateLimiter to obtain predictable throughput per Lambda invocation.

Limitations

Multiplying the rate by the maximum value for TotalSegments (1,000,000) and the maximum Lambda function duration of 900 seconds (at the time of writing) gives the estimated upper limit for this solution. The following table shows some examples:

| Average item size | Calculated rate (items per second) | Estimated upper limit |
|---|---|---|
| 1 KB | 200 (no rate limiting) | 180 billion items |
| 5 KB | 200 (no rate limiting) | 180 billion items |
| 100 KB | 10 | 9 billion items |
| 200 KB | 5 | 4.5 billion items |
| 400 KB | 2 | 1.8 billion items |
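The rows above can be reproduced by combining the per-partition rate formula (1000 / average item size in KB, rounded down and capped at the benchmark rate of 200) with the limits just described. The constant and function names are hypothetical; the values are the ones stated in this post.

```python
import math

MAX_TOTAL_SEGMENTS = 1_000_000  # TotalSegments maximum
MAX_LAMBDA_SECONDS = 900        # Lambda maximum duration at the time of writing
BENCHMARK_RATE = 200            # items per second per invocation, unthrottled
PARTITION_WCU_LIMIT = 1000      # write capacity units per partition per second


def max_rate(avg_item_kb: float) -> int:
    """Highest per-invocation rate that stays within one partition's write limit."""
    return min(BENCHMARK_RATE, math.floor(PARTITION_WCU_LIMIT / avg_item_kb))


def upper_limit(avg_item_kb: float) -> int:
    """Estimated maximum number of items the solution can process."""
    return max_rate(avg_item_kb) * MAX_TOTAL_SEGMENTS * MAX_LAMBDA_SECONDS
```

For example, `upper_limit(100)` is 10 * 1,000,000 * 900, or 9 billion items, matching the 100 KB row.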

The solution uses a parallel scan to process the SourceTable. DynamoDB doesn't provide snapshot isolation for a scan operation, even when the ConsistentRead parameter is set to true: the reads in a scan aren't guaranteed to see a consistent snapshot of the table as it was when the scan was requested.

Consider any race conditions between your bulk update and other workloads updating items in your table. Using strongly consistent reads can reduce the likelihood of a race, but doesn't eliminate it. If race conditions are a concern, pause other workloads that update items during the bulk update, or invest additional engineering effort to remove the race condition, for example by using optimistic locking.
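Optimistic locking can be implemented with a version attribute and a conditional write. This is a minimal sketch, assuming a boto3 DynamoDB client and items that carry a numeric `version` attribute; the function name and the `status` and `version` attribute names are hypothetical. `status` is a DynamoDB reserved word, which is why both names go through `ExpressionAttributeNames` placeholders.

```python
from typing import Any, Dict


def update_with_version_check(client: Any, table_name: str, key: Dict[str, Any],
                              expected_version: int, new_status: str) -> bool:
    """Hypothetical helper: write only if no one changed the item since it was read.

    Returns False instead of raising when the version no longer matches."""
    try:
        client.update_item(
            TableName=table_name,
            Key=key,
            UpdateExpression="SET #status = :status, #version = :next",
            ConditionExpression="#version = :expected",
            ExpressionAttributeNames={"#status": "status", "#version": "version"},
            ExpressionAttributeValues={
                ":status": {"S": new_status},
                ":expected": {"N": str(expected_version)},
                ":next": {"N": str(expected_version + 1)},
            },
        )
        return True
    except client.exceptions.ConditionalCheckFailedException:
        return False  # another writer won the race: re-read and retry, or skip the item
```

Other workloads must also increment `version` on every write for this check to detect their changes.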

Each single-item update is atomic, but the solution doesn't use DynamoDB transactions. Don't use this solution if you need transactional guarantees, for example to ensure that multiple items are either all updated successfully or not updated at all.

The Step Functions execution can fail, for example if the update is throttled and the SDK's automatic retries are exhausted. You can redrive the execution from the point of failure (a good strategy), but some items will be reprocessed. Make sure your item updates are idempotent so that reprocessing completes safely.
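One way to make an update idempotent is to write a value only if it isn't already present. This minimal sketch, assuming a boto3 DynamoDB client, uses the `if_not_exists` update function so that a redriven execution leaves already-processed items unchanged; the function name is hypothetical, and `processed_at` matches the example attribute used later in this post.

```python
from datetime import datetime
from typing import Any, Dict


def mark_processed_idempotently(client: Any, table_name: str, key: Dict[str, Any],
                                now: datetime) -> None:
    """Hypothetical helper: set processed_at only the first time an item is processed.

    if_not_exists keeps any existing value, so running this twice on the same
    item leaves the first timestamp in place."""
    client.update_item(
        TableName=table_name,
        Key=key,
        UpdateExpression="SET processed_at = if_not_exists(processed_at, :now)",
        ExpressionAttributeValues={":now": {"S": now.isoformat()}},
    )
```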

Clone, modify and deploy the solution

I will demonstrate the steps to clone the solution, modify the ScanAndUpdateTable Lambda function, and deploy and run your bulk update.

Clone the solution

Run the following command to clone the solution:

Change into the directory for this solution:

Modify the ScanAndUpdateTable Lambda

The file functions/scan-and-update-table.py contains source code for the ScanAndUpdateTable Lambda function. It contains a Python function named process_item. Using your preferred text editor, modify and save this function to implement your item update logic.

There are several update examples included in the function that you can use. These include adding an additional attribute to your items, deleting selected items and copying items from the source table to the destination table.

For this post, let’s modify the function to add a processed_at attribute and save the item to the DestinationTable using the UpdateItem operation.
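A modified process_item might look roughly like the following. This is a hedged sketch: the solution's real process_item signature is not shown in this post, so the arguments here (a low-level boto3 DynamoDB client, the destination table name, and the key attribute names `pk` and `sk`) are assumptions to adapt to your own table.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Tuple


def process_item(item: Dict[str, Any], client: Any, destination_table: str,
                 key_attributes: Tuple[str, ...] = ("pk", "sk")) -> None:
    """Hypothetical signature: add a processed_at timestamp to one item."""
    # Build the primary key from the scanned item; "pk"/"sk" are assumed names.
    key = {name: item[name] for name in key_attributes if name in item}
    client.update_item(
        TableName=destination_table,
        Key=key,
        UpdateExpression="SET processed_at = :now",
        ExpressionAttributeValues={
            ":now": {"S": datetime.now(timezone.utc).isoformat()},
        },
    )
```

UpdateItem creates the item if it doesn't exist, so if DestinationTable differs from SourceTable, this version would write items containing only the key and processed_at; a copy with update would use PutItem with the full, modified item instead.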

Deploy the solution

To deploy the solution, you need the AWS CLI and the AWS Serverless Application Model (AWS SAM) CLI installed.

Once these are installed, start a guided deployment with `sam deploy --guided`, providing your values for the parameters when prompted.

The solution deploys and creates all the necessary resources, including AWS Identity and Access Management (IAM) roles, and then displays the Amazon Resource Name (ARN) of the deployed state machine.

Start and monitor your bulk update

You can start and monitor your bulk update using the AWS CLI or the AWS Management Console.

To start your bulk update, run the following AWS CLI command, replacing <arn> with the ARN of your deployed state machine:

Alternatively, open your deployed state machine in the Step Functions console and choose Start execution.
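If you prefer the SDK, boto3's Step Functions client offers `start_execution`, the counterpart of `aws stepfunctions start-execution`. This sketch assumes `sfn_client` is `boto3.client("stepfunctions")`; the wrapper name and the optional execution name are illustrative, and it passes no input because this post doesn't describe any execution input (the job configuration lives in Amazon S3).

```python
from typing import Any, Dict, Optional


def start_bulk_update(sfn_client: Any, state_machine_arn: str,
                      name: Optional[str] = None) -> str:
    """Hypothetical wrapper: start one execution and return its execution ARN."""
    kwargs: Dict[str, Any] = {"stateMachineArn": state_machine_arn}
    if name:
        kwargs["name"] = name  # optional human-readable execution name
    response = sfn_client.start_execution(**kwargs)
    return response["executionArn"]
```

Keep the returned execution ARN; you need it to look up the Map Run when monitoring progress.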

The console displays a high-level overview of the running execution. Choose the Map Run link to open a page showing the progress of the bulk update.

The processing status of each segment is shown. You can modify the speed of the bulk update (and associated demand on the DynamoDB tables) at any time by updating the Maximum concurrency value on this page.

You can also monitor and update the execution with the AWS CLI using the describe-execution, list-map-runs, describe-map-run, and update-map-run commands.
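The same operations are available in boto3 as `list_map_runs`, `describe_map_run`, and `update_map_run`. This sketch assumes `sfn_client` is `boto3.client("stepfunctions")` and that the execution contains a single Distributed Map (the one running ScanAndUpdateTable); the function name is hypothetical. Changing `maxConcurrency` is the SDK equivalent of editing Maximum concurrency on the Map Run page.

```python
from typing import Any, Dict, Optional


def map_run_progress(sfn_client: Any, execution_arn: str,
                     new_max_concurrency: Optional[int] = None) -> Dict[str, int]:
    """Hypothetical helper: report segment counts and optionally change concurrency."""
    map_runs = sfn_client.list_map_runs(executionArn=execution_arn)["mapRuns"]
    if not map_runs:
        return {}  # the Distributed Map state hasn't started yet
    map_run_arn = map_runs[0]["mapRunArn"]  # assumes one Distributed Map per execution
    if new_max_concurrency is not None:
        # Raise to speed up the bulk update, lower to reduce demand on the tables.
        sfn_client.update_map_run(mapRunArn=map_run_arn,
                                  maxConcurrency=new_max_concurrency)
    counts = sfn_client.describe_map_run(mapRunArn=map_run_arn)["itemCounts"]
    return {k: counts[k] for k in ("total", "succeeded", "failed", "running", "pending")}
```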

Clean up

Delete all objects in the S3 configuration bucket and use the sam delete command to delete all resources created by this solution.
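CloudFormation can't delete a bucket that still contains objects, which is why the bucket must be emptied before running sam delete. This sketch assumes `s3_client` is `boto3.client("s3")` and that you look up the configuration bucket name from your deployed stack; the function name is hypothetical. It deletes current objects only: if versioning is enabled on the bucket, you would also need to delete object versions (for example, via `list_object_versions`), which this sketch doesn't handle.

```python
from typing import Any


def empty_bucket(s3_client: Any, bucket: str) -> int:
    """Hypothetical helper: delete every current object and return how many were deleted."""
    deleted = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # A page holds at most 1,000 keys, the delete_objects per-request limit.
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
            deleted += len(keys)
    return deleted
```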

Conclusion

In this post, we provided a solution to efficiently perform a bulk update on DynamoDB tables using Step Functions. You can deploy the solution and modify it to meet the needs of your bulk updates. Test any modifications before running them against a production table, and make sure a suitable backup of the table is in place.

The provided solution spreads the bulk update processing across your table to improve performance and minimize impact on other workloads. You can control the speed at which the bulk update runs and the associated demand on your tables at any time during the update.

For tables with more than 180 billion items, or with an average item size greater than 4 KB, check the limitations section; an Amazon EMR or AWS Glue-based approach may be better suited.

To get started with this solution, follow the steps provided in this post or visit the solution page.

To learn more about DynamoDB, please see the DynamoDB Developer Guide.

About the Author

Chris Gillespie is a UK-based Senior Solutions Architect. He spends most of his work time with fast-moving “born in the cloud” customers. Outside of work, he fills his time with family and trying to get fit.

AWS Database Blog