Microservices architectures provide development teams a mechanism to reduce time to market, and enable hyper-scaling of business-critical applications. They also provide the flexibility to make technology decisions best suited for the needs of each service. This includes the selection of a purpose-built database to store and retrieve data in a highly scalable and efficient manner.

AWS purpose-built databases are highly available, secure, and reliable managed services that cover database engine types such as relational, graph, document, key-value, and more. Amazon MemoryDB for Redis is compatible with Redis and provides microsecond read and single-digit millisecond write latencies. Backed by a Multi-AZ transaction log, MemoryDB for Redis provides durability and high availability for high-performance workloads. MemoryDB for Redis enables mission-critical data storage for in-memory key-value workloads, with added flexibility using Redis data structures.

Redis is a popular choice for low-latency, high-throughput requirements within containerized workloads, and it has become a preferred in-memory layer for many enterprise organizations and startups. This post shows example Python microservices running on Amazon Elastic Container Service (Amazon ECS) with MemoryDB for Redis as the primary database. We include sample patterns for key-value access, service-to-service communication, and high-velocity data ingestion using MemoryDB for Redis. The sample application provides durable storage for user profiles, event-driven streaming data, and in-memory aggregations of profile activity.

Overview of the architecture

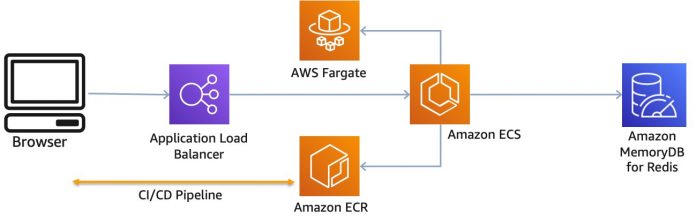

The architecture demonstrates container-based microservices deployed to Amazon ECS. The application code is packaged into container images hosted in Amazon Elastic Container Registry (Amazon ECR) and configured using an ECS task definition. The microservices interact with MemoryDB for Redis using redis-py-cluster, an open-source cluster-aware Redis client. The application code also uses Flask to expose a simple API. Although the application is built using a shared MemoryDB for Redis cluster, microservices patterns allow independent clusters to be used in a database-per-service model. MemoryDB for Redis supports ACLs, which can restrict a user's access to certain keys and commands based on an access string.

Sample application

The following sample application shows how different services can communicate with MemoryDB for Redis as a low-latency in-memory database. We deploy a Multi-AZ cluster with automatic failover, including two shards to handle writes and two replica nodes for read scalability and failover. The clustered architecture of MemoryDB for Redis makes it easy to run online scaling operations, such as adding shards, as the workload grows.

In this post, we demonstrate three use cases: how to use MemoryDB for Redis as a primary database for your application, for data streaming, and for in-memory aggregation and reporting.

Primary database

We use MemoryDB for Redis as the primary database for user profiles because the data is stored durably. The following code uses a hash data structure in MemoryDB for Redis to durably persist and retrieve user profiles via the profile microservice. A hash in Redis is an unordered collection of fields and values. Hashes can represent objects such as shopping carts, posts, and product catalogs. In the following example code, we use the HMSET and HGETALL commands:
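As a minimal sketch of the hash pattern described here, the helpers below write and read a profile through any client object that exposes redis-py style `hset` and `hgetall` methods, such as a cluster-aware client created with `decode_responses=True`. The `profile:<id>` key scheme and the function names are assumptions for illustration, not the sample application's actual code. The sketch uses multi-field HSET, which supersedes HMSET (deprecated since Redis 4.0).

```python
from typing import Any, Dict, Optional


def profile_key(profile_id: str) -> str:
    """Build the hash key for a profile (hypothetical naming scheme)."""
    return f"profile:{profile_id}"


def save_profile(client: Any, profile_id: str, fields: Dict[str, str]) -> None:
    """Write all profile fields into a single hash with one multi-field HSET."""
    client.hset(profile_key(profile_id), mapping=fields)


def load_profile(client: Any, profile_id: str) -> Optional[Dict[str, str]]:
    """Return every field of the profile hash (HGETALL), or None if it is absent."""
    data = client.hgetall(profile_key(profile_id))
    # HGETALL returns an empty mapping for a missing key.
    return data or None
```

Because each profile lives under a single key, all of its fields land on the same shard, so the read and the write are each one round trip.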

Data streaming

We use MemoryDB for Redis to ingest profile events asynchronously from the profile microservice. The events are stored durably and consistently. The sample application uses the Redis Streams data structure to capture high-velocity events and scale reads using consumer groups. A stream is an append-only log data structure that can collect large volumes of data. Consumer groups allow many clients to coordinate reads and process data from the stream. In our example, the profile service emits events associated with profile actions. Other use cases for Redis Streams include message brokers, message queues, and website activity tracking. The following example code uses the XREADGROUP and XACK commands:
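A consumer-group read loop along these lines could look like the sketch below. It assumes a redis-py style client exposing `xreadgroup` and `xack`, and that the consumer group already exists (created with XGROUP CREATE). The stream name, group name, and `consume_once` helper are hypothetical and are not taken from the sample application.

```python
from typing import Any, Callable, Dict

STREAM = "profile-events"  # hypothetical stream name
GROUP = "aggregators"      # hypothetical consumer group name


def consume_once(
    client: Any,
    consumer: str,
    handler: Callable[[Dict[str, str]], None],
    count: int = 10,
    block_ms: int = 1000,
) -> int:
    """Read one batch for this consumer and acknowledge each handled entry."""
    # The special ID ">" requests entries not yet delivered to any consumer
    # in the group (XREADGROUP).
    batches = client.xreadgroup(
        GROUP, consumer, {STREAM: ">"}, count=count, block=block_ms
    )
    handled = 0
    for _stream, entries in batches or []:
        for entry_id, fields in entries:
            handler(fields)
            # Acknowledge only after the handler succeeds (XACK), so entries
            # that fail stay in the group's pending list for a later retry.
            client.xack(STREAM, GROUP, entry_id)
            handled += 1
    return handled
```

Running several consumers with distinct `consumer` names against the same group spreads the entries across them, which is how consumer groups scale reads.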

In-memory aggregation and reporting

You can also use MemoryDB for Redis to retrieve and model in-memory aggregations. MemoryDB for Redis stores and updates these aggregations durably and consistently with low latency. In this example, all the events from the profile stream are aggregated into a sorted set data structure to return in-memory aggregates of profile events, such as the number of times each profile has been read through the profile service. Deleted profiles are removed as well. Sorted set commands retrieve elements by rank or by score range, where the score is assigned to each member on insertion. Example use cases include leaderboards and priority queues. The following example code uses the ZREVRANGEBYSCORE command:
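The aggregation described here can be sketched as follows, assuming a redis-py style client with `zincrby`, `zrem`, and `zrevrangebyscore`. The sorted set name and the event fields (`action`, `profile_id`) are assumptions made for illustration; the sample application may model its events differently.

```python
from typing import Any, Dict, List, Tuple

ACTIVITY_KEY = "profile-reads"  # hypothetical sorted set name


def apply_event(client: Any, event: Dict[str, str]) -> None:
    """Fold one profile event into the sorted set."""
    profile_id = event["profile_id"]
    if event["action"] == "read":
        # Each read raises the profile's score by one (ZINCRBY).
        client.zincrby(ACTIVITY_KEY, 1, profile_id)
    elif event["action"] == "delete":
        # Deleted profiles drop out of the aggregate (ZREM).
        client.zrem(ACTIVITY_KEY, profile_id)


def top_profiles(client: Any, limit: int) -> List[Tuple[str, float]]:
    """Return up to `limit` profiles with the highest read counts."""
    # Walk the whole score range from highest to lowest and keep the
    # first `limit` members (ZREVRANGEBYSCORE ... LIMIT 0 limit).
    return client.zrevrangebyscore(
        ACTIVITY_KEY, "+inf", "-inf", start=0, num=limit, withscores=True
    )
```

Keeping the counts in a sorted set means the ranking is maintained on write, so the reporting query does not need to sort anything at read time.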

Solution overview

The following diagram shows the resources created and the architecture used. The Amazon Virtual Private Cloud (Amazon VPC) has private subnets in which the container-based microservices and the MemoryDB for Redis nodes are deployed. The resources communicate using the Configuration Endpoint exposed by the MemoryDB for Redis cluster. Container images for the application code are stored in Amazon ECR. Amazon CloudWatch is used for logging and monitoring. AWS Secrets Manager is used to store configuration data. The microservices are made available using an Application Load Balancer.

Test the app

After successful deployment, we run API calls against the microservices and observe the output. We use the Application Load Balancer DNS endpoint to run the sample commands.

Profile service

The following example code creates profiles:

The following is the example output:

To read a profile, we enter the following code:

We get the following output:

To delete a profile, we enter the following code:

We get the following output:

Reporting service

Each time a profile is modified or read, the profile service emits an event to a Redis stream. The aggregator service reads this stream and updates a Redis sorted set that maintains an aggregate count of profile operations. We run multiple reads against profiles to build up the aggregations. We delete a profile to see it removed from the returned list.

The reporting service reports on these profile operations. We retrieve the results of the profile commands using the following request. The 3 in the example request returns the three most-read profiles:

We get the following output:

View the generated resources

The following screenshot shows our MemoryDB for Redis cluster.

The following screenshot shows our Amazon ECR repositories.

The following screenshot shows our ECS cluster and services.

Getting started with MemoryDB for Redis

We can create a MemoryDB for Redis cluster using the latest version of the AWS Command Line Interface (AWS CLI). The following example creates a MemoryDB subnet group:

The following example creates a MemoryDB for Redis user:

The following example creates a MemoryDB for Redis ACL, and adds the created user:

The following example creates a MemoryDB for Redis cluster in the created subnet group and associates the created ACL. The security group specified should allow traffic from authorized resources on the port chosen for the cluster. The default port on MemoryDB for Redis is 6379. The example cluster has 1 shard with 2 replicas per shard, using a db.r6g.large node type.
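The same four steps can also be scripted in Python with boto3 rather than the AWS CLI. The sketch below only assembles the request parameters for the `memorydb` client operations `create_subnet_group`, `create_user`, `create_acl`, and `create_cluster`, then submits them in order. All resource names (`demo-subnets`, `demo-user`, `demo-acl`, `demo-cluster`) are placeholders, and the access string shown grants full access, so a real deployment would likely narrow it.

```python
from typing import Any, Dict, List, Tuple

Request = Tuple[str, Dict[str, Any]]


def build_requests(
    subnet_ids: List[str], security_group_ids: List[str], password: str
) -> List[Request]:
    """Assemble the MemoryDB API calls in the order they must be made."""
    return [
        ("create_subnet_group", {
            "SubnetGroupName": "demo-subnets",  # placeholder name
            "SubnetIds": subnet_ids,
        }),
        ("create_user", {
            "UserName": "demo-user",  # placeholder name
            "AuthenticationMode": {"Type": "password", "Passwords": [password]},
            "AccessString": "on ~* &* +@all",  # full access; narrow as needed
        }),
        ("create_acl", {
            "ACLName": "demo-acl",  # placeholder name
            "UserNames": ["demo-user"],
        }),
        ("create_cluster", {
            "ClusterName": "demo-cluster",  # placeholder name
            "NodeType": "db.r6g.large",
            "NumShards": 1,
            "NumReplicasPerShard": 2,
            "Port": 6379,
            "ACLName": "demo-acl",
            "SubnetGroupName": "demo-subnets",
            "SecurityGroupIds": security_group_ids,
        }),
    ]


def submit(client: Any, requests: List[Request]) -> None:
    """Send each request through a boto3-style client, in order."""
    for operation, params in requests:
        getattr(client, operation)(**params)
```

With boto3 installed and credentials configured, this would be invoked as `submit(boto3.client("memorydb"), build_requests(subnet_ids, security_group_ids, password))`. A production script would also wait for each resource to become active before creating the next one, and would read the password from a secret store rather than passing it in directly.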

Conclusion

In this post, we identified how microservices can improve the scalability and resilience of applications. One dimension of the architecture is a purpose-built database that fits the data access and latency requirements of your workload. MemoryDB for Redis provides durability and high performance for key-value access of in-memory data. You can store this information to accommodate additional access patterns using Redis data structures. We examined the hash, stream, and sorted set data structures while implementing use cases for MemoryDB for Redis. Examples included key-value storage and retrieval, data streaming, and in-memory aggregations and reporting.

Learn More

Learn more about MemoryDB by checking out this launch announcement, and by listening to the latest episode of the Official AWS Podcast. You can also review the FAQs, and documentation for creating a MemoryDB for Redis cluster. Find more information about microservices in the Implementing Microservices on AWS whitepaper.

About the Author

Zach Gardner, AWS Solutions Architect.

Read more on the AWS Database Blog.