In this blog post, we’re going to show you how to use two technologies together: the generative AI functionality in Google Cloud Vertex AI, an ML development platform, and Neo4j, a graph database. Together, these technologies can be used to build and interact with knowledge graphs.

The code underlying this blog post is available here.

Why should you use generative AI to build knowledge graphs?

Enterprises struggle to extract value from vast amounts of data. Structured data comes in many formats with well-defined APIs. Unstructured data contained in documents, engineering drawings, case sheets, and financial reports can be more difficult to integrate into a comprehensive knowledge management system.

Neo4j can be used to build a knowledge graph from structured and unstructured sources. By modeling that data as a graph, we can uncover insights that would not otherwise be available. Graph data can be huge and messy to deal with. Generative AI on Google Cloud makes it easier to build a knowledge graph in Neo4j and then interact with it using natural language.

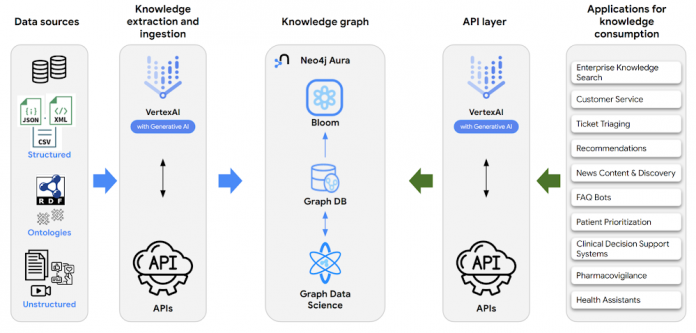

The architecture diagram shows how Google Cloud and Neo4j work together to build and interact with knowledge graphs.

The diagram shows two data flows:

Knowledge extraction – On the left side of the diagram, blue arrows show data flowing from structured and unstructured sources into Vertex AI. Generative AI is used to extract entities and relationships from that data. These are then converted to Neo4j Cypher queries that are run against the Neo4j database to populate the knowledge graph. This work was traditionally done manually with handcrafted rules. Using generative AI eliminates much of the manual work of data cleansing and consolidation.

Knowledge consumption – On the right side of the diagram, green arrows show applications that consume the knowledge graph. They present natural language interfaces to users. Vertex AI generative AI converts that natural language to Neo4j Cypher that is run against the Neo4j database. This allows non-technical users to interact more closely with the database than was possible without generative AI.

We’re seeing this architecture come up again and again across verticals. Some examples include:

Healthcare – Modeling the patient journey for multiple sclerosis to improve patient outcomes

Manufacturing – Using generative AI to collect a bill of materials that extends across domains, something that wasn’t tractable with previous manual approaches

Oil and gas – Building a knowledge base with extracts from technical documents that users without a data science background can interact with. This enables them to more quickly educate themselves and answer questions about the business.

Now that we have a high-level picture of where this technology can be used, let’s focus on a particular example.

Dataset and architecture

In this example we’re going to use the generative AI functionality in Vertex AI to parse resumes. Resumes have information like name, jobs and skills. We’re going to build a knowledge graph from those entities that shows what jobs and skills people have and share with one another.

The architecture to do this is a specific version of the architecture we saw above.

In this case, we have just one data source, rather than many. The data all comes from unstructured text in the resumes.

Once we’ve built the knowledge graph, we’ll use a Gradio application to interact with it using natural language.

Knowledge extraction

To begin, let’s decide on the schema to be used within Neo4j. Neo4j is a schema-flexible database: you can bring in new data along with its schema, connect it to existing data, or iteratively modify the existing schema based on the use case.

Here is a schema that represents the resume data set:

To transfer unstructured data to Neo4j, we must first extract the entities and relationships. This is where generative AI foundation models like Google’s PaLM 2 can help. Using prompt engineering, the PaLM 2 model can extract relevant data in the format of our choice. In our Talent Finder chatbot example, we can chain multiple prompts using PaLM 2’s “text-bison” model, each extracting specific entities and relationships from the input resume text. Chaining prompts keeps each request and response small, which helps avoid exceeding the model’s token limits.
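The prompt chaining described above can be sketched roughly as follows. This is a minimal illustration, not the code from the example repository: the instructions in `STEPS`, the `extract_resume` and `parse_json_reply` helpers, and the JSON keys are all hypothetical. The model is passed in as a plain callable so the sketch runs without cloud credentials; in practice, `generate` might wrap something like `TextGenerationModel.from_pretrained("text-bison").predict(prompt).text` from the Vertex AI SDK.

```python
import json
from typing import Callable, Dict

# Hypothetical instructions, one per chained prompt. Each prompt asks for
# a single kind of entity so that requests and replies stay small.
STEPS: Dict[str, str] = {
    "person": (
        "Extract the candidate's name. "
        'Answer with JSON only, e.g. {"name": "..."}.'
    ),
    "positions": (
        "Extract every position held. Answer with JSON only, "
        'e.g. {"positions": [{"title": "...", "company": "..."}]}.'
    ),
    "skills": (
        "Extract the skills mentioned. "
        'Answer with JSON only, e.g. {"skills": ["..."]}.'
    ),
}


def parse_json_reply(reply: str) -> dict:
    """Parse the JSON object in a reply, ignoring any text around it."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError(f"no JSON object found in reply: {reply!r}")
    return json.loads(reply[start : end + 1])


def extract_resume(resume_text: str, generate: Callable[[str], str]) -> dict:
    """Run one prompt per step and merge the JSON results into one dict."""
    result: dict = {}
    for instruction in STEPS.values():
        prompt = f"{instruction}\n\nResume:\n{resume_text}"
        result.update(parse_json_reply(generate(prompt)))
    return result
```

Because each step returns its own small JSON object, a failure in one step (for example, a reply that is not valid JSON) can be retried on its own without re-running the others.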

The prompt below can be used to extract position and company information as JSON from a resume text:

The output from the text-bison model is then:

The text-bison model was able to understand the text and extract information in the output format we wanted. Let’s take a look at how this looks in Neo4j Browser.

The screenshot above shows the knowledge graph that we built, now stored in Neo4j Graph Database.

We’ve now used Vertex AI generative AI to extract entities and relationships from our unstructured resume data. We wrote those into Neo4j using Cypher queries created by Vertex AI generative AI. These steps would previously have been very manual. Generative AI helps automate them, saving time and effort.
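To make the write step concrete, here is a small sketch of how extracted entities could become Cypher statements. Note that it uses a different technique from the one described above: instead of having the model write the Cypher, it fills fixed, parameterized `MERGE` templates in Python, which keeps the example deterministic. The node labels, relationship types, and the `build_statements` helper are assumptions for illustration; the schema in the actual example may differ.

```python
from typing import Dict, List, Tuple

# Hypothetical labels and relationship types. MERGE creates a node or
# relationship only if it does not already exist, so re-running the
# import does not duplicate data.
MERGE_POSITION = (
    "MERGE (p:Person {name: $name}) "
    "MERGE (j:Position {title: $title}) "
    "MERGE (c:Company {name: $company}) "
    "MERGE (p)-[:HAS_POSITION]->(j) "
    "MERGE (p)-[:WORKED_AT]->(c)"
)
MERGE_SKILL = (
    "MERGE (p:Person {name: $name}) "
    "MERGE (s:Skill {name: $skill}) "
    "MERGE (p)-[:HAS_SKILL]->(s)"
)

Statement = Tuple[str, Dict[str, str]]


def build_statements(extracted: dict) -> List[Statement]:
    """Turn one resume's extracted JSON into (query, parameters) pairs."""
    name = extracted["name"]
    statements: List[Statement] = []
    for position in extracted.get("positions", []):
        params = {
            "name": name,
            "title": position["title"],
            "company": position["company"],
        }
        statements.append((MERGE_POSITION, params))
    for skill in extracted.get("skills", []):
        statements.append((MERGE_SKILL, {"name": name, "skill": skill}))
    return statements
```

With the official Neo4j Python driver, each pair could then be executed with `session.run(query, params)`. Passing values as parameters rather than concatenating them into the query avoids quoting problems when a name or skill contains special characters.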

Knowledge consumption

Now that we’ve built our knowledge graph, we can start to consume data from it. Cypher is Neo4j’s query language. If we are to build a chatbot, we have to convert the input natural language, English, to Cypher. Models like PaLM 2 are capable of doing this. The base model produces good results, but to achieve better accuracy, we can use two additional techniques:

Prompt Engineering – Provide a few examples in the model input (few-shot prompting) to steer the model toward the desired output. We can also try chain-of-thought prompting to teach the model how to arrive at a certain Cypher output.

Adapter Tuning (Parameter Efficient Fine Tuning) – We can also adapter tune the model using sample data. The weights generated this way will stay within your tenant.
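As an illustration of the prompt engineering technique, the sketch below assembles a few-shot prompt for translating English to Cypher. The schema hint, the example question and query pairs, and the `build_cypher_prompt` helper are all made up for this sketch and are not taken from the example repository.

```python
from typing import List, Tuple

# Hypothetical schema description given to the model for grounding.
SCHEMA_HINT = (
    "(:Person {name})-[:HAS_SKILL]->(:Skill {name}), "
    "(:Person)-[:WORKED_AT]->(:Company {name})"
)

# Hypothetical question/Cypher pairs that show the expected output style.
FEW_SHOT_EXAMPLES: List[Tuple[str, str]] = [
    (
        "Who knows Python?",
        "MATCH (p:Person)-[:HAS_SKILL]->(s:Skill) "
        "WHERE toLower(s.name) = 'python' RETURN p.name",
    ),
    (
        "How many people worked at Acme?",
        "MATCH (p:Person)-[:WORKED_AT]->(c:Company {name: 'Acme'}) "
        "RETURN count(DISTINCT p)",
    ),
]


def build_cypher_prompt(
    question: str,
    examples: List[Tuple[str, str]] = FEW_SHOT_EXAMPLES,
    schema: str = SCHEMA_HINT,
) -> str:
    """Build a few-shot prompt that ends where the model should continue."""
    lines = [
        "You are an expert in Neo4j Cypher. "
        "Translate the question into a Cypher query.",
        f"Schema: {schema}",
        "",
    ]
    for example_question, example_cypher in examples:
        lines += [f"Question: {example_question}", f"Cypher: {example_cypher}", ""]
    lines += [f"Question: {question}", "Cypher:"]
    return "\n".join(lines)
```

Ending the prompt with an open `Cypher:` line nudges the model to reply with a query only, in the same shape as the examples.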

The data flow in this case is then:

With a tuned model, we can use a simple prompt to turn text-bison into a Cypher expert:

Vertex AI generative AI responds like this in Cypher:

As shown above, when the “Front end” role was referred to, the model was able to generate the important front end skills to consider, then generate the Cypher based on that.
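Putting the consumption flow together, a rough sketch might look like the following. It is an assumption-laden illustration rather than the code behind the example: `answer`, `clean_cypher`, and the read-only check are hypothetical, and both the model and the database are passed in as callables so the sketch runs on its own. The keyword check is deliberately crude (it would also reject a harmless query that mentions a word like "set" outside a clause) and is only meant to show where such a guard could sit.

```python
import re
from typing import Callable, List

FENCE = "`" * 3  # Markdown code fence that models often wrap queries in

# Clauses that modify the graph. A chatbot that only answers questions
# should never need them.
WRITE_CLAUSES = re.compile(
    r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP)\b", re.IGNORECASE
)


def clean_cypher(reply: str) -> str:
    """Drop Markdown fence lines and surrounding whitespace from a reply."""
    lines = [
        line
        for line in reply.strip().splitlines()
        if not line.strip().startswith(FENCE)
    ]
    return "\n".join(lines).strip()


def answer(
    question: str,
    generate: Callable[[str], str],
    run_query: Callable[[str], List[dict]],
) -> List[dict]:
    """Translate a question to Cypher, check it is read-only, and run it."""
    prompt = (
        "Translate the question into a Neo4j Cypher query.\n"
        f"Question: {question}\nCypher:"
    )
    cypher = clean_cypher(generate(prompt))
    if WRITE_CLAUSES.search(cypher):
        raise ValueError(f"refusing to run a query that writes: {cypher!r}")
    return run_query(cypher)
```

Here `run_query` could wrap a Neo4j driver session, and `generate` could wrap the tuned text-bison model. Connecting with a database user that has read-only permissions would be a more robust safeguard than keyword matching.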

Summary

In this blog post, we walked through a two-part data flow:

Knowledge Extraction – Taking entities and relationships from our resume data and building a knowledge graph from them.

Knowledge Consumption – Enabling a user to ask questions of that knowledge graph using natural language.

In each case, it was the unique combination of generative AI capabilities in Google Cloud Vertex AI and Neo4j that made this possible. The approach here automates and simplifies what was previously a very manual process. This opens up applying the knowledge graph approach to a class of problems where it was not previously feasible.

Next steps

We hope you found this blog post interesting and want to learn more. The example we’ve worked through is here. We hope you fork it and modify it to meet your needs. Pull requests are always welcome!

If you have any questions, please reach out to [email protected]
