Editor’s note: OVO Energy was founded in 2009 as an energy retailer that redesigned the energy experience to be fair, simple and green for consumers. Inspired by Google’s carbon-aware load shifting to data centers with the cleanest energy, OVO Energy implemented its own solution using Cloud Composer and Cloud Run Jobs. Here Jack Lockyer-Stevens, Software Engineer at OVO Energy, describes the solution which he open-sourced as VertFlow.

Our world needs a greener grid

The transition to cleaner sources of electricity, such as wind and solar power, is critical to mitigating climate change and reducing humanity’s dependence on fossil fuels.

It’s tempting to think this can be easily achieved by building more wind and solar farms. But what happens when the wind doesn’t blow and the sun doesn’t shine? The variable output of renewable energy sources means we have to supplement them with power generated from fossil fuels when demand is high.

One critical tool for addressing this is demand response, which incentivizes consumers to shift their power consumption to different times when more renewable energy is available on the grid. OVO Energy offers this service to owners of electric vehicles, allowing them to charge at greener, off-peak times and return surplus power back to the grid when clean power supply is low.

The grass is greener in the other Google Cloud region

If OVO Energy can shift electricity demand for hardware, then why not for software?

Workloads running in a Google Cloud region draw from the local electricity grid, and some grids have a lower carbon intensity than others.

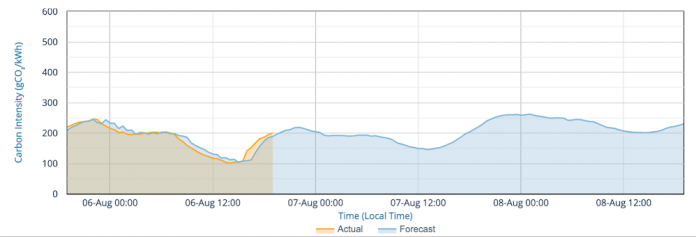

Regions like Paris (europe-west9) have an abundance of carbon-free power. Other locations like London (europe-west2), whose carbon intensity over three days is pictured above, are more variable as the availability of renewable power waxes and wanes with the wind and sun.

Cloud Run Jobs gives a container the green light in seconds

Cloud Run Jobs is a new serverless platform for running containers to completion on Google Cloud. It’s great for batch processes like sending invoices or running extract, transform, load (ETL) jobs. Rather than managing and paying for a Kubernetes cluster 24/7, it allows you to kick off an arbitrary container on fully managed Google infrastructure, in any region, in seconds.

Cloud Run Jobs pairs well with Cloud Composer, a fully managed workflow orchestration service built on Apache Airflow. It acts as mission control for scheduling, triggering and monitoring containerised jobs.

At OVO Energy we developed VertFlow, a free, open-source task operator for Airflow that can reduce the carbon emissions associated with a workload by automatically deploying your container into the greenest Google Cloud region at runtime. It does this using real-time carbon intensity data from CO2 Signal, an API provided by Electricity Maps.
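The region choice described above can be sketched in a few lines of Python. This is a minimal illustration of the idea, not VertFlow's actual implementation: the function name `pick_greenest_region` is hypothetical, and the carbon intensity figures (in gCO2eq/kWh) are made-up placeholders standing in for live readings.

```python
from typing import Mapping, Sequence


def pick_greenest_region(
    allowed_regions: Sequence[str],
    carbon_intensity: Mapping[str, float],
) -> str:
    """Return the allowed region with the lowest carbon intensity.

    Regions with no reading are skipped rather than guessed at.
    """
    candidates = [r for r in allowed_regions if r in carbon_intensity]
    if not candidates:
        raise ValueError("no carbon intensity data for any allowed region")
    return min(candidates, key=lambda region: carbon_intensity[region])


# Placeholder readings in gCO2eq/kWh (illustrative values, not real data).
readings = {
    "europe-west1": 120.0,
    "europe-west2": 210.0,
    "europe-west9": 60.0,
}

# europe-west9 is left out of the allowed list here, for example because
# of a data residency rule, so the choice is between the other two.
chosen = pick_greenest_region(["europe-west1", "europe-west2"], readings)
print(chosen)
```

Restricting the candidate list before taking the minimum means a region outside the allowed list can never be selected, however clean its grid happens to be at that moment.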

It’s a breeze to deploy greener Cloud Run Jobs on Composer with VertFlow

Let’s walk through the following steps to run your first container with VertFlow:

1. Set up VertFlow: Install, connect, authorize.

2. Create a DAG using the VertFlowOperator to deploy your Cloud Run Job.

3. Kick off the process and see which Google Cloud region is selected.

You need an Airflow environment to get started. Cloud Composer is a fully managed service based on Airflow and can be provisioned in minutes using the Cloud Console, gcloud or Terraform.

1. Set up VertFlow: Install, connect, authorize

Start by installing VertFlow on your Composer environment:

Authorize your environment to manage Cloud Run Jobs on your behalf, by updating permissions on the service account:

You will need an API key from Electricity Maps so that VertFlow can access real-time carbon intensity data for the electricity grid in each Cloud Run region. A free key is available for non-commercial use; commercial users need to sign up for a commercial API key.

Navigate to the Airflow UI and save the key in a new Airflow Variable called VERTFLOW_API_KEY.

Ensure your environment has outbound access to the internet.

2. Create a workflow using the VertFlowOperator to deploy your Cloud Run Job.

Create a Python file locally to define your workflow (known as a DAG in Airflow).

We use the VertFlowOperator to define a job specification, including the address of the container image to run, command to execute and the allowed list of regions in which to deploy the job.

Update the vertflow_example.py file in the dags directory with the service account you want your Cloud Run container to use.

Upload the workflow to your Cloud Composer environment:

3. Kick off the process and see which Google Cloud region is selected.

Trigger the DAG:

VertFlow will run your container in whichever of the allowed_regions has the lowest carbon intensity. You can then monitor its progress in the Airflow UI:

You should see Hello World in the logs of the Cloud Run Jobs console:

In this example, Belgium was a clear winner. The job emitted nearly a third less carbon than if we’d run it elsewhere.
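One way to arrive at a figure like this is to compare the carbon intensity of the chosen region with that of an alternative, assuming the job draws the same amount of energy wherever it runs. The sketch below shows that arithmetic; the function name `relative_saving` is hypothetical and the two intensity values are placeholders, not the readings from this run.

```python
def relative_saving(chosen: float, alternative: float) -> float:
    """Fraction of emissions avoided by running at `chosen` intensity
    instead of `alternative`, assuming equal energy use in both regions."""
    if alternative <= 0:
        raise ValueError("alternative intensity must be positive")
    return (alternative - chosen) / alternative


# Placeholder intensities in gCO2eq/kWh (illustrative values only).
chosen_intensity = 140.0
alternative_intensity = 200.0

saving = relative_saving(chosen_intensity, alternative_intensity)
print(f"{saving:.0%} less carbon")
```

With these placeholder numbers the saving works out to 30%. A negative result would mean the chosen region was actually the dirtier of the two.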

VertFlow puts wind in the sails of OVO Energy’s sustainability journey

At OVO Energy we host many of our customer journeys on Google Cloud, from onboarding customers to processing payments. Many of our data and analytics pipelines comprise containerized batch jobs, often orchestrated using the KubernetesPodOperator on Cloud Composer V1. This setup is ripe for optimization by migrating to Cloud Composer V2 to take advantage of the more efficient resource consumption of Google Kubernetes Engine (GKE) Autopilot. VertFlow was developed as the last piece of the puzzle to allow us to go fully serverless and practice what we preach on sustainability.

VertFlow is perfect for batch workloads that:

Are containerized and stored in Artifact Registry

Are relatively short, satisfying Cloud Run’s one hour runtime limit

Are I/O light but compute intensive, such as our energy forecasts. This ensures that the carbon saving of moving the compute is not outweighed by the carbon cost of transmitting the data over a larger distance.

Do not need access to resources on a VPC, such as our data pipelines that read from/write to BigQuery only

Are legally allowed to move from one region to another. We set the allowed_regions parameter to restrict where jobs with data residency constraints can run.
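The trade-off behind the I/O-light criterion in the list above can be made concrete with a rough calculation. The sketch below is illustrative only: the function name `net_carbon_saving_g` is hypothetical, and every input, including the energy cost per gigabyte transferred, is a placeholder supplied by the caller, not a measured figure.

```python
def net_carbon_saving_g(
    compute_kwh: float,
    home_intensity: float,
    remote_intensity: float,
    transfer_gb: float,
    transfer_kwh_per_gb: float,
    transfer_intensity: float,
) -> float:
    """Net grams of CO2eq saved by moving a job to a remote region.

    Intensities are in gCO2eq/kWh. A positive result means the move is
    worthwhile; a negative result means the data transfer costs more
    carbon than the cleaner compute saves.
    """
    compute_saving = compute_kwh * (home_intensity - remote_intensity)
    transfer_cost = transfer_gb * transfer_kwh_per_gb * transfer_intensity
    return compute_saving - transfer_cost


# Placeholder scenario 1: compute-heavy job that moves very little data.
forecast_job = net_carbon_saving_g(
    compute_kwh=2.0, home_intensity=200.0, remote_intensity=100.0,
    transfer_gb=1.0, transfer_kwh_per_gb=0.01, transfer_intensity=150.0,
)

# Placeholder scenario 2: light compute, but a large dataset to move.
bulk_copy_job = net_carbon_saving_g(
    compute_kwh=0.1, home_intensity=200.0, remote_intensity=100.0,
    transfer_gb=500.0, transfer_kwh_per_gb=0.01, transfer_intensity=150.0,
)

print(forecast_job > 0, bulk_copy_job > 0)
```

Under these made-up inputs the compute-heavy job comes out ahead after relocation, while the data-heavy job does not, which is the pattern the criterion is meant to guard against.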

Find out more about how OVO Energy is powering progress towards net zero carbon living with Plan Zero and learn more about VertFlow.
