Many customers migrating their on-premises database workloads to the AWS Cloud select AWS Database Migration Service (AWS DMS) as their tool of choice to quickly migrate databases. AWS DMS is a service that supports homogeneous and heterogeneous migrations between different database platforms. Although setup and configuration of AWS DMS is very straightforward, selecting the right network topology to enable communications between source and target databases might require additional analysis. You must select the right balance between performance, reliability, costs, and access requirements. If you have limitations on the number of available Classless Inter-Domain Routing (CIDR) ranges in your internal network, AWS services allow listed, or direct access restrictions between on-premises and AWS databases imposed by internal security policies, you must implement more creative solutions to migrate databases to AWS.

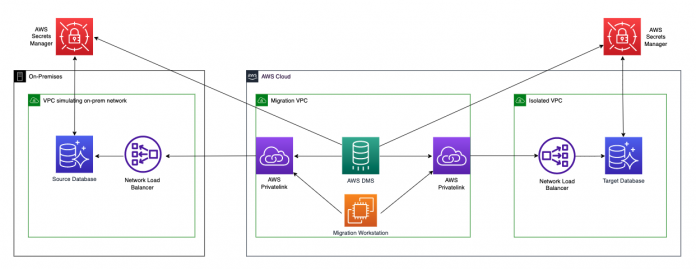

In this post, we present a solution that enables the migration of databases hosted on premises to isolated VPCs using AWS PrivateLink. This pattern allows you to establish network connectivity between the AWS DMS replication instance and databases hosted on VPCs with overlapping CIDR ranges, and eliminates the need to use AWS Transit Gateway or VPC peering via Amazon Virtual Private Cloud (Amazon VPC). Throughout this post, we discuss the proposed pattern in detail and provide Terraform scripts to help you build a proof of concept (POC) environment that can be tailored to match your specific requirements.

Solution overview

This solution demonstrates how to configure different AWS services to simulate customer environments consisting of AWS DMS instances hosted on VPCs without direct network connectivity to on-premises and target networks. The objective is not to provide a deep dive on the services used, but to act as a guideline on how to achieve connectivity for these services without the need for a direct peering relationship with the VPC hosting AWS DMS. Finally, to validate the architecture, we provide SQL statements to create sample database objects and insert data, and AWS Command Line Interface (AWS CLI) commands to migrate objects and data using AWS DMS. We use the following AWS services and resources in our proposed architecture:

Three VPCs (on premises, AWS DMS, and target)

Two Network Load Balancers (on premises to AWS DMS and AWS DMS to target)

Two AWS PrivateLink endpoints (on-premises to AWS DMS and AWS DMS to target)

Two Amazon Aurora PostgreSQL-Compatible Edition databases (on premises and target)

Two AWS Secrets Manager database secrets

One AWS DMS instance

One Elastic Cloud Compute (Amazon EC2) instance (migration workstation)

The solution is represented in the following diagram.

Prerequisites

Implementation of this solution uses Terraform; therefore, we assume the following prerequisites have been satisfied prior to running the scripts:

Access to an AWS account

Terraform has been configured with AWS

Access to the GitHub repository containing the Terraform scripts

If all the prerequisites are in place, you can proceed to run the Terraform scripts to create the environment on your AWS account.

Provision the infrastructure

We have created Terraform scripts hosted in a Git repository to deploy the infrastructure. Complete the following steps:

Using git, clone the repository to your local machine:

Navigate to the provisioning directory:

Initialize the working directory that contains the Terraform configuration files:

Create a run plan:

Run the actions proposed in the Terraform plan to create the infrastructure:

Deployment takes around 15–20 minutes to complete.

Creation of VPCs

The first stage of the Terraform deployment creates three VPCs. Each VPC uses similar CIDR ranges and there is no connectivity among them. The first VPC simulates the on-premises network and hosts the source Aurora PostgreSQL database. The second VPC hosts the AWS DMS and EC2 instances used for database migration purposes. The third VPC hosts the target Aurora PostgreSQL database. For a complete list of features and the steps necessary to create a VPC in your AWS account, refer to Get started with Amazon VPC.

Creation of Aurora PostgreSQL clusters

The Terraform scripts create Aurora PostgreSQL clusters to simulate the source and target database environments. Each Aurora cluster is provisioned on a separate VPC without direct connectivity among them.

Creation of Secrets Manager secrets

Two Secrets Manager secrets are provisioned to provide secure storage of source and target database credentials during the migration. For additional information on how to create secrets, refer to Create and manage secrets with AWS Secrets Manager.

Creation of Network Load Balancers

Two Network Load Balancers are provisioned to forward connections generated on the AWS DMS instance to the appropriate source or target database.

Creation of VPC endpoints

Two VPC endpoints are provisioned to point to the source and target Network Load Balancers.

Creation of VPC endpoint services

Two VPC endpoint services are provisioned, one in the source VPC and one in the target VPC. Each one is associated with their respective Network Load Balancer.

Creation of an AWS DMS instance

An AWS DMS instance is provisioned to automate the migration of the on-premises database to the target AWS database. After the instance has been created, an AWS Identity and Access Management (IAM) role is created to enable AWS DMS to access the respective source and target secrets. Next, two AWS DMS endpoints are provisioned for the source and target databases using the VPC endpoints previously created for the respective Network Load Balancers.

Creation of EC2 instance

An EC2 instance is provisioned by the Terraform deployment to act as a bastion host from which SQL statements are run via the PostgreSQL client, and AWS CLI commands to interact with AWS DMS are run. AWS Systems Manager Session Manager is enabled to securely connect to the EC2 instance. The EC2 instance is provisioned with the required PostgreSQL and AWS CLI software to simplify the migration activities.

Validation of Terraform build

After the environment has been deployed, we can validate that all the AWS services have been properly provisioned by looking at infrastructure deployed by running the following command:

You can expect to see an output similar to the following screenshot.

Validate the solution

To validate the environment we just built using the Terraform modules, we perform a test migration using AWS DMS. The workflow involves the creation of a set of tables on the source database, insertion of data records on the new tables, and finally the creation and run of the AWS DMS migration task. All the steps listed in the following sections are run from the EC2 bastion host.

Prepare the source database

To prepare the source database, complete the following steps:

In the output of the terraform output command, take note of the following four fields (you need them to connect to each database):

Use the PostgreSQL client (psql) in the bastion host to connect to the source database via the Network Load Balancer VPC endpoint:

Create a database, schema, and table objects:

Describe the table:

The output must look similar to the following screenshot.

Insert sample records in the newly created table:

Connect to the target database

Before we start the migration, verify that you can connect to the target database.

Use the PostgreSQL client installed in the bastion host to connect to the target postgres database via the target VPC endpoint:

Create the target database by running the following statement:

Create the AWS DMS replication task

The AWS DMS replication tasks are the core component of the database migration process. They contain all the metadata require to instruct AWS DMS on how to perform the migrations. Therefore, before we create the replication task, let’s create two JSON configuration files to use for the task settings and table mappings.

Create the task-settings.json file using the following sample JSON document:

The TargetTablePreMode setting indicates how to handle loading the target at full-load startup. The available options are as follows:

DO_NOTHING – Data and metadata of the existing target table aren’t affected

DROP_AND_CREATE – The existing table is dropped and a new table is created in its place

TRUNCATE_BEFORE_LOAD – Data is truncated without affecting the table metadata

For this example, we chose the TRUNCATE_BEFORE_LOAD option given the simplicity of the test environment, but you can select the option that better fits the needs of your organization.

Create the table-mappings.json file using the following JSON document:

We configure a few environment variables to store information such as Amazon Resource Names and the AWS Region in which the resources are deployed:

Run the following AWS CLI command to create an AWS DMS migration task called dms-task-demo:

Wait approximately 60 seconds for the task to be created and then run the AWS DMS migration task:

Monitor the migration task while it is running. You can run the following command in a loop at different intervals:

When the task is complete, connect to the target database using a PostgreSQL client:

Describe the target table to validate it is migrated successfully:

The output must look similar to the following screenshot.

Query the contents of the demo_schema.demo_accounts table to list all the records match the records stored in the source database:

The output must look similar to the following screenshot.

After you verify the contents of the database, delete the replication task by running the following command:

For more information about AWS DMS best practices, refer to Best practices for AWS Database Migration Service.

Clean up

When you finish your test, make sure to remove all the created resources so you avoid incurring future costs. Run the following command to destroy all the objects managed by your Terraform configuration:

Conclusion

In this post, we presented a solution for implementing communication between VPCs using VPC endpoints and Network Load Balancers for performing database migrations using AWS DMS in a secure and efficient way. We also provided all the required Terraform automation scripts and AWS CLI commands to provision the different AWS services, and SQL statements to create and query database objects. We encourage you to try this solution.

As always, we welcome your feedback, so please leave your comments.

About the Authors

Xavier Navas is a Devops Consultant at Amazon Web Services. He works with customers in their journey to the cloud with a focus on building complex CI/CD pipelines, automation and infrastructure as code. In his spare time, Xavier enjoys going to the gym, boxing and learning new technologies.

Israel Oros is a Database Migration Consultant at AWS. He works with customers in their journey to the cloud with a focus on complex database migration programs. In his spare time, Israel enjoys traveling to new places with his wife and riding his bicycle whenever weather permits.

Read MoreAWS Database Blog