The Industrial Internet of Things (IIOT) revolution has transformed the way various industries such as manufacturing and automobile work. Industry 4.0—also called the Fourth Industrial Revolution or 4IR—is the next phase in the digitization of the manufacturing sector, driven by disruptive trends including the rise of data and connectivity, analytics, human-machine interaction, and improvements in robotics. For these industries, to meet Industry 4.0, proactive monitoring of assets is a crucial requirement. As a result, identifying and reporting anomalies in devices is a critical task for asset managers and analysts. The traditional way to build an anomaly detection solution requires a deep understanding of multiple technical domains, such as ETL (extract, transform, and load), data science, and business intelligence (BI). This is a very time-consuming and error-prone job. Any deviation or error could result in monetary and non-monetary losses.

In this post, we provide an approach to build a low-code, optimized anomaly detection solution using features such Amazon Aurora zero-ETL integration, Amazon Redshift ML (bring your own model), and Amazon SageMaker.

Solution overview

Amazon Redshift is a fully managed, petabyte-scale cloud data warehouse service. You can structure your data, measure business processes, and get valuable insights quickly by using Amazon Redshift. It provides built-in features to accelerate the process of modeling, orchestrating, and reporting from a dimensional model.

Amazon Relational Database Service (Amazon RDS) makes it straightforward to set up, operate, and scale a relational database in the AWS Cloud. It provides cost-efficient, resizable capacity for an industry-standard relational database and manages common database administration tasks. You can choose from seven popular engines: Amazon Aurora MySQL-Compatible Edition, Amazon Aurora PostgreSQL-Compatible Edition, Amazon RDS for MySQL, Amazon RDS for MariaDB, Amazon RDS for PostgreSQL, Amazon RDS for Oracle, and Amazon RDS for SQL Server.

Amazon Aurora MySQL zero-ETL integration with Amazon Redshift enables near-real-time analytics on petabytes of transactional data stored in Aurora MySQL databases. Data written into Aurora databases is made available in Amazon Redshift within seconds. This enables you to act on the data quickly without managing complex data pipelines. In addition, as of the writing of this post, zero-ETL integration also supports (in preview) Amazon Aurora PostgreSQL, Amazon DynamoDB, and Amazon RDS for MySQL as sources and Amazon Redshift as target.

Zero-ETL allows you to analyze data from multiple Aurora databases in a Redshift data warehouse. You can enhance data analysis with a rich set of analytics capabilities in Amazon Redshift, such as high-performance SQL, built-in machine learning (ML) and Spark integrations, materialized views, data sharing, and direct access to multiple data stores and data lakes.

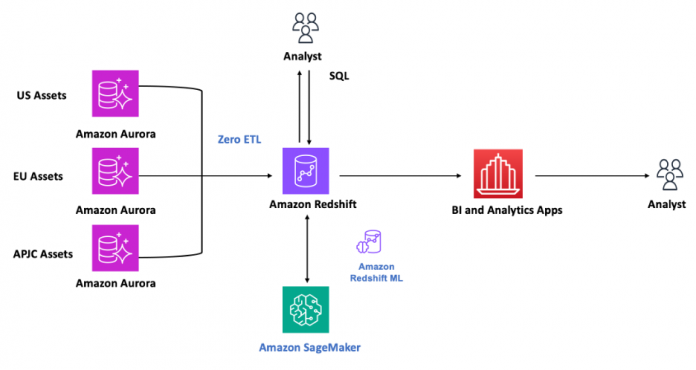

The following diagram illustrates the architecture of our solution.

Prerequisites

To get started, you need an Aurora instance and a Redshift data warehouse with the Redshift ML feature enabled.

For an introduction to zero-ETL integration and instructions on setting it up, see Getting started with Amazon Aurora zero-ETL integrations with Amazon Redshift.

For an introduction to Redshift ML and instructions on setting it up, see Create, train, and deploy machine learning models in Amazon Redshift using SQL with Amazon Redshift ML.

Refer to the Amazon Aurora MySQL-Compatible Edition, Amazon Redshift, and Amazon SageMaker pricing pages to understand more about this solution’s costs.

Source data overview

The data used for this use case is simulated data related to turbofan engine units. This dataset was generated using Commercial Modular Aero-Propulsion System Simulation (C-MAPSS) by NASA. It is a widely used dataset in the study for prognostics and asset health measurement.

The dataset contains measurements related to different turbofan engine units taken over multiple cycles. Each cycle has three operational parameters and 21 measurements working under different conditions. The measurements include features such as zone temperature, zone pressure, fan speed, fuel flow, coolant flow, and more. You can get more details about the dataset on the Kaggle website. We used this data as historical data to train our model.

We analyzed this data and obtained interesting insights around the deterioration of units over time, statistical distribution of sensor measurements, correlation between different sensors measurements, and more, as shown in the following figures.

Create an Aurora source database and populate it with the source data

Complete the following steps to prepare your data:

Create the required source tables in Aurora:

Load the historical transactional data into the Aurora table (asset_readings_history) using LOAD DATA FROM S3 or any other methods as per your use case.

Load the primary data into the Aurora table (asset_master) using LOAD DATA FROM S3 or any other methods as per your use case.

Create a zero-ETL integration between Aurora and Amazon Redshift

Before you create a zero-ETL integration with Amazon Redshift, you need to configure an Aurora database cluster and Redshift data warehouse with the required parameters and permissions. The high-level steps are as follows:

Configure Aurora MySQL database cluster parameters.

Create a source database cluster.

Create a target Redshift data warehouse.

Create the zero-ETL integration between the Aurora MySQL database and Amazon Redshift.

For detailed steps, refer to Getting started with Amazon Aurora zero-ETL integrations with Amazon Redshift. If the sources and targets are in the same account, the Amazon RDS console can automate configuring the sources and targets.

Create a materialized view in Amazon Redshift

Zero-ETL integration is a fully managed solution that makes transactional or operational data available in Amazon Redshift in near-real time. Zero-ETL will replicate the asset tables you created and data into Amazon Redshift.

Connect to Amazon Redshift and create a materialized view on top of the replicated asset tables using the following SQL:

Anomaly detection

SageMaker Random Cut Forest (RCF) is an unsupervised learning algorithm designed to detect anomalous data points within a dataset. Examples of anomalies that are important to detect include when website activity uncharacteristically spikes or when a price of stock fluctuates beyond usual trends. For more details about this algorithm, refer to How RCF Works.

Build, train, and test the RCF model using SageMaker

Complete the following steps to build, train, and test your model:

Unload the historical data from the Redshift table (asset_readings_history) and store it in an Amazon Simple Storage Service (Amazon S3) bucket using the Redshift copy command.

We use this data as training data to build the model.

Create a SageMaker notebook instance to prepare and process data, and train and deploy ML models from a compute instance running the Jupyter Notebook application.

We use the notebook instance to prepare and process data, and train and deploy ML models. For more details, refer to Use Notebook Instances to build models.

Read and load the historical source data into a pandas data frame.

Build and train the model using your source data and RCF algorithm:

Create an inference endpoint using the SageMaker Python SDK deploy() function from the job you defined. Specify the instance type where inference is computed as well as an initial number of instances to spin up:

Integrate the model with Amazon Redshift

We create a model in Redshift ML using the preceding SageMaker inference endpoint. Use the default AWS Identity and Access Management (IAM) role with your CREATE MODEL command as follows:

Connect to Amazon Redshift and run the following command:

Check the model status using the following command:

This command will show information about your ML model, including its status, the parameters used to create it, and the prediction function with its input argument types.

Predict anomalies using live data

Insert sample incremental asset reading data into asset_readings_inc_demo. When the model is ready, run the inference query using the function name from the create model statement:

The following table shows our results.

Unit

MeasureValue

AnomalyFactor

125

472

5.81888

194

515

4.37081

125

526

3.63245

249

603

1.88502

249

513

4.47532

194

641

2.24393

240

502

4.9565

194

578

2.98961

240

626

2.96595

With Amazon Redshift query editor v2, after you run a query and the results display, you can generate a chart to display a graphic visualization of the current page of results. We used the preceding results to plot the following histogram of the anomaly scores.

This histogram shows the distribution of the number of units across different anomaly scores. Assets in lower bins indicate a cluster of normal assets, whereas a tail on the right side represents anomalous assets. However, determining an appropriate threshold depends on your business use case and data characteristics.

Clean up

To avoid incurring charges, clean up the Aurora MySQL instance, Redshift database, and SageMaker notebook if you used this solution in your environment for testing purposes. You can also delete the zero-ETL integration.

Delete the Aurora database cluster

Complete the following steps:

On the Amazon RDS console, in the navigation pane, choose Databases.

Select the DB cluster that you want to delete.

On the Actions menu, choose Delete.

To create a final DB snapshot for the DB cluster, choose Create final snapshot. This is the default setting.

If you chose to create a final snapshot, enter a snapshot name.

To retain automated backups, choose Retain automated backups. This is not the default setting.

Enter delete me to confirm.

Choose Delete.

Delete the Redshift cluster

Complete the following steps:

On the Amazon Redshift console, in the navigation pane, choose Clusters.

Select the cluster to delete.

On the Actions menu, choose Delete.

Choose Delete cluster.

Delete the SageMaker notebook

Complete the following steps to delete the SageMaker notebook and related resources:

On the SageMaker console, under Inference in the navigation pane, choose Endpoints.

Select the endpoint you created and on the Actions menu, choose Delete.

Under Inference in the navigation pane, choose Endpoint configurations.

Select the endpoint configuration you created and on the Actions menu, choose Delete.

Under Inference in the navigation pane, choose Models.

Select the model you created and on the Actions menu, choose Delete.

Under Notebooks in the navigation pane, choose Notebook instances.

Select the notebook you created and on the Actions menu, choose Stop.

Wait until the notebook status changes to Stopped.

Select the notebook and on the Actions menu, choose Delete.

On the Amazon S3 console, delete the bucket you created for storing model artifacts and the training dataset.

Delete the zero-ETL integration

Complete the following steps:

On the Amazon RDS console, in the navigation pane, choose Zero-ETL integrations.

Select the zero-ETL integration that you want to delete.

On the Actions menu, choose Delete.

Confirm the deletion.

Conclusion

In this post, we showed you how to use Aurora zero-ETL integration, Redshift ML, and SageMaker to build a real-time anomaly detection pipeline. We used the Aurora zero-ETL integration to build a real-time data pipeline to load historical and standard data to Amazon Redshift. To learn more about Aurora zero-ETL integration with Amazon Redshift, refer to Working with Amazon Aurora zero-ETL integrations with Amazon Redshift and Working with Amazon Aurora zero-ETL integrations.

We then used Redshift ML to demonstrate how you can bring your own anomaly detection model using algorithms such as RCF and make inferences. This allows you to democratize ML by making predictions with Amazon Redshift SQL commands. For more information about building different models with Redshift ML, see Amazon Redshift ML.

About the Authors

Kanchan Bhattacharyya is a Senior Database Specialist at Amazon Web Services. He works with enterprise customers providing technical assistance on database operational performance and sharing database best practices. He specializes in Amazon RDS for SQL Server, Amazon RDS for PostgreSQL, Amazon RDS for MySQL, and Amazon Aurora.

Ajinkya Chavan is a Senior Analytics Specialist at Amazon Web Services. He works with enterprise customers providing technical assistance on operational performance and best practices of Amazon Redshift and Amazon OpenSearch Service.

Vijay Kardile is a Senior Analytics Specialist at Amazon Web Services. He works with enterprise customers providing technical assistance on operational performance and best practices of Amazon EMR and Amazon MSK.

Read MoreAWS Database Blog