As the prevalence of machine learning (ML) and artificial intelligence (AI) grows, you need the best mechanisms to aid in the experimentation and development of your algorithms. You might begin with one of the several built-in algorithms in Amazon SageMaker, which simply require you to point the algorithm at your data and start a SageMaker training job. At the other end of the spectrum, you might be quite specialized and have several highly customized algorithms and Docker containers to support those algorithms, and AWS has a workflow to create and support these bespoke components as well. However, it’s increasingly common to have invested time and energy into researching, testing, and building several custom ML algorithms that still rely on widely used frameworks such as scikit-learn. In this scenario, you don’t need or want to invest the time, money, and resources to create and support bespoke containers.

To address this, SageMaker offers a solution using script mode. Script mode enables you to write custom training and inference code while still utilizing common ML framework containers maintained by AWS. Script mode is easy to use and extremely flexible. In this post, we discuss three primary use cases for using script mode, and how script mode can accelerate your algorithm development and testing while simultaneously decreasing the amount of time, effort, and resources required to bring your custom algorithm to the cloud.

Solution overview

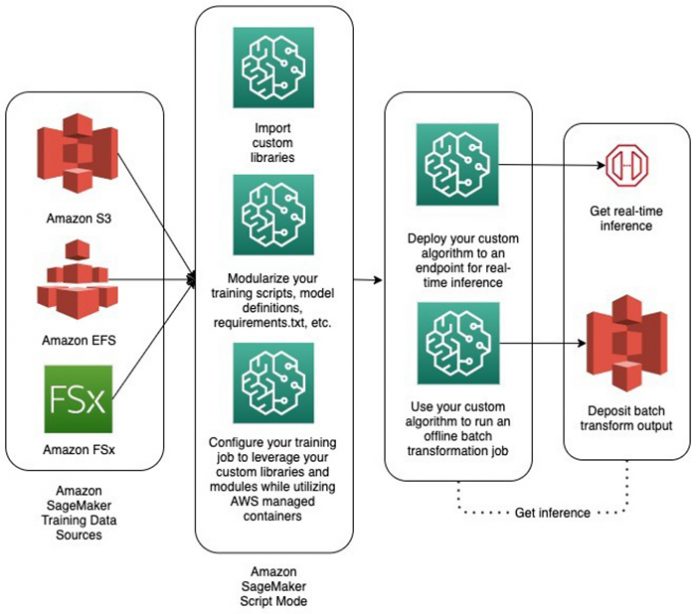

The following diagram illustrates our architecture for this solution. Moving from left to right, you first see the three options for storing your model training and testing data, which include Amazon S3, Amazon EFS, or Amazon FSx. Amazon SageMaker is then used to train your model. Here we use script mode to customize the training algorithm and inference code, add custom dependencies and libraries, and modularize the training and inference code for better manageability. Next, Amazon SageMaker is used to either deploy a real-time inference endpoint or perform batch inference offline.

SageMaker provides every developer and data scientist with the ability to build, train, and deploy ML models quickly. Script mode allows you to build models using a custom algorithm not supported by one of the built-in choices. This is referred to as script mode because you write your custom code (script) in a text file with a .py extension.

SageMaker supports most of the popular ML frameworks through pre-built containers, and has taken the extra step to optimize them to work especially well on AWS compute and network infrastructure in order to achieve near-linear scaling efficiency. These pre-built containers also provide some additional Python packages, such as Pandas and NumPy, so you can write your own code for training an algorithm. The containers also allow you to install any Python package hosted on PyPI by including a requirements.txt file with your training code, or to include your own code directories.

Prerequisites

To follow along with this post, you must create the following prerequisite resources:

AWS Identity and Access Management (IAM) role

SageMaker notebook instance (with the SageMaker script mode example from the GitHub repo cloned)

Amazon Simple Storage Service (Amazon S3) bucket

To create these resources, launch the following AWS CloudFormation stack:

Enter a unique name for the stack, S3 bucket, and notebook. You can leave the other settings at their default.

After the stack creation is complete, you can go to the Resources tab of the stack to review the resources created.

Run the notebooks using script mode

Now you can navigate to the /amazon-sagemaker-examples/sagemaker-script-mode/ folder and start working your way through the sagemaker-script-mode.ipynb notebook and accompanying files. For the sake of completeness, we explain in detail the steps necessary to create the resources that the CloudFormation stack creates for you automatically:

IAM role – To build and run an ML model using SageMaker, you must provide an IAM role that grants SageMaker permission to access Amazon S3 in your account to fetch the training and test datasets. If you’re accessing SageMaker from outside the AWS Management Console, you must also add sagemaker.amazonaws.com as a trusted entity to your IAM role.

SageMaker notebook instance – For instructions, see Create a Notebook Instance. Attach the IAM role you created for SageMaker to this notebook instance.

S3 bucket – For instructions on creating a bucket to store your training data and model artifacts, see Step 1: Create your first S3 bucket.

Accompanying Jupyter notebooks – This project consists of a multi-part Jupyter notebook, available on GitHub. The notebook covers using script mode to do the following:

Implement custom algorithms using an AWS managed container

Modularize your training code and model definitions

Import custom libraries and dependencies
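The trusted-entity requirement from the IAM role item above can be sketched as a policy document. This is a minimal sketch of the standard trust policy shape that lets the SageMaker service assume a role. The variable and function names are illustrative and are not taken from the accompanying notebook, and the sketch doesn’t cover the Amazon S3 permissions the role also needs.

```python
import json

# Trust policy that lets the SageMaker service assume the role.
# This covers only the trusted-entity part; S3 access is granted by a
# separate permissions policy attached to the same role.
SAGEMAKER_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "sagemaker.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def trust_policy_json() -> str:
    """Serialize the policy for use as a role's assume-role policy document."""
    return json.dumps(SAGEMAKER_TRUST_POLICY, indent=2)
```

The resulting JSON string can be supplied as the assume-role policy document when you create the role, for example on the IAM console.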

Implement custom algorithms using an AWS managed container

The first level of script mode is the ability to define your own training job, model, and inference process without any dependencies. You do this by writing a customized Python script and specifying that script as the entry point when defining your SageMaker training estimator. For this post, we demonstrate implementing a custom random forest regressor to predict housing prices using a synthetic housing dataset.

Script mode in SageMaker allows you to take control of the training and inference process without having to create and maintain your own Docker containers. For example, if you want to use a scikit-learn algorithm, just use the AWS-provided scikit-learn container and pass it your own training and inference code. The SageMaker Python SDK packages this entry point script (which can be your training or inference code), uploads it to Amazon S3, and sets the following environment variables, which the container reads at runtime to locate and load the custom training and inference functions from the entry point script:

SAGEMAKER_SUBMIT_DIRECTORY – Set to the S3 path of the package

SAGEMAKER_PROGRAM – Set to the name of the script (which in our case is train_deploy_scikitlearn_without_dependencies.py)

The process is the same if you want to use an XGBoost model (use the XGBoost container) or a custom PyTorch model (use the PyTorch container). Because you’re passing in your own script, you define the model, the training process, and the inference process.
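To make the idea of defining the model, training, and inference in one script concrete, here is a minimal sketch of what a scikit-learn entry point script might look like. It is not the script from the GitHub repo. The file name train.csv, the target column name, the --n-estimators hyperparameter, and the model.joblib file name are all assumptions for illustration. The SM_MODEL_DIR and SM_CHANNEL_TRAIN environment variables and the model_fn hook follow the conventions of the AWS-managed framework containers, with the channel name train being an assumption.

```python
import argparse
import os


def parse_args(argv=None):
    """Hyperparameters arrive as command-line flags; data and model paths
    default to the locations that SageMaker exposes inside the container."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--n-estimators", type=int, default=100)  # hypothetical hyperparameter
    parser.add_argument("--model-dir", default=os.environ.get("SM_MODEL_DIR", "/opt/ml/model"))
    parser.add_argument("--train", default=os.environ.get("SM_CHANNEL_TRAIN", "/opt/ml/input/data/train"))
    return parser.parse_args(argv)


def train(args):
    """Fit a random forest regressor and save it where SageMaker collects artifacts."""
    # Imported lazily so the module can be loaded without these packages installed.
    import joblib
    import pandas as pd
    from sklearn.ensemble import RandomForestRegressor

    df = pd.read_csv(os.path.join(args.train, "train.csv"))  # assumed file name
    features, target = df.drop(columns=["target"]), df["target"]  # assumed column name
    model = RandomForestRegressor(n_estimators=args.n_estimators)
    model.fit(features, target)
    joblib.dump(model, os.path.join(args.model_dir, "model.joblib"))


def model_fn(model_dir):
    """Inference hook: the serving container calls this to load the trained model."""
    import joblib

    return joblib.load(os.path.join(model_dir, "model.joblib"))


# Train only when run as a script inside a SageMaker training container.
if __name__ == "__main__" and "SM_MODEL_DIR" in os.environ:
    train(parse_args())
```

Keeping the training logic and the model_fn hook in the same file is what lets a single entry point serve both the training job and the endpoint.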

In the following code, we include an entry point script called train_deploy_scikitlearn_without_dependencies.py, which contains our custom training and inference code. You can review the source code for the custom script in its entirety on GitHub.
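A minimal sketch of defining and fitting such an estimator with the SageMaker Python SDK follows. The framework version, instance type, and the train and test channel names are assumptions, not values confirmed by the notebook, and the helper function names are illustrative. The SDK import is deferred so the configuration can be inspected without the SDK installed.

```python
def sklearn_estimator_kwargs(role, framework_version="1.2-1", instance_type="ml.m5.xlarge"):
    """Collect the arguments for the scikit-learn estimator.

    framework_version and instance_type are assumed defaults; adjust them
    to the container version and instance size you actually need.
    """
    return {
        "entry_point": "train_deploy_scikitlearn_without_dependencies.py",
        "role": role,
        "framework_version": framework_version,
        "py_version": "py3",
        "instance_type": instance_type,
        "instance_count": 1,
    }


def train_sklearn_estimator(role, train_s3_uri, test_s3_uri):
    """Create the estimator and start a training job (requires the SageMaker SDK
    and AWS credentials)."""
    from sagemaker.sklearn.estimator import SKLearn

    estimator = SKLearn(**sklearn_estimator_kwargs(role))
    # Channel names are assumptions; each becomes an SM_CHANNEL_<NAME> directory.
    estimator.fit({"train": train_s3_uri, "test": test_s3_uri})
    return estimator
```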

After the estimator finishes training, we deploy it to a SageMaker endpoint:
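A deployment call might look like the following sketch. The helper name, the default instance type, and the endpoint name are assumptions; the deploy method and its parameters come from the SageMaker Python SDK.

```python
def deploy_endpoint(estimator, endpoint_name, instance_type="ml.m5.xlarge"):
    """Deploy a fitted estimator to a real-time endpoint and return its predictor."""
    return estimator.deploy(
        initial_instance_count=1,
        instance_type=instance_type,  # assumed instance size
        endpoint_name=endpoint_name,
    )
```

Naming the endpoint explicitly makes it easier to find and invoke again later.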

Then we use the SageMaker endpoint to make predictions:
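As a rough sketch, a prediction request reduces to a single predict call on the object returned by the deployment. The helper name and the shape of the input (a list of numeric feature rows) are assumptions for illustration.

```python
def predict_prices(predictor, feature_rows):
    """Send feature rows to the endpoint and return the predictions as plain floats."""
    predictions = predictor.predict(feature_rows)
    return [float(value) for value in predictions]
```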

If you want to come back to this notebook after you deployed the SageMaker endpoint, you can use the following snippet of code to invoke it:
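One way to reattach to an existing endpoint is to construct a predictor from the endpoint name. The sketch below assumes the scikit-learn predictor class from the SageMaker Python SDK. The helper name and the optional predictor_cls parameter are illustrative additions that make the function usable with other framework predictors.

```python
def attach_predictor(endpoint_name, predictor_cls=None):
    """Return a predictor bound to an endpoint that is already deployed."""
    if predictor_cls is None:
        # Imported lazily; requires the SageMaker SDK and AWS credentials.
        from sagemaker.sklearn.model import SKLearnPredictor

        predictor_cls = SKLearnPredictor
    return predictor_cls(endpoint_name=endpoint_name)
```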

Modularize your training code and model definitions

The second level of script mode is the ability to modularize and logically organize your custom training jobs, models, and inference processes.

Sometimes keeping all your code in one Python file can be unwieldy. Script mode gives you the flexibility to split your code across multiple Python files. To illustrate this feature, we build a custom PyTorch model and logically separate the model definition from the training and inference logic. This is done by stipulating the source directory when defining your SageMaker training estimator (illustrated in the following code). The model isn’t supported out of the box, but the PyTorch framework is and can be used in the same manner as scikit-learn was in the previous example.

In this PyTorch example, we want to separate the actual neural network definition from the rest of the code by putting it into its own file as demonstrated in the pytorch_script/ folder. You can review the source code for the file organization in its entirety on GitHub.
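The following sketch shows how the source directory might be passed to a PyTorch estimator. Only the pytorch_script/ folder name comes from this post. The file names model.py and train_deploy.py, the framework and Python versions, and the instance type are assumptions.

```python
# Assumed layout of the source directory (file names are hypothetical):
#   pytorch_script/
#       model.py          neural network definition only
#       train_deploy.py   training loop and inference hooks; imports model.py


def pytorch_estimator_kwargs(role, framework_version="1.13.1", py_version="py39",
                             instance_type="ml.m5.xlarge"):
    """Collect the arguments for a PyTorch estimator that uses a source directory."""
    return {
        # With source_dir set, entry_point is resolved relative to that directory.
        "entry_point": "train_deploy.py",
        "source_dir": "pytorch_script",
        "role": role,
        "framework_version": framework_version,
        "py_version": py_version,
        "instance_type": instance_type,
        "instance_count": 1,
    }


def build_pytorch_estimator(role):
    """Create the estimator (requires the SageMaker SDK and AWS credentials)."""
    from sagemaker.pytorch import PyTorch

    return PyTorch(**pytorch_estimator_kwargs(role))
```

Because the whole directory is uploaded, the entry point can import the model definition as an ordinary sibling module.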

Again, after the estimator finishes training, we deploy it to a SageMaker endpoint:

Then we use the endpoint to make predictions:

Import custom libraries and dependencies

The third level of script mode is the ability to bring your own libraries and dependencies to support custom functionality within your models, training jobs, and inference processes. This supercharges your customization options, and allows you to import libraries you created yourself or Python packages hosted on PyPI.

Perhaps the number of Python files you have is becoming unwieldy, or you want more organization. In this scenario, you might be tempted to create your own Python library. Or maybe you want to implement a function not currently supported by SageMaker in the training phase (such as k-fold cross-validation).

Script mode supports adding custom libraries, and those libraries don’t have to be in the same directory as your entry point Python script. You simply need to stipulate the custom library or other dependencies when defining your SageMaker training estimator (illustrated in the following code). SageMaker copies the library folder to the same folder where the entry point script is located when the training job is run.
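A minimal sketch of stipulating a custom library on an XGBoost estimator follows. The dependencies parameter is part of the SageMaker Python SDK framework estimators. The entry point file name, the framework version, and the instance type are assumptions, and the library folder name my_custom_library is used as a placeholder for a local directory.

```python
def xgboost_estimator_kwargs(role, framework_version="1.7-1", instance_type="ml.m5.xlarge"):
    """Collect the arguments for an XGBoost estimator that ships a local library."""
    return {
        "entry_point": "train_xgboost.py",  # hypothetical script name
        "dependencies": ["my_custom_library"],  # local folder copied next to the entry point
        "role": role,
        "framework_version": framework_version,
        "instance_type": instance_type,
        "instance_count": 1,
    }


def build_xgboost_estimator(role):
    """Create the estimator (requires the SageMaker SDK and AWS credentials)."""
    from sagemaker.xgboost.estimator import XGBoost

    return XGBoost(**xgboost_estimator_kwargs(role))
```

Inside the entry point script, the library can then be imported by its folder name, because it sits alongside the script at runtime.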

In this example, we implement k-fold cross-validation for an XGBoost model using a custom-built library called my_custom_library. Although XGBoost is supported out of the box on SageMaker, that version doesn’t support k-fold cross-validation for training. Therefore, we use script mode to use the supported XGBoost container and the concomitant flexibility to include our custom libraries and dependencies. You can review the source code for the custom library in its entirety on GitHub.
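To illustrate the kind of logic such a library could hold, here is a small, dependency-free sketch of k-fold cross-validation. It is not the contents of my_custom_library. The function names and the fit_and_score callback are hypothetical, and a real implementation would typically shuffle the data and train an XGBoost model inside the callback.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        validation = list(range(start, stop))
        training = list(range(0, start)) + list(range(stop, n_samples))
        yield training, validation
        start = stop


def cross_validate(n_samples, k, fit_and_score):
    """Average the score returned by fit_and_score(train_idx, val_idx) over all folds."""
    scores = [fit_and_score(train, val) for train, val in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

Each sample lands in exactly one validation fold, so the averaged score reflects performance on every row of the training data.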

After we train the model with k-fold cross-validation, we deploy it to a SageMaker endpoint:

Then we use the endpoint to make predictions:

Clean up

To avoid incurring future charges, make sure to delete each SageMaker endpoint created in this post. You can do this by running the cleanup cell at the end of the notebook:
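A cleanup step along these lines might look like the following sketch. It assumes you kept a reference to each predictor returned at deployment time, and the helper name is illustrative.

```python
def delete_endpoints(predictors):
    """Delete the endpoint behind each predictor and return the deleted names."""
    deleted = []
    for predictor in predictors:
        predictor.delete_endpoint()
        deleted.append(predictor.endpoint_name)
    return deleted
```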

Also make sure to delete any S3 buckets used for storing data. Finally, delete the SageMaker notebook instance.

Conclusions

In this post, we discussed three primary use cases for using script mode, and how script mode can accelerate your algorithm development and testing while simultaneously decreasing the amount of time, effort, and resources required to bring your custom algorithm to the cloud. Script mode can help if you require an added level of customization in your ML model development and deployment but don’t need or want to invest the time, money, and resources to create and support bespoke containers. Check out the example code in the accompanying GitHub repo and begin testing your ML models in the AWS Cloud today.

About the Authors

Bobby Lindsey is a Machine Learning Specialist at Amazon Web Services. He’s been in technology for over a decade, spanning various technologies and multiple roles. He is currently focused on combining his background in software engineering, DevOps, and machine learning to help customers deliver machine learning workflows at scale. In his spare time, he enjoys reading, research, hiking, biking, and trail running.

David Ehrlich is a Machine Learning Specialist at Amazon Web Services. He is passionate about helping customers unlock the true potential of their data. In his spare time, he enjoys exploring the different neighborhoods in New York City, going to comedy clubs, and traveling.
