At Meta, we support real-time communication (RTC) for billions of people through our apps, including Messenger, Instagram, and WhatsApp.

We’ve seen significant benefits by adopting the AV1 codec for RTC.

Here’s how we are improving the RTC video quality for our apps with tools like the AV1 codec, the challenges we face, and how we mitigate those challenges.

The last few decades have seen tremendous improvements in mobile phone camera quality as well as video quality for streaming video services. But while video quality in real-time communication (RTC) applications has also improved over time, it has always lagged behind camera quality.

When we looked at ways to improve video quality for RTC across our family of apps, AV1 stood out as the best option. Meta has increasingly adopted the AV1 codec over the years because it offers high video quality at bitrates much lower than older codecs. But, as we’ve implemented AV1 for mobile RTC, we’ve also had to address a number of challenges including scaling, improving video quality for low-bandwidth users as well as high-end networks, CPU and battery usage, and maintaining quality stability.

Improving video quality for low-bandwidth networks

This post is going to focus on peer-to-peer (P2P, or 1:1) calls, which involve two participants.

People who use our products and services experience a range of network conditions – some have really great networks, while others are using throttled or low-bandwidth networks.

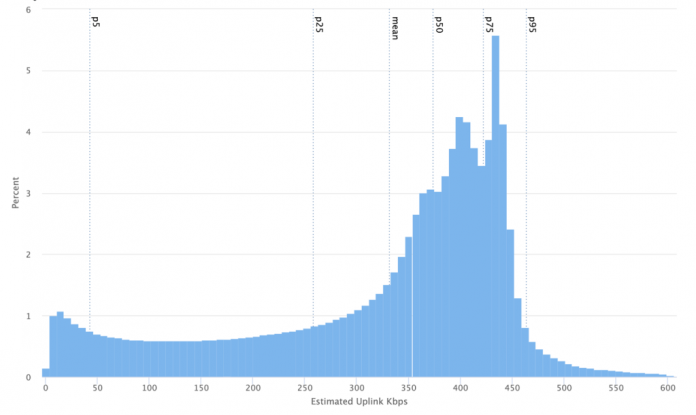

This chart illustrates what the distribution of bandwidth looks like for some of these calls on Messenger:

Figure 1: Bandwidth distribution of P2P calls on Messenger.

As seen in Figure 1, some calls operate in very low-bandwidth conditions.

We consider anything less than 300 Kbps to be a low-end network, but we also see a lot of video calls operating at just 50 Kbps, or even under 25 Kbps.

Note that this bandwidth is the share for the video encoder. Total bandwidth is shared with audio, RTP overhead, signaling overhead, RTX (re-transmissions of packets to handle lost packets)/FEC (forward error correction)/duplication (packet duplication), and so on. The big assumption here is that the bandwidth estimator is working correctly and estimating true bitrates.

There are no universal definitions for low, mid, and high networks, but for the purpose of this blog post, less than 300 Kbps will be considered as low, 300-800 Kbps as mid, and above 800 Kbps as a high, HD-capable, or high-end network.
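As a minimal sketch, the tiers defined above can be written as a small helper. The function and constant names are ours, chosen for illustration, and the input is assumed to be the video encoder's share of bandwidth in Kbps.

```python
LOW_MAX_KBPS = 300  # below this: low-end network
MID_MAX_KBPS = 800  # above this: high-end, HD-capable network


def classify_network(video_kbps: float) -> str:
    """Return 'low', 'mid', or 'high' for the video encoder's bandwidth share."""
    if video_kbps < LOW_MAX_KBPS:
        return "low"
    if video_kbps <= MID_MAX_KBPS:
        return "mid"
    return "high"


for kbps in (25, 50, 300, 800, 3500):
    print(kbps, classify_network(kbps))
```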

When we looked into improving the video quality for low-bandwidth users, there were a few key options. Migrating to a newer codec such as AV1 presented the greatest opportunity. Other options such as better video scalers and region-of-interest encoding offered incremental improvements.

Video scalers

We use WebRTC in most of our apps, but the video scalers shipped with WebRTC don’t produce the best scaling quality. We have been able to improve video scaling quality significantly by leveraging in-house scalers.

At low bitrates, we often end up downscaling the video to encode at ¼ resolution (assuming the camera capture is 640×480 or 1280×720). With our custom scaler implementations, we have seen significant improvements in video quality. In public tests, we saw peak signal-to-noise ratio (PSNR) gains of 0.75 dB on average.
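For readers less familiar with PSNR, here is a minimal sketch of the standard definition, computed over two equally sized sequences of 8-bit sample values. It is the textbook full-reference formula, not the scaler evaluation pipeline used in our tests, and the function name is illustrative.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length sample sequences."""
    if len(reference) != len(distorted) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error between the original and the processed samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10 * math.log10(max_value ** 2 / mse)


print(round(psnr([100, 100], [101, 99]), 2))  # MSE of 1 -> 48.13
```

Because PSNR is logarithmic, a 0.75 dB gain corresponds to roughly 16 percent lower mean squared error.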

Here is a snapshot showing results with the default libyuv scaler (a box filter):

Figure 2.a: Video image results using WebRTC/libyuv video scaler.

And the results after downscaling with our video scaler:

Figure 2.b: Video image results using Meta’s video scaler.

Region-of-interest encoding

Identifying the region of interest (ROI) allowed us to optimize by spending more encoder bitrate in the area that’s most important to a viewer (the speaker’s face in a talking head video, for example). Most mobile devices have APIs to locate the face region without incurring additional CPU overhead. Once we have found the face region, we can configure the encoder to spend more bits on this important region and fewer on the rest. The easiest way to do this was to add encoder APIs for configuring the quantization parameters (QP) for the ROI versus the rest of the image. These changes provided incremental improvements in video quality metrics like PSNR.
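The idea can be sketched as a per-block QP delta map: blocks that overlap the face rectangle get a lower QP (more bits), and the rest get a higher one. This is an illustration only. The function name, the 64-pixel block size, and the delta values are assumptions, and real encoders expose ROI controls through their own APIs.

```python
def build_qp_delta_map(frame_w, frame_h, face_rect, block=64,
                       roi_delta=-4, bg_delta=2):
    """Return rows of per-block QP deltas; face_rect is (x, y, w, h) in pixels."""
    fx, fy, fw, fh = face_rect
    cols = -(-frame_w // block)  # ceiling division
    rows = -(-frame_h // block)
    qp_map = []
    for by in range(rows):
        row = []
        for bx in range(cols):
            x0, y0 = bx * block, by * block
            # Standard rectangle-overlap test between the block and the face.
            overlaps = (x0 < fx + fw and x0 + block > fx and
                        y0 < fy + fh and y0 + block > fy)
            row.append(roi_delta if overlaps else bg_delta)
        qp_map.append(row)
    return qp_map


for row in build_qp_delta_map(256, 128, face_rect=(64, 0, 64, 64)):
    print(row)
```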

Adopting the AV1 video codec

The video encoder is a key element when it comes to video quality for RTC. H.264 has been the most popular codec over the last decade, with hardware support and most applications supporting it. But it is a 20-year-old codec. Back in 2018, the Alliance for Open Media (AOMedia) standardized the AV1 video codec. Since then, several companies including Meta, YouTube, and Netflix have deployed it at a large scale for video streaming.

At Meta, moving from H.264 to AV1 led us to our greatest improvements in video quality at low bitrates.

Figure 3: Improvements over time, moving from H.262 to AV1 and H.266

Why AV1?

We chose to use AV1 in part because it’s royalty-free. Codec licensing (and concurrent fees) was an important aspect in our decision-making process. Typically, if an application uses a device’s hardware codec, no additional codec licensing costs will be incurred. But if an application is shipping a software version of the codec, there will most likely be licensing costs to cover.

But why do we need to use software codecs even though most phones have hardware-supported codecs?

Most mobile devices have dedicated hardware for video encoding and decoding. And these days most mobile devices support H.264 and even H.265. But those encoders are designed for common use cases such as camera capture, which uses much higher resolutions, frame rates, and bitrates. Most mobile device hardware is currently capable of encoding 4K 60 FPS in real time with very low battery usage, but the results of encoding a 7 FPS, 320×180, 200 Kbps video are often worse than those of software encoders running on the same mobile device.

The reason for that is prioritization of the RTC use case. Most independent hardware vendors (IHVs) are not aware of the network conditions where RTC calls operate; hence, these hardware codecs are not optimized for RTC scenarios, especially for low bitrates, resolutions, and frame rates. So, we leverage software encoders when operating in these low bitrates to provide high-quality video.

And since we can’t ship software codecs without a license, AV1 is a very good option for RTC.

AV1 for RTC

The biggest reason to move to a more advanced video codec is simple: The same quality experience can be delivered with a much lower bitrate, and we can deliver a much higher-quality real-time calling experience for our users who are on bandwidth-constrained networks.

Measuring video quality is a complex topic, but a relatively simple way to look at it is to use the Bjontegaard Delta-Bit Rate (BD-BR) metric. BD-BR compares how much bitrate various codecs need to produce a certain quality level. By encoding multiple samples at different bitrates and measuring the quality of each result, you obtain a rate-distortion (RD) curve for each codec, and from the RD curves you can derive the BD-BR (see Figure 4).
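To make the metric concrete, here is a simplified sketch of a BD-BR calculation. The standard method fits a cubic polynomial to each RD curve. This version uses piecewise-linear interpolation of log bitrate instead, to stay dependency-free, so its numbers will differ slightly from reference tools. The sample points are made up for illustration and are not our measurements.

```python
import math


def _avg_log_rate(points, q_lo, q_hi):
    """Average log10(bitrate) over [q_lo, q_hi], interpolating linearly in quality."""
    pts = sorted((q, math.log10(r)) for r, q in points)

    def interp(q):
        for (q0, l0), (q1, l1) in zip(pts, pts[1:]):
            if q0 <= q <= q1:
                return l0 + (l1 - l0) * (q - q0) / (q1 - q0)
        raise ValueError("quality outside measured range")

    # The trapezoid rule is exact for a piecewise-linear curve split at its knots.
    knots = [q_lo] + [q for q, _ in pts if q_lo < q < q_hi] + [q_hi]
    area = sum((b - a) * (interp(a) + interp(b)) / 2
               for a, b in zip(knots, knots[1:]))
    return area / (q_hi - q_lo)


def bd_rate_percent(anchor, test):
    """Average bitrate change of `test` vs. `anchor` at equal quality.

    Each curve is a list of (bitrate_kbps, psnr_db) points with distinct
    quality values. Negative results mean `test` needs less bitrate.
    """
    q_lo = max(min(q for _, q in anchor), min(q for _, q in test))
    q_hi = min(max(q for _, q in anchor), max(q for _, q in test))
    if q_hi <= q_lo:
        raise ValueError("RD curves do not overlap in quality")
    diff = _avg_log_rate(test, q_lo, q_hi) - _avg_log_rate(anchor, q_lo, q_hi)
    return (10 ** diff - 1) * 100


# Hypothetical RD points: the second codec reaches each quality at half the bitrate.
older = [(100, 30.0), (200, 33.0), (400, 36.0), (800, 39.0)]
newer = [(50, 30.0), (100, 33.0), (200, 36.0), (400, 39.0)]
print(round(bd_rate_percent(older, newer), 1))  # -50.0
```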

As can be seen in Figure 4, AV1 provided higher quality for all bitrate ranges in our local tests.

Figure 4: Bitrate distortion comparison chart.

Screen-encoding tools

AV1 also has a few key tools that are useful for RTC. Screen content quality is becoming an increasingly important factor for Meta, with relevant use cases, including screen sharing, game streaming, and VR remote desktop, requiring high-quality encoding. In these areas, AV1 truly shines.

Traditionally, video encoders aren’t well suited to complex content such as text with a lot of high-frequency content, and humans are sensitive to reading blurry text. AV1 has a set of coding tools (palette mode and intra-block copy) that drastically improve performance for screen content. Palette mode is designed according to the observation that the pixel values in a screen-content frame usually concentrate on a limited number of color values. Palette mode can represent the screen content efficiently by signaling the color clusters instead of the quantized transform-domain coefficients. In addition, for typical screen content, repetitive patterns can usually be found within the same picture. Intra-block copy facilitates block prediction within the same frame, so that the compression efficiency can be improved significantly. That AV1 provides these two tools at the baseline profile is a huge plus.

Reference picture resampling: Fewer key frames

Another useful feature is reference picture resampling (RPR), which allows resolution changes without generating a key frame. In video compression, a key frame is one that’s encoded independently, like a still image. It’s the only type of frame that can be decoded without having another frame as reference.

For RTC applications, bandwidth changes often, so frequent resolution changes are needed to adapt to network conditions. With older codecs like H.264, each of these resolution changes requires a key frame that is much larger in size and thus inefficient for RTC apps. Such large key frames increase the amount of data that needs to be sent over the network and result in higher end-to-end latencies and congestion.

By using RPR, we can change resolution without generating a key frame.

Challenges around improving video quality for low-bandwidth users

CPU/battery usage

AV1 is great for coding efficiency, but codecs achieve this at the cost of higher CPU and battery usage. A lot of modern codecs pose these challenges when running real-time applications on mobile platforms.

Based on local lab testing, we anticipated a roughly 4 percent increase in battery usage, and we saw similar results in public tests. We used a power meter to do this local battery measurement.

Even though the AV1 encoder itself increased CPU usage three-fold when compared to H.264 implementation, the overall contribution of CPU usage from the encoder was a small part of the battery usage. The phone display screen, networking/radio, and other processes using the CPU contribute significantly to battery usage, hence the increase in battery usage was 5-6 percent (a significant increase in battery usage).

A lot of calls run out of device battery, or people hang up once their operating system indicates a low battery, so increasing battery usage isn’t worthwhile for users unless it provides increased value such as video quality improvement. Even then it’s a trade-off between video quality versus battery use.

We use WebRTC and Session Description Protocol (SDP) for codec negotiation, which allows us to negotiate multiple codecs (e.g., AV1 and H.264) up front and then switch the codecs without any need for signaling or a handshake during the call. This means the codec switch is seamless, without users noticing any glitches or pauses in video.

We created a custom encoder that encapsulates both H.264 and the AV1 encoders. We call it a hybrid encoder. This allowed us to switch the codec during the call based on triggers such as CPU usage, battery level, or encoding time — and to switch to the more battery-efficient H.264 encoder when needed.
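The hybrid encoder can be sketched as a thin wrapper that picks a codec per frame. This is not our production implementation. The class and field names, the threshold values, and the callables standing in for real encoders are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    cpu_usage: float       # 0.0 to 1.0
    battery_level: float   # 0.0 to 1.0
    encode_time_ms: float  # recent average time to encode one frame


class HybridEncoder:
    """Wraps an AV1 and an H.264 encoder and switches between them."""

    def __init__(self, av1_encoder, h264_encoder,
                 max_cpu=0.8, min_battery=0.2, max_encode_ms=30.0):
        # The encoders are plain callables here; thresholds are hypothetical.
        self._encoders = {"AV1": av1_encoder, "H264": h264_encoder}
        self.max_cpu = max_cpu
        self.min_battery = min_battery
        self.max_encode_ms = max_encode_ms
        self.active = "AV1"

    def select_codec(self, state: DeviceState) -> str:
        # Fall back to the cheaper H.264 encoder when any trigger fires.
        constrained = (state.cpu_usage > self.max_cpu
                       or state.battery_level < self.min_battery
                       or state.encode_time_ms > self.max_encode_ms)
        self.active = "H264" if constrained else "AV1"
        return self.active

    def encode(self, frame: bytes, state: DeviceState):
        codec = self.select_codec(state)
        return codec, self._encoders[codec](frame)


encoder = HybridEncoder(lambda f: b"av1:" + f, lambda f: b"h264:" + f)
print(encoder.encode(b"frame", DeviceState(0.30, 0.90, 12.0)))
print(encoder.encode(b"frame", DeviceState(0.95, 0.90, 12.0)))
```

Because both codecs were negotiated up front, the receiver can decode whichever one arrives. A real implementation would likely also add hysteresis so the encoder does not flip back and forth when a trigger hovers near its threshold.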

Increased crashes and out of memory errors

Even without new leaks added, AV1 used more memory than H.264. Any time additional memory is used, apps are more likely to hit out of memory (OOM) crashes or hit OOM sooner because of other leaks or memory demands on the system from other apps. To mitigate this, we had to disable AV1 on devices with low memory. This is one area for improvement and for further optimizing the encoder’s memory usage.

In-product quality measurement

To compare the quality between H.264 and AV1 using public tests, we needed a low-complexity metric. Metrics such as encoded bitrate and frame rate won’t show any gains: the total bandwidth available to send video is limited by network capacity, so bitrates and frame rates will not change much when the codec changes. We had been using composite metrics that combine the quantization parameter (QP, often used as a proxy for video quality because quantization is what introduces pixel data loss during encoding), resolution, frame rate, and freezes. But QP is not comparable between the AV1 and H.264 codecs, and hence can’t be used.

PSNR is a standard metric, but it’s reference-based: it compares each decoded frame against the original, which is not available on the receiving side of an RTC call. Non-reference video-quality metrics are quite CPU-intensive (e.g., BRISQUE: Blind/Referenceless Image Spatial Quality Evaluator), though we are exploring those as well.

Figure 6: High-level architecture for PSNR computation in RTC.

We have come up with a framework for PSNR computation. We first modified the encoder to report distortions caused by compression (most software encoders already have support for this metric). Then we designed a lightweight scaling-distortion algorithm that estimates the distortion introduced by video scaling. This algorithm combines these scaling distortions with the encoder distortions to produce the output PSNR. We developed and verified this algorithm locally and will be sharing the findings in publications and at academic conferences over the next year. With this lightweight PSNR metric, we saw 2 dB improvements with AV1 compared to H.264.

Challenges around improving video quality for high-end networks

As a quick review: For our purposes, high bandwidth covers users for whom bandwidth is greater than 800 Kbps.

Over the years, there have been huge improvements in camera capture quality. As a result, people’s expectations have gone up, and they want to see RTC video quality on par with local camera capture quality.

Based on local testing, we settled on settings resulting in video quality that looks similar to that of camera recordings. We call this HD mode. We found that with a video codec like H.264 encoding at 3.5 Mbps and 30 frames per second, 720p resolution looked very similar to local camera recordings. We also compared 720p to 1080p in subjective quality tests and found that the difference is not noticeable on most devices, except for those with a larger screen.

Bandwidth estimator improvements

Improving the video quality for users who have high-end phones with good CPUs, good batteries, hardware codecs, and good network speeds seems trivial. It may seem like all you have to do is increase the maximum bitrate, capture resolution, and capture frame rates, and users will send high-quality video. But, in reality, it’s not that simple.

If you increase the bitrate, your bandwidth estimation and congestion detection algorithms will hit congestion more often, so they will be tested many more times than they would be at lower bitrates.

Figure 7: Example showing how using higher bandwidth increases the instances for congestion.

If you look at the network pipeline in Figure 7, the higher the bitrates you are using, the more your algorithm/code will be tested for robustness over the time of the RTC call. Figure 7 shows how using 1 Mbps hits more congestion than using 500 Kbps and using 3 Mbps hits more congestion than 1 Mbps, and so on. If you are using bandwidths lower than the minimum throughput of the network, however, you won’t hit congestion at all. For example, see the 500-Kbps call in Figure 7.
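The effect can be reproduced with a toy model: take a trace of available throughput over time and count the samples where the send bitrate exceeds it. The trace values below are made up for illustration and are not taken from Figure 7.

```python
def count_congestion_samples(throughput_kbps, send_kbps):
    """Count samples where the send bitrate exceeds the available throughput."""
    return sum(1 for capacity in throughput_kbps if send_kbps > capacity)


# Hypothetical per-second available throughput for one call, in Kbps.
trace = [2500, 900, 3200, 700, 1500, 4000, 600, 2800]

for bitrate in (500, 1000, 3000):
    print(bitrate, "Kbps ->", count_congestion_samples(trace, bitrate), "congested samples")
```

With this trace, sending at 500 Kbps never exceeds the minimum throughput of 600 Kbps, while 1 Mbps is congested in 3 samples and 3 Mbps in 6.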

To mitigate these issues, we improved congestion detection. For example, we added custom ISP throttling detection, something that was not being caught by the traditional delay-based estimator of WebRTC.

Bandwidth estimation and network resilience form a complex area on their own, and this is where RTC products stand out. They have their own custom algorithms that work best for their products and customers.

Stable quality

People don’t like oscillations in video quality. These can happen when we send high-quality video for a few seconds and then drop back to low-quality because of congestion. Learning from past history, we added support in bandwidth estimation to prevent these oscillations.
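One generic way to damp such oscillations is hysteresis: drop quality immediately when the estimate falls, but raise it only after the estimate has stayed high for a while. The sketch below illustrates that general technique and is not our bandwidth estimator. The class name and the hold length are assumptions, and the 800 Kbps threshold reuses the high-end definition from earlier in this post.

```python
class QualityStabilizer:
    """Enter high quality only after a sustained run of good bandwidth estimates."""

    def __init__(self, hd_threshold_kbps=800, hold_samples=5):
        self.hd_threshold_kbps = hd_threshold_kbps
        self.hold_samples = hold_samples  # hypothetical: good samples required
        self.good_streak = 0
        self.hd = False

    def update(self, estimate_kbps):
        if estimate_kbps > self.hd_threshold_kbps:
            self.good_streak += 1
            if self.good_streak >= self.hold_samples:
                self.hd = True
        else:
            # Any bad sample drops quality right away and resets the streak.
            self.good_streak = 0
            self.hd = False
        return self.hd


stabilizer = QualityStabilizer(hold_samples=3)
estimates = [900, 900, 500, 900, 900, 900, 900, 900]
print([stabilizer.update(e) for e in estimates])
```

In this run, the brief dip to 500 Kbps resets the streak, so high quality is enabled only from the sixth sample onward instead of flickering on and off.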

Audio is more important than video for RTC

When network congestion occurs, all media packets could be lost. This causes video freezes and broken audio (aka robotic audio). For RTC, both are bad, but audio quality is more important than video.

Broken audio often completely prevents conversations from happening, often causing people to hang up or redial the call. Broken video, on the other hand, often results in less delightful conversations, but, depending on the scenario, it could also be a blocker for some users.

At high bitrates like 2.5 Mbps and higher, you can afford to have three to five times more audio packets or duplication without any noticeable degradation to video. When operating in these higher bitrates with cell phone connections, we saw more of these congestion, packet loss, and ISP throttling issues, so we had to make changes to our network resiliency algorithms. And since people are highly sensitive to data usage on their cell phones, we disabled high bitrates on cellular connections.
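A quick back-of-the-envelope calculation shows why audio redundancy is affordable at high bitrates. The 32 Kbps audio bitrate below is an assumption for illustration, not a figure from our calls, and "five times" is modeled as one original stream plus four redundant copies.

```python
def redundant_audio_share(total_kbps, audio_kbps, copies):
    """Fraction of the total bitrate used by the extra audio copies (copies - 1)."""
    if copies < 1 or total_kbps <= 0:
        raise ValueError("copies must be >= 1 and total_kbps must be positive")
    return audio_kbps * (copies - 1) / total_kbps


ASSUMED_AUDIO_KBPS = 32  # assumption for illustration only

for total in (300, 2500):
    share = redundant_audio_share(total, ASSUMED_AUDIO_KBPS, copies=5)
    print(f"{total} Kbps call: {share:.1%} of bandwidth spent on redundant audio")
```

Under these assumptions, the redundant audio takes about 5.1 percent of a 2.5 Mbps call but about 42.7 percent of a 300 Kbps call.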

When to enable HD?

We used ML-based targeting to guess which call should be HD-capable. We relied on the network stats from the users’ previous calls to predict if HD should be enabled or not.

Battery regressions

We have lots of metrics, including performance, networking, and media quality, to track the quality of RTC calls. When we ran tests for HD, we noticed regressions in battery metrics. What we found was that most battery regressions do not come from higher bitrates or resolution but from the capture frame rates.

To mitigate the regressions, we built a mechanism for detecting both caller and callee device capabilities, including device model, battery levels, Wi-Fi or mobile usage, and so on. To enable high-quality modes, we check both sides of the call to ensure that they satisfy the requirements and only then do we enable these high-quality, resource-intensive configurations.
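The two-sided check can be sketched as follows. The field names and the battery threshold are hypothetical. The Wi-Fi requirement reflects our decision to disable high bitrates on cellular connections.

```python
from dataclasses import dataclass


@dataclass
class DeviceCapabilities:
    hd_capable_model: bool  # device model is on an HD allowlist (assumed)
    battery_level: float    # 0.0 to 1.0
    on_wifi: bool


def can_enable_hd(caller, callee, min_battery=0.3):
    """Enable HD only when both sides of the call meet every requirement."""

    def meets_requirements(device):
        return (device.hd_capable_model
                and device.on_wifi
                and device.battery_level >= min_battery)

    return meets_requirements(caller) and meets_requirements(callee)


caller = DeviceCapabilities(hd_capable_model=True, battery_level=0.8, on_wifi=True)
callee = DeviceCapabilities(hd_capable_model=True, battery_level=0.15, on_wifi=True)
print(can_enable_hd(caller, callee))  # False: the callee's battery is too low
```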

Figure 8: Signaling server setup for turning HD on or off.

What the future holds for RTC

Hardware manufacturers are acknowledging the significant benefits of using AV1 for RTC. The new Apple iPhone 15 Pro supports hardware AV1 decoding, and the Google Pixel 8 supports AV1 encoding and decoding. Hardware codecs are an absolute necessity for high-end networks and HD resolutions. Video calling is becoming as ubiquitous as traditional audio calling, and we hope that as hardware manufacturers recognize this shift, there will be more opportunities for collaboration between RTC app creators and hardware manufacturers to optimize encoders for these scenarios.

On the software side, we will continue to work on optimizing AV1 software encoders and developing new encoder implementations. We try to provide the best experience for our users, but at the same time we want to let people have full control over their RTC experience. We will provide controls to the users so that they can choose whether they want higher quality at the cost of battery and data usage, or vice versa.

We also plan to work with IHVs to collaborate on hardware codec development to make these codecs usable for RTC scenarios including low-bandwidth use cases.

We also will investigate forward-looking features such as video processing to increase the resolution and frame rates on the receiver’s rendering stack and leveraging AI/ML to improve bandwidth estimation (BWE) and network resiliency.

Further, we’re investigating Pixel Codec Avatar technologies that will allow us to transmit the model/share once and then send the geometry/vectors for receiver side rendering. This enables video rendering with much smaller bandwidth usage than traditional video codecs for RTC scenarios.

The post Better video for mobile RTC with AV1 and HD appeared first on Engineering at Meta.
