In this post, we discuss AWS Database Migration Service (AWS DMS) and how you can use Amazon CloudWatch and logs to monitor the performance and health of a replication task. Additionally, we discuss how to set up CloudWatch Alarms for an AWS DMS task as well as instance related metrics that we configure to receive automated alerts. Finally, we will go over troubleshooting a homogenous migration between self-managed Oracle 12c to Amazon Relational Database Service (Amazon RDS) for Oracle 19c.

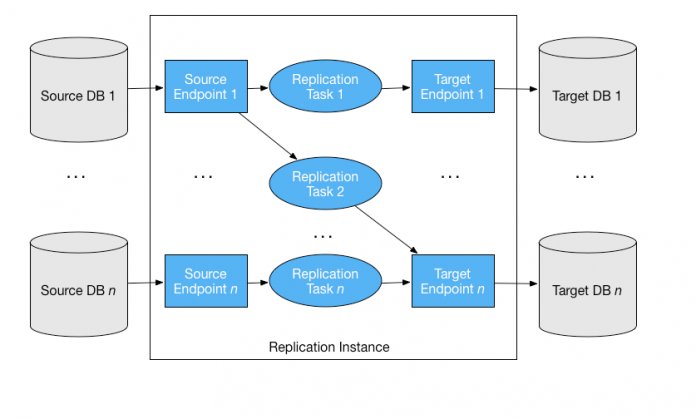

The following diagram illustrates what an AWS DMS setup typically looks like with multiple tasks defined under an AWS DMS replication instance:

Prerequisites

To follow along with this post, you should have familiarity with the following AWS services:

AWS Database Migration Service (AWS DMS)

Amazon CloudWatch

Amazon RDS for Oracle

CloudWatch metrics for the replication instance

It’s always recommended to monitor the CloudWatch metrics for your replication instance in order to ensure the instance doesn’t get overloaded and impact the performance of the AWS DMS replication task. Metrics like CPU, memory and IOPS usage play a critical role in a successful migration. Various factors can influence these metrics depending on the source and target DB engine as well as the type of the data.

For example, certain data types like XML have been known to consume additional CPU cycles during migration. Additionally, the CPU usage is greatly increased while employing parallel threads. Therefore, we need to ensure that the instance isn’t throttled.

AWS DMS task performance depends a lot on the availability of replication instance memory. During CDC AWS DMS uses two stream buffers (for incoming and outgoing data) as well as a very critical component known as a sorter. If any of these buffers lack suitable memory, the task will experience slower performance or a hung state.

Memory is also one of the critical resources to monitor while migrating changes between systems with a high transaction per second value (TPS) or for large objects (LOBs).

AWS DMS replication instances use GP2 EBS volumes for storage which carry certain limitations such as IOPS limits. While working with CSV-based targets like MySQL, PostgreSQL and Amazon Redshift the replication incurs additional I/O. I/O is also incurred when the allocated memory buffers are full and changes are being swapped out to disk.

The following table lists critical metrics to consider when running multiple tasks under the same instance.

Category

CloudWatch Metric

Explanation

Infrastructure

CPUUtilization

The percentage of CPU utilized on the replication instance. Elevated CPU usage may slow down the migration task.

FreeableMemory

The amount of memory that is currently in use that can be freed and used for other purposes. It’s is a combination of buffers and cache in use on the replication instance. As explained earlier a major part of full load (cached changes) and ongoing replication depends on memory. It’s important to monitor this metrics for task performance reasons and also to avoid potential out-of-memory related errors.

SwapUsage

The amount of swap space in bytes used by the replication instance. The swap usage mentioned here is from an operating system standpoint and excessive swap usage together with low freeable memory indicates memory pressure. This is different from the AWS DMS swap files that get stored in the disk during a CDC replication.

Workload

ReadIOPS

Similar to an RDS instance, the total baseline IOPS is three times the amount of allocated GP2 volume size with a burstable IOPS until 3000 for volume size up to 1 TB. This is consumed by both read and write I/O.

WriteIOPS

Write in the replication instance includes the writes of the task logs, the replication instance logs. If a detailed debug is enabled, it contributes to more writes because extra information is written to the disk. Also as mentioned previously we incur more write IOPS when replicating to a CSV-based target

ReadThroughput

The average number of bytes read from disk per second. This depends on the instance class bandwidth. For more information, refer to Amazon EBS-optimized instances.

WriteThroughput

The average number of bytes written to disk per second. This depends on the instance class bandwidth. For more information, refer to Amazon EBS-optimized instances.

Performance

ReadLatency

The average amount of time taken for the read operations. Generally, GP2 is an SSD-based volume and it provides single-digit millisecond latency. Therefore, for any spike in read latency, you can check for an I/O related bottleneck like IOPS or throughput.

WriteLatency

The average amount of time taken for the write operations. Generally, GP2 is an SSD based volume and it provides single-digit millisecond latency. Therefore, for any spike in write latency, you can check for IO related bottleneck like IOPS or throughput.

DiskQueueDepth

The number of outstanding read/write requests (I/Os) waiting to access the disk. This can be due to many reasons like exhaustion of I/O credits, or the workload hitting max throughput.

CloudWatch metrics for the replication task

Replication task-related metrics play a pivotal role in determining performance. We encourage you to monitor these metrics periodically to ensure a successful migration.

The following table lists important replication task metrics for full load and CDC:

Category

CloudWatch Metric

Explanation

Full Load

FullLoadThroughputBandwidthTarget

The outgoing data from the replication instance to the target endpoint in KB per second

Full Load

FullLoadThroughputRowsTarget

The outgoing data from the replication instance to the target endpoint in rows per second

CDC

CDCIncomingChanges

The total number of change events that are waiting to be applied at the target instance. This is an important metric to consider when we don’t see the latest data in the target. Possible causes can be a sudden outburst of traffic from the source instance or AWS DMS isn’t able to apply the changes to the target in a timely manner

CDC

CDCLatencySource

The gap in seconds between the last event capture timestamp from the source instance and the present timestamp of the replication instance. A high value for this indicates that the reading process from the source isn’t fast enough. This can be due to the use of LogMiner for a highly active Oracle source where Binary Reader should be used, for example.

CDC

CDCLatencyTarget

The gap in seconds between the oldest events waiting to be committed to the target and the current timestamp of the replication instance. A high value for this metrics indicates that the target apply process is delayed or it can’t keep up with the source capture rate. One method to curve it is to make sure that the target instance isn’t throttled and that the target tables have up to date stats along with the right set of indexes. Batch apply can also help in some cases.

CDC

CDCChangesMemorySource

The number of changes waiting in the replication instances memory buffer while waiting for transactions to be committed at the source instance.

CDC

CDCChangesMemoryTarget

The number of changes waiting in the replication instances memory buffer while waiting for transactions to be committed at the target instance.

CDC

CDCChangesDiskSource

When the allocated memory buffer is full, the changes still waiting to get committed at the source are flushed on to disk. This metrics indicates how many changes are being preserved at the disk level.

CDC

CDCChangesDiskTarget

When the allocated memory buffer is full, the changes still waiting to get committed at the target are flushed on to disk. This metrics indicates how many changes are being preserved at the disk level.

Task debuggers

Although the preceding CloudWatch metrics help you to check the status of AWS DMS tasks, CloudWatch logs for the AWS DMS task are the next best place to look for any table errors, exceptions, task failures and performance problems. With the default logging level, AWS DMS prints out the error or warning messages indicating the likely reason for a table load or task failure. However, you may often need to elevate the logging levels to get additional details to troubleshoot further. This can also be one of the common troubleshooting approaches provided by AWS DMS Support in case sufficient information isn’t available using the default logging.

When required we can turn on the debug logging for the following AWS DMS components:

Category

CloudWatch Metric

Explanation

Full Load

SOURCE_UNLOAD

This logging component refers to reading data from the source endpoint during full load operation and should be turned on when AWS DMS fails to read the source data during the full load process

CDC

SOURCE_CAPTURE

This logging component refers to the reading of the ongoing change components from the source instance and passing them to the AWS DMS sorter component for processing. Debugging will help in scenarios when AWS DMS can’t capture the change information from the source (read from redo logs from Binary Reader in the case of an Oracle source).

Full Load

TARGET_LOAD

This logging component refers to writing data to the target endpoint during a full load operation. This should be turned on when AWS DMS fails to dump the data it has read from the source endpoint into the target during a full load phase.

CDC

TARGET_APPLY

This logging component refers to writing ongoing change components from the source instance and passing them to the AWS DMS sorter component for processing. Debugging will help in scenarios when AWS DMS can’t capture the change information from the source (read from redo logs from Binary Reader in the case of an Oracle source).

Task

TASK_MANAGER

The task manager is the main component that is responsible for handling the various other components and streams for a successful migration. Setting up debugging at the task manager will help you figure out task crash or hung scenarios as well as abrupt failures.

Performance enhancers

Various factors can directly and indirectly influence the speed of a data migration while using AWS DMS. In this section, we have listed some of the common factors for full load as well as CDC when using Oracle Database as your source.

1. Network bandwidth

The network pipe plays a key role when transmitting data and this is more relevant for scenarios when you’re migrating data from or to your on-premises network. Depending on the data transfer requirement, you may want to use an AWS Direct Connect or a dedicated VPN connection that can cope with the change data generation.

2. Source and target DB node

If critical OS metrics like CPU cycles, memory units, or IOPS get throttled at either the source or the target node, the entire migration will stall. Therefore, monitoring key performance indicators for the DB instances at

both sides, is of paramount importance. If multiple replication tasks need to be run concurrently (which is often the requirement), it should be done in a phased manner after you ensure the resources can keep up.

3. Replication instances

In addition to the replication instance metrics called out earlier, it is important to correctly size your replication instance. Each replication instance is capable of supporting up to 200 tasks, but this may not work for all kinds of workloads. We recommend running individual dataset migration/ replication tests to identify your performance SLA. Also note that the storage attached to the AWS DMS replication instance is primarily intended for storing in-transit files; it doesn’t need to be equivalent to the transmitted data size. For example, we don’t need to have a 100 GB replication instance storage in order to transmit 100 GB of data.

4. Task parallelism

We can speed up the data replication by loading multiple tables in parallel during full load. Because this post focuses on the use of RDBMS endpoints, this option applies purely to the full load process. Task parallelism is controlled by the parameter ‘MaxFullLoadSubTasks’ which determines how many full load sub-tasks are run in parallel. By default, this parameter is set to 8, which means during full load, eight tables (if selected in table mapping) are loaded together. We can adjust this parameter in the full-load task settings section of the task JSON:

When migrating data for huge tables, we can increase the value of the parameter up to 49, but you should be cognizant of the load at the source and target DB instance, as well as monitor the key metrics of the AWS DMS replication instance. For more information, refer to Full-load task settings.

5. Table parallelism

In addition to achieving parallelism by loading multiple tables in parallel, AWS DMS also allows us to load a single large table using multiple parallel threads. This is particularly useful for tables with billions of records having multiple partitions and sub-partitions (Oracle source). Even if the source table isn’t partitioned we can use parallel load using column boundaries.

You need to set table-level parallelism in the table mapping JSON:

The number of full load sub-tasks is still controlled by the parameter MaxFullLoadSubTasks. Let’s say we want AWS DMS to load eight tables in parallel; however, one of these tables is very large with 10 partitions. We decide to load this table using parallel full load threads, so we need a total of seven to load the smaller tables plus 10*1 to load the bigger table, so 17 sub-tasks.

For more information about table and collection settings rules and operations, refer to Using parallel load for selected tables, views, and collections.

6. Commit rate

The commit rate parameter indicates the total number of records that can be transferred together at the target instance during a full load operation. By default, the value is set to 10,000 records. Increasing this parameter (up to 50,000) often leads to better performance for full load and helps in avoiding the ORA-01555 (Snapshot too old error messages). A greater value for the commit rate will lead to a faster data load, so it will take lesser time for source oracle instance to hold the consistent undo snapshot. However, you should be mindful of record sizes and especially if huge LOBs are involved, because they may lead to the replication instance running out of resources, leading to sub-optimal performance.

We can change this parameter in the full load task settings:

7. CDC threads

For RDBMS targets, unlike full load parallel settings, we don’t have a mechanism to employ parallel CDC threads to speed up the process. Therefore, every task always uses a single thread. If an AWS DMS replication task needs to apply millions of change events, it’s advisable to use multiple CDC tasks for a logically independent group of tables so that you can use more than one CDC threads. This is particularly recommended for Oracle OLTP systems with huge DML traffic. One additional thing to consider while using more than one AWS DMS task for CDC is the network traffic generated, which will vary based on the methodology that’s being used. If you’re using Oracle as the source and LogMiner setup, the mining of the table changes happens at the source instance itself, and AWS DMS only copies over the required events over the network pipe. However, if you’re using Binary Reader mode which is widely used, each AWS DMS task reads the complete redo/ archive log file. Therefore, using multiple tasks to speed up CDC will also multiply the traffic over the network pipe.

8. Binary reader mode

By default, the Oracle source endpoint uses the native LogMiner utility to mine through CDC changes. However, this may not be feasible for systems running multiple tasks in parallel or with high volumes of change events, because this will take a toll on the IOPS and CPU resources of the source instance. Binary Reader offloads the mining task to the replication instance instead by directly reading the database log files. In general, we should use it if redo generation rate exceeds 30 GB per hour. Another use case can be where we have multiple AWS DMS replication tasks defined on the same source instance and the redo log generation rate is more than 10 GB per hour.

To use Binary Reader to access the redo logs, we need to add the following extra connection attributes to the source endpoint:

Use the following format for the ECAs to access from an Oracle server that uses ASM with Binary Reader:

For more information about the pros and cons of Binary Reader mode, refer to Using Oracle LogMiner or AWS DMS Binary Reader for CDC.

9. Batch apply

The AWS DMS CDC process is single threaded, in which AWS DMS reads the source database transaction logs and translates the changes before passing them to the sorter component. The sorter component manages incoming changes in the commit order and sequentially forwards them to the target apply component of the AWS DMS task. Due to this sequential nature, the process if often slower for a highly transactional system leading to high target latency. If the workload involves high volume of CDC changes, updating the same set of records over and over again or inserting or updating and deleting the same records, you can improve the target apply throughput using batch apply.

During batch apply, AWS DMS processes the changes using the following steps:

AWS DMS collects the set of changes in the batch coming from the source database transaction logs. The batch timing depends on the settings for the BatchApplyMemoryLimit and BatchApplyTimeoutMin parameters.

AWS DMS creates a net changes table in replication instance memory comprising of all the changes captured during the batch interval.

AWS DMS will reduce the number of changes on the net changes table using net change algorithm depending on the nature of DML statements.

AWS creates and applies the net changes table on the target DB instance.

The changes from the net changes table flow into the specific target tables, employing SQL queries with joins.

We can turn on the batch apply setting by toggling the following parameter in the target metadata settings:

10. LOB setup

LOB columns need special treatment not needed due to the fact that AWS DMS can’t determine LOB size per row per column to be able to allocate appropriate memory on the replication instance side. AWS DMS provides full, limited and inline LOB modes to migrate LOB data.To set the appropriate configuration for LOB data migration, we need to know the max size of the LOB columns and how the LOB distribution looks. For example, our approach will vary if only 10% of records in the LOB column have 2 MB data and the remaining 90% records have less than 64 KB of data.

To find out which tables have LOB columns, use the following query:

The following screenshot shows our output:

Use the following query script (‘lob_size_deep_dive.sql’) to find the LOB data distribution for a specific column of the table. Do note that it creates a staging table to store the query output:

The script has distributed the LOB data into various buckets depending on their size range. More than 99% of records are approximately ~1.7 KB, so this can be our cutover value for InlineLOBMaxSize.

In general, we should always use the limited LOB mode after ensuring that there are no LOB column values exceeding the LobMaxSize parameter. If there are, instead of using a very high value for the parameter LobMaxSize, we should use inline or per table LOB mode.

Inline LOB mode offers the following advantages:

Automatic switch between limited LOB mode and full LOB mode

Small LOBs are transferred inline, which is efficient

Large LOBs are transferred using LOB lookup

During CDC, both small and large LOBs are transferred using LOB lookup

In the following, the parameter InlineLobMaxSize determines the LOB size at which point AWS DMS switches to reading changes using full LOB mode:

Per-table LOB mode has the following advantages:

You can override task-level LOB settings for some or all of your tables

LOB is truncated to the bulk-max-size specified for tables with full LOB mode

We need to specify the parameter bulk-max-size in the table mapping settings using the following JSON block:

The following table summarizes the various LOB modes and associated behaviors during a full load and CDC phase:

LOB MethodTask Mode

Full Load Phase

CDC Phase

Full Lob Mode

Full load and full load and CDC: Load tables without the LOB column first and require the primary key to look up and move LOB data in chunks (insert and update, two DMLs for one row).

Require primary key to lookup (SELECT and WHERE) and transfer LOB data in chunks for ongoing replication.

Limited Lob Mode

Full load: Load LOB column, treat as VARCHAR and truncate to maxlobsize (no primary key required). Use CSV files for MySQL, PostgreSQL, MariaDB, Amazon Aurora and Amazon Redshift.Full load and CDC: Require primary key (otherwise remove LOB column for both full load and CDC phase if no primary key)

Require primary key to look up and transfer LOB (truncate to maxlobsize), otherwise remove LOB column. (SELECT with WHERE from source and insert in target).

Inline Lob Mode

During a full load, AWS DMS checks all LOBs and performs an inline transfer for the LOBs that are smaller than InlineLobMaxSize. AWS DMS transfers all LOBs larger than the InlineLobMaxSize in FullLobMode.

When LOB size is more than inline LOB size, migrate to target with INSERT and UPDATE. When LOB size is less than inline LOB size, we migrate to target inline. We don’t use INSERT and UPDATE

For more information about best practices when migrating LOBs in AWS DMS, refer to Migrating large binary objects (LOBs).

CloudWatch alarms

You can proactively monitor metrics related to AWS DMS tasks and replication instances using CloudWatch alarms. You can create a CloudWatch alarm to monitor and send alerts when any metrics exceed a certain threshold. CloudWatch uses Amazon Simple Notification Service (Amazon SNS) to send notifications via email. Setting up CloudWatch alarms for AWS DMS is useful in the following use cases:

You can monitor the CPU usage and freeable memory of the replication instance while ongoing change replication is in-progress. Because ongoing replication is a memory-intensive process, excessive memory usage and swapping can lead to performance issues in the replication task and potential out-of-memory errors in the AWS DMS replication instance. You can set a CloudWatch alarm on freeable memory of the replication instance with threshold set to 80% and whenever the consumed memory goes above 80%, it sends a notification.

You can set up a CloudWatch alarm to monitor the latency of the AWS DMS task. This is particularly useful in scenarios where the AWS DMS task isn’t able to read or write the data fast enough but the task is in a running state. When the alert gets triggered we can carry out necessary troubleshooting to take care of the latency issue.

You can also set up a CloudWatch alarm for every error (‘]E:’) or warning (‘]W:’) messages in the task logs to make sure these messages aren’t ignored even when they may not cause the AWS DMS task to change states.

Let’s look at each use case in more detail.

Monitor memory of the replication instance

The following is an example of creating a CloudWatch alarm on FreeableMemory of the replication instance and sending email notifications. We first use the AWS Command Line Interface (AWS CLI) to create an SNS topic:

Then we subscribe to the topic:

Monitor task latency

You can use the following code to create a CloudWatch alarm named “DMSMemory” that is triggered when memory utilization crosses 70 % for 300 seconds for the specified replication instance. When the alarm is triggered, a notification is sent to the specified SNS topic.

Monitor for error messages

In the following example, we create a CloudWatch alarm that is triggered whenever a warning or error message appears in the DMS task logs, and sends an email notification to the email ID used to setup the SNS topic.

For this example, we use AWS DMS console to create a metric based alarm whenever AWS DMS encounters the “ORA-00001: unique constraint” violation error.

Open the AWS DMS task log and choose Create metric filter:

For Filter name, enter a name.

For Filter pattern, enter ORA-00001.

For Metric namespace, enter a custom namespace; this allows you to group similar metrics in the future.

For Metric name, enter the name of the metric (it must be unique to the namespace).

For Metric value, enter 1.

For Unit, choose Count.

Choose Create:

Alternatively, you can use the following AWS CLI command to create the metric filter. In the following example, the log group name is the name of the log group for the AWS DMS task replication instance.

The metric Filter is visible from on the AWS DMS Console.

You can also use the AWS CLI to create an alarm. In the following code, the alarm notifies users via email if the total value for “Unique Constraints Violation” exceeds 5 in a period of 5 minutes:

Demonstration

To try out some of the methods we’ve discussed so far and showcase their benefits, we’ve designed a test case with three AWS DMS tasks. All these tasks have been run using Oracle Enterprise Edition 12c on Amazon EC2 as source and Amazon RDS for Oracle Enterprise Edition 19c as target, using an AWS DMS replication instance version 3.4.6 (dms.r5.large and sized 50 GiB):

AWS DMS full load task using full LOB compared to limited LOB mode

To demonstrate the improvement seen with limited LOB mode, we use AWS DMS to load the following table with around 5GB of LOB data and 10 million records:

The full load task with full LOB mode took around ~33 minutes to complete whereas the full load task using limited LOB mode took just less than 9 minutes to load the same data. This example illustrates the efficiency and speed of the limited LOB mode, and we can correctly determine the LOB field size and distribution.

The LOB data for this table is pretty evenly distributed as can be observed using the output of the ‘lob_size_deep_dive.sql’ script-

In addition to duration, let’s look at some of the CloudWatch metrics to understand the differences. From the replication task metrics, we can observe that the task pre-allocates memory blocks to fill the LOB data during limited LOB mode. This is shown by comparing the memory consumed against the total bytes written by the task. As a result, the task uses more memory to avoid the costly LOB lookup, resulting in increased speed. Therefore, we can write the same amount of data in much less time, accounting for the steep slope of the total bytes written metrics.

The following graph shows our findings in full LOB mode.

The following graph shows our findings in limited LOB mode.

Next, moving on to the replication instances metrics for CPU and NetworkTransmitThroughput, we can see two flat tops in the following graphs.

In the following graph, the Full load task run with full LOB mode from 22:50 hrs to 23:22 hrs. In the same graph, the full load task run with limited LOB mode from 23:25 hrs to 23:34 hrs. During full LOB mode, the replication instance had to spend extra effort in performing the LOB lookups to read the LOB field data, requiring more CPU cycles and yielding less NetworkTransmitThroughput resulting in increased duration. During limited LOB mode, we can read the LOB data together with the other non-LOB table columns, avoiding the need to perform additional lookups and the associated delay, which explains the lower CPU usage and the improved NetworkTransmitThroughput performance.

You can see the impact of NetworkTransmitThroughput in the following graph.

We can observe a similar trend by looking at the WriteIOPS metrics of the target. As shown in the following graph, we achieve better performance using limited LOB mode as indicated from 23:25 hrs to 23:34 hrs in comparison to using full LOB mode from 22:50 hrs to 23:22 hrs:

AWS DMS CDC task using transactional apply compared batch apply

To demonstrate the improvement offered by batch apply mode, we used AWS DMS to load the following table with around 500,000 inserts and 2.5 million updates, resulting in a record volume of 400 MB:

As mentioned earlier, transactional apply is the default mode. In specific scenarios where we insert or update and delete the same record multiple times within a short interval, batch apply can be extremely useful in improving the target apply performance. To showcase this, we use the following PL/ SQL to perform an insert followed by five updates on the same record, and make sure to commit after every DML operation:

This caused a change generation of 500,000 insert events and 2.5 million update events, and we attempted to replicate them using a CDC-only task using the default transactional apply and then the batch apply modes.

When the tasks were complete, we checked the task’s metrics in CloudWatch to check the latency trend and the incoming changes volume. As shown in the following graphs, the CDC-only task with transactional apply took a significantly longer (3.5 hours) to replicate over the change set resulting in much higher target latency of ~198 minutes. The same work load using batch apply only took around 7 minutes to get replicated, with a latency spike of 108 seconds.

The much lower value of the CDCIncomingChanges metrics during batch apply also reflects the efficiency and speed of the batch apply process. Although we attempted this test with an ideal work load, general batch apply was found to be pretty useful when dealing with target latency issues where the update volume is significantly higher.

The following shows the metrics for the CDC-only task using transactional apply.

The following shows the metrics for the CDC-only task using batch apply.

We can observe a similar benefit if we check the CDCThroughBandwidthTarget metric of the two replication-only AWS DMS tasks. When using transactional apply, we could only send data out at a maximum of 372 KB/second, as shown in the following graph.

We could achieve a maximum of 25,000 KB/second using batch apply, as shown in the following graph.

AWS DMS parallel load using column boundaries

To realize the improvement offered by the parallel load feature on a large table, we reused the same table EVENTS_LOB from the first example with more LOB data this time, and used aa AWS DMS task for the full load using 10 column boundaries. See the following code:

We also used another AWS DMS task to load the same table without the parallel setup. Both the tasks were modified to use limited LOB mode with a commit rate of 50,000. The task with parallelism was able to complete in 8 minutes compared to the other task, which took around 22 minutes. Although the task in this case migrated only 5 GB of data with 11 parallel threads, just imagine the benefit we can achieve with table-level parallelism for DB objects containing billions of records, sized several terabytes, and using a maximum full load sub-tasks of 49 threads.

In this example, we used column boundaries for ease of understanding and because it’s widely used. However, you also have the option to use partitions and sub-partitions (with Oracle as source) as required.

Next, when looking at the CloudWatch metrics, it’s important to note that the parallel load introduces additional sessions with SELECT queries on the source database, which can be a live production environment, so we need to make sure that we don’t use all the resources. Even for this job, we can observe a slightly increased CPU usage at the source as seen in the following graph. The spike on the left is while running the parallel load, and the dip on the right is without parallelism settings.

The effect on the CPU is more pronounced on the target. In the following graph, we can observe a CPU spike reaching a max of 80% with parallel load ran between 22:55 hrs to 23:05 hrs compared to 20% without the parallel settings ran between 23:11 hrs to 23:16 hrs. As expected, we ended up writing data faster on the target instance, accounting for the increased WriteIOPS as well.

The bottom line is that if the source and target allow for it; you should always aim for parallel load of bigger tables for better performance.

Conclusion

In this post, we discussed AWS DMS and ways to use different CloudWatch metrics at the replication instance and task level along with the debugging utilities to aid with troubleshooting. We also covered some of the performance enhancers that can improve replication performance, and how to set up CloudWatch alarms to alert us on a specific error or warning message. Lastly, we included a few AWS DMS task demos to show case some of the improvements that we discussed.

Use CloudWatch metrics to make more informed decisions about performance tuning your AWS DMS environment.

We welcome your feedback; leave your comments and questions in the comments section.

About the Authors

Arnab Saha is a Senior Database Specialist Solutions Architect at AWS. Arnab specializes in Amazon RDS, Amazon Aurora and Amazon Elastic Block Store. He provides guidance and technical assistance to customers thus enabling them to build scalable, highly available and secure solutions in AWS Cloud.

Manojit Saha Sardar is a Cloud Support DBA with Amazon Web Services and Subject Matter Expert for AWS Database Migration and RDS for PostgreSQL services. He has over a decade of experience in working with relational databases. At AWS, he works with the Customers to handle a variety of migration scenarios and assist them in troubleshooting issues related to RDS for Oracle as well as RDS for PostgreSQL.

Read MoreAWS Database Blog