As Artificial Intelligence and Machine Learning (AI/ML) models grow larger, training and inference applications demand accelerated compute such as NVIDIA GPUs. Google Kubernetes Engine (GKE) is a fully managed Kubernetes service that simplifies container orchestration, and has become the platform of choice to deploy, scale, and manage custom ML platforms. GKE can now automatically install NVIDIA GPU drivers, making it easier for customers to take advantage of GPUs.

Previously, using GPUs with GKE required manually installing the GPU drivers by applying a DaemonSet. The manual approach gives customers more explicit control over their environment, but for other customers it felt like unnecessary friction that delayed the deployment workflow.

Going forward, GKE can automatically install GPU drivers on behalf of the customer. With the GA launch of GKE’s automated GPU driver installation, using GPUs is now even easier.

“Automatic driver installation has been a great quality-of-life feature to simplify adding GPUs to GKE node pools. We use it on all of our AI workloads” – Barak Peleg, VP, AI21

Additionally, offloading the management of GPU driver installation to Google allows the drivers to be precompiled for the GKE node, which can reduce the time it takes for GPU nodes to start up.

Setting up GPU driver installation

To take advantage of automated GPU driver installation when creating GKE node pools, set the DRIVER_VERSION option to one of the following values:

default: Install the default driver version for the GKE version.

latest: Install the latest available driver version for the GKE version. Available only for nodes that use Container-Optimized OS.

If you’d prefer to install your drivers manually, set DRIVER_VERSION to disabled, which skips automatic driver installation. Currently, if you do not specify a value, the default behavior is the manual approach.

Here’s how to enable GPU driver installation via gcloud:

```
gcloud container node-pools create POOL_NAME \
  --accelerator type=nvidia-tesla-a100,count=8,gpu-driver-version=latest \
  --region COMPUTE_REGION \
  --cluster CLUSTER_NAME
```
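The DRIVER_VERSION values described above (default, latest, and disabled) all go into the same `--accelerator` value. As a minimal sketch, the helper below assembles that value and rejects anything other than those three values. The function name `accelerator_flag` is hypothetical and not part of gcloud, and falling back to disabled when no version is given is an assumption that mirrors the current manual default described in this post. The final command is only printed, not executed.

```shell
# Hypothetical helper (not part of gcloud): builds the value passed to
# --accelerator from a GPU type, a GPU count, and a driver version.
accelerator_flag() {
  # Assumption: fall back to "disabled" to mirror the current manual default.
  driver_version="${3:-disabled}"
  case "$driver_version" in
    default|latest|disabled) ;;
    *)
      echo "unsupported gpu-driver-version: $driver_version" >&2
      return 1
      ;;
  esac
  # No spaces around the commas: the whole value must be one shell argument.
  printf 'type=%s,count=%s,gpu-driver-version=%s\n' "$1" "$2" "$driver_version"
}

# Dry run: print the command instead of running it.
# POOL_NAME, COMPUTE_REGION, and CLUSTER_NAME are placeholders.
echo gcloud container node-pools create POOL_NAME \
  --accelerator "$(accelerator_flag nvidia-tesla-a100 8 default)" \
  --region COMPUTE_REGION \
  --cluster CLUSTER_NAME
```

Removing the leading `echo` and filling in the placeholders would run the command for real. Swapping `default` for `disabled` gives a node pool where drivers are still installed manually.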

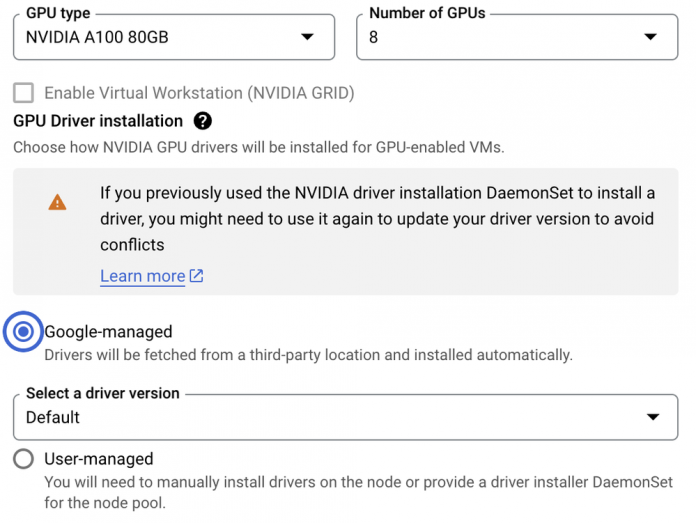

You can also enable it through the GKE console UI. In the node pool machine configuration, select GPUs. You will then be given the option for ‘Google-managed” or ‘User-managed’, as shown below.

If you select the ‘Google-managed’ option, the necessary GPU drivers will be automatically installed when the node pool is created. This eliminates the need for the additional manual step. For the time being, GPU driver installation defaults to ‘User-managed’. In the future, GKE will shift the default selection to ‘Google-managed’ once most users become accustomed to the new approach.

To learn more about using GPUs on GKE, please visit our documentation.
