Do you want to copy or archive your Amazon Relational Database Service (Amazon RDS) for PostgreSQL or Amazon Aurora PostgreSQL-Compatible Edition logs directly to Amazon Simple Storage Service (Amazon S3)? Does your organization have the regulatory requirements to audit all the DDL or DML activity against your RDS for PostgreSQL database?

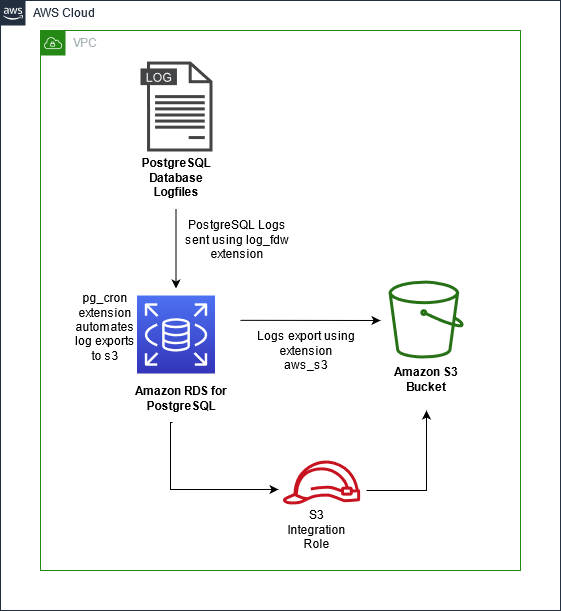

With the addition of the pg_cron extension for Amazon RDS for PostgreSQL (version 12.5 and higher) and for Amazon Aurora for PostgreSQL (version 12.6+), you can now automate exports of PostgreSQL engine logs directly to Amazon S3 by using the PostgreSQL extensions log_fdw, aws_s3, and pg_cron. With these extensions, you can copy and archive the PostgreSQL database logs directly over to Amazon S3.

In this post, we show you how you can use the PostgreSQL extensions and automate the export of PostgreSQL logs directly to Amazon S3. We also show you an example of how you can filter the engine logs and export only the DML statements to Amazon S3.

Prerequisites

For this post, we use an Amazon RDS for PostgreSQL instance. Before you get started, make sure you complete the following prerequisites.

Create or have access to an AWS account

Install and configure the AWS Command Line Interface (AWS CLI)

Create an RDS for PostgreSQL instance (version 12.5 or higher). For instructions on provisioning an instance, see Create and Connect to a PostgreSQL Database with Amazon RDS

Launch an Amazon Elastic Compute Cloud (Amazon EC2) instance to access the database instance with psql client installed and configured

Create an S3 bucket where we store the logs exported from the database

Create the required AWS Identity and Access Management(IAM) roles and policies and attach the role to your RDS for PostgreSQL instance to provide access to the S3 bucket

Create the following PostgreSQL extensions in your RDS for PostgreSQL database

pg_cron – To enable the pg_cron extension, modify the database parameter group and add value pg_cron to the shared_preload_libraries parameter. Restart the database

log_fdw – We use the log_fdw extension to load all the available RDS for PostgreSQL or Aurora PostgreSQL DB log files as a table

aws_s3 – With the aws_s3 extension, you can query data from your RDS for PostgreSQL DB instance and export it directly into files stored in an S3 bucket. This requires you to create an S3 bucket and IAM role, and attach the role to the DB instance

Solution overview

To start, we first create the necessary resources.

Create custom parameter groups and allow extension support

Create an IAM role and policy, and attach the role to your RDS for PostgreSQL instance

Then we’re ready to implement the solution.

We use the log_fdw extension to import the PostgreSQL logs into our RDS for PostgreSQL database. These logs are stored in a table

Next, we export these logs to an S3 bucket using the extension aws_s3

To automate the whole process, we use the pg_cron extension and schedule a job that sends these logs to Amazon S3 periodically

The following diagram illustrates the solution architecture.

Let’s look into the details of each step of this solution.

Create custom parameter groups and allow extension support

Create and associate a parameter group for your RDS for PostgreSQL instance (log-stream-demo-instance in this post) using the AWS CLI with the following code.

Modify the parameter group to support pg_cron and log_fdw.

Restart the database to allow the parameter group changes to take place.

Create an IAM role and policy and attach the role to your RDS for PostgreSQL instance

Create a bucket and appropriate IAM roles for aws_s3 setup, allowing Amazon RDS to access Amazon S3.

Set up a bucket for exporting logs.

Create an IAM policy with the least-restricted privilege to the resources in the following code and name it postgres-s3-access-policy. The policy must have access to the Amazon S3 bucket where the files are stored (for this post, log-export-bucket).

Create an IAM role named postgres-s3-export-role and modify the trust relationship.

AssumeRole allows Amazon RDS to access other AWS services on your behalf.

Associate the IAM roles to the DB instance and cluster.

Import PostgreSQL logs into the table using extension log_fdw

To use the log_fdw functions, we must first create the extension on the database instance. Connect to the database using psql and run the following command.

With the extension loaded, we can create a function that loads all the available PostgreSQL DB log files as a table within the database. The definition of the function is available on GitHub.

With the function created, we can run the function to load the PostgreSQL logs into the database. Each time we run the following command, the logs.postgres_logs table is updated with the most recent engine logs.

We can view the logs through a SELECT command on the logs.postgres_logs table. By narrowing down our SELECT command, we can tailor our query to a specific use case.

In the following example, we’re looking only at error messages from the engine logs that occurred within the last hour.

Export PostgreSQL logs from table into Amazon S3 using aws_s3

Now that we have a function to query for new log statements, we use aws_s3 to export the retrieved logs to Amazon S3. From the prerequisites, we should already have an S3 bucket created and we should have attached an IAM role to the DB instance that allows for writing to your S3 bucket.

Create the aws_s3 extension with the following code:

Run the query export to Amazon S3 function:

Note: This query uses dollar quoting to handle nested quotation marks. The dollar quotes consist of a dollar sign ($), a numbered quote Q1, Q2, and another dollar sign, surrounding the string they are quoting. In this example, those quoted strings are “%(INSERT|UPDATE|SELECT|DELETE)%” and “1”.

As of this writing, cross-Region exports are blocked.

Automate the log exports using extension pg_cron

Now that we have the steps to perform log uploads to Amazon S3 using the log_fdw and aws_s3 extensions, we can automate these steps using pg_cron. With pg_cron, we can write database queries to be run on a schedule of our choosing.

As part of the prerequisites, you should have pg_cron added to the shared_preload_libraries parameter in your database instance’s parameter group. After pg_cron is loaded into shared_preload_libraries, you can simply run the following command to create the extension:

With pg_cron created, we can use the extension to perform the PostgreSQL log uploads on a cron defined schedule. To do this, we need to schedule a cron job, passing in a name, schedule, and the log export query we want to run. For example, to schedule log uploads every hour with the same query described earlier, we can run the following command:

After we schedule the uploads, we can query the cron.job table to see our scheduled cron job along with its info:

We can also view the run history from each run of this cron job by querying the pg_cron audit table. This provides information on the run time of each upload, whether it succeeded or failed, and more. See the following code:

If you decide at any time that you want to cancel these automated log uploads, you can unschedule the associated cron job by passing in the job name specified previously. In the following example, the job name is postgres-s3-log-uploads:

For more information about scheduling jobs with pg_cron, see Scheduling jobs with pg_cron on your Amazon RDS for PostgreSQL or Amazon Aurora for PostgreSQL databases.

Common errors and troubleshooting

You may get the following error when exporting the database logs from Amazon RDS to an S3 bucket:

The message usually occurs when the outbound rules are missing in the security group attached to your RDS instance. When you call the aws_s3.query_export_to_s3 function, Amazon RDS makes the PutObject HTTPS API call to upload the file to S3 bucket. Make sure that the policy attached to the role provides access to the S3 bucket.

You also get an error if you create an extension but the particular Amazon RDS for PostgreSQL version doesn’t support the extension. For example, the extension pg_cron is supported starting Amazon RDS for PostgreSQL version 12.5. If you’re using a version lower than 12.5, an error occurs. For more information about supported extensions, see PostgreSQL extensions supported on Amazon RDS.

Best practices

We recommend setting the parameter log_destination to ‘csvlog‘ in your parameter group. With this, the log_fdw extension creates tables that contains log statements with columns, which makes it easier for the users to query the data.

Exporting PostgreSQL logs to Amazon S3 using extensions is only recommended for low volume exports. Exporting a huge amount of data out from Amazon RDS for PostgreSQL to Amazon S3 is an intensive operation and may impact other transactions running on the cluster. For larger log transferring and monitoring of logs, we recommend using Amazon CloudWatch. CloudWatch provides various metrics, alarms, and tools that simplify the process of monitoring your database. CloudWatch also provides the option of exporting to Amazon S3 if this specific functionality is also desired.

There are various ways for improving upload speed that can vary depending on your workload. For example, if you want to upload error log statements and DDL statements, running parallel exports may improve your upload speed. For more information about best practices when exporting to Amazon S3, refer to Export and import data from Amazon S3 to Amazon Aurora PostgreSQL.

The audit table cron.job_run_details stores the historical runtime information of the scheduled cron jobs. This table can grow in size very quickly. As a best practice, you can schedule a job to clean up the older records from the table periodically.

Summary

In this post, we showed how you can use the PostgreSQL extensions log_fdw, aws_s3, and pg_cron to automate the export of PostgreSQL logs to Amazon S3. This is a great use case for storing historical audit-related data. You can also use various visualization tools on the data stored on Amazon S3 to perform analytics and build dashboards to see the trends.

If you have any questions or suggestions on this post, leave us a comment.

About the Authors

Bilva Sanaba is a Software Development Engineer with AWS RDS PostgreSQL team. He has worked on designing and developing various customer facing features such aws_s3.

Zachary Bessette is a Software Development Engineer with AWS RDS PostgreSQL team. He has worked on designing and developing various customer facing features such as pg_cron.

Sukhpreet Kaur Bedi is a Database Specialist Solutions Architect with AWS focusing on Amazon RDS/Aurora PostgreSQL engines. She helps customers innovate on the AWS platform by building highly available, scalable, and secure database architectures.

Read MoreAWS Database Blog