Modernizing database workloads and architecture is the optimal way to increase efficiencies and reduce licensing and infrastructure costs. To achieve this, you can use an Amazon Aurora relational database to break free from legacy database solutions. Several large-scale companies are migrating Oracle databases to Amazon Relational Database Service (Amazon RDS) for PostgreSQL and Amazon Aurora PostgreSQL-Compatible edition databases when moving to the AWS Cloud.

When migrating from Oracle to Aurora PostgreSQL or Amazon RDS for PostgreSQL, one of the key challenges is to implement interval partitioning, which is natively available in Oracle, but not in PostgreSQL. Interval partitioning allows a database to automatically create a new partition when newly inserted data exceeds the range of an existing partition. It’s an extension to range partitioning to handle situations where the specific range is continuous but range partitions need to be created when required.

In this post, we demonstrate how to configure interval partitioning in an Aurora PostgreSQL database using PostgreSQL extensions such as pg_partman and pg_cron, monitor the scheduled jobs using AWS Lambda, and alert on failures using Amazon Simple Notification Service (Amazon SNS).

For additional information about partitioned tables in PostgreSQL, refer to the following posts:

Designing high-performance time series data tables on Amazon RDS for PostgreSQL

Speed up time series data ingestion by partitioning tables on Amazon RDS for PostgreSQL

Improve performance and manageability of large PostgreSQL tables by migrating to partitioned tables on Amazon Aurora and Amazon RDS

Solution overview

We demonstrate this automation by creating a scheduled job to configure daily, monthly, quarterly, and yearly partitions and make sure we always have partitions in advance. This way, if a record is inserted for a future date, it’s inserted in its appropriate partition. Additionally, we showcase the monitoring of PostgreSQL partition maintenance job using Lambda and scheduling it using Amazon EventBridge. Here EventBridge is used to centralize the monitoring of multiple PostgreSQL databases. When EventBridge triggers the Lambda function, it passes a json parameter which is a path in the parameter store containing PostgreSQL database details. The json parameter can be one or multiple comma separated key-value pairs, with each key-value pair for a separate PostgreSQL database. The Lambda function uses this key-value pair to login to PostgreSQL database and to monitor the partition maintenance job status.

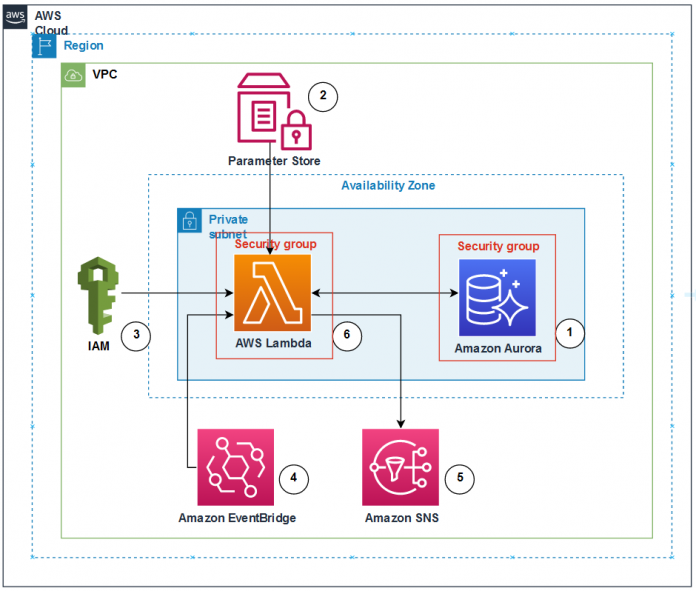

The following diagram illustrates the architecture used in this post.

The architecture consists of the following components:

An Aurora PostgreSQL database with pg_cron and pg_partman extensions. We use pg_cron to schedule the pg_partman function that performs the partition maintenance operations of adding new partitions.

We use an EventBridge rule to schedule a Lambda function that monitors the status of partition maintenance job that is scheduled using pg_cron. It passes a path in AWS Systems Parameter Store, which contains additional configuration used by the Lambda function.

We use AWS Identity and Access Management (IAM) to provide necessary permission to Lambda so that it can access different AWS services.

We use Amazon SNS to send alerts when failures occur in the pg_cron job. The Lambda function sends notification to the SNS topic that is configured in Parameter Store.

Parameter Store contains information about the database to verify the pg_cron job status.

Lambda is triggered by an EventBridge rule that runs on a schedule. It uses the input passed by EventBridge to identify the parameters defined in Parameter Store. Lambda connects to the database using these parameters and temporary token generated using generate_db_auth_token api, since the Aurora PostgreSQL database is configured for IAM database authentication. After connecting to the database, the Lambda function verifies the cron.job_run_details table for any job failures and sends notifications to Amazon SNS when failures found.

Prerequisites

To implement this solution, you must have an Aurora PostgreSQL instance with latest minor version available for 12 and above or a RDS for PostgreSQL instance with latest minor version available for 12 and above configured with IAM database authentication inside a VPC.

In the following sections, we walk you through the steps to set up:

pg_partman for partition maintenance operations

pg_cron to schedule the partition maintenance job

A database user to connect using IAM database authentication

Deploy below resources using AWS Serverless Application Model (AWS SAM):

IAM role

Parameter Store parameters

Lambda function

Interface VPC endpoints and security group

EventBridge rule

Amazon SNS topic

Set up pg_partman

In our example, we will use demodb as the database where we will create the partitioned tables. To set up pg_partman, complete the following steps:

Log in to database demodb that contains partitioned tables and install the pg_partman extension:

To test the automation using pg_cron, pg_partman maintenance, and the Lambda function to monitor the cron job status, create the sample schema data_mart and the partitioned tables data_mart.events_daily, data_mart.events_monthly, data_mart.events_quarterly, data_mart.events_yearly, and data_mart.events_range in the demodb database:

Set up the partition table configuration by calling the partman.create_parent function in the demodb database. The following commands create partitions for the data_mart.events_daily, data_mart.events_monthly, data_mart.events_quarterly, data_mart.events_yearly, and data_mart.events_range tables:

Verify that the partitions have been created. For example, the following query lists the partitions created for the table data_mart.events_yearly in the demodb database:

Configure the partman.part_config table to automatically create new partitions for time partitioned tables when a partition maintenance job is run:

Run the following query in demodb to verify the details of the partition tables, configured in the partman.part_config table:

Run the partman.run_maintenance_proc procedure in the demodb database to create future partitions, based on the configuration settings in the table partman.part_config:

Set up pg_cron

The pg_cron extension is created in the default database postgres. Run the following steps to complete the setup.

Modify the custom DB parameter group of the Aurora PostgreSQL instance by adding pg_cron to the parameter shared_preload_libraries, then restart the cluster shared_preload_libraries=pg_cron.

Log in to the postgres database in Aurora cluster and create the extension:

Configure a job to run the partman.run_maintenance_proc() procedure at regular interval, which ensures that the partitions are created in advance in demodb database. To do so, log in to the postgres database in the Aurora cluster and run the following commands (note that the job is scheduled in UTC, irrespective of database time zone settings. The documentation of pg_cron provides additional details on cron syntax). In the example below, run_maintenance_proc job is scheduled to run at 1:00 AM every day:

To verify the details of the job, run the following commands in the postgres database:

Set up a database user

Because the Aurora PostgreSQL cluster is configured for IAM database authentication, Lambda can use this feature to connect to the database. To set up a database user, complete the following steps:

Log in to the postgres database in the Aurora cluster and create the database user (for example, rds_iamuser) :

Row-level security is implemented on the cron.job_run_details table, which stores the run details of the cron job. Therefore, we have to create a function with SECURITY DEFINER that only returns the details of failed job.

Run the following statements in the postgres database:

After the function has been created, grant access to rds_iamuser to execute the function public.get_job_run_details

AWS SAM

In this section, we describe the AWS services that are deployed using the AWS SAM. To deploy the AWS services mentioned in this post, choose an Amazon Elastic Compute Cloud (Amazon EC2) host or bastion server with an IAM role attached with permissions to create an IAM role, the parameter store, Lambda functions, interface VPC endpoints, security groups, the EventBridge rule, and SNS topic.

Log in to the Amazon EC2 host and install the AWS SAM CLI.

Download the source code and deploy by running the following command:

Provide the following parameters:

Stack Name – Name for the AWS CloudFormation stack

AWS Region – AWS Region where the stack is being deployed

pNotificationEmail – Email ID that is configured to receive alerts

pVpc – VPC ID where the Lambda function is deployed

pPrivateSubnet1 – Private subnet 1 where the Lambda function is deployed

pPrivateSubnet2 – Private subnet 2 where the Lambda function is deployed

pApgClusterName – PostgreSQL cluster where the partition maintenance job is running

pCronHist – Cron history in minutes; provide a value greater than or equal to 2

pDbHost – PostgreSQL instance endpoint

pDbPort – PostgreSQL instance port

The following is the sample output when you run sam deploy –guided with the default setting arguments:

In the following sections, we describe the AWS services deployed with AWS SAM.

IAM role

AWS SAM creates an IAM policy with the permissions detailed in the following code. It also attaches an AWS managed IAM policy called AWSLambdaVPCAccessExecutionRole to the IAM role. This is required to create Lambda functions within the VPC.

Parameter Store parameters

The Lambda function requires few parameters to connect to the database and fetch job history details from the table. The values for these parameters are prompted by AWS SAM during deployment.

The parameters follow the hierarchy /check_cron_job_status/<database name>/<parameter name>. For example, if check_cron_job_status is the Lambda function name and demopg is the database name, the parameters for the database are defined as follows:

/check_cron_job_status/demopg/cron_hist_in_minutes – This parameter controls how far in history should we query the table cron.job_run_details, which contains the job run log. This parameter is also used to schedule the Lambda function.

/check_cron_job_status/demopg/db_host:demopg.cluster – Set to <abc123abc>.<region>.amazonaws.com. This parameter provides the Aurora PostgreSQL database writer endpoint.

/check_cron_job_status/demopg/db_port – Set to 5432. This is the port for the Aurora PostgreSQL database.

Lambda function

AWS SAM also deploys the Lambda function. It uses Python 3.8 and is deployed in the same VPC as the PostgreSQL database. Make sure that the security group attached to the PostgreSQL cluster allows connectivity from Lambda. The handler calls main.check_cron_job_status, which connects to the PostgreSQL database to verify the partition maintenance job status that is scheduled using pg_cron.

When failures occur in the partition maintenance job, the function sends notifications like in the following screenshot.

Interface VPC endpoints and security group

AWS SAM creates Amazon SNS and Parameter Store interface VPC endpoints. This is required so that Lambda can access the parameters defined for the database in Parameter Store and send notifications to the email ID configured in the SNS topic.

It also creates a self-referencing security group that is attached to Amazon SNS, Systems Manager interface endpoints, and Lambda, thereby allowing connections between them.

EventBridge rule

AWS SAM uses the input value passed for cron history to create an EventBridge rule that runs the Lambda function on schedule. When the Lambda function is triggered, the EventBridge rule passes a JSON parameter that identifies the path in Parameter Store. For example, the JSON parameter for a database cluster called demopg looks like the following code:

If the Lambda function has to be configured to monitor the cron job status of multiple database clusters, we can provide a comma-separated list of key-value pairs like the following code (after configuring the Parameter Store for the database) in the EventBridge rule, or set up a separate EventBridge rule for each database cluster:

Amazon SNS topic

AWS SAM configures an SNS topic with the email ID entered. Amazon SNS sends an email to the ID for verification. Choosing Confirm subscription completes the process and the email ID starts receiving notifications whenever Lambda sends one.

After the email ID is confirmed, the SNS topic looks like the following screenshot.

Troubleshooting

If for any reason records are inserted into a table (for example, the data_mart.events_daily table) that don’t contain a matching partition, such records go to the DEFAULT partition and the partition maintenance job (partman.run_maintenance_proc()) fails.

To verify the records in the default partition, run the following command:

To fix the issue immediately, run the following command to create and move the records to their respective partitions:

After the data movement is successful, future runs of the partition maintenance job (partman.run_maintenance_proc()) should occur without issues.

To fix the issue during the next maintenance window, exclude the problematic table from the partition maintenance job using the following commands:

Limitations

Currently, Lambda can identify failures when the partition maintenance job scheduled using pg_cron in Aurora PostgreSQL fails. If pg_cron is scheduled infrequently, then there is a possibility that data might get inserted into the default partition until the partition maintenance job runs and causes a failure. Also, the limitation of pg_partman, if any, will apply.

Test the solution

To test the solution, use the provided CloudFormation template, which deploys a VPC, EC2 instance, and Aurora PostgreSQL database with the extension and tables provided in this post. It also creates and attaches an IAM role that grants permission to the EC2 instance to create an IAM role, parameter store, Lambda function, interface VPC endpoints, security group, EventBridge rule, and SNS topic. As a security best practice, follow the principle of least privilege when granting permissions to the IAM role.

For full instructions on deploying the template, refer to Creating a stack on the AWS CloudFormation console.

Upload the source code to an Amazon Simple Storage Service (Amazon S3) bucket in the AWS account by downloading the source code from the GitHub repo.

On the AWS CloudFormation console, deploy the template vpc_ec2_db.yml (this file is within the .zip file downloaded from GitHub).

Modify the following parameters:

pEC2KeyPair – The EC2 Key pair in the AWS account.

pEc2InstanceProfile – The EC2 instance profile that has permission to create AWS services deployed by the CloudFormation template.

pS3CodeLocation – The path of the source code .zip file that is uploaded to the S3 bucket.

pSourceIp – The IP subnet of the system or laptop that is used to connect to Amazon EC2.

After you deploy the template, log in to Amazon EC2 using the key pair and run the following command to deploy the Lambda function:

Clean up

To clean up the resources used in this post, complete the following steps:

Delete the CloudFormation stack:

Confirm the stack has been deleted:

If you created the VPC, EC2 instance, and Aurora PostgreSQL database with the template provided in the previous section, delete that CloudFormation stack too:

Conclusion

In this post, we demonstrated how to implement interval partitioning when migrating Oracle databases to Amazon RDS for PostgreSQL and Aurora PostgreSQL. We showed the process to automate the creation of interval partitioning using pg_cron and pg_partman extensions. We also discussed the setup of event monitoring and notifications using Lambda and Amazon SNS. We recommend testing this complete solution in lower environments prior to production deployment.

Leave any thoughts or questions in the comments section.

About the Authors

Jeevan Shetty is a Consultant with the AWS Professional Services Team. He has been supporting and enabling customers to migrate their database from OnPrem data center to AWS cloud and also in migration from commercial database engines to open source database in Amazon.

Suratwala, Mansi is a Database Consultant working at Amazon Web Services. She closely works with companies from different domains to provide scalable and secure database solutions in AWS cloud. She is passionate to collaborate with customers to achieve their cloud adoption goals.

Santhosh Kumar Adapa is a Oracle Database Cloud Architect at Amazon Web Services. He works with internal Amazon customers to build secure, scalable and resilient architectures in AWS cloud and help customers perform migrations from on-premise databases to AWS RDS and Aurora Databases.

Bhanu Ganesh Gudivada is a Database Consultant with the Professional Services team at AWS based out of Hyderabad, India and specializes in database migrations. He is helping and enabling customers to build high-available, cost-effective database solutions and migrate their commercial engines to AWS cloud. Curious to learn and implement new technologies around the databases and orchestrate migrations through automation.

Read MoreAWS Database Blog