Amazon Quantum Ledger Database (Amazon QLDB) is a fully managed ledger database that provides a transparent, immutable, and cryptographically verifiable transaction log. You can use Amazon QLDB to track each application data change, and it maintains a complete and verifiable history of changes over time. Because of these key features, banking customers have adopted Amazon QLDB as their database of choice to store attestation records for preventive auditing and scanning results for release information during the application deployment cycle. This provides an immutable and auditable history record of every preventive attestation throughout application lifecycles. However, because Amazon QLDB does not yet support an end-to-end backup and restore solution, we need to explore other approaches to improve data resiliency.

This post shows how to use the AWS Cloud Development Kit (AWS CDK) to set up Amazon QLDB, populate the Amazon QLDB data using AWS Lambda functions, and set up Amazon QLDB streaming to provide data resiliency for the data stored in the Amazon QLDB ledger. We also extend the architecture to replay the data into another Amazon QLDB ledger to provide extra data resiliency.

Prerequisites

To go through the deployment steps, you need the following:

An AWS Cloud9 instance with AWS CDK installed.

An AWS account

The AWS services used in this solution are all serverless services, so the solution is cost-effective. We estimate the AWS cost incurred for finishing the hands-on steps in this post is less than $0.10.

Solution overview

You can choose from two approaches to provide Amazon QLDB data resiliency:

Export Amazon QLDB journal data to an Amazon Simple Storage Service (Amazon S3) bucket as batch tasks at intervals

Stream Amazon QLDB journal data out to an Amazon Kinesis Data Streams instance, from where the journal data can be replicated to multiple destinations, such as an S3 bucket or another Amazon QLDB ledger.

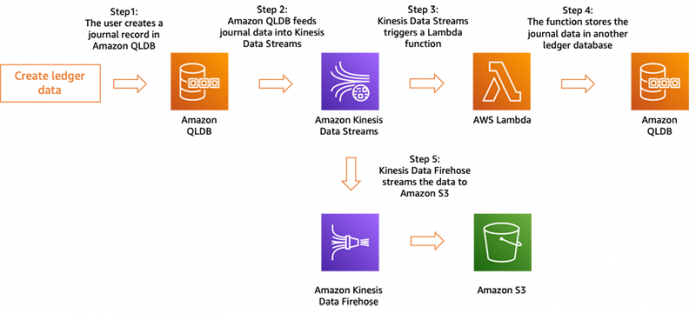

In this post, we elaborate on the latter approach. You can also use this streaming fan-out architecture for data resiliency to create read replicas for analytical queries. Another benefit of using a streaming option instead of exporting to Amazon S3 is that it provides near-real-time data replication instead of relying on batch processing. The following diagram represents the overall architecture.

Data replicated to another Amazon QLDB ledger has the same contents as the source. However, the replicated documents receive new cryptographic hashes and new Amazon QLDB metadata, such as document IDs and transaction times, so the destination ledger is not an exact copy of the source ledger.

This solution uses the AWS CDK in TypeScript to set up the Amazon QLDB database and the streaming of the journal data. It also provisions the other components in the architecture that send the journal stream to the destination Amazon QLDB ledger and the S3 bucket. This keeps the data in sync and mitigates the current limitation that Amazon QLDB doesn't support typical database backup and restore.

The AWS CDK TypeScript code sets up two AWS CloudFormation stacks to implement the architecture:

QldbBlogDbStack – Creates Amazon QLDB ledgers:

QldbBlog – The source ledger

QldbBlogStreaming – The destination ledger

QldbBlogStreamStack – Creates the following resources to enable streaming from one ledger database to the other:

A Kinesis data stream instance with AWS Key Management Service (AWS KMS) customer master key (CMK) encryption. Note that the data stream's shard count is set to 1 to guarantee record ordering, so that the journal data can be replayed to the destination ledger in exactly the same order.

An Amazon QLDB stream instance that feeds all journal changes of the QldbBlog ledger to the Kinesis data stream. The Amazon QLDB stream's inclusiveStartTime is set to 2020-01-01 so that all data in the source ledger is streamed from the very beginning. Because this date precedes the ledger's creation, QLDB effectively uses the ledger's creation time as the start time.

An Amazon Kinesis Data Firehose delivery stream connected to the Kinesis data stream, which stores all Amazon QLDB journal change records to an S3 bucket.
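The QldbBlogStreamStack resources listed above roughly correspond to the following CloudFormation resources. This is a hedged sketch, not the repo's code: it's written as a plain TypeScript object instead of CDK constructs so that it runs without dependencies, and the logical IDs (JournalKinesisStream, JournalQldbStream, JournalArchive), the StreamingConfig fields, and AggregationEnabled: true are assumptions. The IAM roles, KMS key, and bucket are taken as inputs rather than defined.

```typescript
// Hypothetical inputs; in the real stack these come from CDK constructs.
interface StreamingConfig {
  ledgerName: string;        // e.g. "QldbBlog"
  qldbStreamName: string;
  kinesisStreamName: string;
  kmsKeyArn: string;
  qldbStreamRoleArn: string; // role QLDB assumes to write to Kinesis
  firehoseRoleArn: string;   // role Firehose assumes to read Kinesis and write to S3
  bucketArn: string;
}

interface Resource {
  Type: string;
  Properties: Record<string, unknown>;
}

function streamingResources(cfg: StreamingConfig): Record<string, Resource> {
  // Resolves to the Kinesis stream ARN at deploy time.
  const kinesisArn = { "Fn::GetAtt": ["JournalKinesisStream", "Arn"] };
  return {
    JournalKinesisStream: {
      Type: "AWS::Kinesis::Stream",
      Properties: {
        Name: cfg.kinesisStreamName,
        // Kinesis orders records only within a shard, so a single shard keeps
        // the whole stream in the order QLDB published it.
        ShardCount: 1,
        StreamEncryption: { EncryptionType: "KMS", KeyId: cfg.kmsKeyArn },
      },
    },
    JournalQldbStream: {
      Type: "AWS::QLDB::Stream",
      Properties: {
        LedgerName: cfg.ledgerName,
        StreamName: cfg.qldbStreamName,
        RoleArn: cfg.qldbStreamRoleArn,
        // Earlier than the ledger's creation, so streaming starts at the first block.
        InclusiveStartTime: "2020-01-01T00:00:00Z",
        KinesisConfiguration: { StreamArn: kinesisArn, AggregationEnabled: true },
      },
    },
    JournalArchive: {
      Type: "AWS::KinesisFirehose::DeliveryStream",
      Properties: {
        DeliveryStreamType: "KinesisStreamAsSource",
        KinesisStreamSourceConfiguration: { KinesisStreamARN: kinesisArn, RoleARN: cfg.firehoseRoleArn },
        S3DestinationConfiguration: { BucketARN: cfg.bucketArn, RoleARN: cfg.firehoseRoleArn },
      },
    },
  };
}
```

In a CDK app, the corresponding L1 constructs are CfnStream (aws-qldb) and CfnDeliveryStream (aws-kinesisfirehose). If aggregation is enabled, the consuming Lambda function has to deaggregate the KPL-aggregated Kinesis records before it can parse the individual QLDB records.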

Set up the AWS CDK environment and clone the repo

Set up your AWS CDK environment in an AWS Cloud9 environment. For instructions, see Getting started with the AWS CDK.

When the AWS CDK is set up, clone the GitHub repo.

Set up Amazon QLDB streaming

To deploy the AWS CDK app, complete the following steps:

Compile the code of the Lambda function required by the AWS CDK custom resource, which is used to create tables in the source ledger.

Compile the code of the Lambda function that extracts PartiQL statements from data stream events and runs them against the destination ledger.

Compile the AWS CDK code.

Run the following command to deploy QldbBlogDbStack, which provisions two ledgers (source and destination) and creates the initial tables in the source ledger.

Run the following command to deploy QldbBlogStreamStack, which sets up journal record streaming on the source ledger, the Lambda function that replays journal records to the destination ledger, and the Kinesis Data Firehose instance that replicates journal records to the S3 bucket.
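The core of the replay Lambda function is picking the PartiQL text out of the stream. The following is a minimal sketch of that extraction step, not the repo's implementation. It assumes the Kinesis records have already been base64-decoded, deaggregated, and parsed from Amazon Ion into plain objects, and it models only the record fields it reads (recordType and the statements inside a BLOCK_SUMMARY payload's transactionInfo).

```typescript
// Assumed shapes of QLDB stream records after Ion parsing (only the fields used here).
interface StatementInfo {
  statement: string;        // PartiQL text as it ran on the source ledger
  startTime: string;        // ISO-8601 timestamp
  statementDigest?: string;
}

interface BlockSummaryPayload {
  blockAddress: { strandId: string; sequenceNo: number };
  transactionId: string;
  transactionInfo?: { statements?: StatementInfo[] };
}

interface QldbStreamRecord {
  qldbStreamArn: string;
  recordType: "CONTROL" | "BLOCK_SUMMARY" | "REVISION_DETAILS";
  payload: unknown;
}

// Returns the PartiQL statements of every BLOCK_SUMMARY record, in input order.
function extractStatements(records: QldbStreamRecord[]): StatementInfo[] {
  const out: StatementInfo[] = [];
  for (const record of records) {
    // CONTROL and REVISION_DETAILS records carry no PartiQL text.
    if (record.recordType !== "BLOCK_SUMMARY") continue;
    const payload = record.payload as BlockSummaryPayload;
    for (const s of payload.transactionInfo?.statements ?? []) {
      out.push(s);
    }
  }
  return out;
}
```

A handler would then run each extracted statement against the destination ledger, for example inside a transaction opened with the QLDB driver.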

When the deployment steps are complete, the AWS CDK custom resource has created four tables in the source ledger QldbBlog, and the destination ledger QldbBlogStreaming has the same four tables, which are replicated by the PartiQL replay Lambda function.

You can now create indexes and populate data into the tables of the source ledger. For instructions, follow the manual option in Create tables, indexes, and sample data. The Lambda function asynchronously replays the corresponding PartiQL statements to replicate the populated data into the destination ledger.

It is important to note that QLDB system metadata doesn't match between two different ledgers. For example, a document copied through the stream from one ledger to another doesn't have a matching document ID. Therefore, any application that references data in the mirrored ledgers must look up documents by user-defined keys or timestamps.
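To illustrate why user-defined keys matter, the following sketch compares rows read from each ledger's committed view (for example, `SELECT * FROM _ql_committed_Vehicle`) and matches them by a user key instead of metadata.id. It's a hedged example: the VIN key, the diffByUserKey helper, and the sample values are hypothetical, and the comparison uses JSON.stringify, which assumes both ledgers store fields in the same order.

```typescript
// A row from a QLDB committed view: user data plus system metadata.
interface CommittedRow {
  metadata: { id: string; version: number; txTime: string };
  data: Record<string, unknown>;
}

// Maps each user key value to a serialized copy of the user data, ignoring metadata.
function indexByUserKey(rows: CommittedRow[], key: string): Map<string, string> {
  const index = new Map<string, string>();
  for (const row of rows) {
    const k = row.data[key];
    if (typeof k !== "string") continue; // skip rows without the key
    index.set(k, JSON.stringify(row.data));
  }
  return index;
}

// Returns user keys whose data is missing from, or different in, the mirror ledger.
function diffByUserKey(source: CommittedRow[], mirror: CommittedRow[], key: string): string[] {
  const src = indexByUserKey(source, key);
  const dst = indexByUserKey(mirror, key);
  const mismatched: string[] = [];
  for (const [k, body] of src) {
    if (dst.get(k) !== body) mismatched.push(k);
  }
  return mismatched;
}
```

Matching on metadata.id would flag every document as missing, because the mirror assigns its own IDs; matching on the user key compares only the data that was actually replicated.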

In addition, after the AWS CDK deployment, the journal change events received from the Amazon QLDB stream are also stored in the S3 bucket by the Kinesis Data Firehose instance.
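When you need those archived journal records, for example to rebuild a ledger, you have to know where Firehose put them. By default, Firehose writes objects under a UTC time prefix in the format YYYY/MM/dd/HH/. The helper below, a hypothetical sketch rather than part of the solution, lists the hourly prefixes covering a time range. Firehose assigns the prefix from its own processing time rather than the QLDB transaction time, so in practice you may want to widen the range by an hour on each side.

```typescript
// Formats a Date as Firehose's default UTC hour prefix: "YYYY/MM/dd/HH/".
function firehoseHourPrefix(t: Date): string {
  const pad = (n: number) => String(n).padStart(2, "0");
  return `${t.getUTCFullYear()}/${pad(t.getUTCMonth() + 1)}/${pad(t.getUTCDate())}/${pad(t.getUTCHours())}/`;
}

// Lists every hourly prefix from the hour containing `start` through `end`, inclusive.
function prefixesBetween(start: Date, end: Date): string[] {
  const out: string[] = [];
  // Truncate the start time to the beginning of its UTC hour.
  const t = new Date(Date.UTC(start.getUTCFullYear(), start.getUTCMonth(), start.getUTCDate(), start.getUTCHours()));
  while (t.getTime() <= end.getTime()) {
    out.push(firehoseHourPrefix(t));
    t.setUTCHours(t.getUTCHours() + 1);
  }
  return out;
}
```

Each prefix can then be passed to an S3 ListObjectsV2 call on the archive bucket.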

Clean up

Run the following commands to clean up the AWS resources provisioned in the previous steps.

Considerations for in-order processing of streams

To make sure the destination ledger has the same data as the source ledger, the PartiQL statements in the journal record stream must be replayed into the destination ledger in exactly the same order. To guarantee the journal record order, the AWS CDK code sets up the Kinesis data stream with a single shard, because Kinesis Data Streams guarantees record order within a shard but not across the shards of a multi-shard stream.

If your source ledger handles a large transaction volume, the Kinesis data stream may throttle writes. To detect this, monitor the data stream metric PutRecords.ThrottledRecords. For more information, see Monitoring the Amazon Kinesis Data Streams Service with Amazon CloudWatch. If throttling occurs, you need to increase the number of shards, which means the PartiQL statements received from the data stream might no longer arrive in the same sequence in which they ran on the source ledger.
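A rough way to size the stream is to compare peak write load against the per-shard write limits of Kinesis Data Streams (1 MiB per second and 1,000 records per second). The sketch below does only that arithmetic; the peak figures are inputs you would measure yourself, and it ignores QLDB record aggregation, which packs several QLDB records into one Kinesis record and lowers the effective record rate.

```typescript
// Per-shard write limits of Kinesis Data Streams.
const SHARD_WRITE_BYTES_PER_SEC = 1024 * 1024; // 1 MiB/s
const SHARD_WRITE_RECORDS_PER_SEC = 1000;

// Smallest shard count that satisfies both limits (never less than 1).
function minShards(peakBytesPerSec: number, peakRecordsPerSec: number): number {
  const byBytes = Math.ceil(peakBytesPerSec / SHARD_WRITE_BYTES_PER_SEC);
  const byRecords = Math.ceil(peakRecordsPerSec / SHARD_WRITE_RECORDS_PER_SEC);
  return Math.max(1, byBytes, byRecords);
}
```

Any result above 1 means the single-shard ordering guarantee no longer holds, and the replay logic must reorder records itself.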

Also, even if Kinesis Data Streams guarantees the order, the Amazon QLDB stream itself can publish duplicate and out-of-order records to the Kinesis data stream. For more information, see Handling duplicate and out-of-order records.
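One common way to drop duplicates is to key each BLOCK_SUMMARY record by its block address, because every journal block has a unique strand ID and sequence number. The following is a minimal sketch under that assumption: the in-memory Set lives only as long as one Lambda execution environment, so a production version would need durable state (for example, a conditional write to a table), and sorting by sequence number only reorders records within one batch.

```typescript
interface BlockAddress { strandId: string; sequenceNo: number }
interface BlockSummary { blockAddress: BlockAddress; transactionId: string }

class BlockDeduplicator {
  private readonly seen = new Set<string>();

  // True the first time a block address is seen, false for a duplicate.
  accept(block: BlockSummary): boolean {
    const key = `${block.blockAddress.strandId}:${block.blockAddress.sequenceNo}`;
    if (this.seen.has(key)) return false;
    this.seen.add(key);
    return true;
  }
}

// Drops duplicates from a batch and sorts the rest by journal sequence number.
function orderBatch(blocks: BlockSummary[], dedup = new BlockDeduplicator()): BlockSummary[] {
  return blocks
    .filter((b) => dedup.accept(b)) // filter returns a new array, so sort doesn't mutate the input
    .sort((a, b) => a.blockAddress.sequenceNo - b.blockAddress.sequenceNo);
}
```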

In either case, we need to consider an approach to deal with this situation. We present one possible option in this post.

First, change the logic of the Lambda function replayQldbPartiQL in this post so that after it receives the BLOCK_SUMMARY records and extracts the PartiQL statements, instead of running those statements against the destination ledger straightaway, it feeds the PartiQL statements and their corresponding startTime into a settling buffer, such as an Amazon Elasticsearch Service (Amazon ES) index.

For the schema details of BLOCK_SUMMARY records, see Block summary records.

Next, set up another Lambda function. From the settling buffer, this function picks up the earliest PartiQL statement from the ones whose startTime is older than a certain settle time window (for example, older than 2 minutes), and replays the PartiQL statement against the destination ledger. It iterates the same logic over the rest of the PartiQL statements in the settling buffer.
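The settling-buffer approach can be sketched as follows. This is a hedged illustration, not the post's implementation: an in-memory Map stands in for the Amazon ES index, and the BufferedStatement fields and the transactionId#index key are assumptions. put is what replayQldbPartiQL would call instead of running a statement; drainSettled is what the second Lambda function would call on each run before replaying the returned statements in order.

```typescript
interface BufferedStatement {
  transactionId: string;
  index: number;     // position of the statement within its transaction
  statement: string; // PartiQL text from the BLOCK_SUMMARY record
  startTime: string; // ISO-8601 startTime from the same record
}

// In-memory stand-in for the settling buffer.
class SettlingBuffer {
  private readonly pending = new Map<string, BufferedStatement>();

  private static key(s: BufferedStatement): string {
    return `${s.transactionId}#${s.index}`;
  }

  // Producer side: store the statement; a duplicate delivery overwrites the same key.
  put(s: BufferedStatement): void {
    this.pending.set(SettlingBuffer.key(s), s);
  }

  // Consumer side: remove and return statements older than the window, earliest first.
  drainSettled(now: Date, windowMs: number): BufferedStatement[] {
    const cutoff = now.getTime() - windowMs;
    const settled = [...this.pending.values()]
      .filter((s) => Date.parse(s.startTime) < cutoff)
      .sort((a, b) => Date.parse(a.startTime) - Date.parse(b.startTime) || a.index - b.index);
    for (const s of settled) this.pending.delete(SettlingBuffer.key(s));
    return settled;
  }
}
```

The window trades latency for safety: a statement is replayed only after records that were published late have had time to arrive. A duplicate that shows up after its original was drained would be replayed again, so this sketch still needs the block-level deduplication described earlier.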

Conclusion

This post demonstrated how to use Amazon QLDB streaming to provide extra data resiliency and mitigate the current limitation that Amazon QLDB doesn't support standard database backup and restore.

We showed you how to set up the ledger-to-ledger solution with the AWS CDK, including provisioning two Amazon QLDB ledgers and setting up a Kinesis data stream instance to stream out journal records from the source ledger and replay them into another ledger to provide near-real-time data redundancy. In addition, the AWS CDK stacks provisioned a Kinesis Data Firehose delivery stream instance that replicates the journal records streamed out from the source ledger to an S3 bucket, which provides another level of data resiliency.

If you find the solution in this post useful for your use case, try it out in your deployment. If you have any questions or suggestions, leave a comment—we’d love to hear your feedback!

About the authors

Deenadayaalan Thirugnanasambandam is a Solution Architect at AWS. He provides prescriptive architectural guidance and consulting that enable and accelerate customers' adoption of AWS.

Jasper Wang is a Cloud Architect at AWS. He loves to help customers enjoy the cloud journey. When not at work, he cooks a lot as a father of two daughters and has fun with bush walks over the weekends.
